You can download the new machine learning cheat sheet here (PDF format, 14 pages).

Originally published in 2014 and viewed more than 200,000 times, this is the oldest data science cheat sheet: the mother of the numerous cheat sheets that are so popular nowadays. I decided to update it in June 2019. The first half, covering installing components on your laptop, learning UNIX, regular expressions, and file management, hasn't changed much, but the second half, dealing with machine learning, was rewritten entirely from scratch. It is amazing how much things have changed in just five years!

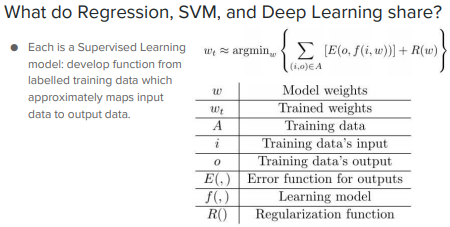

Source for picture: see here (original) or here (PDF)

Written for people who have never seen a computer in their life, the cheat sheet starts at the very beginning: buying a laptop! If you are already familiar with UNIX, you can skip the first half and jump to sections 5 and 6. This new cheat sheet will be included in my upcoming book Machine Learning: Foundations, Toolbox, and Recipes, to be published in September 2019 and available for free, exclusively to Data Science Central members.

Contents

1. Hardware

2. Linux environment on a Windows laptop

3. Basic UNIX commands

4. Scripting languages

5. Python, R, Hadoop, SQL, DataViz

6. Machine Learning

- Algorithms

- Getting started

- Applications

- Data sets and sample projects

To make sure you don't miss this type of content in the future, subscribe to our newsletter. For related articles from the same author, click here or visit www.VincentGranville.com. Follow me on LinkedIn, or visit my old web page here.