Summary: The shortage of data scientists is driving a growing number of developers to fully Automated Predictive Analytic platforms. Some of these offer true One-Click Data-In-Model-Out capability, playing to Citizen Data Scientists with limited or no data science expertise. Who are these players and what does it mean for the profession of data science?

In a recent poll the question was raised “Will Data Scientists be replaced by software, and if so, when?” The consensus answer:

Data Scientists automated and unemployed by 2025.

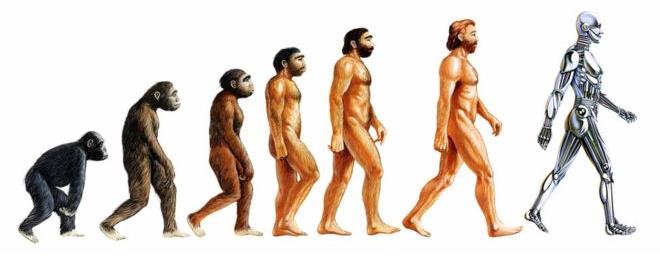

Are we really just grist for the AI mill? Will robots replace us?

As part of the broader digital technology revolution, we data scientists regard ourselves as part of the solution, not part of the problem. But in a fast-moving industry built on identifying and removing pain points, it’s possible to see that we are actually part of the problem.

Seen as a good news / bad news story it goes like this. The good news is that advanced predictive analytics are gaining acceptance and penetration at an ever-expanding rate. The bad news is that there are not enough well-trained data scientists to go around, meaning we’re hard to find and expensive once you find us. That’s the pain.

A fair number of advanced analytic platform developers see this too and the result is a rising number of Automated Predictive Analytic platforms that actually offer One-Click Data-In-Model-Out.

While close-in trends are easy to see, those that may fundamentally remake our professional environment over the next three to five years can be a little more difficult to spot. I think Automated Predictive Analytics is one of those.

This topic is too broad for one article so I’ll devote this blog to illustrating what these platforms claim they can do and give you a short list of participants you can check out for yourself. You may have thought that the broader issue is whether or not predictive analytics can actually be automated. It’s not. When you examine these companies you’ll see that boat has sailed.

The broader issues for future discussion are:

- Is this a good or bad thing,

- How can we integrate it into the reality of the practice of our day-to-day data science lives, and

- How will this impact our profession over the next three to five years?

What Exactly Is Automated Predictive Analytics?

Automated Predictive Analytics are services that allow a data owner to upload data and rapidly build predictive or descriptive models with a minimum of data science knowledge.

Some will say that this is the benign automation of our overly complex toolkit, simplifying tasks like data cleaning and transformation that don’t require much creativity, or the simultaneous parallel generation of multiple ML models to rapidly arrive at a champion model. This would be akin to the evolution from the hand saw to the power saw to the CNC cutting machine. These are enhancements that make data scientists more productive so that’s a good thing.

However, ever since Gartner seized on the term Citizen Data Scientist and projected that this group would grow 5X more quickly than data scientists, analytic platform developers have seen this group, which possesses only a minimum of data science knowledge, as a key market for expansion.

Whether this is good or bad I’ll leave for later. For right now we need to acknowledge that the direction of development is toward systems so simplified that only a minimum expertise with data science is required.

A Little History

The history of trying to automate our tool kit is actually quite long. In his excellent 2014 blog, Thomas Dinsmore traces about a dozen of these events all the way back to UNICA in 1995. Their Pattern Recognition Workbench used automated trial and error to optimize a predictive model.

He tracks the history through MarketSwitch in the late 1990s (You Can Fire All Your SAS Programmers), to KXEN (later purchased by SAP), through efforts by SAS and SPSS (now IBM), and ultimately to the open source MLBase project and the ML Optimizer, an effort by a consortium of UC Berkeley and Brown University to create a scalable ML platform on Spark. All of these in one form or another took on the automation of either data prep or model tuning and selection or both.

What characterized this period ending just a few years ago is that all of these efforts were primarily aimed at simplifying and making efficient the work of the data scientist.

As far back as about 2011 though, and with many more entrants since 2014, there has been a cadre of platform developers who seek One-Click Data-In-Model-Out simplicity for the non-data scientist.

Sorting Out the Market

As you might expect there is a continuum of strategies and capabilities present in these companies. These range from highly simplified UIs that still require the user to go through the steps of cleaning, discovery, transformation, model creation, and model selection all the way through to true One-Click Data-In-Model-Out.

On the highly simplified end of the scale are companies like BigML (www.BigML.com) targeting non-data scientists. BigML leads the user through the classical steps in preparing data and building models using a very simplified graphical UI. There’s a free developer mode and very inexpensive per-model pricing.

Similarly, Logical Glue (www.logicalglue.com) also targets non-data scientists using the theme ‘Data Science is not Rocket Science’. Like BigML it still requires the user to execute five simplified data modeling steps using a graphical UI.

But to keep our attention on the true One-Click Data-In-Model-Out platforms, we’ll look at these five:

(This is not intended to be an exhaustive list but drawn from platforms I’ve looked at over the last few months.)

- PurePredictive (www.PurePredictive.com)

- DataRPM (www.DataRPM.com)

- DataRobot (www.DataRobot.com)

- Xpanse Analytics (www.xpanseanalytics.com)

- ForecastThis (www.forecastthis.com)

Essentially all of these are cloud based, though a few can also be implemented on-prem or even on a workstation.

To be included in this list means that each of the common data science steps is fully automated even if there is an expert override. It may be that it takes several ‘clicks’ to get through the process, not just one. PurePredictive for example is very close to one-click while DataRPM is closer to five. One key differentiator is that they must select the appropriate ML algorithms from a fairly large library and run them simultaneously, including working the tuning parameters and deriving ensembles. Beyond this, their strategies and capabilities reflect different go-to-market strategies.
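The common core described above, fitting many candidate models side by side and keeping the best, can be sketched in a few lines. This is a toy illustration only, not any vendor’s implementation: the candidate models, the `select_champion` helper, and the data are all hypothetical, and threads merely stand in for the fan-out a real platform would spread across many machines.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def fit_mean(train, _param):
    """Baseline: always predict the training mean."""
    avg = mean(y for _, y in train)
    return lambda x: avg


def fit_linear(train, _param):
    """Ordinary least squares on a single feature."""
    xs = [x for x, _ in train]
    ys = [y for _, y in train]
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in train) / sxx
    intercept = y_bar - slope * x_bar
    return lambda x: intercept + slope * x


def fit_knn(train, k):
    """k-nearest neighbours: average the targets of the k closest points."""
    def predict(x):
        nearest = sorted(train, key=lambda row: abs(row[0] - x))[:k]
        return mean(y for _, y in nearest)
    return predict


def holdout_mse(candidate, train, holdout):
    """Fit one candidate and return (holdout MSE, name)."""
    name, fit, param = candidate
    model = fit(train, param)
    mse = mean((model(x) - y) ** 2 for x, y in holdout)
    return mse, name


def select_champion(candidates, train, holdout):
    """Score every candidate concurrently; the lowest holdout error wins."""
    with ThreadPoolExecutor() as pool:
        scores = list(pool.map(lambda c: holdout_mse(c, train, holdout), candidates))
    return min(scores)


# Toy data (hypothetical): y = 3x + 2 plus a small deterministic wiggle.
data = [(float(x), 3.0 * x + 2.0 + (x % 3 - 1) * 0.5) for x in range(20)]
train, holdout = data[::2], data[1::2]

# One "algorithm" with several tuning values counts as several candidates.
candidates = [("mean", fit_mean, None), ("linear", fit_linear, None)]
candidates += [(f"knn_k{k}", fit_knn, k) for k in (1, 3, 5)]

champion_mse, champion_name = select_champion(candidates, train, holdout)
print(champion_name, round(champion_mse, 3))
```

Note that the three k-NN entries are the same algorithm with different tuning values. Counting each tuning variation as its own candidate is how a modest library of algorithms can grow into a very large number of models.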

DataRPM and Xpanse Analytics have well developed front end data blending capabilities while the others start with analytic flat files.

PurePredictive and DataRPM make no bones about pitching directly to the non-data scientist while DataRobot and Xpanse Analytics have expert modes trying to appeal to both amateurs and professionals. ForecastThis presents as a platform purely for data scientists.

Claims and Capabilities

As to accuracy, I’ve only personally tested one, PurePredictive, where I ran about a dozen datasets that I had previously scored on other analytic platforms. The results were surprisingly good, with a few coming in slightly more accurate than my previous efforts and a few coming in slightly less so, but with no great discrepancies. Some of these datasets I left intentionally ‘dirty’ to test the data cleansing function. The claim of one-click simplicity, however, was absolutely true and each model completed in only two or three minutes.

Some Detail

PurePredictive (www.PurePredictive.com)

Target: Non-data scientist. One Click MPP system runs over 9,000 ML algorithms in parallel, selecting the champion model automatically. (Note: Their meaning of 9,000 different models is believed to be based on variations in tuning parameters and ensembles using a large number of native ML algorithms.)

- Blending: no, starts with analytic flat file.

- Cleanse: yes

- Impute and Transform: yes

- Select ML Algorithms to be utilized: runs over 9,000 simultaneously including many variations on regression, classification, decision trees, neural nets, SVMs, BDMs, and a large number of ensembles.

- Run Algorithms in Parallel: yes

- Adjust Algorithm Tuning Parameters during model development: yes

- Select and deploy: User selects. Currently only by API.

DataRPM (www.DataRPM.com)

Target: Non-data scientist. One Click MPP system for recommendations and predictions. UI based on ‘recipes’ for different types of DS problems that lead the non-data scientist through the process.

- Blending: yes.

- Cleanse: yes

- Impute and Transform: yes

- Select ML Algorithms to be utilized: runs many but types not specified

- Run Algorithms in Parallel: yes

- Adjust Algorithm Tuning Parameters during model development: yes

- Select and deploy: User selects. Deploy via API.

DataRobot (www.DataRobot.com)

Target: Non-data scientist but with expert override controls for Data Scientists. Theme: ‘Data science in the cloud with a copilot’. Positioned as a high performance machine learning automation software platform and a practical data science education program that work together.

- Blending: no, starts with analytic flat file.

- Cleanse: yes

- Impute and Transform: yes

- Select ML Algorithms to be utilized: Random Forests, Support Vector Machines, Gradient Boosted Trees, Elastic Nets, Extreme Gradient Boosting, ensembles, and many more.

- Run Algorithms in Parallel: yes

- Adjust Algorithm Tuning Parameters during model development: yes

- Select and deploy: User selects. Deploy via API or export code in Python, C, or Java.

Xpanse Analytics (www.xpanseanalytics.com)

Target: Both Data Scientist and non-data scientist. Differentiates based on the ability to automatically generate and test thousands of variables from raw data using a proprietary AI based ‘deep feature’ engine.

- Blending: yes.

- Cleanse: yes

- Impute and Transform: yes

- Select ML Algorithms to be utilized: yes – exact methods included not specified.

- Run Algorithms in Parallel: yes

- Adjust Algorithm Tuning Parameters during model development: yes

- Select and deploy: User selects. Believed to be via API.

ForecastThis, Inc. (www.forecastthis.com)

Target: Data Scientist. The DSX platform is designed to make the data scientist more efficient by automating model building including many advanced algorithms and ensemble strategies. For modeling only, not data prep.

- Blending: no.

- Cleanse: no

- Impute and Transform: no

- Select ML Algorithms to be utilized: A library of deployable algorithms, including Deep Neural Networks, Evolutionary Algorithms, Heterogeneous Ensembles, Natural Language Processing and many proprietary algorithms.

- Run Algorithms in Parallel: yes

- Adjust Algorithm Tuning Parameters during model development: yes

- Select and deploy: User selects. R, Python and Matlab plus API.

Since ForecastThis is a modeling platform only, with no prep or discovery capabilities, it’s worth mentioning that there are one-click data prep platforms out there. One that comes with a particularly good pedigree is Wolfram Mathematica. Wolfram makes a real Swiss army knife data science platform, and while the ML capabilities are not one click, they claim to automatically preprocess data, including missing-values imputation, normalization, and feature selection, with built-in machine learning capabilities.
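To make those three prep steps concrete, here is a minimal sketch of what automated imputation, normalization, and feature selection might look like. It is not Mathematica’s API or any platform’s actual method: the function names, the mean-fill rule, the correlation filter, the `min_corr` threshold, and the sample table are all assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def impute_mean(column):
    """Replace None with the mean of the observed values."""
    observed = [v for v in column if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in column]


def zscore(column):
    """Rescale to zero mean and unit variance (constant columns become zeros)."""
    mu, sigma = mean(column), pstdev(column)
    return [0.0 if sigma == 0 else (v - mu) / sigma for v in column]


def abs_correlation(xs, ys):
    """Absolute Pearson correlation; 0.0 if either side has no variance."""
    sx, sy = pstdev(xs), pstdev(ys)
    if sx == 0 or sy == 0:
        return 0.0
    mx, my = mean(xs), mean(ys)
    cov = mean((x - mx) * (y - my) for x, y in zip(xs, ys))
    return abs(cov / (sx * sy))


def auto_prepare(features, target, min_corr=0.3):
    """Impute, normalize, then keep only features correlated with the target."""
    prepared = {}
    for name, column in features.items():
        cleaned = zscore(impute_mean(column))
        if abs_correlation(cleaned, target) >= min_corr:
            prepared[name] = cleaned
    return prepared


# Hypothetical raw table with gaps (None) and one uninformative column.
raw = {
    "tenure_months": [1, 5, None, 12, 20, 24],
    "monthly_spend": [10.0, 12.0, 15.0, None, 30.0, 33.0],
    "constant_flag": [1, 1, 1, 1, 1, 1],
}
churn_score = [0.9, 0.8, 0.6, 0.5, 0.2, 0.1]

prepared = auto_prepare(raw, churn_score)
print(sorted(prepared))
```

The constant column carries no signal, so the correlation filter drops it, while the two columns with gaps are filled and rescaled before being kept. A real platform would choose among many more imputation and selection strategies; the point here is only that each step can run without the user making a decision.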

Next time, more about whether you should be comfortable adopting any of these and what the implications might be for the profession of data science.

Additional articles on Automated Machine Learning

Next Generation Automated Machine Learning (AML) (April 2018)

More on Fully Automated Machine Learning (August 2017)

Automated Machine Learning for Professionals (July 2017)

Data Scientists Automated and Unemployed by 2025 – Update! (July 2017)

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist and commercial predictive modeler since 2001. He can be reached at: