Summary: A year ago we wrote about the emergence of fully automated predictive analytics platforms, including some with true One-Click Data-In-Model-Out capability. We revisited last year’s five contenders, added one newcomer, and found that the automation movement continues to advance. We also observed that some of last year’s players have since gone in different directions.

Just about a year ago we wrote the original article that this piece updates. Although it was only a year ago, it seems much longer since several newly emerged analytic platform vendors began touting fully automated machine learning platforms, the most extreme and interesting of which were literally One-Click Data-In-Model-Out.

With advances in AI and cloud-based massively parallel processing, it was apparent that these folks were out to dramatically simplify the process of building deployment-ready models.

Why? Well, certainly one driver remains the structural shortage of well-trained and affordable data scientists. Hiring is still a problem, so trying to do more with fewer DS resources is a reasonable objective. By the way, this applies not only to personnel costs but also to platform costs if you’ve centralized around one of the major platforms like SAS or SPSS. So the premise is that a smaller group of trained data scientists can use these tools to produce the same number and quality of models that, in the past, took a much larger group much longer to build.

The second driver is the ongoing push by some analytic platform vendors to ‘democratize’ predictive analytics, by which they mean making it possible for the citizen data scientist (aka less-trained or untrained amateurs) to build some of these models directly. There are simply far more analysts and citizen data scientists than fully trained data scientists, and if you want to sell platforms, you’d really like to tap this much larger market. Gartner says this segment will grow 5X more rapidly than the professional data scientist market.

So to accomplish either or both of these objectives you have to:

- Make the process of model building extremely simple (which we know it is not).

- Give reasonably good results very quickly.

- Be very affordable.

If these vendors succeed, this may well reduce demand for fully qualified data scientists, which makes it reasonable to ask:

Will We Be Automated and Unemployed by 2025?

Automated Predictive Analytics is Here to Stay

Automated predictive analytics covers a spectrum of features and capabilities ranging from the true One-Click platforms to those that seek to simplify the process but still need the user to go through several steps (where they may be directed or coached at each step).

Also, these vendors all seem to be carefully treading the middle ground between a very simplified appeal to amateurs (excuse me, Citizen Data Scientists) and their current professional DS market, so as not to alienate their current base or oversimplify to the point of an uncontrolled black box.

The core of all these offerings is running lots of different modeling algorithms in parallel and then pointing out the winner. This isn’t new. SAS and SPSS have had this capability for several years. In their versions, the user simply pulls as many model algorithms as they want to try onto the graphical interface, hooks them to the data source, and runs them in parallel.
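To make the bake-off idea concrete, here is a minimal sketch that runs several candidate algorithms side by side on the same train/test split and points out the winner. It uses plain Python with the standard library only. The toy data set, the three simple classifiers, and names like `bake_off` are our own hypothetical illustrations. They do not show how SAS, SPSS, or any One-Click vendor actually implements this.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

# Hypothetical toy data: an 11x11 grid of points, labelled 1 where x/10 + y/10 > 1.
DATA = [((x / 10, y / 10), int(x + y > 10)) for x in range(11) for y in range(11)]
TRAIN, TEST = DATA[0::2], DATA[1::2]


def sq_dist(a, b):
    """Squared Euclidean distance between two feature tuples."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def fit_majority(train):
    """Baseline: always predict the most common training label."""
    labels = [label for _, label in train]
    top = max(set(labels), key=labels.count)
    return lambda features: top


def fit_nearest_centroid(train):
    """Predict the class whose mean feature vector is closest."""
    centroids = {}
    for cls in {label for _, label in train}:
        rows = [features for features, label in train if label == cls]
        centroids[cls] = [mean(col) for col in zip(*rows)]
    return lambda features: min(centroids, key=lambda c: sq_dist(features, centroids[c]))


def fit_knn(train, k=3):
    """k-nearest neighbours with a simple majority vote."""
    def predict(features):
        nearest = sorted(train, key=lambda row: sq_dist(features, row[0]))[:k]
        votes = [label for _, label in nearest]
        return max(set(votes), key=votes.count)
    return predict


CANDIDATES = {"majority": fit_majority, "nearest_centroid": fit_nearest_centroid, "knn": fit_knn}


def fit_and_score(name, fit, train, test):
    """Fit one candidate and return its hold-out accuracy."""
    predict = fit(train)
    return name, sum(predict(f) == label for f, label in test) / len(test)


def bake_off(train, test, candidates=CANDIDATES):
    """Fit and score every candidate concurrently, then name the winner."""
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(fit_and_score, name, fit, train, test)
                   for name, fit in candidates.items()]
        scores = dict(future.result() for future in futures)
    return max(scores, key=scores.get), scores


winner, scores = bake_off(TRAIN, TEST)
print(winner, scores)
```

Threads keep the sketch short. Because of CPython’s global interpreter lock, they mostly interleave rather than truly run in parallel for CPU-bound work like this. A real platform would spread the work across processes or machines.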

The first principal difference is that these One-Clicks select the algorithms to be run. Actually, it’s more like picking them all except for the ones that absolutely don’t make sense. Then they claim to automatically tune the hyperparameters based on your data set.
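The selection step can be as simple as starting from the full catalog and striking out the algorithms that clearly don’t fit the problem. The sketch below also records why each algorithm was dropped, so the choice stays inspectable. It is a minimal sketch that assumes a hypothetical catalog and made-up row-count thresholds. None of this reflects any vendor’s actual rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Algorithm:
    name: str
    tasks: frozenset                # task types the algorithm supports
    min_rows: int = 0               # below this, too little data to train it well
    max_rows: Optional[int] = None  # above this, too slow to be worth trying


# Hypothetical catalog; the thresholds are illustrative, not any vendor's rules.
BOTH = frozenset({"classification", "regression"})
CATALOG = [
    Algorithm("logistic_regression", frozenset({"classification"})),
    Algorithm("linear_regression", frozenset({"regression"})),
    Algorithm("random_forest", BOTH),
    Algorithm("gradient_boosted_trees", BOTH),
    Algorithm("kernel_svm", BOTH, max_rows=100_000),
    Algorithm("neural_net", BOTH, min_rows=10_000),
]


def select_algorithms(task, n_rows, catalog=CATALOG):
    """Keep every algorithm except those that clearly don't fit; record why each was dropped."""
    selected, excluded = [], {}
    for algo in catalog:
        if task not in algo.tasks:
            excluded[algo.name] = f"does not support {task}"
        elif n_rows < algo.min_rows:
            excluded[algo.name] = f"needs at least {algo.min_rows} rows"
        elif algo.max_rows is not None and n_rows > algo.max_rows:
            excluded[algo.name] = f"impractical above {algo.max_rows} rows"
        else:
            selected.append(algo.name)
    return selected, excluded


selected, excluded = select_algorithms("classification", n_rows=500)
print(selected)  # ['logistic_regression', 'random_forest', 'gradient_boosted_trees', 'kernel_svm']
print(excluded)  # {'linear_regression': 'does not support classification', 'neural_net': 'needs at least 10000 rows'}
```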

Hyperparameter tuning is a time-consuming pain requiring expert understanding. If these platforms can do this successfully, that may truly be a benefit, but only if two things are true:

- The results have to be satisfactorily accurate.

- They have to be transparent and show what adjustments they’ve made. The best ones will let data scientists make their own tuning adjustments to see if they can do better.
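Both requirements, accurate tuning and transparency, can be illustrated with a plain grid search. The search keeps a log of every trial and accepts a user-supplied grid as an override. This is a minimal sketch under stated assumptions: the toy data, the k-nearest-neighbours regressor, and the `tune` helper are all hypothetical. Commercial platforms may well use smarter search strategies than an exhaustive grid.

```python
import math
from itertools import product
from statistics import mean

# Hypothetical toy regression data: a smooth curve sampled at 60 points.
DATA = [(i / 60, math.sin(6 * i / 60)) for i in range(60)]


def knn_predict(train, x, k):
    """k-NN regression: average the targets of the k closest training points."""
    nearest = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    return mean(y for _, y in nearest)


def cv_error(data, params, folds=5):
    """Mean squared error under k-fold cross-validation (every folds-th row held out)."""
    errors = []
    for f in range(folds):
        held_out = data[f::folds]
        train = [row for i, row in enumerate(data) if i % folds != f]
        errors += [(knn_predict(train, x, params["k"]) - y) ** 2 for x, y in held_out]
    return mean(errors)


def tune(data, grid):
    """Grid search: score every combination and keep a full, inspectable trial log."""
    keys = sorted(grid)
    log = []
    for values in product(*(grid[key] for key in keys)):
        params = dict(zip(keys, values))
        log.append((params, cv_error(data, params)))
    best_params, best_error = min(log, key=lambda trial: trial[1])
    return best_params, best_error, log


DEFAULT_GRID = {"k": [1, 3, 5, 9, 15]}
best, err, log = tune(DATA, DEFAULT_GRID)
for params, error in log:  # transparency: show every trial, not just the winner
    print(params, round(error, 4))

# Override: a data scientist supplies their own grid to try to beat the default.
my_best, my_err, _ = tune(DATA, {"k": [2, 4, 6]})
print("default:", best, "override:", my_best)
```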

The Middle Ground – Simplified Multi-Step

Simplifying and automating the creation of predictive models falls along a continuum. It ranges from one-at-a-time custom R code, through large-scale simplified professional platforms like SAS and SPSS, through a middle ground, and finally to the One-Clicks.

I rather like this simplified multi-step middle ground, even though it’s not the focus of this article. It gives me some comfort that less-trained folks must still go through and think about each of the primary steps:

- Blending

- Explore

- Cleaning

- Impute and transform

- Feature engineering and selection

- Model

- Evaluate

- Deploy
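Here is a rough illustration of how those steps stay visible to the modeler, and to whoever is reviewing their work. This minimal sketch implements each step as its own small, inspectable function. The data, the column names, and the one-feature least-squares model are hypothetical, chosen only to keep the example short. Evaluation is done in-sample purely for brevity, which you would not do in practice.

```python
from statistics import mean, median

# Hypothetical raw data from two sources, keyed by customer id.
PROFILES = {1: {"income": 50.0}, 2: {"income": None}, 3: {"income": 80.0},
            4: {"income": 65.0}, 5: {"income": -1.0}}
ACTIVITY = {1: {"visits": 10, "spend": 52.0}, 2: {"visits": 4, "spend": 30.0},
            3: {"visits": 15, "spend": 83.0}, 4: {"visits": 12, "spend": 66.0},
            5: {"visits": 8, "spend": 45.0}}
TARGET = "spend"


def blend(a, b):
    """Blending: join two sources on shared ids into one flat analytic table."""
    return [{"id": k, **a[k], **b[k]} for k in sorted(a.keys() & b.keys())]


def explore(rows):
    """Explore: count missing values per column."""
    return {col: sum(r[col] is None for r in rows) for col in rows[0]}


def clean(rows):
    """Cleaning: treat impossible values (negative income) as missing."""
    def fix(v):
        return None if v is not None and v < 0 else v
    return [{**r, "income": fix(r["income"])} for r in rows]


def impute(rows):
    """Impute: fill missing income with the median of the observed values."""
    fill = median(r["income"] for r in rows if r["income"] is not None)
    return [{**r, "income": fill if r["income"] is None else r["income"]} for r in rows]


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x."""
    mx, my = mean(xs), mean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b


def select_and_model(rows, candidates=("income", "visits")):
    """Feature selection + model: keep the single feature whose fitted line has the lowest squared error."""
    best = None
    for feat in candidates:
        xs, ys = [r[feat] for r in rows], [r[TARGET] for r in rows]
        a, b = fit_line(xs, ys)
        sse = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
        if best is None or sse < best[0]:
            best = (sse, feat, a, b)
    return best[1:]


def evaluate(rows, feat, a, b):
    """Evaluate: mean absolute error (in-sample, only to keep the sketch short)."""
    return mean(abs(r[TARGET] - (a + b * r[feat])) for r in rows)


def deploy(feat, a, b):
    """Deploy: package the fitted model as a scoring function."""
    return lambda row: a + b * row[feat]


rows = blend(PROFILES, ACTIVITY)
missing = explore(rows)
print("missing before cleaning:", missing)
rows = impute(clean(rows))
feat, a, b = select_and_model(rows)
mae = evaluate(rows, feat, a, b)
print("feature:", feat, "MAE:", round(mae, 2))
score = deploy(feat, a, b)
print("score for 10 visits:", round(score({"visits": 10}), 2))
```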

It’s also an opportunity for more highly trained folks to mentor, review, train, and perhaps most important, monitor what these less experienced modelers are doing.

Last year we called out two platforms as examples of this genre, and both have survived into the current year.

BigML (www.BigML.com) appears to be targeting primarily non-data scientists. BigML leads the user through the classical steps of preparing data and building models using a very simplified graphical UI. Their roots are in decision trees, but in the last year they’ve added significant new algorithms. There’s a free developer mode and very inexpensive per-model pricing.

Logical Glue (www.logicalglue.com) also appears to be targeting non-data scientists. A year ago their tagline was ‘Data Science is not Rocket Science’. That’s now gone in favor of the more sober-sounding ‘Effortless Intelligence’. They now appear to be strongly focused on the financial markets. In particular, they focus on the sort of models that lend themselves to transparency and explainability in that highly regulated market.

One-Click Data-In-Model-Out Platforms

This is not an easy market in which to find all the players, so we’ll focus primarily on those we identified last year plus one new entrant we’ve become aware of. If you know of others, please list them in the comments. I’d like to keep track of them.

We aren’t trying to be as rigorous as Gartner or Forrester in defining who’s in and who’s out of this segment. If you’re interested in these providers, you’ll need to do some significant digging on your own.

Last year we reviewed five platforms. This year two from last year have dropped out, and one new candidate has been added.

The Drop Outs

ForecastThis, Inc. (www.forecastthis.com)

A year ago ForecastThis was a data scientist-focused DSX platform for running parallel algorithms, with no blending, cleaning, or impute/transform capability. During the last year they’ve reinvented themselves as a very interesting DSX platform for time series forecasting based on deep learning and optimization. They’re now focused on investment managers and quantitative analysts, and they no longer make any One-Click claims.

DataRPM (www.DataRPM.com)

Last year DataRPM was already a fully functional One-Click system targeting non-data scientists. In April they were acquired by Progress Software Corp. as part of Progress’s new portfolio aimed at the industrial internet of things (IIoT). DataRPM has been made over into a platform for predictive maintenance, which seems a good fit. However, since they no longer offer a general-purpose platform, they drop off our list, though we wish them well.

This Year’s Four One-Click Data-In-Model-Out Contenders

(In alphabetic order)

DataRobot (www.DataRobot.com)

Target: Both non-data scientists and data scientists, with expert override controls for data scientists. Positioned as a high-performance machine learning automation platform, with a good program of educational offerings to bring the less experienced up to speed.

- Blending: no, starts with analytic flat file.

- Cleanse: yes

- Impute and Transform: yes

- Feature Selection: yes

- Select ML Algorithms to be utilized: Random Forests, Support Vector Machines, Gradient Boosted Trees, Elastic Nets, Extreme Gradient Boosting, ensembles, and many more.

- Run Algorithms in Parallel: yes

- Adjust Algorithm Hyperparameters during model development: yes

- Select and deploy: User selects. Deploy via API or export code in Python, C, or Java.
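Exporting code means the fitted model leaves the platform as plain source that can be dropped into another application. Below is a minimal sketch of the idea for a linear model, emitting Python only. The weights and the `export_linear_model` helper are hypothetical, and this is not DataRobot’s exporter. C or Java output would follow the same template approach.

```python
def export_linear_model(weights, intercept, func_name="score"):
    """Render a fitted linear model as a standalone Python function, returned as source text."""
    lines = [f"def {func_name}(row):", f"    total = {intercept!r}"]
    for feature, weight in sorted(weights.items()):
        lines.append(f"    total += {weight!r} * row[{feature!r}]")
    lines.append("    return total")
    return "\n".join(lines) + "\n"


# Hypothetical fitted model: these weights are made up for illustration.
source = export_linear_model({"income": 0.4, "debt": -1.2}, intercept=3.0)
print(source)

# The exported text runs anywhere Python does, with no modelling library needed.
namespace = {}
exec(source, namespace)
print(namespace["score"]({"income": 10.0, "debt": 2.0}))
```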

MLJAR (www.mljar.com)

Target: Appears to be both data scientists and non-data scientists. New this year, MLJAR is still in beta but is up and running as a fully functional One-Click platform, with a few features yet to be introduced.

- Blending: no, analytic flat file in.

- Cleanse: not yet

- Impute and Transform: in development

- Feature Select: yes

- Select ML Algorithms to be utilized: yes

- Run Algorithms in Parallel: yes

- Adjust Algorithm Tuning Parameters during model development: yes

- Select and deploy: User selects. Cloud API or on-premises.

PurePredictive (www.PurePredictive.com)

Target: Non-data scientists. One-Click MPP system that runs over 9,000 ML algorithms in parallel, selecting the champion model automatically. (Note: Their count of 9,000 different models is believed to be based on variations in tuning parameters and ensembles built from a large number of native ML algorithms.) I participated in their beta test a year ago. Using models I had built in the past, I got some results that were better than mine and some that were marginally lower. Easy to use.

- Blending: no, starts with analytic flat file.

- Cleanse: yes

- Impute and Transform: yes

- Feature Selection: yes

- Select ML Algorithms to be utilized: runs over 9,000 simultaneously, including many variations on regression, classification, decision trees, neural nets, SVMs, BDMs, and a large number of ensembles.

- Run Algorithms in Parallel: yes

- Adjust Algorithm Tuning Parameters during model development: yes

- Select and deploy: User selects. Currently only by API.
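How does a count like 9,000 arise? Hyperparameter grids multiply quickly, and ensembles add combinations on top. The sketch below does the arithmetic for a hypothetical search space of three algorithms. It adds ensembles of up to three members drawn from the 20 best single models. We don’t know PurePredictive’s actual breakdown, so these numbers are illustrative only.

```python
from math import comb, prod

# Hypothetical search space: the algorithms and value lists are illustrative only.
SEARCH_SPACE = {
    "random_forest": {"n_trees": [100, 300, 500], "max_depth": [4, 8, 16, None],
                      "max_features": [0.3, 0.6, 1.0]},
    "gradient_boosting": {"learning_rate": [0.01, 0.05, 0.1, 0.3],
                          "n_trees": [100, 300, 1000], "max_depth": [2, 4, 6]},
    "elastic_net": {"alpha": [0.001, 0.01, 0.1, 1.0, 10.0],
                    "l1_ratio": [0.0, 0.25, 0.5, 0.75, 1.0]},
}


def count_configs(space):
    """Full-grid size per algorithm: the product of the number of values per hyperparameter."""
    return {algo: prod(len(values) for values in grid.values())
            for algo, grid in space.items()}


def count_ensembles(pool_size, max_members):
    """Ensembles of 2..max_members models chosen from the pool_size best single models."""
    return sum(comb(pool_size, size) for size in range(2, max_members + 1))


configs = count_configs(SEARCH_SPACE)
print(configs)                 # {'random_forest': 36, 'gradient_boosting': 36, 'elastic_net': 25}
print(sum(configs.values()))   # 97
print(count_ensembles(20, 3))  # 1330 (190 pairs + 1140 triples)
```

Three algorithms already yield 97 single-model configurations and 1,330 candidate ensembles. With a few dozen native algorithms, a total in the thousands is easy to reach.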

Xpanse Analytics (www.xpanseanalytics.com)

Target: Both data scientists and non-data scientists. Differentiates based on the ability to automatically generate and test thousands of variables from raw data using a proprietary AI-based ‘deep feature’ engine.

- Blending: yes.

- Cleanse: yes

- Impute and Transform: yes

- Feature Select: yes – claims AI-based.

- Select ML Algorithms to be utilized: yes – exact methods included not specified.

- Run Algorithms in Parallel: yes

- Adjust Algorithm Tuning Parameters during model development: yes

- Select and deploy: User selects. Believed to be via API.
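Xpanse’s engine is proprietary, so we can only illustrate the general idea of automated feature generation. The idea is to mechanically derive many candidate variables from raw, multi-row data and then screen them against the target. This is a minimal sketch. It assumes a hypothetical transaction table and simple per-customer aggregates (count, sum, mean, max, min), ranked by absolute correlation with the target.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical raw transactions and outcome labels, for illustration only.
TRANSACTIONS = [
    {"customer": "A", "amount": 120.0, "days_late": 0},
    {"customer": "A", "amount": 80.0, "days_late": 2},
    {"customer": "B", "amount": 300.0, "days_late": 15},
    {"customer": "B", "amount": 250.0, "days_late": 30},
    {"customer": "B", "amount": 50.0, "days_late": 5},
    {"customer": "C", "amount": 40.0, "days_late": 0},
    {"customer": "D", "amount": 500.0, "days_late": 45},
    {"customer": "D", "amount": 20.0, "days_late": 0},
]
DEFAULTED = {"A": 0, "B": 1, "C": 0, "D": 1}
AGGREGATES = {"sum": sum, "mean": mean, "max": max, "min": min}


def synthesize(transactions, numeric_cols):
    """Generate a transaction count plus one feature per (aggregate, column) pair, per customer."""
    groups = defaultdict(list)
    for t in transactions:
        groups[t["customer"]].append(t)
    features = {}
    for customer, rows in groups.items():
        feats = {"n_transactions": float(len(rows))}
        for col in numeric_cols:
            values = [r[col] for r in rows]
            for name, agg in AGGREGATES.items():
                feats[f"{name}_{col}"] = float(agg(values))
        features[customer] = feats
    return features


def pearson(xs, ys):
    """Pearson correlation; 0.0 when either side has no variance."""
    sx, sy = pstdev(xs), pstdev(ys)
    if sx == 0 or sy == 0:
        return 0.0
    mx, my = mean(xs), mean(ys)
    return mean((x - mx) * (y - my) for x, y in zip(xs, ys)) / (sx * sy)


def rank(features, target):
    """Screen candidates by |correlation| with the target, strongest first."""
    customers = sorted(target)
    names = features[customers[0]]
    scores = {n: abs(pearson([features[c][n] for c in customers],
                             [target[c] for c in customers])) for n in names}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


features = synthesize(TRANSACTIONS, ["amount", "days_late"])
ranking = rank(features, DEFAULTED)
for name, score in ranking[:3]:
    print(name, round(score, 3))
```

A real engine would generate far more candidates, such as time windows, ratios, and interactions. It would also validate them on held-out data rather than screening on the full set, which overfits easily with only a handful of rows.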

Extinct by 2025?

I have no doubt that this is the beginning of a valuable phase of automation and simplification for data science tools. It may take the full seven years between now and 2025 to get there, but the genie is out of the bottle. Whether these four companies make it is up to their own talents and the mysteries of the marketplace.

I had some hands-on experience with PurePredictive and hosted a DataScienceCentral webinar for DataRobot. Both look like solid offerings, which takes nothing away from the other two platforms.

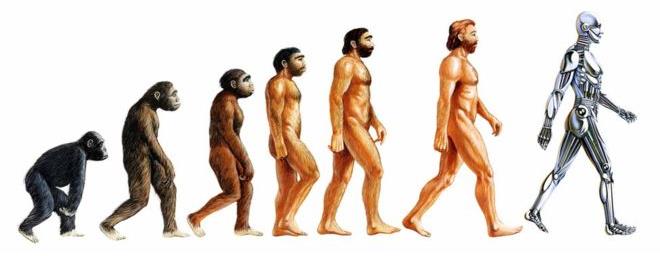

As this picks up momentum, my bet is that you’ll see these features incorporated into the major analytic platforms. The features being automated require a great deal of skill and experience to get right. Data cleaning, transforms, imputation, and hyperparameter tuning are complex but not particularly creative. So, as we observed a year ago, this is akin to the evolution from the hand saw to the power saw to the CNC cutting machine. Efficiency is a good thing.

In my conversation with DataRobot, Greg Michaelson, the Director of their R&D lab, related a story that points to the future of data scientists. Their platform performs a number of sophisticated transforms that take care of most of the feature engineering. But in the development of a loan risk model, Greg pointed out an engineered feature the machine could not have foreseen. Debt-to-income ratio is a common engineered variable in loan risk modeling. However, the model improved significantly when debt-to-income was enhanced by adding the value of the proposed loan to the debt. It’s this sort of domain expertise and creative insight that will keep data scientists employed well into the future.
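Written out, the two features from Greg’s story differ only in the numerator. This is a minimal sketch that assumes debt, income, and the proposed loan amount are expressed in the same units. The function names and the applicant’s figures are hypothetical.

```python
def debt_to_income(debt, income):
    """Standard engineered variable: existing debt relative to income."""
    return debt / income


def debt_to_income_with_loan(debt, income, proposed_loan):
    """Domain-informed variant: count the loan being applied for as debt too."""
    return (debt + proposed_loan) / income


# Hypothetical applicant; all amounts in the same currency and period.
applicant = {"debt": 20_000, "income": 80_000, "proposed_loan": 10_000}
print(debt_to_income(applicant["debt"], applicant["income"]))  # 0.25
print(debt_to_income_with_loan(**applicant))                   # 0.375
```

The enhanced variable combines three columns in a way that reflects how lending actually works. That is exactly the kind of domain insight the story is about.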

Additional articles on Automated Machine Learning

Next Generation Automated Machine Learning (AML) (April 2018)

More on Fully Automated Machine Learning (August 2017)

Automated Machine Learning for Professionals (July 2017)

Data Scientists Automated and Unemployed by 2025! (April 2016)

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at: