We are seeing a wave of AI regulatory announcements from both sides of the Atlantic.

President Biden has his executive order on safe, secure and trustworthy AI, and Prime Minister Rishi Sunak has his AI Safety Summit (which is a closed-door event).

Both of these initiatives are dominated by one specific view: safety, security and risk.

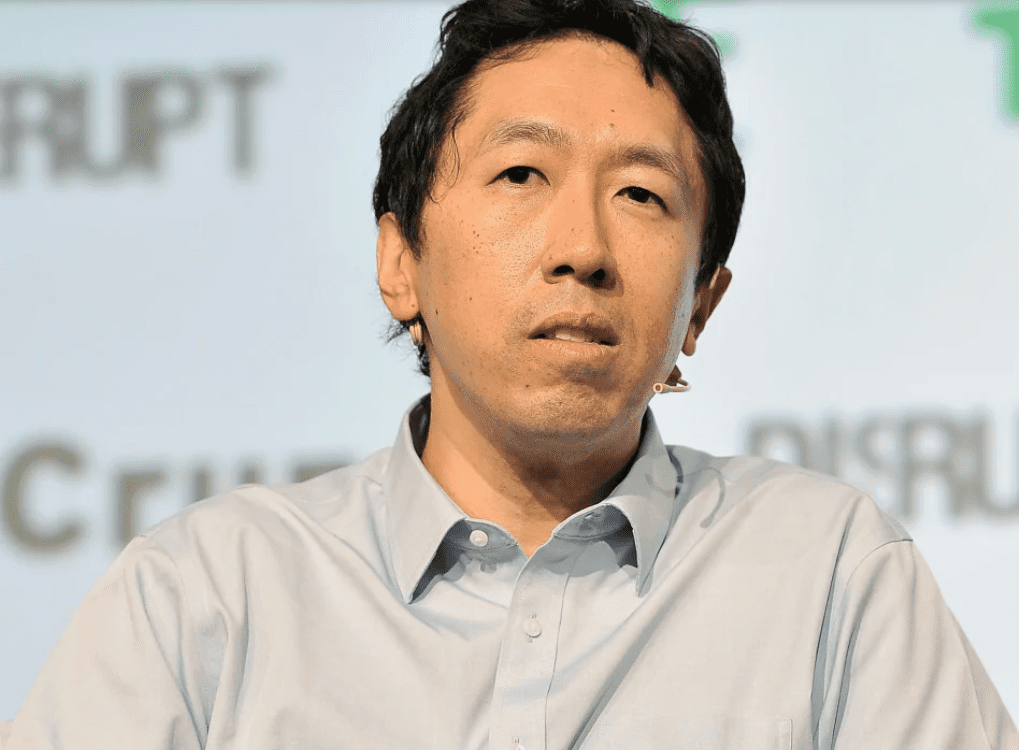

Against this backdrop, Andrew Ng stands out as one of the few voices of reason, saying that big tech is lying about the danger of AI-driven extinction.

I like Andrew Ng's views, and I think we should elaborate on these ideas more.

So much so that I jokingly said, “Andrew Ng for President.”

On a more serious note, this is an important issue and a lot is at stake.

Let's break down the key points from Andrew Ng:

1) The idea that AI could cause the extinction of humanity is a bad idea.

2) Big tech is promoting this idea to bring about heavy regulation that will cripple competition, especially from open source.

3) Combining the existential-threat narrative with licensing requirements is an even worse idea.

4) These could crush innovation and reward the big players.

5) The White House’s use of the Defense Production Act (typically reserved for war or national emergencies) distorts the view of AI by framing it through the lens of security, for example with phrases like “companies developing any foundation model that poses a serious risk to national security.”

6) Like electricity and encryption, AI is a dual-use technology, i.e. it can be used for civilian or military purposes, but conflating AI safety for civilian use cases with AI safety for military applications is a mistake.

7) It’s also a mistake to set reporting requirements based on a computation threshold for model training. Today’s supercomputer is tomorrow’s pocket watch. So as AI progresses, more players, including small companies, will run into this threshold.

8) Over time, governments’ reporting requirements tend to become more burdensome.

9) The right place to regulate AI is at the application layer, e.g. healthcare and self-driving cars.

10) Adding burdens to foundation model development unnecessarily slows down AI’s progress.

I agree with all of these points.

Image source: Andrew Ng