A podcast with Fabrizio del Maffeo of Axelera AI

As CEO and co-founder of Axelera AI, Fabrizio del Maffeo is focused on harnessing the potential of RISC-V, the fifth generation of RISC (reduced instruction set computing) platforms, in edge AI applications. Axelera announced early availability of its in-memory Metis AI platform in September 2023.

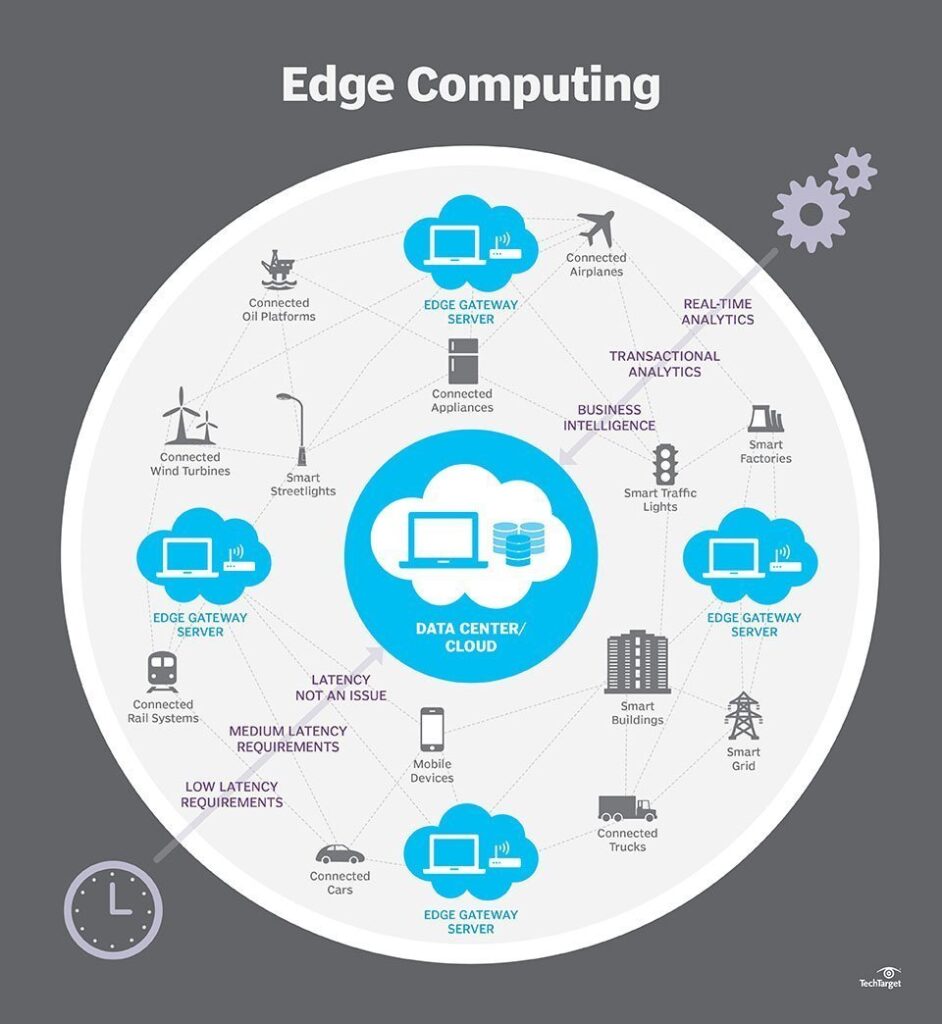

Edge AI is the use of artificial intelligence in an edge computing environment. Edge computing, for its part, moves the responsibility for processing critical workloads closer to where the remote demand for information is, such as a mobile transportation, warehouse, or factory environment. Proximity to the end user means more efficient use of energy and network bandwidth.

Users of the more than 50 billion devices that are currently connected to the internet are underserved, del Maffeo points out. Much of edge computing’s promise is still unrealized, with data taking far too many round trips back to central data centers. The result is massive inefficiency and a poor user experience due to latency that better design could substantially reduce.

The issue isn’t just putting more compute and storage closer to the demand, del Maffeo says. GPUs crafted for gaming purposes aren’t designed for AI, much less AI at the edge. The Nvidia H100 GPUs in demand by major public cloud providers for their data centers draw up to 700 watts per unit, with each unit weighing as much as three kilograms. The challenge of edge AI is handling high volumes of matrix multiplications with limited power and network bandwidth.

To tackle this challenge, Axelera took advantage of the RISC-V architecture’s potential to merge memory and processing functions, as well as to parallelize processing at the edge in new ways. The ultimate goal of platforms such as Axelera’s Metis AI is ambitious: large language model (LLM) processing at the edge.

In this podcast, del Maffeo explores a wide range of edge use cases, from smart cities to automotive to retail. I hope you find the discussion as illuminating as I have.