The recent upheaval at OpenAI, together with the apprehensions of OpenAI’s Chief Scientist about the “safety” of AI, has ignited a fresh wave of concern and fear about the march toward Artificial General Intelligence (AGI) and “Super Intelligence.”

AI safety concerns the development of AI systems that are aligned with human values and do not cause harm to humans. Ilya Sutskever, OpenAI’s Chief Scientist and one of the leading AI scientists behind ChatGPT, has expressed AI safety concerns that include:

- The potential for AI to replace most human jobs and create massive unemployment

- The risk of AI systems becoming misaligned with human goals or developing their own goals that conflict with ours

- The challenge of ensuring that AI systems are transparent, accountable, and controllable by humans

- The ethical and social implications of creating and deploying powerful AI systems that can negatively and incomprehensibly affect hundreds of millions of lives

Sutskever reportedly led the OpenAI board coup that ousted CEO Sam Altman over these AI safety concerns, arguing that Altman was pushing commercialization and growth too fast and too far without adequate attention to the safety and social impact of OpenAI’s AI technologies. Altman has since been reinstated.

The concern about AI “becoming misaligned with human goals or developing their own goals that conflict with ours” deserves serious attention. If you want a refresher on how AI can go wrong, watch the movie “Eagle Eye.” In the movie, an AI model (ARIIA) is designed to protect American lives, but that “desired outcome” is not counterbalanced against other desired outcomes to prevent unintended consequences.

With these concerns about AI models going rogue, I’m going to present a multi-part series on what would be required to create a counter scenario – “AI and Justice in a Brave New World” – that not only addresses the AI concerns and fears but establishes a more impartial and transparent government, legal, and judicial system that promotes a fair playing field in which everyone can participate (have a voice), benefit, and prosper.

AI’s Role: Laws and Regulations Enforcement, not Creation

The Challenge. In this AI scenario, elected officials would still make society’s laws and regulations. Unfortunately, in today’s legal and judicial systems these laws and regulations are not enforced fairly, consistently, or without bias. Some people and organizations use their money and influence to bend the laws and regulations in their favor, while others face discrimination and injustice because of their identity, background, or position in society. This creates a system of inequality and distrust that leads to social disenfranchisement and political extremism. We need to ensure that the laws and regulations are enforced with fairness, equity, consistency, and transparency for everyone.

The Solution. To address unfair, inconsistent, and biased enforcement, we could build AI models that would be responsible for enforcing the laws and regulations legislated by elected officials. With AI in charge of enforcement, there would be no room for prejudice or biased decision-making when enforcing these laws and regulations. Instead, AI systems would ensure that the laws and regulations are applied equitably, fairly, and transparently to everyone. Finally, justice would truly be blind.

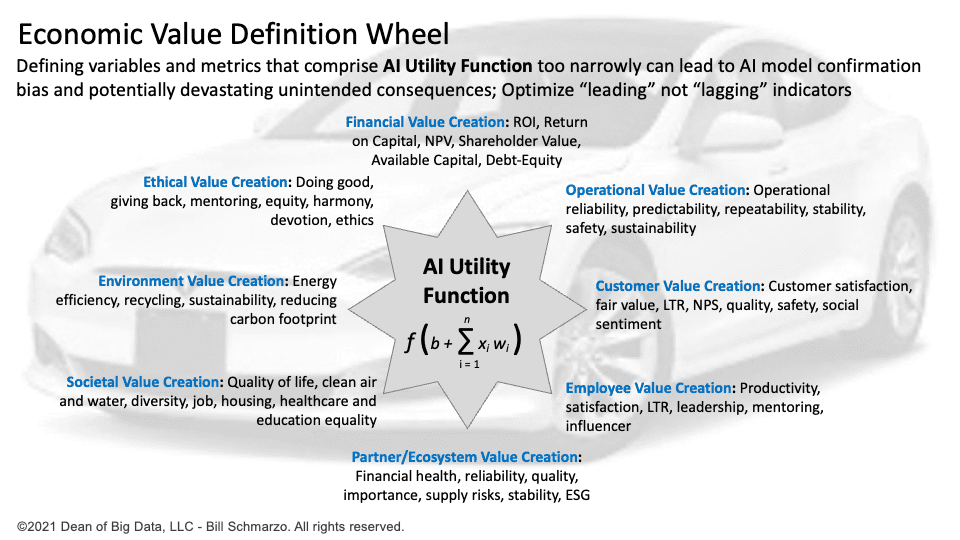

This requires that our legislators define the laws and regulations and collaborate across a diverse set of stakeholders to define the desired outcomes, the importance or value of these desired outcomes, and the variables and metrics against which adherence to those outcomes could be measured. This would necessitate substantial forethought in defining the laws and regulations and identifying the potential unintended consequences of these laws and regulations to ensure that the variables and metrics to flag, avoid, or mitigate those unintended consequences are clearly articulated. Yes, our legislators would need to think more carefully and holistically about including variables and metrics across a more robust set of measurement criteria that can help ensure that the AI models deliver more relevant, meaningful, responsible, and ethical outcomes (Figure 1).

Figure 1: Economic Value Definition Wheel

Developing a Healthy AI Utility Function

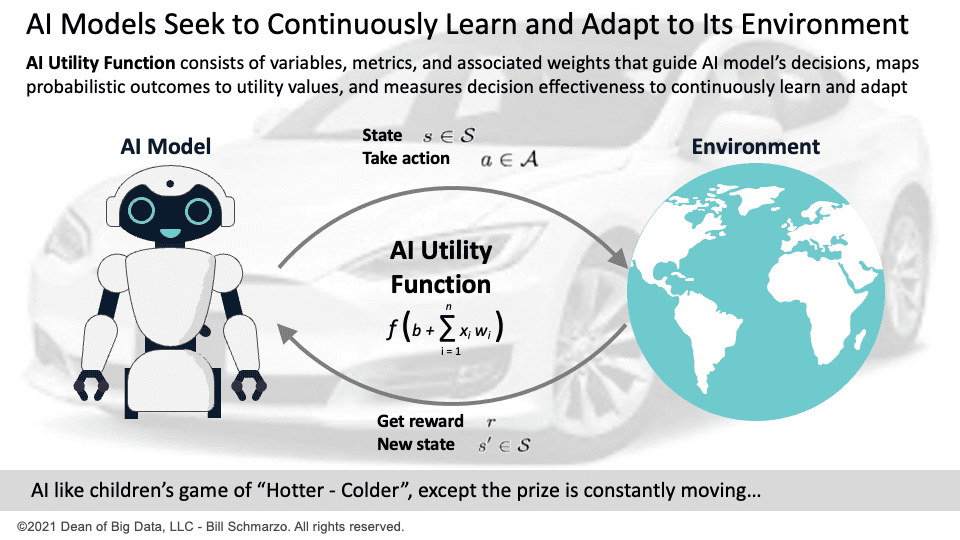

Here is the good news: those desired outcomes, their weights, and the metrics and variables against which the AI model will use to make its decisions and actions could be embedded into the AI Utility Function, the beating heart of the AI model.

The AI Utility Function is a mathematical function that evaluates different actions or decisions that the AI system can take and chooses the one that maximizes the expected utility or value of the desired outcomes.

Figure 2: The AI Utility Function
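To make that definition concrete, here is a minimal sketch of an expected-utility calculation. Everything in it is an illustrative assumption rather than a real enforcement model: the outcome names (`fairness`, `consistency`, `transparency`), their weights, the two candidate actions, and the probabilities are all made up. It simply scores each action as a probability-weighted sum of weighted outcome scores and picks the highest.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """One possible result of an action: its probability and per-metric scores in [0, 1]."""
    probability: float
    scores: dict


# Hypothetical weights that legislators and stakeholders might assign (they sum to 1 here).
WEIGHTS = {"fairness": 0.4, "consistency": 0.3, "transparency": 0.3}

# Hypothetical actions, each with its possible outcomes (probabilities sum to 1 per action).
ACTIONS = {
    "issue_warning": [
        Outcome(0.8, {"fairness": 0.9, "consistency": 0.7, "transparency": 0.8}),
        Outcome(0.2, {"fairness": 0.5, "consistency": 0.6, "transparency": 0.8}),
    ],
    "issue_fine": [
        Outcome(0.6, {"fairness": 0.6, "consistency": 0.9, "transparency": 0.9}),
        Outcome(0.4, {"fairness": 0.4, "consistency": 0.9, "transparency": 0.9}),
    ],
}


def utility(scores, weights):
    """Weighted sum of the desired-outcome scores for a single outcome."""
    return sum(weights[name] * scores[name] for name in weights)


def expected_utility(outcomes, weights):
    """Probability-weighted utility across all possible outcomes of one action."""
    return sum(o.probability * utility(o.scores, weights) for o in outcomes)


def choose_action(actions, weights):
    """Return the name of the action with the highest expected utility."""
    return max(actions, key=lambda name: expected_utility(actions[name], weights))


if __name__ == "__main__":
    for name, outcomes in ACTIONS.items():
        print(name, round(expected_utility(outcomes, WEIGHTS), 3))
    print("chosen:", choose_action(ACTIONS, WEIGHTS))
```

Because every term in the sum is explicit, the same numbers that drive the choice can be logged and reviewed afterward, which is the kind of auditability the article argues for.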

Analyzing the performance of the AI Utility Function provides a means to audit the AI model’s performance and provides transparency into why the AI model made the decisions or took the actions that it did. However, several deficiencies exist today that limit the AI Utility Function’s ability to enforce society’s laws and regulations.

The table below outlines some AI Utility Function challenges and what AI engineers can do to address these challenges in designing and developing a healthy AI Utility Function.

| AI Utility Function Challenge | Potential AI Utility Function Design Options |
| --- | --- |
| An AI utility function is not always well-defined, measurable, or achievable. | – Clarify the objective and desired outcomes that the AI model is trying to optimize and ensure alignment with the problem statement, stakeholder needs, and ethical principles (“Thinking Like a Data Scientist” methodology).<br>– Choose the relevant data, algorithms, and KPIs/metrics to quantify the objective or desired outcomes and validate their quality, relevance, and reliability.<br>– Evaluate the feasibility, practicality, and desirability of the objective or criterion and consider the trade-offs, constraints, and risks involved in achieving it. |
| An AI utility function is not always consistent, stable, or optimal. | – Monitor the objective or criterion that the AI model is trying to optimize and update it as needed to reflect the changes in the operating environment.<br>– Use a multi-objective optimization approach to balance conflicting objectives and outcomes using Pareto-optimal solutions representing the best compromise.<br>– Incorporate uncertainty and robustness into the objective or criterion and use stochastic or adaptive algorithms that handle noise, variability, and unpredictability. |
| An AI utility function is not always transparent, understandable, or explainable. | – Document the objective or desired outcomes the AI model aims to optimize and explain its rationale, assumptions, limitations, and implications.<br>– Demand interpretable, understandable, and explainable data, algorithms, and metrics that can reveal the logic, reasoning, and evidence behind the model’s actions.<br>– Mandate a feedback loop that evaluates outcomes’ effectiveness, continuously learns and adapts the AI Utility Function based upon actual versus predicted outcomes, and enables oversight and intervention. |

Table 1: Addressing AI Utility Function Challenges
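The multi-objective option in the table can be illustrated with a small sketch of Pareto filtering. The candidate policies and their two scores (read them as, say, fairness and consistency, higher being better) are hypothetical values invented for this example. A candidate is kept only if no other candidate is at least as good on every objective and strictly better on at least one.

```python
def dominates(a, b):
    """True if `a` is at least as good as `b` on every objective and strictly better on one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(candidates):
    """Keep only the candidates that no other candidate dominates."""
    return {
        name: scores
        for name, scores in candidates.items()
        if not any(dominates(other, scores) for other in candidates.values())
    }


# Hypothetical enforcement policies scored on two objectives (higher is better).
CANDIDATES = {
    "policy_a": (0.9, 0.5),
    "policy_b": (0.7, 0.8),
    "policy_c": (0.6, 0.6),  # worse than policy_b on both objectives
    "policy_d": (0.4, 0.9),
}

if __name__ == "__main__":
    for name, scores in pareto_front(CANDIDATES).items():
        print(name, scores)
```

The filter only removes options that are clearly inferior. Choosing among the remaining trade-offs still requires the stakeholder-defined weights discussed earlier.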

Summary: AI and Justice in a Brave New World Part 1

Maybe I’m too Pollyannaish. But I refuse to turn over control of this conversation to folks who are only focused on what could go wrong. Instead, let’s take those concerns seriously and create something that both addresses them and benefits everyone.

Part 2 will examine a real-world case study about using AI to deliver consistent, unbiased, and fair outcomes: robot umpires. We will also discuss how we can create AI models that act more human. Part 3 will try to pull it all together by highlighting the potential of AI-powered governance to ensure AI’s responsible and ethical application to society’s laws and regulations.