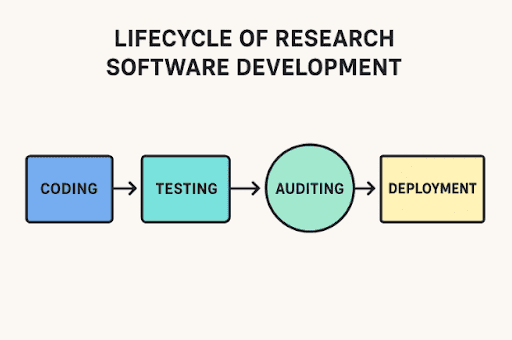

Code audits in R&D-driven applications

Writing software for research isn’t like making a shopping app. It often controls lab instruments, runs detailed simulations, or works through huge sets of experimental…