Here we describe a simple methodology to produce predictive scores that are consistent over time and compatible across various clients, to allow for meaningful comparisons and consistency in actions resulting from these scores, such as offering a loan. Scores are used in various contexts, such as web page rankings in search engines, credit scores, risk scores attached to loans or credit card transactions, the risk that someone might become a terrorist, and more. Typically, a score is a function of a probability attached to some particular future event. Scores are built using training sets.

Scores can become meaningless over time because data evolves. New features (variables) are added that were not available before, or the definition of a metric is suddenly changed (for instance, the way income is measured), resulting in new data that is not compatible with prior data, and in faulty scores. Also, when external data is gathered across multiple sources, each source may compute it differently, resulting in incompatibilities: for instance, when comparing the credit scores of two people who are customers at two different banks, each bank computes the base metrics (income, recency, net worth, and so on) used to build the score in a different way. Sometimes the issue is caused by missing data, especially when users with missing data are very different from those with full data attached to them.

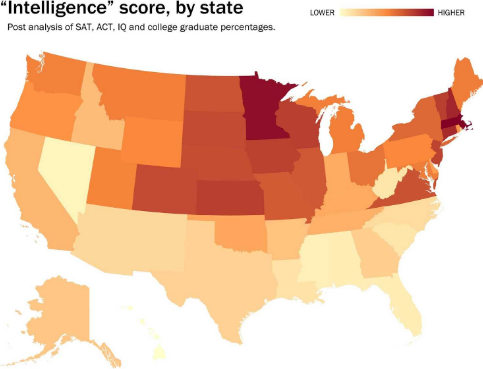

Source for picture: Washington Post

Methodology

The idea to solve this problem is pretty simple. Let’s say that you have two sets of data A and B, for instance corresponding to two different time periods: before, and after a change in the way the data is gathered or the scores are computed. Accordingly, you have two types of scores: S(A), computed on A, and T(B), computed on B. You proceed as follows.

- Compute the scores T(A) on A, using the scoring system T.

- Calibrate T on A; let Z be the calibrated score. Z might be a simple transformation (mapping) of T, chosen so that Z(A) and S(A) have the same mean (or median) and the same variance. You can calibrate using more than two parameters; for instance, you might also want the kurtosis and/or skewness to be preserved.

- The new score to use moving forward, also called re-scaled score, is Z. It is compatible with the previous score S.

- Keep a log of all the changes happening to your score over time (for instance, the change from S to T, followed by transforming T into Z). This is similar to versioning in software development.
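The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes Z is a linear mapping of T (Z = slope * T + intercept) that matches the mean and variance of S on A, and the function names and toy scores are hypothetical.

```python
from statistics import mean, pstdev


def fit_linear_calibration(t_scores_on_a, s_scores_on_a):
    """Return (slope, intercept) so that Z = slope * T + intercept
    has the same mean and variance as S on data set A."""
    t_sd = pstdev(t_scores_on_a)
    if t_sd == 0:
        raise ValueError("T is constant on A; its variance cannot be matched to S")
    slope = pstdev(s_scores_on_a) / t_sd
    intercept = mean(s_scores_on_a) - slope * mean(t_scores_on_a)
    return slope, intercept


def apply_calibration(t_scores, slope, intercept):
    """Turn raw T scores into calibrated Z scores."""
    return [slope * t + intercept for t in t_scores]


# Hypothetical toy data: old scores S(A) and new scores T(A) on the same set A.
s_on_a = [620.0, 680.0, 700.0, 745.0, 790.0]
t_on_a = [0.31, 0.48, 0.52, 0.66, 0.80]

slope, intercept = fit_linear_calibration(t_on_a, s_on_a)
z_on_a = apply_calibration(t_on_a, slope, intercept)

# Z(A) now shares its mean and standard deviation with S(A).
print(round(mean(z_on_a), 6), round(pstdev(z_on_a), 6))
print(round(mean(s_on_a), 6), round(pstdev(s_on_a), 6))
```

Moving forward, the same slope and intercept would be applied to T(B). Matching the median, skewness, or kurtosis as well would require a richer mapping than this two-parameter one.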

You can make it more robust if there is a transition period between A and B, during which both scores S and T can be computed on overlapping data. This is the case if, for instance, the score S can still be computed (in parallel with T) for up to three months after the score T was introduced.
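As a rough sketch of how a transition period could be used, the Python snippet below calibrates T on the records scored by both systems, then measures how far the calibrated score Z is from S on those same records. The record IDs, the scores, and the per-record check are assumptions made for illustration; the calibration is again a simple linear mapping matching mean and variance.

```python
from statistics import mean, pstdev

# Hypothetical scores keyed by record ID, computed during the transition period.
s_scores = {"r1": 610.0, "r2": 655.0, "r3": 700.0, "r4": 720.0, "r5": 780.0}
t_scores = {"r2": 0.40, "r3": 0.55, "r4": 0.58, "r5": 0.81, "r6": 0.92}

# Keep only the records that were scored by both S and T.
overlap = sorted(s_scores.keys() & t_scores.keys())
s_overlap = [s_scores[k] for k in overlap]
t_overlap = [t_scores[k] for k in overlap]

# Linear calibration matching the mean and standard deviation of S on the overlap.
slope = pstdev(s_overlap) / pstdev(t_overlap)
intercept = mean(s_overlap) - slope * mean(t_overlap)
z_overlap = [slope * t + intercept for t in t_overlap]

# Per-record sanity check, only possible because S and T share these records.
mean_abs_gap = mean([abs(z - s) for z, s in zip(z_overlap, s_overlap)])
print(overlap, round(mean_abs_gap, 2))
```

A large gap would suggest that matching two moments is not enough, and that a calibration with more parameters is worth trying.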

An example of how this works in practice is given in this article, in a very similar context. In that article, I discuss a scoring algorithm that blends two sub-scoring procedures for increased performance: one based on robust decision trees (applying to a subset of the data set, say A), and one based on robust regression. Some data (say B) cannot be properly scored using the decision trees, and must be scored with the regression. You then apply the regression-based scoring to the whole data set, and re-scale the score derived from the regression so that, on A, it produces scores compatible with those generated with decision trees. Moving forward, whether you have to use decision trees or regression, you get a consistent score everywhere. A detailed implementation, with Excel spreadsheet and source code, is available here.
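The blending step can be illustrated with the short Python sketch below. It is not the implementation from the article mentioned above: the scores are made up, `None` marks records that the decision trees cannot score (the set B), and the re-scaling is assumed to be a linear mapping matching mean and variance on A.

```python
from statistics import mean, pstdev

# Hypothetical scores; None means the decision trees cannot score the record (set B).
tree_scores = [0.82, 0.35, None, 0.60, None, 0.47]
regression_scores = [2.1, -0.4, 1.3, 0.9, -1.2, 0.2]

# Subset A: records that the decision trees can score.
idx_a = [i for i, s in enumerate(tree_scores) if s is not None]
tree_a = [tree_scores[i] for i in idx_a]
reg_a = [regression_scores[i] for i in idx_a]

# Re-scale the regression score so that, on A, it has the same mean and
# standard deviation as the tree-based score.
slope = pstdev(tree_a) / pstdev(reg_a)
intercept = mean(tree_a) - slope * mean(reg_a)
rescaled = [slope * r + intercept for r in regression_scores]

# Blended score: tree-based where available, re-scaled regression elsewhere.
blended = [t if t is not None else z for t, z in zip(tree_scores, rescaled)]
print([round(b, 3) for b in blended])
```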

For more on this scoring technology, with application to scoring internet traffic (measuring its quality depending on the traffic source), read my technical article (PDF), here. Score preservation is discussed on pages 22-26. Or you might want to check my patent on this topic, here.

To not miss this type of content in the future, subscribe to our newsletter. For related articles from the same author, click here or visit www.VincentGranville.com. Follow me on LinkedIn, or visit my old web page here.
