This article covers two R versions (standard and improved), a Python version, and a Perl version of a new machine learning technique recently published here. We asked for help translating the original Perl script to Python and R, and decided to work with Naveenkumar Ramaraju, who is currently pursuing a master’s in Data Science at Indiana University. The Python and R versions are his.

We believe that this code comparison and translation will be very valuable to anyone learning Python or R with the purpose of applying it to data science and machine learning.

The code

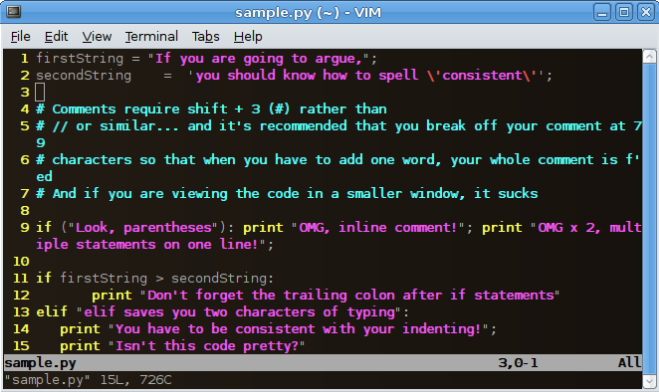

The source code is easy to read and has deliberately been made longer than needed to provide enough detail, avoid complicated iterations, and facilitate maintenance. The main output file is hdt-out2.txt. The input data set is HDT-data3.txt. You need to read this article (see section 4 after clicking, it has been updated) to check out what the code is trying to accomplish. In short, it is an algorithm to classify blog posts as popular or not based on extracted features (mostly keywords in the title).
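To make the idea of keyword features concrete, here is a minimal Python sketch. It is not the published algorithm and does not read HDT-data3.txt: the function names, the tokenization rule, and the sample titles are all assumptions made for illustration. It only shows how title keywords can be turned into per-keyword counts of total and popular posts, the kind of table a classifier could be built on.

```python
import re
from collections import defaultdict


def title_features(title):
    """Return the set of lowercase alphanumeric tokens found in a title."""
    return set(re.findall(r"[a-z0-9]+", title.lower()))


def keyword_stats(posts):
    """Map each keyword to [number of posts, number of popular posts]."""
    stats = defaultdict(lambda: [0, 0])
    for title, is_popular in posts:
        for word in title_features(title):
            stats[word][0] += 1
            stats[word][1] += int(is_popular)
    return dict(stats)


# Made-up titles and labels, for illustration only (not the real data set).
posts = [
    ("Python for data science", True),
    ("R for data science", False),
    ("Python cheat sheet", True),
]
stats = keyword_stats(posts)
```

A keyword whose popular count is close to its total count is a candidate signal of popularity; how such counts are combined into a decision is described in the linked article, not here.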

The code has been written in Perl, R and Python. Perl and Python run faster than R. Click on the relevant link below to access the source code, available as a text file. The code, originally written in Perl, was translated to Python and R by Naveenkumar Ramaraju.

- Python version

- Perl version

- R version

- Improved R version

For those learning Python or R, this is a great opportunity.

Note regarding the R implementation

Required library: hash (R doesn’t have a built-in hash or dictionary without imports). You can use either of the script files below.

- The standard version is a literal translation of the Perl code, with the same variable names to the maximum extent possible.

- The improved version uses functions, more data frames, and a more R-like approach to reduce running time (about 30% faster) and the number of lines of code. Variable names differ from the Perl version. The output file uses a comma (,) as the delimiter between IDs.

Instructions to run: Place the R file and HDT-data3.txt (input file) in the root folder of the R environment. Execute the ‘.R’ file in RStudio or using the command line: > Rscript HDT_improved.R

R is known to be slow in text parsing. We can optimize further by using data frames if all inputs are within double quotes or have no quotes at all.

Julia version

This was added by Andre Bieler. The comments below are from him.

For what it’s worth, I did a quick translation from Python to Julia (v0.5) and attached a link to the file below; feel free to share it. I stayed as close as possible to the Python version, so it is not necessarily the most “Julian” code. A few remarks about benchmarking, since I see this briefly mentioned in the text:

- This code is absolutely not tuned for performance, since everything is done in global scope. (In Julia it would be good practice to put everything in small functions.)

- Generally, for run times of only a few tenths of a second, Python will be faster due to Julia’s compilation time.

Julia really starts paying off for longer execution times. Click here to get the Julia code.
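For readers who want to compare run times themselves, the sketch below shows one common way to time a piece of work in Python: wrap it in a function and keep the best of several runs, so that one-off startup costs weigh less on the result. The helper name `time_call` and the word-counting workload are hypothetical, chosen only for illustration; they are not taken from any of the scripts linked above.

```python
import time


def time_call(func, *args, repeats=5):
    """Return the best wall-clock time in seconds over several runs of func."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        best = min(best, time.perf_counter() - start)
    return best


def count_words(lines):
    """Hypothetical workload: count word occurrences across lines of text."""
    counts = {}
    for line in lines:
        for word in line.split():
            counts[word] = counts.get(word, 0) + 1
    return counts


# Synthetic input, for illustration only.
lines = ["python r perl julia"] * 10_000
elapsed = time_call(count_words, lines)
print(f"best of 5 runs: {elapsed:.4f} s")
```

Timings from a sketch like this depend on the machine and the input size, so they are only meaningful when each language is measured on the same data.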
