Bad data is worse than no data at all.

- What is “good” data and where do you find it?

- Best practices for data analysis.

There’s no such thing as perfect data, but there are several factors that qualify data as good [1]:

- It’s readable and well-documented.

- It’s readily available. For example, it’s accessible through a trusted digital repository.

- It’s tidy and reusable by others, with a focus on ease of (re-)executability and reliance on deterministically obtained results [2].

Following a few best practices will help make any data you collect and analyze as good as it can be.

1. Collect Data Carefully

Even good data sets come with flaws, and those flaws should be readily apparent. For example, an honest data set will have any errors or limitations clearly noted. However, it’s really up to you, the analyst, to make an informed decision about the quality of the data once you have it in hand. Use the same due diligence you would take in making a major purchase: once you’ve found your “perfect” data set, run a few more web searches with the goal of uncovering any flaws.

Some key questions to consider [3]:

- Where did the numbers come from? What do they mean?

- How was the data collected?

- Is the data current?

- How accurate is the data?
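Some of these questions can be partly answered with a quick automated audit before any analysis begins. The following is a minimal sketch, not a complete validation routine: the sample CSV, its column names (`county`, `year`, `population`) and the `audit_rows` helper are all hypothetical, made up for illustration.

```python
import csv
import io

# Hypothetical sample standing in for a downloaded CSV file.
SAMPLE_CSV = """county,year,population
Adams,2019,1500
Baker,2020,
Clark,2020,-20
Dixon,2018,3200
"""


def audit_rows(csv_text):
    """Count missing and impossible population values and find the newest year."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # A blank cell means the value was never recorded.
    missing = sum(1 for row in rows if not row["population"].strip())
    # A negative population is impossible, so it signals a data-entry error.
    negative = sum(
        1
        for row in rows
        if row["population"].strip() and int(row["population"]) < 0
    )
    # The newest year gives a rough answer to "Is the data current?"
    newest_year = max(int(row["year"]) for row in rows)
    return {
        "rows": len(rows),
        "missing": missing,
        "negative": negative,
        "newest_year": newest_year,
    }


report = audit_rows(SAMPLE_CSV)
print(report)
```

A check like this won’t tell you where the numbers came from or how they were collected, but it does surface gaps and impossible values early, before they leak into your results.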

Three great sources to collect data from

U.S. Census Bureau

U.S. Census Bureau data is available to anyone for free. To download a CSV file:

- Go to data.census.gov [4].

- Search for the topic you’re interested in.

- Select the “Download” button.

The wide range of good data held by the Census Bureau is staggering. For example, I typed “Institutional” to bring up the population in institutional facilities by sex and age, while data scientist Emily Kubiceka used U.S. Census Bureau data to compare hearing and deaf Americans [5].

Data.gov

Data.gov [6] contains data from many different U.S. government agencies, covering topics such as climate, food safety, and government budgets. There’s a staggering amount of information to be gleaned. As an example, I found 40,261 datasets for “covid-19” including:

- Louisville Metro Government estimated expenditures related to COVID-19.

- State of Connecticut statistics for Connecticut correctional facilities.

- Locations offering COVID-19 testing in Chicago.

Kaggle

Kaggle [7] is a huge repository for public and private data. It’s where you’ll find data from The University of California, Irvine’s Machine Learning Repository, data on the Zika virus outbreak, and even data on people attempting to buy firearms. Unlike the government websites listed above, you’ll need to check the license information for re-use of a particular dataset. Plus, not all data sets are wholly reliable: check your sources carefully before use.

2. Analyze with Care

So, you’ve found the ideal data set, and you’ve checked it to make sure it’s not riddled with flaws. Your analysis is going to be passed along to many people, most (or all) of whom aren’t mind readers. They may not know what steps you took in analyzing your data, so make sure your steps are clear with the following best practices [3]:

- Don’t use X, Y or Z for variable names or units. Do use descriptive names like “2020 prison population” or “Number of ice creams sold.”

- Don’t guess which models fit. Do perform exploratory data analysis, check residuals, and validate your results with out-of-sample testing when possible.

- Don’t create visual puzzles. Do create well-scaled graphs with appropriate titles and axis labels. Other tips [8]: use readable fonts, keep legends small and neat, and avoid overlapping text.

- Don’t assume that regression is a magic tool. Do test for linearity and normality, transforming variables if necessary.

- Don’t pass on a model unless you know exactly what it means. Do be prepared to explain the logic behind the model, including any assumptions made.

- Don’t leave out uncertainty. Do report your standard errors and confidence intervals.

- Don’t delete your modeling scratch paper. Do leave a paper trail, like annotated files, for others to follow. Your successor (when you’ve moved on to greener pastures) will thank you.
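Several of these practices can be sketched in a few lines of code: descriptive variable names, a residual check, and reported uncertainty. The example below is only an illustration under stated assumptions. The temperature and sales figures are invented, the model is a plain least-squares line, and the critical value is hard-coded from a standard t-table for this sample size rather than computed.

```python
import math
import statistics

# Hypothetical observations; descriptive names instead of x and y.
daily_high_temp_c = [18.0, 21.0, 24.0, 27.0, 30.0, 33.0]
ice_creams_sold = [120.0, 150.0, 185.0, 205.0, 250.0, 270.0]

# Two-sided 95% critical value of Student's t with 4 degrees of freedom
# (n - 2 for six observations), taken from a standard t-table.
T_CRITICAL_95_DF4 = 2.776

temp_mean = statistics.fmean(daily_high_temp_c)
sales_mean = statistics.fmean(ice_creams_sold)

# Ordinary least squares fit: sales = intercept + slope * temperature.
temp_sum_of_squares = sum((t - temp_mean) ** 2 for t in daily_high_temp_c)
slope = sum(
    (t - temp_mean) * (s - sales_mean)
    for t, s in zip(daily_high_temp_c, ice_creams_sold)
) / temp_sum_of_squares
intercept = sales_mean - slope * temp_mean

# Residuals: inspect these for patterns before trusting the model.
residuals = [
    s - (intercept + slope * t)
    for t, s in zip(daily_high_temp_c, ice_creams_sold)
]

# Standard error and 95% confidence interval for the slope.
degrees_of_freedom = len(ice_creams_sold) - 2
residual_variance = sum(r ** 2 for r in residuals) / degrees_of_freedom
slope_std_error = math.sqrt(residual_variance / temp_sum_of_squares)
ci_low = slope - T_CRITICAL_95_DF4 * slope_std_error
ci_high = slope + T_CRITICAL_95_DF4 * slope_std_error

print(f"Extra ice creams sold per degree C: {slope:.2f}")
print(f"Standard error: {slope_std_error:.2f}")
print(f"95% confidence interval: ({ci_low:.2f}, {ci_high:.2f})")
print("Residuals:", [round(r, 1) for r in residuals])
```

Keeping a commented script like this alongside your results also doubles as the paper trail: anyone who inherits the analysis can rerun it and see each assumption.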

3. Don’t be the weak link in the chain

Bad data doesn’t appear from nowhere. That data set you started with was created by someone, possibly several people, in several different stages. If they too have followed these best practices, then the result will be a helpful piece of data analysis. But if you introduce errors and fail to account for them, those errors are going to be compounded as the data gets passed along.

References

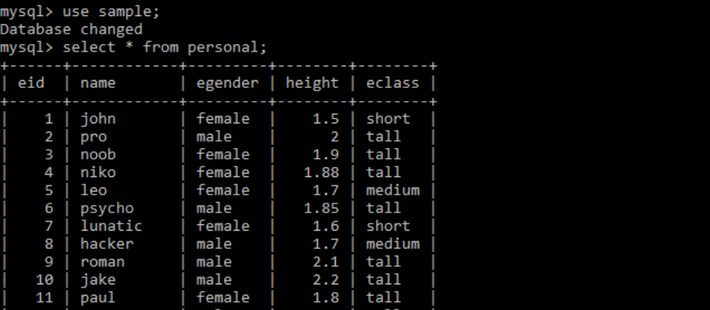

Data set image: Pro8055, CC BY-SA 4.0 via Wikimedia Commons

[2] Learning from reproducing computational results: introducing three …

[3] How to avoid trouble: principles of good data analysis

[4] United States Census Bureau

[5] Better data lead to better forecasts

[6] Data.gov

[7] Kaggle
