I was asked this question: “What distributions do we need to use Deep Learning?”

This is a question with a multi-faceted answer.

The direct answer is that algorithms traditionally referred to as deep learning (MLP, CNN, LSTM) do not need the data distribution to be specified in advance, because they are model-free (as opposed to model-based).
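Here is a minimal sketch of what "model-free" means in practice. It is a one-hidden-layer MLP, the simplest member of the MLP family mentioned above, written in plain Python for illustration. The function name, the XOR data, and the hyperparameters are hypothetical choices. The network is never told how its inputs are distributed, and gradient descent simply adjusts the weights to reduce the loss on the examples. The sigmoid output and cross-entropy loss are still design choices, so "model-free" here refers to the data distribution, not to an absence of every assumption.

```python
import math
import random


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def train_xor_mlp(hidden=4, lr=0.5, epochs=5000, seed=0):
    """Hypothetical helper: train a 2-input, one-hidden-layer MLP on XOR
    with per-example gradient descent.

    Nothing about how the inputs are distributed is specified; the weights
    are adjusted only to reduce the loss on the examples themselves.
    Returns (initial_loss, final_loss, predictions).
    """
    rng = random.Random(seed)
    data = [((0.0, 0.0), 0), ((0.0, 1.0), 1), ((1.0, 0.0), 1), ((1.0, 1.0), 0)]
    w1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)]  # input -> hidden
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden -> output
    b2 = 0.0

    def forward(x):
        h = [math.tanh(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        return h, sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)

    def mean_loss():
        # Mean binary cross-entropy; p is clipped to avoid log(0)
        total = 0.0
        for x, y in data:
            p = min(max(forward(x)[1], 1e-12), 1 - 1e-12)
            total -= math.log(p) if y == 1 else math.log(1 - p)
        return total / len(data)

    initial = mean_loss()
    for _ in range(epochs):
        for x, y in data:
            h, p = forward(x)
            d_out = p - y  # gradient of cross-entropy w.r.t. the output logit
            for j in range(hidden):
                # Backprop through tanh, using w2[j] before it is updated
                d_h = d_out * w2[j] * (1 - h[j] ** 2)
                w2[j] -= lr * d_out * h[j]
                w1[j][0] -= lr * d_h * x[0]
                w1[j][1] -= lr * d_h * x[1]
                b1[j] -= lr * d_h
            b2 -= lr * d_out
    return initial, mean_loss(), [forward(x)[1] for x, _ in data]


initial_loss, final_loss, preds = train_xor_mlp()
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}")
print([round(p, 2) for p in preds])
```

XOR is a convenient test because its classes are not linearly separable. A model that fixes the log-odds as linear in the raw inputs cannot fit it, while the hidden layer lets the network build its own representation from the data.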

There is a good explanation below.

Note that the terminology used in this paper (model-free vs. model-based) is normally used in the context of reinforcement learning.

In this context, however, it has the same meaning as parametric vs. non-parametric (see the sentence on non-parametric representations in the excerpt).

Model-based and Model-free Machine Learning Techniques for Diagnost…

Machine Learning methods for prediction, classification, forecasting and data-mining

Both model-based and model-free techniques may be employed for prediction of specific clinical outcomes or diagnostic phenotypes. The application of model-based approaches heavily depends on the a priori statistical statements, such as specification of relationship between variables (e.g. independence) and the model-specific assumptions regarding the process probability distributions (e.g., the outcome variable may be required to be binomial). Examples of model-based methods include generalized linear models. Logistic regression is one of the most commonly used model-based tools, which is applicable when the outcome variables are measured on a binary scale (e.g., success/failure) and follow Bernoulli distribution [10]. Hence, the classification process can be carried out based on the estimated probabilities. Investigators have to carefully examine and confirm the model assumptions and choose appropriate link functions. Since the statistical assumptions do not always hold in real life problems, especially for big incongruent data, the model-based methods may not be applicable or may generate biased results.

In contrast, model-free methods adapt to the intrinsic data characteristics without the use of any a priori models and with fewer assumptions. Given complicated information, model-free techniques are able to construct non-parametric representations, which may also be referred to as (non-parametric) models, using machine learning algorithms or ensembles of multiple base learners without simplification of the problem.

In the present study, several model-free methods are utilized, e.g., Random Forest [11], AdaBoost [12], XGBoost [13], Support Vector Machines [14], Neural Network [15], and SuperLearner [16]. These algorithms benefit from constant learning, or retraining, as they do not guarantee optimized classification/regression results. However, when trained, maintained and reinforced properly and effectively, model-free machine learning methods have great potential in solving real-world problems (prediction and data-mining). The morphometric biomarkers that were identified and reported here may be useful for clinical decision support and assist with diagnosis and monitoring of Parkinson’s disease.
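The contrast this excerpt draws between model-based and model-free methods can be sketched in a few lines of plain Python. Logistic regression stands in for the model-based side: the Bernoulli outcome and the logit link are fixed before any data is seen, and only `w` and `b` are estimated. For the model-free side, the sketch swaps in k-nearest neighbours. It is not one of the study's methods, but it is the simplest non-parametric classifier to write out. All function names and toy data here are hypothetical.

```python
import math
from collections import Counter


# --- Model-based: logistic regression (Bernoulli outcome, logit link) ---

def sigmoid(t: float) -> float:
    # Inverse of the logit link: maps a linear score to a probability in (0, 1)
    return 1.0 / (1.0 + math.exp(-t))


def fit_logistic(xs, ys, lr=0.1, epochs=2000):
    """Hypothetical helper. Assumes a priori that y ~ Bernoulli(sigmoid(w*x + b))
    and fits w, b by gradient ascent on the average log-likelihood (1-D input)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        residuals = [y - sigmoid(w * x + b) for x, y in zip(xs, ys)]
        w += lr * sum(r * x for r, x in zip(residuals, xs)) / n
        b += lr * sum(residuals) / n
    return w, b


# --- Model-free: k-nearest neighbours (no distribution assumed) ---

def knn_predict(train_x, train_y, query, k=3):
    """Hypothetical helper. Majority vote among the k training points closest
    to `query`; the stored training set itself is the non-parametric model."""
    nearest = sorted(zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Toy 1-D data for the logistic model (deliberately not perfectly separable)
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 1, 0, 1, 1]
w, b = fit_logistic(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, P(y=1 | x=2)={sigmoid(w * 2.0 + b):.3f}")

# Toy 2-D data for k-NN: class "a" near the origin, class "b" near (5, 5)
train_x = [(0.0, 0.0), (0.5, 0.2), (0.1, 0.8), (5.0, 5.0), (5.3, 4.7), (4.8, 5.4)]
train_y = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(train_x, train_y, (0.3, 0.3)))  # → a
print(knn_predict(train_x, train_y, (4.9, 5.1)))  # → b
```

The logistic model is only as good as its assumption that the log-odds are linear in `x`. k-NN makes no such assumption, but it pays for that by storing the training data and by depending on a sensible distance measure and choice of `k`.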

There is a caveat, though.

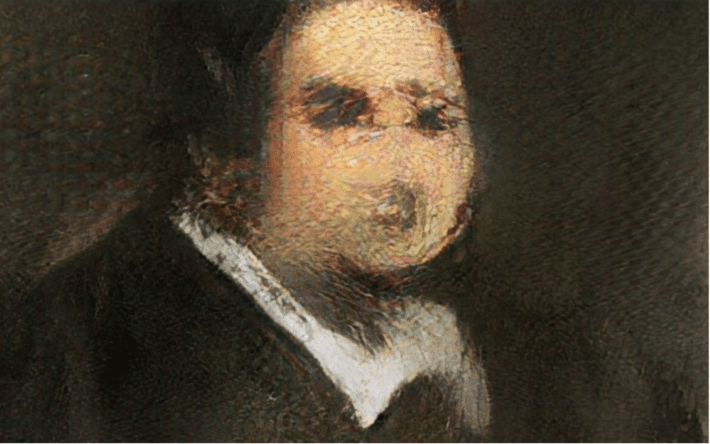

GANs (a type of deep learning model) can be used to learn a representation of the data distribution itself. This is exactly what is needed when GANs are used to generate synthetic data; see this link:

Generative adversarial networks GANs for synthetic dataset generati…

We show that synthetic data generative methods such as GANs are learning the true data distribution of the training dataset and are capable of generating new data points from this distribution with some variations and are not merely reproducing the old (training) data the model has been trained on.
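To see why a GAN ends up modelling the distribution itself, recall the minimax objective of the original GAN formulation (Goodfellow et al., 2014). Here $G$ maps noise $z \sim p_z$ to samples, and $D$ estimates the probability that a sample came from the real data:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

For a fixed generator whose samples follow $p_g$, the optimal discriminator is

$$
D^*(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)},
$$

and substituting it back gives $V(D^*, G) = -\log 4 + 2\,\mathrm{JSD}(p_{\text{data}} \,\|\, p_g)$. This is minimized exactly when $p_g = p_{\text{data}}$. In other words, the training target is the data distribution itself, which is the caveat above. In practice, training only approximates this optimum.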

That’s what I mean by a multi-faceted answer.

Image source: Christie’s – the highest-priced artwork created using a GAN – Portra…