I recently read a very popular article entitled 5 Reasons “Logistic Regression” should be the first thing you learn when becoming a Data Scientist. Here I provide my opinion on why this should not be the case.

It is nice to have logistic regression on your resume, as many jobs request it, especially in some fields such as biostatistics. And if you learned the details during your college classes, good for you. However, for a beginner, this is not the first thing you should learn. In my career, being an isolated statistician (working with marketing guys, sales people, or engineers) in many of my roles, I had the flexibility to choose which tools and methodology to use. Many practitioners today are in a similar environment. If you are a beginner, chances are that you would use logistic regression as a black-box tool with little understanding about how it works: a recipe for disaster.

Here are 5 reasons it should be the last thing to learn:

- There are hundreds of types of logistic regression, some for categorical variables, some with curious names such as Poisson regression. It is confusing for the expert, and even more so for the beginner and for your boss.

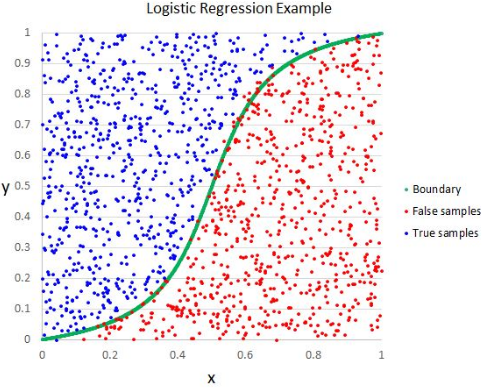

- If you transform your response (often a proportion or a binary response such as fraud or no fraud in this context), you can instead use a linear regression. While purists claim that an actual logistic regression is more precise (from a theoretical perspective), model precision is irrelevant: it is the quality of your data that matters. A model with 1% extra accuracy does not help if your data has 20% noise, or if your theoretical model is only a rough approximation of reality.

- Unless properly addressed (e.g. using ridge or Lasso regression), logistic regression leads to over-fitting and a lack of robustness against noisy, missing, or dirty data. The algorithm to compute the coefficients is unnecessarily complicated (an iterative algorithm using techniques such as gradient optimization).

- The coefficients in a logistic regression are not easy to interpret. When you explain your model to decision makers or non-experts, few will understand.

- The best models usually blend multiple methods together to capture / explain as much variance as possible. I never used pure logistic regression in my 30-year-long career as a data scientist, yet I have developed a hybrid technique that is more robust, easier to use and to code, and leads to simple interpretation. It blends “impure” logistic regression with “impure” decision trees in a way that works great, especially for scoring problems in which your data is “impure.” You can find the details here.
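
To make the second point concrete, here is a minimal sketch of what "transform your response, then use a linear regression" could look like. It is only one possible reading of that idea, not the hybrid technique mentioned above. The data is made up, the function names (`empirical_logit`, `fit_linear_on_logit`, `predict_proportion`) are hypothetical, and the 0.5 correction inside the log-odds is an assumption added so that proportions of exactly 0 or 1 do not blow up.

```python
import numpy as np

def empirical_logit(successes, trials):
    """Log-odds of observed proportions, with a 0.5 correction so that
    proportions of exactly 0 or 1 stay finite (an assumption of this sketch)."""
    successes = np.asarray(successes, dtype=float)
    trials = np.asarray(trials, dtype=float)
    return np.log((successes + 0.5) / (trials - successes + 0.5))

def fit_linear_on_logit(x, successes, trials):
    """Ordinary least squares of the transformed response on x.
    Solved in one step, with no iterative optimization.
    Returns (intercept, slope)."""
    x = np.asarray(x, dtype=float)
    design = np.column_stack([np.ones_like(x), x])
    target = empirical_logit(successes, trials)
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return coef[0], coef[1]

def predict_proportion(x, intercept, slope):
    """Map the linear prediction back to a proportion in (0, 1)."""
    z = intercept + slope * np.asarray(x, dtype=float)
    return 1.0 / (1.0 + np.exp(-z))

# Made-up data: fraud counts out of 200 transactions
# in five hypothetical risk buckets.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
trials = np.full(5, 200)
successes = np.array([4, 9, 22, 47, 90])

intercept, slope = fit_linear_on_logit(x, successes, trials)
fitted = predict_proportion(x, intercept, slope)
print(intercept, slope)
print(fitted)
```

This works directly when the response is a proportion. A raw binary response (fraud or no fraud per row) would first need to be grouped into buckets, as in the made-up data here, since the log-odds of a single 0 or 1 is not finite. The fit is also unweighted, so buckets with few observations count as much as large ones; weighting by bucket size would be a natural refinement.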

For related articles from the same author, click here or visit www.VincentGranville.com. Follow me on LinkedIn.
