This is a popular question recently posted on Quora, with my answer viewed more than 8,000 times so far. I am re-posting it here. This post is much more detailed than my initial answer.

---

My answer may appear sarcastic: after all, I am a math PhD and have published in journals such as the Journal of Number Theory. I left academia long ago, yet I am still doing what I think is ground-breaking research in math, in my opinion superior to what I did during my postdoc years. Still, I believe many mathematicians would view my recent articles as “bad math”. In short, they are written in simple English, accessible to a large audience, and free of complex proofs, arcane theory, or esoteric formulas. There is no way they could ever be published in any scientific journal (I haven’t tried, so I could be wrong on this, though it would take tons of time that I don’t have to format and edit them for such publications). I will go as far as to say that my research is essentially “anti-math”. You can see my most recent article here: New Decimal Systems – Great Sandbox for Data Scientists and Mathema….

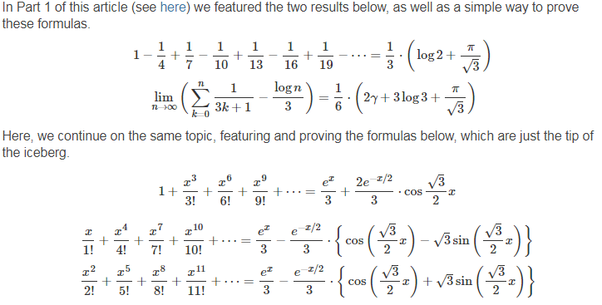

Here is another example of “bad math” from me: the formulas are correct, and possibly discovered by me for the first time (I doubt it, but you never know), but the simple way I “prove” them would be considered bad math.

To read this article, featuring these formulas, with a proof that a first-year college student could understand, click here. I read elsewhere that math research is now so specialized that soon nobody will be able to add anything new. Some have said that proving theorems will soon be done by AI rather than by humans. And in my proof of the above formulas, I actually used an API to automatically compute some integrals.

Yet, in general, I disagree, and I believe that the “high priests” of the mathematical community are making it very hard for anyone else to be accepted and contribute. By contrast, my articles are easily accessible, and they discuss fundamental concepts with plenty of low-hanging fruit for research (including business applications and state-of-the-art number theory) that “high priest” mathematicians are no longer interested in.

Hopefully, I am helping to make the field of mathematics appealing to people who were turned off during their high school or college classes by the abundance of jargon, the lack of interesting applications, and the top-down approach (highly technical material first, with potential applications described later in hard-to-understand language, which is the opposite of my approach). At the same time, I never had to lower the quality or value of my research to make it accessible (and fun to read) to a large audience of non-experts. The only change is my writing style.

For related articles from the same author, click here or visit www.VincentGranville.com. Follow me on LinkedIn.
