This article was written by Adit Deshpande.

This is the 3rd installment of a new series called Deep Learning Research Review.

Introduction to Natural Language Processing:

Introduction

Natural language processing (NLP) is all about creating systems that process or “understand” language in order to perform certain tasks. These tasks could include:

- Question Answering (What Siri, Alexa, and Cortana do)

- Sentiment Analysis (Determining whether a sentence has a positive or negative connotation)

- Image to Text Mappings (Generating a caption for an input image)

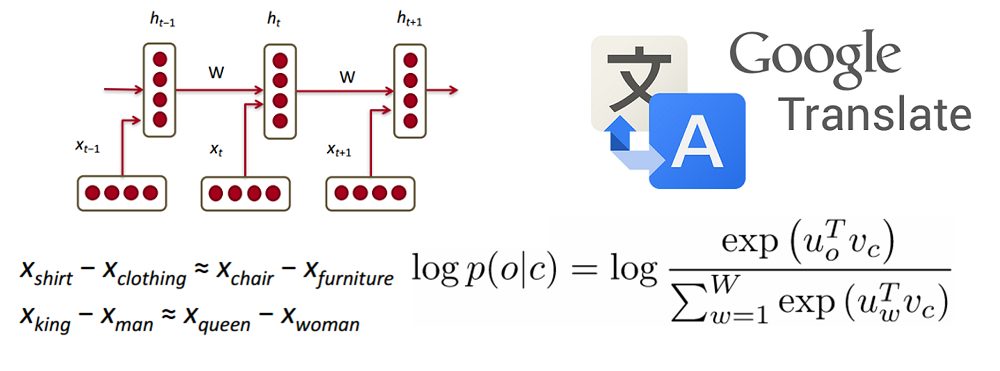

- Machine Translation (Translating a paragraph of text to another language)

- Speech Recognition

- Part of Speech Tagging

- Named Entity Recognition

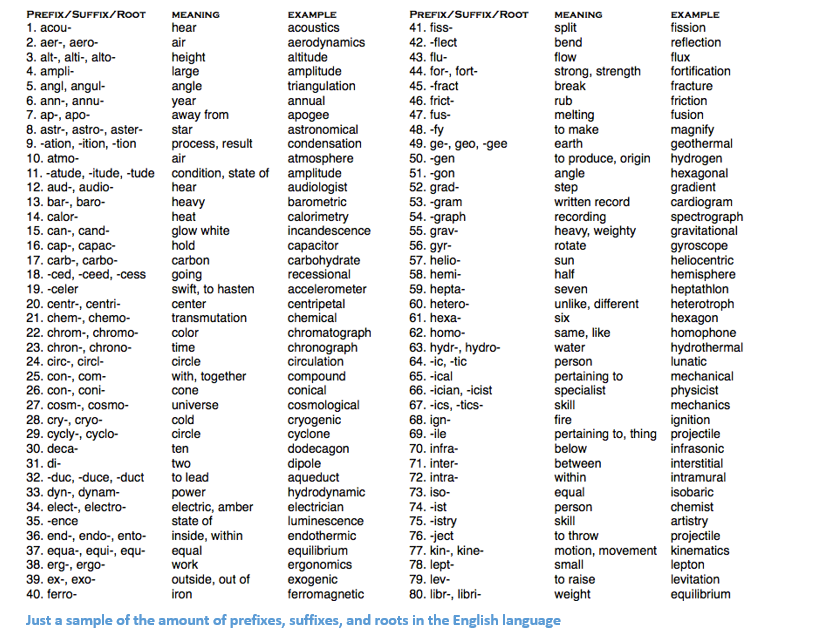

The traditional approach to NLP involved a lot of domain knowledge of linguistics itself. Understanding terms such as phonemes and morphemes was pretty standard, as there are whole linguistics classes dedicated to their study. Let’s look at how traditional NLP would try to understand the following word.

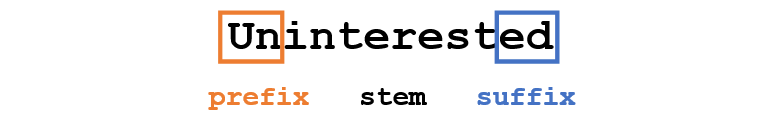

Let’s say our goal is to gather some information about this word (characterize its sentiment, find its definition, etc.). Using our domain knowledge of language, we can break up this word into 3 parts: “un”, “interest”, and “ed”.

We understand that the prefix “un” indicates an opposing or opposite idea, and we know that “ed” can specify the time period (past tense) of the word. By recognizing the meaning of the stem word “interest”, we can easily deduce the definition and sentiment of the whole word. Seems pretty simple, right? However, when you consider all the different prefixes and suffixes in the English language, it would take a very skilled linguist to understand all the possible combinations and meanings.
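To make the rule-based idea concrete, here is a minimal Python sketch of the prefix/stem/suffix split described above. The affix tables and the `split_morphemes` helper are hypothetical and made up purely for illustration; they are not taken from any linguistics library, and a real system would need far larger tables and many more rules.

```python
# Hypothetical, deliberately tiny affix tables (illustration only).
PREFIXES = {"un": "opposite of", "re": "again"}
SUFFIXES = {"ed": "past tense", "ing": "ongoing action"}


def split_morphemes(word):
    """Split a word into (prefix, stem, suffix) using the tables above.

    A part that is not found is returned as an empty string.
    """
    # Take the first known prefix the word starts with, if any.
    prefix = next((p for p in PREFIXES if word.startswith(p)), "")
    rest = word[len(prefix):]

    # Take the first known suffix, making sure some stem is left over.
    suffix = next(
        (s for s in SUFFIXES if rest.endswith(s) and len(rest) > len(s)), ""
    )
    stem = rest[: len(rest) - len(suffix)] if suffix else rest
    return prefix, stem, suffix


print(split_morphemes("uninterested"))  # ('un', 'interest', 'ed')
print(split_morphemes("under"))         # ('un', 'der', '')
```

The second call shows why this approach gets hard quickly: “under” merely starts with the letters “un”, so the naive rule strips a prefix that is not really there. Every such exception would need its own hand-written rule.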

How Deep Learning Fits In:

Deep learning, at its most basic level, is all about representation learning. With CNNs, we see the composition of different filters that are used to classify objects into categories. Here, we’re going to take a similar approach with creating representations of words through large datasets.

Overview of This Post:

This post is structured so that we first go through the basic building blocks of deep networks for NLP and then look at some applications through recent research papers. It’s normal not to know exactly why we’re using RNNs or why an LSTM is helpful at first, but hopefully by the end of the research papers, you’ll have a better sense of why deep learning techniques have helped NLP so much.

The full original article also covers three papers: Memory Networks, Tree LSTMs for Sentiment Analysis, and Neural Machine Translation.
