While organizations shift towards establishing a data culture across the enterprise, many are still struggling to get their data right. Pulling data from disparate sources, and getting varying formats and representations of what is supposed to be the same information, causes serious roadblocks in your data journey. Teams experience delays and mistakes while carrying out routine operations or extracting insights from datasets. Such problems force businesses to introduce a data standardization mechanism that ensures data is presented in a consistent and uniform view across the organization.

Let’s take a deeper look at the data standardization process: what it means, the steps it entails, and how you can achieve a standard data view in your enterprise.

What is data standardization?

Simply put, data standardization is the process of transforming data values from an incorrect format to a correct one. To enable a standardized, uniform, and consistent data view across the organization, the data values must conform to the required standard in the context of the data fields they belong to.

Examples of Data Standardization

For example, the same customer’s record residing at two different locations should not contain discrepancies in first and last names, email address, phone number, and residential address:

Source 1

| Name | Email Address | Phone Number | DOB | Gender | Residential Address |
| --- | --- | --- | --- | --- | --- |
| John Oneel | [email protected] | 5164659494 | 14/2/1987 | M | 11400 W Olimpic BL # 200 |

Source 2

| First Name | Last Name | Email Address | Phone Number | DOB | Gender | Residential Address |
| --- | --- | --- | --- | --- | --- | --- |
| Johnathan | O’neal | john.neal_gmail.com | +1 516-465-9494 | 2/14/1987 | Male | 11400 W Olympic 200 |

In the example above, you can see the following types of inconsistencies:

- Structural: The first source covers Customer Name as a single field, while the second one stores it as two fields – First and Last Name.

- Pattern: The first source has a valid email pattern enforced on the email address field, while the second one is visibly missing the @ symbol.

- Data type: The first source only allows digits in the Phone Number field, while the second one has a string type field that contains symbols and spaces as well.

- Format: The first source has date of birth in the format DD/MM/YYYY, while the second one has it in the format MM/DD/YYYY.

- Domain value: The first source allows Gender value to be stored as M or F, while the second source stores the complete form – Male or Female.

Such data inconsistencies can lead to serious mistakes that cost your business time, money, and effort. For this reason, implementing an end-to-end mechanism for standardizing data is crucial to maintaining your data hygiene.

Techniques for Standardizing Data

Data standardization is a simple four-step process. But depending on the nature of the inconsistencies present in your data and what you are trying to achieve, the methods and techniques used for standardization can vary. Here, we present a generic rule of thumb that any organization can use to overcome its standardization errors.

Define what the standard is

To attain any state, you must first define what that state actually is. The first step of any data standardization process is to identify what needs to be achieved. The best way to know what you need is to understand your business requirements. Scan your business processes to see what data is required and in which format. This will help you set a baseline for your data requirements.

A data standard definition helps identify:

- The data assets crucial to your business process,

- The necessary data fields of those assets,

- The data type, format, and pattern their values must conform to,

- The range of acceptable values for these fields, and so on.
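A standard definition like this can also be written down in machine-readable form. The sketch below is one hypothetical way to do so in Python. The `FieldStandard` class, the `CUSTOMER_STANDARD` mapping, and the specific rules (10-digit phone, DD/MM/YYYY date, M or F gender) are assumptions chosen for illustration, not a prescribed schema.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldStandard:
    """Hypothetical description of the standard one data field must meet."""
    name: str
    pattern: Optional[str] = None               # regex the whole value must match
    allowed_values: Optional[frozenset] = None  # closed set of domain values

    def conforms(self, value: str) -> bool:
        """Return True if the value satisfies every rule that is defined."""
        if self.pattern is not None and re.fullmatch(self.pattern, value) is None:
            return False
        if self.allowed_values is not None and value not in self.allowed_values:
            return False
        return True


# Assumed standard for the customer record used in the example above.
CUSTOMER_STANDARD = {
    "phone": FieldStandard("phone", pattern=r"\d{10}"),
    "dob": FieldStandard("dob", pattern=r"\d{2}/\d{2}/\d{4}"),
    "gender": FieldStandard("gender", allowed_values=frozenset({"M", "F"})),
}

print(CUSTOMER_STANDARD["phone"].conforms("+1 516-465-9494"))  # does not conform
print(CUSTOMER_STANDARD["gender"].conforms("M"))               # conforms
```

Keeping the rules in one place means the same definition can drive both the testing step and the retesting step later on.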

Test datasets against the defined standard

Once you have a standard definition, the next step is to test how well your datasets perform against it. One way to assess this is to use data profiling tools that generate comprehensive reports, including the percentage of values that conform to each data field’s requirements. These reports help answer questions such as:

- Do values follow the required data type and format?

- Do values lie outside of the acceptable range?

- Do values use shortened forms, such as abbreviations and nicknames?

- Are addresses standardized as needed – such as USPS standardization for US addresses?

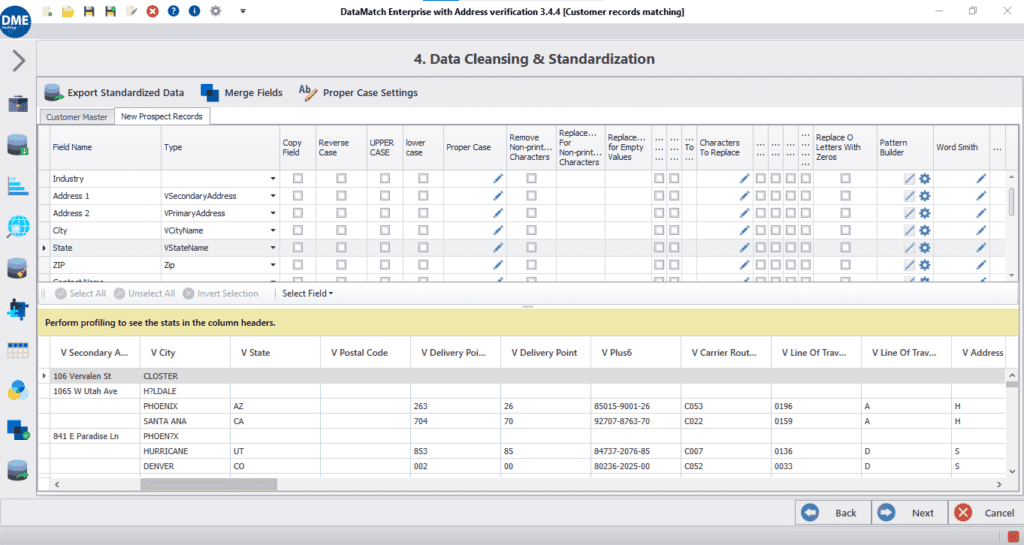
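The core measurement a profiling report provides, the percentage of conforming values, can be approximated in a few lines. This is a minimal sketch rather than a replacement for a profiling tool; the `conformance_rate` function, the 10-digit phone rule, and the sample values are assumptions made for illustration.

```python
import re


def conformance_rate(values, pattern):
    """Return the percentage of values that fully match the regex pattern."""
    if not values:
        return 0.0
    compiled = re.compile(pattern)
    matches = sum(1 for value in values if compiled.fullmatch(value))
    return 100.0 * matches / len(values)


# Made-up sample of phone values as they might arrive from different sources.
phones = ["5164659494", "+1 516-465-9494", "2125550100", "212 555 0100"]

rate = conformance_rate(phones, r"\d{10}")
print(f"{rate:.1f}% of phone values conform to the 10-digit standard")
```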

Transform non-conforming values

Now it’s finally time to transform the values that do not conform to the defined standard. Let’s take a look at some commonly used data transformation techniques.

- Data parsing

Some data fields must be first parsed to get the necessary data components. For example, parsing the name field to separate the first, middle, and last names, as well as any prefixes or suffixes present in the value.
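As a rough illustration of name parsing, the sketch below splits a full name on whitespace and peels off known prefixes and suffixes. The `parse_name` function and the small `PREFIXES` and `SUFFIXES` sets are hypothetical; real names are far more varied, so treat this as a starting point only.

```python
# Assumed, deliberately small lookup sets; a real parser would need many more.
PREFIXES = {"mr", "mrs", "ms", "dr"}
SUFFIXES = {"jr", "sr", "ii", "iii"}


def parse_name(full_name):
    """Split a full name into prefix, first, middle, last, and suffix parts."""
    tokens = full_name.replace(",", " ").split()
    parts = {"prefix": "", "first": "", "middle": "", "last": "", "suffix": ""}

    # Peel off a leading prefix and a trailing suffix if they are recognized.
    if tokens and tokens[0].rstrip(".").lower() in PREFIXES:
        parts["prefix"] = tokens.pop(0)
    if tokens and tokens[-1].rstrip(".").lower() in SUFFIXES:
        parts["suffix"] = tokens.pop()

    # The first remaining token is the first name, the last one the last name.
    if tokens:
        parts["first"] = tokens.pop(0)
    if tokens:
        parts["last"] = tokens.pop()

    # Anything left in between is treated as the middle name(s).
    parts["middle"] = " ".join(tokens)
    return parts


print(parse_name("John Oneel"))
print(parse_name("Dr. John A. Oneel Jr."))
```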

- Data type and format conversion

You may need to remove non-conforming characters during the conversion, for example, removing symbols and letters from a digit-only phone number.
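The two conversions from the earlier customer example can be sketched as follows. The function names, the assumption that a leading country code of 1 should be dropped, and the choice of ISO 8601 (YYYY-MM-DD) as the target date format are illustrative choices, not requirements of any particular standard definition.

```python
import re
from datetime import datetime


def normalize_phone(raw, country_code="1"):
    """Strip everything except digits, then drop an assumed leading country code."""
    digits = re.sub(r"\D", "", raw)
    if len(digits) == 11 and digits.startswith(country_code):
        digits = digits[len(country_code):]
    return digits


def convert_date(raw, source_format, target_format="%Y-%m-%d"):
    """Parse a date written in source_format and rewrite it in target_format."""
    return datetime.strptime(raw, source_format).strftime(target_format)


print(normalize_phone("+1 516-465-9494"))
print(convert_date("14/2/1987", "%d/%m/%Y"))  # Source 1 stores day first
print(convert_date("2/14/1987", "%m/%d/%Y"))  # Source 2 stores month first
```

Note that the source format has to be known per source: a value such as 2/3/1987 is ambiguous on its own, which is why the format belongs in the standard definition.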

- Pattern matching and validation

Pattern validation is done by configuring a regular expression for the pattern. For example, the regex ^[a-zA-Z0-9+_.-]+@[a-zA-Z0-9.-]+$ can be used to validate an email address. Values that don’t conform to the regex must be parsed and transformed into the defined pattern.
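A minimal sketch of this check, using the email regex quoted above, might look like the following. The `split_by_pattern` helper and the sample addresses are hypothetical; the regex is a loose structural check and does not confirm that an address actually exists.

```python
import re

# The email pattern quoted in the text above.
EMAIL_PATTERN = re.compile(r"^[a-zA-Z0-9+_.-]+@[a-zA-Z0-9.-]+$")


def split_by_pattern(values, pattern=EMAIL_PATTERN):
    """Separate values into those that match the pattern and those that do not."""
    valid, invalid = [], []
    for value in values:
        if pattern.fullmatch(value):
            valid.append(value)
        else:
            invalid.append(value)
    return valid, invalid


# Made-up sample; the second value is missing the @ symbol, as in Source 2.
emails = ["jane.doe@example.com", "john.neal_gmail.com"]
valid, invalid = split_by_pattern(emails)
print("valid:", valid)
print("needs transformation:", invalid)
```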

- Abbreviation expansion

Company names, addresses, and person names often contain abbreviated forms that can lead your dataset to contain varying representations of the same information. For example, you may have to expand US state abbreviations, such as converting NY to New York.
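Abbreviation expansion is usually a dictionary lookup. The sketch below assumes a tiny, hand-written `STATE_NAMES` mapping and a hypothetical `expand_abbreviations` function; it only replaces whole, upper-case tokens so that longer words containing the same letters are left alone.

```python
import re

# Assumed lookup table; a real one would cover every abbreviation in scope.
STATE_NAMES = {"NY": "New York", "CA": "California", "TX": "Texas"}


def expand_abbreviations(text, mapping=STATE_NAMES):
    """Replace each whole-word abbreviation in text with its expanded form."""
    alternatives = "|".join(re.escape(key) for key in mapping)
    pattern = re.compile(r"\b(" + alternatives + r")\b")
    return pattern.sub(lambda match: mapping[match.group(1)], text)


print(expand_abbreviations("Albany, NY"))
print(expand_abbreviations("NYC Marathon"))  # unchanged: NY is not a whole word here
```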

- Noise removal and spelling correction

Certain words do not really add any meaning to a value, and instead introduce a lot of noise in a dataset. Such words can be identified by running the dataset against a dictionary that contains them, flagging the matches, and deciding which ones to permanently remove. The same process can be used to find misspellings and typing errors.
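The dictionary-based approach described above can be sketched with the Python standard library. The `NOISE_WORDS` and `KNOWN_WORDS` lists, the function names, and the 0.8 similarity cutoff are all assumptions for illustration; `difflib.get_close_matches` is used here as a simple stand-in for a proper spell checker.

```python
import difflib

# Assumed dictionaries; in practice these would be curated for your domain.
NOISE_WORDS = {"inc", "llc", "ltd", "corp"}
KNOWN_WORDS = ["Olympic", "Boulevard", "Street"]


def flag_noise(value, noise_words=NOISE_WORDS):
    """Return the tokens in value that appear in the noise dictionary."""
    return [t for t in value.split() if t.strip(".,").lower() in noise_words]


def remove_noise(value, noise_words=NOISE_WORDS):
    """Return value with noise tokens dropped and trailing commas trimmed."""
    kept = [t for t in value.split() if t.strip(".,").lower() not in noise_words]
    return " ".join(kept).rstrip(",")


def suggest_spelling(word, vocabulary=KNOWN_WORDS, cutoff=0.8):
    """Return the closest known word, or the word itself if nothing is close."""
    matches = difflib.get_close_matches(word, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else word


print(flag_noise("Acme Widgets, Inc."))
print(remove_noise("Acme Widgets, Inc."))
print(suggest_spelling("Olimpic"))
```

Flagging and removing are kept as separate functions so that a person can review the flagged words before anything is permanently deleted.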

Retest dataset against the defined standard

In the final step, the transformed dataset is retested against the defined standard to find out the percentage of data standardization errors that were fixed. For the errors that still remain in your dataset, you can tune or reconfigure your methods and run the data through the process again.
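To make the retest concrete, the sketch below measures conformance before and after a transformation and lists the values that still fail. The 10-digit phone rule, the simple "keep the last ten digits" transformation, and the sample values are assumptions for illustration only.

```python
import re

PHONE_STANDARD = re.compile(r"\d{10}")  # assumed standard: exactly ten digits


def conformance_rate(values):
    """Return the percentage of values that meet the assumed phone standard."""
    if not values:
        return 0.0
    matches = sum(1 for value in values if PHONE_STANDARD.fullmatch(value))
    return 100.0 * matches / len(values)


def standardize_phone(raw):
    """Keep digits only, then keep at most the last ten of them."""
    return re.sub(r"\D", "", raw)[-10:]


# Made-up sample; the last value has no area code and cannot be fixed by rules.
before = ["5164659494", "+1 516-465-9494", "(212) 555-0100", "555-0100"]
after = [standardize_phone(value) for value in before]

before_rate = conformance_rate(before)
after_rate = conformance_rate(after)
remaining = [value for value in after if not PHONE_STANDARD.fullmatch(value)]

print(f"conforming before: {before_rate:.0f}%, after: {after_rate:.0f}%")
print("still non-conforming:", remaining)
```

Values that remain in the non-conforming list are the ones to target when tuning the transformation or routing records for manual review.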

Wrap up

The amount of data being generated today, and the variety of tools and technologies used to capture it, is leaving companies with a dreadful data mess. They have everything they need, but are not quite sure why the data is not present in an acceptable and usable shape and form. Adopting data standardization tools can help rectify such inconsistencies and enable a much-needed data culture across your organization.

Originally published at Martech.zone