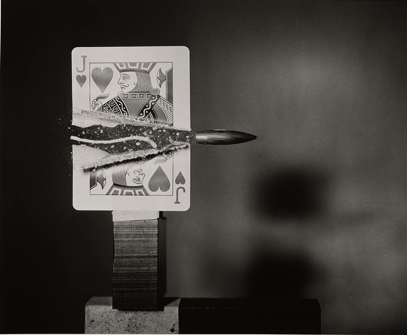

Bullet ripping through Jack of Hearts. High speed photo by MIT’s Harold (Doc) Edgerton.

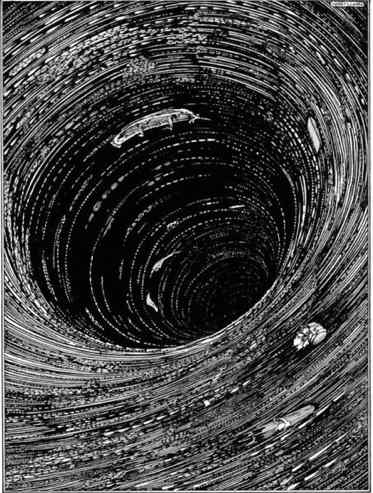

Artist Harry Clarke’s 1919 illustration for “A Descent into the Maelström”

Introduction

We are all familiar with the dictum that “correlation does not imply causation”. Furthermore, given a data file with samples of two variables x and z, we all know how to calculate the correlation between x and z. But it’s only an elite minority, the few, the proud, the Bayesian Network aficionados, who know how to calculate the causal connection between x and z. Neural Net aficionados are incapable of doing this. Their Neural Nets are just too wimpy to cut it.

The field of study connecting Causality (the big C) and Bayesian Networks (bnets) is large and difficult. I cannot cover it all in this brief blog post. Instead, I’ll try to give a smattering of the field—enough, I hope, to pique your interest, especially if you are new to this discipline.

For those readers who are already experts, I will deliver my best punch line right now. Feel free to wander off from this page, right now, never to return again (you ungrateful bastard), in order to investigate the following exciting link. The link leads to the github repo of a new Python software library, first released at the beginning of 2020, called CausalNex. CausalNex is free and open source. It is produced by the company QuantumBlack (no connection to me).

Judea Pearl, Father of this field

The undisputed father of this field, which combines bnets and the big C, and its main contributor, is UCLA professor Judea Pearl. Pearl won the prestigious Turing Award for his work in this field. Yes, Daniel Pearl, the journalist who was executed by Islamic extremists, was his son. Pearl is quite active on social media (on his own blog and on Twitter). You can find numerous interviews with him on YouTube. I just listened to a recent one done just 5 months ago. At 83, he is still as sharp as a tack. A fascinating man.

Why we study Causality

Suppose you suffer from a disease like, for example, migraine headaches, and you think there are 10 variables that are relevant. Some of those variables might be causing the headaches while others might not. Also, some of those variables might be hidden from you, meaning that you know they are present, but you have no data on them. How do you go about deciding which variables are more likely to be causing the headaches?

We see from this example that causal studies are of much interest to the healthcare industry. They are also of interest to the fintech industry. What are the main factors that cause a particular stock to go up or down? Causality is also very important in Physics. For instance, in Newton’s Law F=ma, the force is a cause and the acceleration is its effect.

By the way, Judea Pearl has written a book called The Book of Why. Pearl believes that current AI is mostly just glorified curve fitting, and that adding causal intelligence to current AI will be necessary in order to endow machines with conscience, empathy, free will, etc. Pearl defines a conscious, self-aware machine as one that has access to a rough blueprint of its personal bnet structures.

Judea Pearl’s Do-Calculus In a Nutshell

Henceforth, we will denote the nodes of a bnet by underlined letters and the values the nodes can assume by non-underlined letters. For example, $\underline{x} = x$ means node $\underline{x}$ is in state $x$.

One can often reverse some of the arrows of a bnet without changing the full probability distribution that the bnet represents. For example, a bnet with just two nodes x, z represents the same probability distribution whether the arrow points from x to z or vice versa, because P(x, z) = P(z|x)P(x) = P(x|z)P(z). This leads some people to say that bnets are not causal. I like to say instead that the basic theory of bnets, all by itself, is causally incomplete. Judea Pearl has taught us how to complete that theory. Given a bnet G, one can generate from G a family of bnets which allows us to address causality issues for G more fully. The members of that family are obtained by erasing some of the arrows from G.
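The arrow-reversal claim can be checked numerically. The following is a minimal sketch, assuming a made-up joint distribution over two binary nodes; the numbers and variable names are illustrative only and do not come from any dataset.

```python
# Hypothetical joint distribution P(x, z) over two binary nodes, keyed by (x, z).
joint = {(0, 0): 0.30, (0, 1): 0.10, (1, 0): 0.20, (1, 1): 0.40}
states = (0, 1)

# Marginals P(x) and P(z).
p_x = {x: sum(joint[(x, z)] for z in states) for x in states}
p_z = {z: sum(joint[(x, z)] for x in states) for z in states}

# Conditional tables: P(z|x) is keyed by (z, x), P(x|z) is keyed by (x, z).
p_z_given_x = {(z, x): joint[(x, z)] / p_x[x] for x in states for z in states}
p_x_given_z = {(x, z): joint[(x, z)] / p_z[z] for x in states for z in states}

# Bnet with arrow x -> z: P(x, z) = P(z|x) P(x).
joint_x_to_z = {(x, z): p_z_given_x[(z, x)] * p_x[x] for x in states for z in states}
# Bnet with arrow z -> x: P(x, z) = P(x|z) P(z).
joint_z_to_x = {(x, z): p_x_given_z[(x, z)] * p_z[z] for x in states for z in states}

# Both arrow directions reproduce the same full distribution.
same_joint = all(abs(joint_x_to_z[k] - joint_z_to_x[k]) < 1e-12 for k in joint)
print(same_joint)
```

Either factorization fits the data equally well, which is why the data alone cannot tell us which way the arrow should point.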

For any bnet, we define a root node as any node that has no arrows entering it, only arrows leaving it.

Suppose r and x are any two nodes of G, and we want to find out whether x is caused by r.

Let G(r) be the graph G after we delete from G all the arrows that are entering r. We say that we are performing an “intervention” or a “surgery” on G. After the surgery, r has no incoming arrows, so it is a root node of G(r). That is why I chose the letter r for this node, as a mnemonic for “root”, although r is only guaranteed to be a root node when it’s in bnet G(r).
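Here is a minimal sketch of the surgery, assuming the bnet structure is stored as a dictionary that maps each node to the set of its parents. The three-node graph and the helper names `root_nodes` and `surgery` are hypothetical, chosen only for illustration.

```python
def root_nodes(parents):
    """Return the nodes that have no arrows entering them."""
    return sorted(node for node, pa in parents.items() if not pa)


def surgery(parents, r):
    """Return G(r): a copy of the graph with every arrow entering r deleted."""
    cut = {node: set(pa) for node, pa in parents.items()}
    cut[r] = set()  # r loses all of its parents; arrows leaving r are untouched
    return cut


# Hypothetical bnet G with arrows h -> r, h -> x, r -> x.
G = {"h": set(), "r": {"h"}, "x": {"h", "r"}}
G_r = surgery(G, "r")

print(root_nodes(G))    # ['h']
print(root_nodes(G_r))  # ['h', 'r']
```

Note that `surgery` leaves the original graph G unchanged, so both G and G(r) remain available for comparison.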

Let P(x|r; H) denote the conditional probability P(x|r) evaluated in bnet H, where H is either G or G(r). Pearl defines a “do-operator” as follows:

P(x| do(r)=r) = P(x|r; G(r)).

Why P(x|r; G(r)) instead of P(x|r; G)? Well, in G(r), r has become a root node. For root nodes, it’s clear that nobody influences them, but they can influence others.

If P(x| do(r)=r) = P(x) or very close to it, it’s clear that r does not cause x. The farther P(x| do(r)=r) is from P(x), the more likely it is that r causes x.
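To make the comparison concrete, here is a small sketch on a hypothetical three-node bnet G with arrows h -> r, h -> x and r -> x, all nodes binary. The probability tables are invented, and P(x|r, h) is deliberately chosen to depend on h only, so r has no causal effect on x even though r and x are correlated.

```python
# Hypothetical tables for bnet G (arrows h -> r, h -> x, r -> x).
p_h = {0: 0.5, 1: 0.5}           # P(h)
p_r1_given_h = {0: 0.1, 1: 0.9}  # P(r=1 | h)
# P(x=1 | r, h), keyed by (r, h). It ignores r, so r does not cause x.
p_x1_given_rh = {(0, 0): 0.2, (1, 0): 0.2, (0, 1): 0.8, (1, 1): 0.8}


def p_r_given_h(r, h):
    """P(r | h) in G."""
    return p_r1_given_h[h] if r == 1 else 1.0 - p_r1_given_h[h]


def p_x1():
    """P(x=1) in G, summing over r and h."""
    return sum(p_x1_given_rh[(r, h)] * p_r_given_h(r, h) * p_h[h]
               for h in (0, 1) for r in (0, 1))


def p_x1_given_r(r):
    """P(x=1 | r; G): ordinary conditioning, where h is reweighted by P(h | r)."""
    num = sum(p_x1_given_rh[(r, h)] * p_r_given_h(r, h) * p_h[h] for h in (0, 1))
    den = sum(p_r_given_h(r, h) * p_h[h] for h in (0, 1))
    return num / den


def p_x1_do_r(r):
    """P(x=1 | do(r)=r) = P(x=1 | r; G(r)).

    In G(r) the arrow h -> r is deleted, so h keeps its prior P(h).
    """
    return sum(p_x1_given_rh[(r, h)] * p_h[h] for h in (0, 1))


print(round(p_x1(), 3))           # 0.5
print(round(p_x1_given_r(1), 3))  # 0.74
print(round(p_x1_do_r(1), 3))     # 0.5
```

In this toy example, conditioning on r=1 shifts the probability of x=1 from 0.5 to 0.74, purely because of the common parent h, while P(x=1| do(r)=1) stays at P(x=1) = 0.5, correctly signalling that r does not cause x.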

A node of a bnet is hidden if we are not given any data for it. Nodes for which there is data are called visible. For example, bnet G might have nodes r, x, y1, y2, h1, h2, where nodes h1 and h2 are hidden and the other nodes are visible. Judea Pearl’s do-calculus allows us to calculate P(x|do(r)=r) in terms of the data for the visible nodes, if this is at all possible (sometimes it isn’t).
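As an illustration of what such an expression can look like, here is one well-known special case, not the general do-calculus. Assume y is a set of visible nodes that satisfies Pearl's back-door criterion relative to (r, x): it contains no descendant of r and blocks every path between r and x that enters r through an arrow. Under that assumption, the intervention probability reduces to ordinary conditional probabilities over visible nodes:

```latex
P(x \mid do(r)=r) \;=\; \sum_{y} P(x \mid r, y)\, P(y)
```

Every term on the right-hand side can be estimated from data for r, x and y alone, without any data for the hidden nodes.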

Footnote: A while back, I wrote a pedagogical paper expounding on the underpinnings of Judea-Pearl…, and another paper defining causality for both classical and quantum bnets in terms of Shannon Information Theory and Entropy. The second paper might be of interest to those seeking to define Pearl causality for quantum mechanics.

A second equivalent approach to defining causality is to replace graph G by a new graph G’. To get G’, we replace each node x of G by a deterministic node xd such that P(xd | zd) = 1[xd = xd(zd)], where zd are the parents of xd, where xd(zd) is a function of zd, and where 1[ ] is the indicator function. Then we say that r and x are not causally connected if variables xd and rd are functionally independent of (insensitive to) each other (i.e., xd(rd) is a constant function).
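The following sketch illustrates the insensitivity test for the simplest case, where r is a direct parent of the deterministic node. The functions `x_d` and `w_d`, the helper `depends_on`, and the binary domains are all hypothetical; a full treatment would compose the node functions along every path from r to x.

```python
from itertools import product


def depends_on(func, arg_index, domains):
    """Return True if func changes value when only argument arg_index is varied."""
    others = [d for i, d in enumerate(domains) if i != arg_index]
    for rest in product(*others):
        outputs = set()
        for value in domains[arg_index]:
            args = list(rest)
            args.insert(arg_index, value)  # put the varied argument back in place
            outputs.add(func(*args))
        if len(outputs) > 1:
            return True
    return False


# Hypothetical deterministic nodes with parents (r, h), all binary.
def x_d(r, h):
    return r ^ h  # sensitive to r


def w_d(r, h):
    return h      # constant as a function of r


def cpt(func, value, r, h):
    """Indicator-function table P(value | r, h) = 1[value = func(r, h)]."""
    return 1.0 if value == func(r, h) else 0.0


domains = [(0, 1), (0, 1)]
print(depends_on(x_d, 0, domains))  # True
print(depends_on(w_d, 0, domains))  # False
```

Here `w_d` is a constant function of r, so under this definition r and w are not causally connected, whereas x is.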

By the way, this idea of replacing all nodes of a bnet by deterministic nodes gives you an idea of how knowledge experts go about designing bnet structures by hand. If x has parents z, then, in the absence of “noise”, you expect that you would be able to find a function x(z). If that turns out to be too difficult for you to do, there are software libraries like bnlearn that can learn the bnet structure from the data automatically, with minimal user input.