Summary: An interesting documentary about the earliest days of AI/ML and my alternate take on how we should really be describing the development of our profession to the newly initiated.

What could be more natural in these days of the coronavirus than binge-watching great videos? I was intrigued by a recent offer from Futurology, which has sponsored “The History of Artificial Intelligence” on YouTube for free viewing. The two-and-a-half-hour video is described as “the culmination of documentaries that cover the history and origins of computing-based artificial intelligence.”

So, hunkered down in my WFH office and armed with several Red Bulls, I dove in, eager to see how others would describe these seminal events. The ‘documentary’, as it’s pitched, consists of nearly full-length segments, several in black and white, from the 50s, 60s, and 70s, featuring interviews with some of the field’s true greats, including:

- The Thinking Machine – In Their Own Words (interview with Claude Shannon of AT&T Bell Labs, known as the Father of Information Theory) 52 min.

- The Thinking Machines (more from Bell Labs including early OCR and NLP) 15 min.

- The Machine That Changed the World 55 min.

- John McCarthy Interview 25 min.

If you don’t recognize the names, John McCarthy and Marvin Minsky are widely credited with founding the field of AI, and together they started one of the first research groups dedicated to its study at MIT.

Bell Labs was for many years the equivalent of all of Silicon Valley rolled into one research institution. Researchers there produced a steady stream of innovations, including early computer vision and NLP, though their real franchise was better, faster, cheaper telephone service.

It was fascinating to hear the thoughts of these founders in their own ‘real time’. The thrust of the video, however, is that these early days were really about what we now categorize as AGI, artificial general intelligence.

It’s easy to understand that in the early days of AI much attention was given to whether or how computers could mimic human thought. These were the days in which much seminal research was being conducted in learning theory, leading to a widespread belief that computers could not duplicate many aspects of human thought.

Today we would characterize this as the earliest phase of the discussion between strong (or broad) AI and narrow AI. In the 50s, 60s, and 70s these thoughts were just coming together. Much of what was demonstrated as AI was actually, by today’s definitions, ‘expert systems’: deterministic waterfall programs resembling decision trees that embedded a few hundred rules in a narrow domain of expertise. They could in fact rival expert decision making, but only in their exceedingly narrow domains. All of it was programmed, none of it found by unguided discovery.

While I applaud the effort to shine a light on this original discourse, I was left thinking that young people newly introduced to AI would likely be completely misled by this curated version of our history.

Proposal for an Alternate History

I came into data science full time in 2001 with my small consulting firm focusing on the relatively new field of predictive modeling. I had been a closet fan for many years before and led my first consulting engagement based on AI/ML in the 90s, predicting the tradeoff between price and market share for a newly introduced model from a leading Japanese car company. All of which is to say I’ve lived through pretty much all of this development except for the very earliest days described in the documentary.

Here’s an alternate suggestion for designing this introduction and how best to describe it to those just entering the field. While we could carve this into smaller pieces, I’m going to suggest just three major chapters:

- Early Predictive Modeling

- Big Data and Accelerating Change

- The Age of Adoption

Early Predictive Modeling

Over time our profession has been known by many names, from KDD (knowledge discovery in databases) to Predictive Modeling and finally AI/ML, Artificial Intelligence / Machine Learning. And all of what modern AI/ML has become is about discovering signals or patterns in data using algorithms that are not explicitly programmed.

This is not AGI. By these early days of modern AI/ML our mainstream movement had bypassed the question of trying to mimic learning in the human brain in favor of more practical and commercially valuable pattern recognition, answering questions about customers: why they come, why they stay, why they go, and what they will buy next.
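The difference between a programmed rule and a discovered pattern can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the customer data, the function names, and the hand-picked cutoff of 20 are all invented here, and the "learning" is the simplest possible one-split decision tree (a decision stump), not any particular vendor's algorithm.

```python
# Hypothetical toy data: (monthly_spend, churned) pairs invented for illustration.
customers = [(12.0, 1), (18.0, 1), (25.0, 1), (40.0, 0), (55.0, 0), (70.0, 0)]


def hand_coded_rule(spend):
    """Expert-system style: a human chose the cutoff of 20 in advance."""
    return 1 if spend < 20.0 else 0


def learn_stump(data):
    """Discover the cutoff from the data by minimizing misclassifications."""
    values = sorted(x for x, _ in data)
    # Candidate cutoffs are the midpoints between adjacent sorted values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(cutoff):
        return sum((1 if x < cutoff else 0) != y for x, y in data)

    return min(candidates, key=errors)


threshold = learn_stump(customers)


def learned_rule(spend):
    """Same form as the hand-coded rule, but the cutoff came from the data."""
    return 1 if spend < threshold else 0


hand_errors = sum(hand_coded_rule(x) != y for x, y in customers)
learned_errors = sum(learned_rule(x) != y for x, y in customers)
print(threshold, hand_errors, learned_errors)
```

On this toy data the hand-picked cutoff misclassifies one customer, while the cutoff found from the data misclassifies none. Nobody told the program where to split; it found the pattern itself.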

The era of early predictive modeling emerged from the adoption of relational databases and particularly from Business Intelligence. Of course today we view BI as backward-looking, describing what has already happened. But the large investments of effort and money that brought about BI databases and their early single-version-of-the-truth paved the way for forward-looking predictive modeling.

Techniques for prediction were dominated by relatively simple regression and decision tree statistical programs, largely from SAS and SPSS. There was growing interest in neural nets, which were then shallow perceptrons. Their need for much more compute was seen as a drawback, as were their black-box nature and the difficulty of setting them up and training them. There was also a nascent interest in genetic programs used for regression and classification, not just optimization.

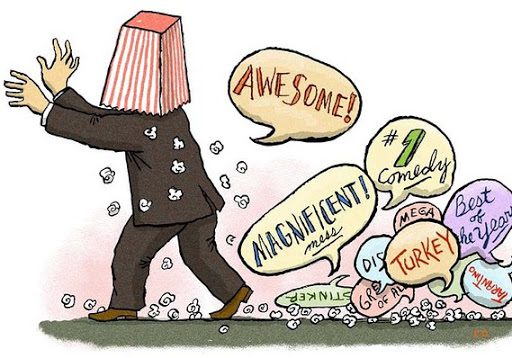

From a business perspective, these were the days in which every client meeting had to start with a lengthy ‘what is that’ introduction, unlike today’s ready and eager acceptance. Adoption was definitely an uphill battle, but folks in direct marketing were significant users.

Features were essentially limited to what could be extracted from BI and relational databases, all of which needed to be converted to numeric values or highly regularized text.

Big Data and Accelerating Change

For my money, the most exciting period in our profession was kicked off by the introduction of Big Data. The seeds for this were planted by Google’s research papers on the Google File System (2003) and MapReduce (2004) but brought to full flower by Doug Cutting and his collaborators at Yahoo, who unleashed open source Hadoop.

Beginners in our field still need to be reminded that Big Data isn’t just about large volumes of data. In the early 2000s a few terabytes of data was huge. Today I’ve got that on my laptop. The real key to the value of Big Data was opening up text and image data types, not requiring a schema in advance of storage, and opening the way for streaming data, which later fed edge computing and IoT.
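For readers who have only known relational tables, “not requiring a schema in advance of storage” can be sketched as follows. This is a toy illustration in plain Python, not Hadoop code: the records, the field names, and the helper `read_with_schema` are all hypothetical. The point is only that raw records of differing shapes are stored untouched, and a schema is applied when the data is read for a particular purpose.

```python
import json

# Hypothetical raw events, stored as-is with no schema declared up front.
# Note the records do not share the same fields or even the same types.
raw_lines = [
    '{"user": "a1", "amount": "19.99", "note": "first order"}',
    '{"user": "b2", "amount": 5}',
    '{"user": "c3", "image_bytes": 2048}',
]


def read_with_schema(lines, schema):
    """Apply a schema at read time: keep the requested fields, coerce
    their types, and fill fields missing from a record with None."""
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, cast in schema.items():
            value = record.get(field)
            row[field] = cast(value) if value is not None else None
        rows.append(row)
    return rows


# One reader's view of the data; another reader could apply a different schema
# to the very same stored lines.
purchases = read_with_schema(raw_lines, {"user": str, "amount": float})
print(purchases)
```

A relational database would have rejected the second and third records, or forced a table redesign, before anything could be stored. Here the decision about structure is deferred to whoever reads the data.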

None of this was immediate. The first Hadoop developers conference wasn’t until 2008, and momentum didn’t really get underway until the early 2010s. Hadoop was eventually supplanted by Spark, which offered wider capability and was much friendlier for developers.

Big Data is a case study in how one thing leads to another. Big Data required a lot more compute. That drove and accelerated both cloud and MPP (massively parallel processing). Those in turn, along with steady improvements in chip architecture and the discovery that gaming GPUs worked better in this environment than CPUs, enabled DNNs (deep neural nets) to come to the forefront. DNNs gave us modern speech and text NLP, along with image recognition and computer vision, built on the fundamental capabilities of Hadoop and Spark.

Cloud and ever less expensive compute also gave a kick start to still-developing Reinforcement Learning, the other leg of the stool behind game play and autonomous vehicles. These same capabilities also led to a rapid evolution of regression and tree algorithms, culminating in modern ensemble techniques like XGBoost and the use of DNNs for regression and classification problems.

The Age of Adoption

The days of Big Data and Accelerating Change were heady times with new and exciting advances in technique and capabilities introduced every year. That came to a halt at the end of 2017.

Everyone who attended the major conferences starting in 2018, myself included, noted that the gains in technique and performance were suddenly much more incremental. DNNs and Reinforcement Learning had made their big scores in matching human-level performance in game play, speech recognition and translation, image classification, and computer vision.

Initially this was a letdown. The fact is, however, that it marked the turning point at which AI/ML was considered safe and desirable for business adoption. This Age of Adoption will continue for some time. The new language of AI/ML is becoming commonplace in business conversations. As with the early introduction of computers, we’re on the cusp of dramatic increases in human and business productivity thanks to AI/ML.

In my opinion this is the span and scope of AI/ML history that new entrants should absorb. Meanwhile, if you’d like to watch that original documentary, I recommend watching it at 2X speed, as I did, with your Red Bull handy.

About the author: Bill is Contributing Editor for Data Science Central. Bill is also President & Chief Data Scientist at Data-Magnum and has practiced as a data scientist since 2001. His articles have been read more than 2.1 million times.