Summary: Will Automated Predictive Analytics be a boon to professional data scientists, or a dangerous diversion that lets well-meaning, motivated but amateur users try to implement predictive analytics? More on the conversation started last week about new One-Click Data-In Model-Out platforms.

I have always been very much of the view that data science is best left to data scientists. But in my article last week, “Data Scientists Automated and Unemployed by 2025!”, I detailed what my research had turned up: a new trend and a group of analytic platform developers who are driven to deliver One-Click Data-In Model-Out functionality. In other words, fully Automated Predictive Analytics.

In that article we used the popular definition: Automated Predictive Analytics are services that allow a data owner to upload data and rapidly build predictive or descriptive models with a minimum of data science knowledge.

There is an element here of trying to make the data scientist more efficient by automating the tasks that are least creative. Much of that is in data cleansing, normalizing, removing skewness, transforming data for specific algorithm requirements, and even running multiple algorithms in parallel to determine champion models.

But the reality is that these companies are pitching directly to non-data scientists with tag lines like “Data Science Isn’t Rocket Science”. And the reason they are headed in this direction is that Gartner predicts that this ‘Citizen Data Scientist’ market will grow 5X more quickly than the true data scientist market.

There is another compelling motive: while most data scientists have settled on the one or two advanced analytic platforms or tools they prefer to use, this new and expanding market does not come with that baggage. Selling here will not require displacing SAS or SPSS or any of the other heavy hitters, since those tools don’t meet the needs of these new users.

What Could Possibly Go Wrong?

With these concerns in mind I set out to catalogue all the things that can go wrong when excited amateurs use sophisticated tools to produce predictive analytics. I also went back and talked in greater depth to a few of the five One-Click companies I listed in the earlier article to see if they acknowledged or were addressing these concerns. The list of the five companies is not intended to be exhaustive and neither were my repeat interviews.

Homogeneity and Clustering

A fast way to sub-optimize a model is to start out assuming the data is homogeneous. Data Science 101 says explore the data visually (to avoid the rookie mistakes illustrated by Anscombe’s Quartet) and lead with clustering and segmentation so you don’t mistake whole new markets for simple outliers.

Some of the One-Clicks have recognized this. DataRPM leads with segmentation and clustering. PurePredictive, which just launched, says this is on their development roadmap.
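To make the Anscombe point concrete, here is a minimal sketch using the first two of the quartet's four data sets. The helper `pearson` and the variable names are my own, chosen for illustration. The two sets produce nearly identical summary statistics even though one is roughly linear and the other is a clear curve, which is exactly what a plot would reveal and a summary table would hide.

```python
from statistics import mean

def pearson(xs, ys):
    """Pearson correlation, written out by hand to avoid extra dependencies."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

# Sets I and II of Anscombe's Quartet share the same x values.
x = [10, 8, 13, 9, 11, 14, 6, 4, 12, 7, 5]
y_linear = [8.04, 6.95, 7.58, 8.81, 8.33, 9.96, 7.24, 4.26, 10.84, 4.82, 5.68]
y_curved = [9.14, 8.14, 8.74, 8.77, 9.26, 8.10, 6.13, 3.10, 9.13, 7.26, 4.74]

# Print the mean of y and the x-y correlation for each set.
for name, y in (("set I", y_linear), ("set II", y_curved)):
    print(name, "mean(y) =", round(mean(y), 2), "r =", round(pearson(x, y), 3))
```

The numbers alone give no hint that a straight-line model is wrong for the second set, which is why visual exploration comes first.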

Data Cleaning

If we limit this to ensuring that all the data in a column is consistently numeric (no extraneous symbols such as commas) or categorical (a limited and consistent use of alphas), we may actually be better off with an automated system that is less likely to make human errors. Like spell check on your phone, however, you’ll want to inspect the assumptions, especially when automatically trying to standardize alphas.
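As a rough sketch of what this kind of automated cleaning might look like (not how any particular One-Click platform does it), the hypothetical helpers below strip extraneous symbols from numeric strings and collapse case and whitespace variants of category labels. The second helper also returns its mapping so a human can inspect the assumptions.

```python
import re
from collections import Counter

def clean_numeric(raw):
    """Strip currency symbols, commas and spaces, then parse as a float.
    Returns None when nothing parseable is left, so the value can be reviewed."""
    stripped = re.sub(r"[^0-9.\-]", "", raw)
    try:
        return float(stripped)
    except ValueError:
        return None

def standardize_labels(values):
    """Collapse case and whitespace variants of a categorical label.
    Returns the cleaned values plus the raw -> cleaned mapping for inspection."""
    mapping = {v: " ".join(v.split()).lower() for v in set(values)}
    return [mapping[v] for v in values], mapping

prices = ["1,200", "$950.50", " 75 ", "n/a"]
print([clean_numeric(p) for p in prices])

states = ["CA", "ca ", "Calif.", "NY"]
cleaned, mapping = standardize_labels(states)
print(Counter(cleaned))  # "Calif." is NOT merged with "ca": a case for human review
```

The unmerged "Calif." is the spell-check problem in miniature: simple rules catch the easy variants, and someone still has to look at what was left behind.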

Data Transforms

So long as we are talking about things like removing skewness or normalizing data as required by specific algorithms (e.g. normalizing for neural nets), once again I think I would be likely to accept an automated system.
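For readers newer to these transforms, here is a small sketch of the two mentioned above: a log transform to reduce right skew, followed by z-score normalization. The `spend` values are made up for illustration, and the choice of `log1p` is just one common option, not a claim about what any platform applies.

```python
import math
from statistics import mean, pstdev

def skewness(values):
    """Population skewness: the mean of the cubed standardized values."""
    m, s = mean(values), pstdev(values)
    return sum(((v - m) / s) ** 3 for v in values) / len(values)

def zscore(values):
    """Rescale to mean 0 and standard deviation 1."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]

# Hypothetical right-skewed feature: most values are small, one is very large.
spend = [1, 2, 2, 3, 3, 3, 4, 5, 8, 40]

logged = [math.log1p(v) for v in spend]  # log(1 + v) pulls in the long tail
scaled = zscore(logged)                  # ready for a scale-sensitive algorithm

print("skew before:", round(skewness(spend), 2))
print("skew after: ", round(skewness(logged), 2))
```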

Imputing Missing Data

The One-Click folks are smart enough to impute missing data only where it’s needed by the algorithm (e.g. not for trees). Beyond that there are a variety of techniques at work that vary from platform to platform that a reviewer would want to pay attention to.

Techniques range from simple median value replacement to AI-based logic. You would also want to examine carefully how much missing data triggers a rule to simply ignore the feature. Is 50% missing data the right cutoff? That’s something a data scientist would want to evaluate by running different experiments.

In fairness, some of the One-Clicks have a kind of expert override mode that would allow a data scientist to see what assumptions had been made and to modify them. The Citizen Data Scientist is likely to be blind to this. Also there was inconsistent treatment regarding coding the imputed data with a separate binary column so it could be recognized by the algorithm as imputed. That may not always be necessary but it’s something I look for.
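Here is a minimal sketch of the two checks I look for, assuming the simplest technique (median replacement): a cutoff on the share of missing values, and a separate binary column that flags which values were imputed. The function name and the 0.5 default are illustrative assumptions, not any platform's actual rule.

```python
from statistics import median

def impute_with_flag(column, max_missing=0.5):
    """Median-impute a numeric column in which None marks a missing value.

    Returns (filled_values, was_imputed_flags), or None when the share of
    missing values exceeds max_missing and the feature should be ignored.
    """
    observed = [v for v in column if v is not None]
    missing_share = 1 - len(observed) / len(column)
    if not observed or missing_share > max_missing:
        return None
    fill = median(observed)
    filled = [fill if v is None else v for v in column]
    flags = [1 if v is None else 0 for v in column]  # the separate binary column
    return filled, flags

income = [52, None, 61, 48, None, 75]
print(impute_with_flag(income))
# -> ([52, 56.5, 61, 48, 56.5, 75], [0, 1, 0, 0, 1, 0])

print(impute_with_flag([None, None, None, 3]))
# -> None (75% missing exceeds the 50% cutoff)
```

Exposing `max_missing` as a parameter is the point: it is the kind of assumption an expert override mode should let you see and change.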

More about accuracy of the model in a minute, but you’re probably aware that the handling of missing data is one of the key drivers of model accuracy. In a recent DataScienceCentral blog, Jacob Joseph compared the three main treatments of missing data: Deletion (don’t use an observation with missing data), Average (replacement with mean, median or mode), and Predictive (using a separate predictive model to estimate the missing value from the other independent data). For the data set he used in his testing the results were:

| Method | Deletion | Average | Predictive |
|---|---|---|---|
| R^2 | 0.719 | 0.763 | 0.776 |
| % Improvement over the Baseline Deletion Method | (baseline) | 6.1% | 7.9% |

This makes it pretty obvious that selecting the method of imputation is important to accuracy.
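The improvement percentages in the table are relative gains in R^2 over the Deletion baseline. A quick check of that arithmetic, using only the R^2 values reported above:

```python
# R^2 values as reported in the table above.
r_squared = {"Deletion": 0.719, "Average": 0.763, "Predictive": 0.776}
baseline = r_squared["Deletion"]

# Relative improvement over the baseline, in percent, rounded to one decimal.
improvement = {
    method: round((value - baseline) / baseline * 100, 1)
    for method, value in r_squared.items()
    if method != "Deletion"
}
print(improvement)  # {'Average': 6.1, 'Predictive': 7.9}
```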

Feature Engineering

Some of the One Clicks actually take a good stab at feature engineering. For some of these platforms, if there are time stamp features they are transformed into time intervals. If there are GIS features including zip codes, they may be transformed into distances. To some extent some of the One Clicks made an attempt to identify and transform variables that would be better represented, for example, on a log scale or to create a rate or ratio from related features. There was more creativity here from some of the platforms than I expected to find. If you’re a data scientist, you’re probably thinking you could do a better job and maybe you could.
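To illustrate the kinds of derived features described here, the sketch below turns two time stamps into an interval, two coordinate pairs into a distance, and two related columns into a ratio and a log-scaled value. The field names and the sample row are hypothetical, and the distance helper assumes zip codes have already been looked up to latitude and longitude.

```python
import math
from datetime import datetime

def days_between(start_iso, end_iso):
    """Interval between two ISO-format time stamps, in days."""
    delta = datetime.fromisoformat(end_iso) - datetime.fromisoformat(start_iso)
    return delta.total_seconds() / 86400

def haversine_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Great-circle distance between two points given in decimal degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi, dlmb = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * radius_km * math.asin(min(1.0, math.sqrt(a)))

# Hypothetical customer record.
row = {"signup": "2015-01-01T00:00:00", "last_order": "2015-03-02T12:00:00",
       "total_spend": 480.0, "orders": 6}

features = {
    "tenure_days": days_between(row["signup"], row["last_order"]),  # time interval
    "spend_per_order": row["total_spend"] / row["orders"],          # ratio
    "log_spend": math.log1p(row["total_spend"]),                    # log scale
}
print(features)
```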

Feature Selection

Feature selection is fairly straightforward to automate (leaving the creative feature engineering issues aside). This is particularly true in identifying and ignoring features with zero variance or where for example, amateurs have left in first and last name fields that might be interpreted as categoricals. I think I would be satisfied to accept automated feature selection in most cases.
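A minimal sketch of the two screening rules mentioned above, under my own assumptions: drop columns with zero variance, and drop text columns that are nearly unique per row (a cheap proxy for name or identifier fields). The 0.9 threshold and the function name are illustrative only.

```python
def select_features(table, max_unique_share=0.9):
    """table maps column name -> list of values.
    Returns (kept_columns, reasons_for_dropped_columns)."""
    kept, dropped = [], {}
    for name, values in table.items():
        distinct = len(set(values))
        if distinct <= 1:
            dropped[name] = "zero variance"
        elif isinstance(values[0], str) and distinct / len(values) > max_unique_share:
            dropped[name] = "near-unique text (likely a name or identifier)"
        else:
            kept.append(name)
    return kept, dropped

table = {
    "country": ["US", "US", "US", "US", "US"],
    "last_name": ["Ng", "Ortiz", "Patel", "Quinn", "Rossi"],
    "plan": ["basic", "pro", "basic", "basic", "pro"],
    "age": [34, 51, 29, 42, 38],
}
kept, dropped = select_features(table)
print(kept)     # ['plan', 'age']
print(dropped)
```

Returning the reasons alongside the kept columns keeps the automation inspectable, which matters more for the amateur user than for the expert.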

Algorithm Selection

To be on the One-Click list, the platform must select from a wide variety of appropriate ML algorithms, work the tuning parameters, and offer some ensemble variations. Being cloud based they run these options in parallel until they can present a champion model. For the most part I’d be happy to let the application do this work.
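The run-in-parallel-and-pick-a-champion idea can be sketched in a few lines. This toy version is an assumption-laden illustration, not any vendor's pipeline: it fits two deliberately simple candidates (a mean baseline and a least-squares line) on made-up data, scores each on a holdout set, and keeps the one with the lowest error. Threads stand in for the cloud workers a real platform would use.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

def fit_mean(xs, ys):
    """Baseline candidate: always predict the training mean."""
    avg = mean(ys)
    return lambda x: avg

def fit_line(xs, ys):
    """Candidate: ordinary least-squares line through the training data."""
    mx, my = mean(xs), mean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return lambda x: my + slope * (x - mx)

def holdout_mse(fit, train, test):
    """Fit on the training data, then return mean squared error on the holdout."""
    model = fit(*train)
    xs, ys = test
    return mean([(model(x) - y) ** 2 for x, y in zip(xs, ys)])

# Made-up data that roughly follows y = 2x + 1.
train = ([1, 2, 3, 4, 5, 6], [3.1, 4.9, 7.2, 9.0, 10.8, 13.1])
test = ([7, 8], [15.0, 17.1])
candidates = {"mean baseline": fit_mean, "least squares line": fit_line}

# Score every candidate concurrently, then pick the lowest holdout error.
with ThreadPoolExecutor() as pool:
    futures = {name: pool.submit(holdout_mse, fit, train, test)
               for name, fit in candidates.items()}
    scores = {name: future.result() for name, future in futures.items()}

champion = min(scores, key=scores.get)
print(champion, scores)
```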

Selecting the Champion Model and Appropriate Fitness Function

Regarding selection of the champion model, you’ll want to understand the automated rules in use favoring fewer variables to create more robust models. Selecting the appropriate fitness measure is a different issue.

Probably 99 times out of 100 this process can be successfully automated with a little AI. If you’re selecting a system there’s one test I would look for and that’s whether the platform can correctly select instances in which false negatives should be given priority over positive hit rates. Since the vast majority of these applications are about human behavior, typically an AUC ROC curve or R^2 will be appropriate. But if you’re doing cancer research or similar high value problems, I sure hope you are taking into account the cost of false negatives.
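The false-negative test is easy to express in code. In the sketch below the confusion-matrix counts for two candidate models are hypothetical, as is the assumption that a false negative costs 20 times as much as a false positive. The model that wins on plain accuracy loses once misses are priced in.

```python
def accuracy(tp, fp, fn, tn):
    """Share of all cases classified correctly."""
    return (tp + tn) / (tp + fp + fn + tn)

def expected_cost(tp, fp, fn, tn, cost_fp=1.0, cost_fn=1.0):
    """Total misclassification cost; correct predictions cost nothing."""
    return fp * cost_fp + fn * cost_fn

# Hypothetical results for two candidate models on the same 1,000 cases.
models = {
    "model A": dict(tp=30, fp=10, fn=20, tn=940),  # accuracy 0.970, misses 20 positives
    "model B": dict(tp=45, fp=60, fn=5, tn=890),   # accuracy 0.935, misses 5 positives
}

by_accuracy = max(models, key=lambda m: accuracy(**models[m]))
# Assumed cost ratio: one false negative is as bad as 20 false positives.
by_cost = min(models, key=lambda m: expected_cost(**models[m], cost_fp=1, cost_fn=20))

print("champion by accuracy:", by_accuracy)  # model A
print("champion by cost:    ", by_cost)      # model B (cost 160 vs 410)
```

A platform that can only rank by accuracy, AUC, or R^2 would pick model A here, which is the wrong answer for a cancer screen.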

Accuracy

In the first article we described limited testing on one platform with about a dozen data sets including some prior to data prep. The results were actually quite good. Some were a few decimal points better, some a few decimal points worse, but none were unacceptable when compared to professionally prepared models.

In the past, I have always been a stickler for best possible models. Very small differences in fitness scores can leverage into much greater percentage change in overall campaign success. But in these days of production modeling I think we really need to consider the investment in time and the value of moving on to the next problem in lieu of spending several days or more trying to tune up what might otherwise be an acceptable model.

Another way to look at this is that it may be OK to move a quickly prepared model into production and if it has a fairly long life, to work later on making it better. That would achieve the great majority of the value sooner, leaving the incremental gains for later.

One reader of the first article suggested that these platform developers should publish a reference set of results against well-known Kaggle problems. I wouldn’t mind seeing that sort of comparative data published for all advanced analytic platforms, keeping in mind that two data scientists using the same platform can come up with different results.

The Kaggle competitions have become kind of the Formula One racing of data science. They’re fun to watch, even fun to participate in. And like auto racing, once in a while a development discovered in Kaggle makes its way into mainstream usage, like paddle shifters or anti-lock brakes on your car. It’s important to remember however that Kaggle competitions are almost always won at the fourth decimal point. Given the amount of effort that takes it may not be appropriate for daily business. The balance lies somewhere in between and the One Clicks are doing well and can only get better.

What Was the Worst That Could Happen?

I admit that I actually set out thinking that we would find some major deficiencies in these new One Clicks but that didn’t happen. Certainly where they’re used to make data scientists more productive while allowing for some expert overrides you have to recognize the value of the time savings.

The caveats we had, mostly around handling missing data, feature engineering, and perhaps selection of fitness functions, are not likely to derail value 95 times out of 100. If you’re thinking of selecting one of these, do your own head-to-head comparisons.

Just as AI is going to become an almost invisible presence in our much broader lives over the near future, this AI applied to our advanced analytic platforms is only going to get better and more pervasive.

Are We Giving Citizen Data Scientists the Green Flag to Use One Clicks?

Where non-data scientists are concerned I think some care is warranted. First, we assume the data-motivated amateur has at least a little education in the process of model building. Extra points to the One Clicks who package this with education, recipes, or step-by-step guidance.

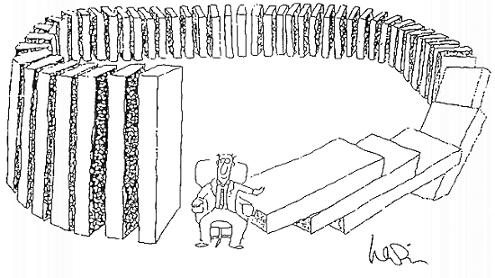

We’re not turning these folks loose to build data science production code; we’re mostly talking about environments where predictive modeling either is already being used or should be used to good business advantage on a fairly regular basis. For our citizen data scientists this is more like a two-wheeler with training wheels. Everyone needs to start somewhere.

But what happens when this adoption takes place in an SMB that hasn’t yet embraced advanced analytics, as so many have not? What happens without the ‘adult supervision’ of a data scientist to guide, educate, and even mentor the Citizen Data Scientist?

The heart of the matter is this: the personality traits these data-driven managers and analysts possess are exactly what management encourages. No executive group is going to slap back these self-starters and tell them to wait until the data scientist gets there.

To some extent this may be self-limiting. DSC member Martin Squires suggested “the practical thing that has always prevented take off in this market is that David Ricardo and the theory of comparative advantage still works in this space. It’s still better to hire a data scientist and have him do great work for 10 marketing/commercial guys and then let them do their thing than have 10 marketing guys spend 20-30% of their time [on data science].” It’s true that even with a greatly simplified tool amateur users would need to commit to the learning and practice to become both efficient and accurate.

Still, many SMBs that haven’t currently embraced advanced analytics aren’t likely to start out by hiring a hard-to-find data scientist but might be convinced if one of their managers or analysts showed them the benefit.

In the absence of a trained data scientist, who manages this risk? The answer is management, except they are very unlikely to understand the risk, much less how to mitigate it.

All the same, I give a qualified wave-through to the motivated and data-driven Citizen Data Scientist to give this a try, but to start small, not on projects with major financial impact to the company, and to continue their DS education until the training wheels can come off.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist and commercial predictive modeler since 2001. He can be reached at: