We’ve reached a point where human (cognitive) task performance is being augmented or even replaced by AI. So who or what is responsible for what this AI does?

While the question seems simple enough, the legal answers are opaque and entangled. This is because AI performs human-like tasks without the clear legal accountability of a human, and the question is whether it should have any. Fortunately, now that machine learning and artificial intelligence are spreading into an ever-increasing number of practical domains, real-world legal interpretations and guiding principles are forming around the topic.

One legal white paper rounds up the relevant arguments, and in this article I use its principles to portray algorithmic accountability. The discussion is based on Dutch law and jurisprudence, yet regulators habitually borrow ideas and principles from each other on bleeding-edge topics, so the concepts and rationale should translate reasonably well to other jurisdictions.

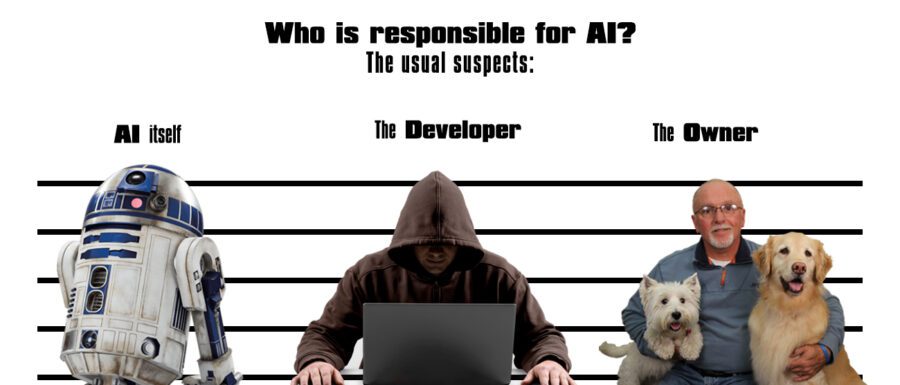

Whodunit suspects

The first suspect for AI responsibility is the AI itself! This follows from discussions in the European Parliament on civil law rules on robotics, where the idea was to ascribe rights and responsibilities to an AI depending on the extent of its capacity for learning and autonomy.

This idea seems absurd to me, as AI has no sense of morality: it cannot instinctively distinguish between right and wrong, as it has no instinct. Pushing it through court proceedings would not act as supervised learning towards morality or desired behaviour, and would therefore not deliver justice. At best, AI could be given biases and weights towards particular outcomes. Moreover, considering how opaque and unpredictable neural networks, for example, are, could we ever really predict how a moral code would “behave” over time in real-world, ever-changing circumstances? To quote an assassin droid from a computer game, when asked about his morality:

HK-47: “I am a droid, master, with programming. Even if I did not enjoy killing, I would have no choice. Thankfully, I enjoy it very much.”

AI systems can be unpredictable because of their autonomy, and they are so by design: this is how we make them cope with a changing and unpredictable world. Exactly what happens under new circumstances can be guessed, but only ever as a guesstimate. On capital markets, unknown future events that have never been experienced before and might lead to catastrophe are called “black swan” events. Some highly unlikely sequence of events may lead algorithms to behave in an undesired way. Black swan scenarios also point to another weakness of behaviour-based auditing of algorithms. These scenarios depend on an ecosystem of interacting human and AI-based algorithms, which means that to understand the behaviour of one algorithm, the behaviour of all other algorithms it can (!) interact with needs to be taken into account. And even that would only tell us something about its functionality at a single point in time. Legally cordoning off such behaviour means declaring that AI operates outside our control and that we can’t always be held responsible for it, a legal “act of god” of sorts.

Ascribing legal personality to a collection of code and data ingestors, no matter how well-designed the learning loops, seems to me like a way to pass the buck. To me this idea ranks alongside “I’m a criminal, but I’m not guilty because my bad genes made me do it”, or having to complain to the crop farmer when you don’t like the bread you bought at the supermarket – who in turn might refer you to Mother Nature and the bad weather she produced. Let’s not Docker-sandbox legal responsibility in new containers. This does not create the proper incentives for careful and responsible AI design and management, and feels like a way to blackhole the black box. AI responsibility is not turtles all the way down.

Moving on, could the AI developer or vendor be to blame (per article 6:185 of the Dutch Civil Code)? This line of argumentation centres on diligence and due diligence: knowing what the AI does and the circumstances under which it will perform according to plan. Blame and responsibility arise here when a party knows about potential damage and takes no action, even though the damage could have been prevented. A comparable situation outside AI is a car manufacturer issuing a recall on a model because a critical defect was discovered after production. Or a supermarket recalling a product because the supplier found a production defect in particular batches – better safe than sorry, right?

While this approach might be valid in some specific situations, blanket scapegoating of developers after AI incidents might not be appropriate. Legally, it is not always possible to pinpoint a specific cause after an event. This is not just because the code is complex and unpredictable, making it difficult to find a specific AI error, but also because an interplay of variables may be at hand, so that no single element is at fault. When a ferry sank off the port of Zeebrugge in 1987, the owner of the ship quickly blamed the personnel responsible for monitoring the area of the ship where the disaster seemingly started. The investigation, however, found the cause to be an unlikely series of events and interactions pointing to a more systemic failure, ultimately leading to the board of directors (who were charged with corporate manslaughter) being told by the UK government that they had no understanding of their duties. Quite the firm statement, by British standards.

Applied to AI, Knight Capital’s crash and burn comes to mind: a trading algorithm programmer inadvertently set in motion the almost instantaneous near-bankruptcy of what had, until that moment, been the market leader… by toggling one boolean variable in a decrepit piece of code. Or did he?

In the week before go-live, a Knight engineer manually deployed the new RLP code in SMARS to its eight servers. However, the engineer made a mistake and did not copy the new code to one of the servers. Knight did not have a second engineer review the deployment, and neither was there an automated system to alert anyone to the discrepancy. Knight also had no written procedures requiring a supervisory review.
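The passage above notes that no automated system existed to flag that one of the eight servers was running different code. As an illustration of how cheap such a safeguard can be, here is a minimal sketch, not Knight’s actual tooling: it assumes each server can report a SHA-256 hash of its deployed code bundle, and the server names and the `check_deployment` helper are hypothetical. Go-live is blocked if any server differs from the expected release.

```python
import hashlib


def bundle_hash(code: bytes) -> str:
    """SHA-256 fingerprint of a deployed code bundle."""
    return hashlib.sha256(code).hexdigest()


def check_deployment(expected: str, deployed: dict[str, str]) -> list[str]:
    """Return the servers whose reported hash differs from the expected release.

    `deployed` maps a (hypothetical) server name to the hash it reports.
    An empty list means every server runs the expected code.
    """
    return sorted(server for server, h in deployed.items() if h != expected)


if __name__ == "__main__":
    new_release = bundle_hash(b"new routing code")
    old_release = bundle_hash(b"legacy code")

    # Eight servers; the manual copy missed one of them.
    servers = {f"server-{i}": new_release for i in range(1, 9)}
    servers["server-8"] = old_release

    stale = check_deployment(new_release, servers)
    if stale:
        print("BLOCK GO-LIVE, stale code on:", ", ".join(stale))
    else:
        print("All servers consistent, go-live allowed")
```

The point of the design is that the check runs automatically and blocks go-live, rather than depending on a second engineer remembering to look.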

What is apparent here is that there were systemic conditions, some of them encouraged by the firm, that favoured speed to market and market activity. Pointing a finger at the developer is easy, but not just. Sure, he made a mistake (and I’d hate to be that guy), but if one mistake can cause major inadvertent damage, responsibility needs to be carried across the board. In this case, all kinds of checks and balances were even purposefully removed (FPGA) to make sure that the algorithms respond as fast as possible to external changes. Moving beyond systemic causes, any AI modeller understands the importance of having training, test, and validation datasets. Without them, an algorithm risks overfitting to a known set of circumstances, whereas it also needs to demonstrate its preparedness for unknown inputs. At the same time, this unpredictability makes it difficult to prove that anyone “knew” about avertable damage. All in all, legal causality can be hard to establish under this principle.
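For readers less familiar with the practice, the following is a minimal sketch of a three-way split using only the standard library. The 15% validation and 15% test fractions and the fixed seed are illustrative assumptions, not a recommendation. A model would be fitted on the training set, tuned on the validation set, and judged once on the held-out test set, which stands in for inputs the model has never seen.

```python
import random


def train_val_test_split(records, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle records and split them into disjoint train, validation and test sets."""
    if val_frac < 0 or test_frac < 0 or val_frac + test_frac >= 1:
        raise ValueError("fractions must be non-negative and leave room for training data")
    shuffled = list(records)                # copy, so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)   # seeded RNG makes the split reproducible
    n = len(shuffled)
    n_test = int(n * test_frac)
    n_val = int(n * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]       # everything left over is training data
    return train, val, test


if __name__ == "__main__":
    train, val, test = train_val_test_split(range(100))
    print(len(train), len(val), len(test))
```

Keeping the test set untouched until the very end is what gives it value as a stand-in for the unknown; reusing it for tuning quietly turns it into more training data.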

The third suspect is the owner of the AI (as per article 6:173 of the Dutch Civil Code): the legal or natural person that holds legal title to, exerts control over, and bears the fruits of its possessions, and is responsible for the damage they cause. This is the suspect that makes the most sense to me. Yet ownership alone would not make a case for fault: to do so, one would be legally required to demonstrate how the AI is faulty. As mentioned before, this is a difficult enterprise; it is not possible to know how adaptive code will behave in real-world, changing circumstances. Some might argue that this uncertainty is the perfect argument for straitjacketing algorithms – after all, risk is “bad”, right? One might counter this by pointing out that humans are far less predictable than algorithms.

Another avenue for making ownership the leading suspect is to treat AI as something that behaves similarly to a domestic animal. Per article 6:179 of the Dutch Civil Code, the owners of animals are responsible for their animals’ behaviour by proxy. This is why ownership-based responsibility appeals to me most: owners then both benefit from their AI and carry its risk. AI would be acting on behalf of its owner, and there would be no need to demonstrate either coding faults or causality – one need only demonstrate that damage was caused by the AI.

This means that when you walk your AI chihuahua and it injures an AI labradoodle, you, the owner, are responsible for its behaviour. You can argue to no avail that “it has never done that before”, that “it would normally never do that”, or that some unknown, poorly coded mongrel in its GitHub family tree must have caused this behavioural anomaly. None of that matters, because you, the owner, have a duty to raise your AI well so that it behaves in a civil manner. By equating animal and algorithmic ownership, responsibility is carried by owners, which is the type of incentive that would make organizations think twice about the control systems they put in place for their AI, and instruct their data scientists to train carefully.

To give an example from my own experience of what this might mean in practice: with my own teams on capital markets, we consciously decided to make less money. By tightening the reins on our AI, we deprived ourselves of large, unpredictable swings in our revenues and costs, ultimately constraining short-term gains. After Knight Capital’s collapse, we designed around predictable, controlled risk-taking and smooth revenue curves, to make sure that we would still be in business if any of our AI went wild.
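One common way to keep a trading AI on a short leash, in the spirit described above, is a hard pre-trade limit that sits outside the model and cannot be learned away. The sketch below is hypothetical and simplified: the `RiskGate` class, its limits and the order format are illustrative assumptions, not the system my teams ran. Orders that would breach a position limit are rejected, and a breach of a loss limit trips a kill switch that halts all trading until a human resets it.

```python
from dataclasses import dataclass


@dataclass
class RiskGate:
    """Hypothetical pre-trade control placed between a trading model and the market."""
    max_position: int      # absolute position limit, in shares
    max_loss: float        # realised-loss limit before trading halts
    position: int = 0
    realised_pnl: float = 0.0
    halted: bool = False   # kill switch: once set, only human_reset() clears it

    def allow(self, quantity: int) -> bool:
        """Approve an order (positive = buy, negative = sell) only if it stays within limits."""
        if self.halted:
            return False
        if abs(self.position + quantity) > self.max_position:
            return False
        self.position += quantity
        return True

    def record_pnl(self, pnl: float) -> None:
        """Book realised profit or loss and trip the kill switch on a loss-limit breach."""
        self.realised_pnl += pnl
        if self.realised_pnl <= -self.max_loss:
            self.halted = True

    def human_reset(self) -> None:
        """Clear the kill switch after human review; realised P&L is kept."""
        self.halted = False


if __name__ == "__main__":
    gate = RiskGate(max_position=1_000, max_loss=50_000.0)
    print(gate.allow(800))     # True: within the position limit
    print(gate.allow(500))     # False: would take the position to 1,300
    gate.record_pnl(-60_000.0)
    print(gate.allow(-100))    # False: kill switch tripped by the loss limit
```

The model may be free to learn whatever it likes inside these bounds; the owner decides where the bounds are, which is exactly where responsibility sits.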

AI responsibility on the front lines

So what do the AI police and politicians do in practice? In the case of capital markets, most rules now appear to have been written with ubiquitous algorithmic activity in mind, so that the few purely human operators sometimes need to explain why they did not follow rules designed for AI! This is a marked difference from the early days of algorithmic accountability on capital markets, when regulators were stumped and, as I recall, were effectively fobbed off with “not sure why it does what it does, it’s self-evolving” when querying nefarious stock market trades performed by AI.

Times have changed, of course: whereas until around 2008 regulators took a largely laissez-faire and collaborative approach to compliance, changing government attitudes and behaviours have since led to rapidly increasing, cumulative and strict regulatory demands on market activity. The path that regulators in the financial industry have chosen focuses on transparency: every action performed by a human or algorithm needs a clear reasoning behind it, and it is up to participants to demonstrate that conditions were appropriate for the activity. The regulators’ idea appears to be that forced transparency, combined with heavy-handed fines, will preclude algorithmic activities that can’t stand the light of day. In other words, this is a control mode in which algorithmic behaviours are checked and audited. I think it would be even better if the focus were not on the algorithm’s functioning, but on the firm’s.
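In practice, the transparency regime described above comes down to being able to show, for every action, who or what took it, why, and under which conditions. A minimal sketch of such an audit record follows, assuming a simple JSON-lines log; the field names, the `log_decision` helper and the sample order are hypothetical and do not follow any regulator’s reporting format.

```python
import io
import json
from datetime import datetime, timezone


def log_decision(log, actor, action, reason, inputs):
    """Append one decision record to a text stream; refuse actions without a stated reason."""
    if not reason.strip():
        raise ValueError("every action needs a documented reason")
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,      # human trader or algorithm identifier
        "action": action,
        "reason": reason,
        "inputs": inputs,    # market conditions the decision was based on
    }
    log.write(json.dumps(record) + "\n")   # one JSON object per line
    return record


if __name__ == "__main__":
    buffer = io.StringIO()   # stands in for an append-only audit file
    log_decision(buffer, "algo-01", "quote XYZ 100 @ 10.05",
                 "spread within band, inventory below limit",
                 {"bid": 10.00, "ask": 10.10})
    print(buffer.getvalue())
```

Note that a log like this records what the algorithm did and on what basis, not how its internals arrived there, which fits the argument that behaviour and control matter more than peering inside the model.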

Regardless of whether the rules make sense under all conditions, it is very important to follow them, as non-compliance is no longer a slap on the proverbial wrist but a heavy blow that can put smaller firms out of business – not to mention the reputational damage when regulators issue press releases about your firm. Who really wants to “neither admit nor deny” wrongdoing, yet agree to some heavy penalty?

Do algorithms dream of organic sheep?

In the Netherlands, a political party has suggested creating an authority that oversees algorithmic functioning. The idea is to force transparency so that there is a guise of understanding of how algorithms make decisions. In doing so, they claim, the reasoning behind decisions will be fair and clear. I am critical of this idea, for the same reason that we can’t slice off a piece of human brain and understand what it does by shining a spotlight on the neurons, nor control it any better for that matter. As I have argued above, what matters is what an algorithm does, not how it does it nor how it behaves under laboratory conditions.

In trying to prevent black-boxed decision-making by creating transparency, the real bogeyman is left unquestioned: the owner. We should be questioning the effects that algorithms exert on our world on behalf of legal or natural persons, and praise or punish those who control them – not the poor algorithms that only do our bidding. Also, instead of aiming for transparency, regulators should aim for control: the legal owners of an algorithm need to be in control of what their algorithmic animal does, so that when problems occur they can take responsibility for their agent and limit the damage.

In summary, I believe we should view AI as our agents, like animals that we train to work and act on our behalf. As such, we’re herding algorithms. We’re algorithm whisperers, riding the algorithmic horse, selectively breeding for desirable labelling accuracy. They learn from us, supervised or unsupervised, extending and replicating human behaviours so as to enact our desires. Responsibility lies with their management, and it’s time management took that responsibility.

About the author

Dr Roger van Daalen is an energetic, entrepreneurial and highly driven strategic executive with extensive international management experience. He is an expert in industry digital transformations, with over a decade of experience with data and artificial intelligence on capital markets. He is currently working on transitions to cloud data architectures and on broader applications of machine learning and artificial intelligence, pioneering innovative products and services in finance and other industries so as to aggressively capture market share and drive revenue growth. Feel free to reach out to him for executive opportunities in the Benelux.