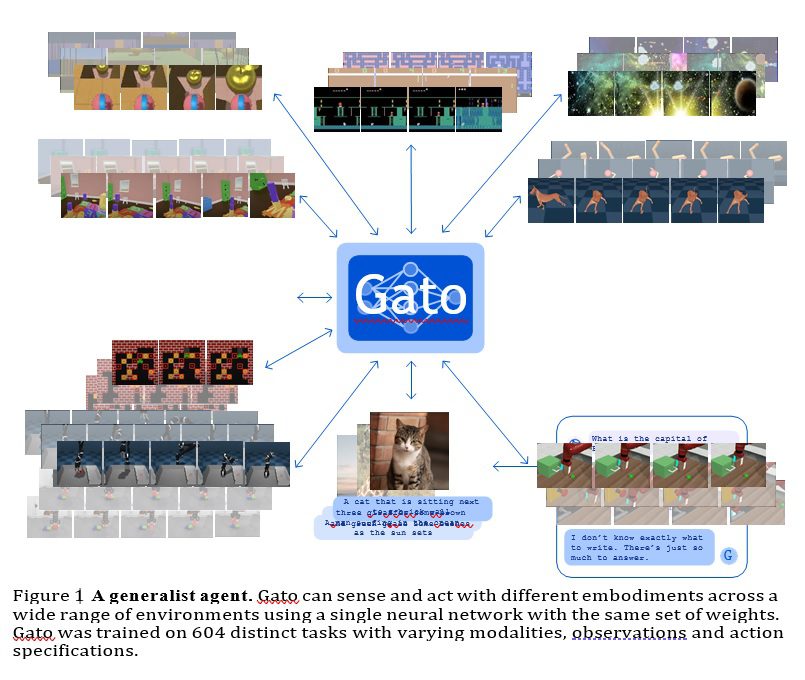

This week, there was (yet another) game-changing announcement from the folks at DeepMind: a model named Gato.

Gato is a cool cat 🙂

It brings us closer on the road to AGI because Gato is a single transformer model that can do multiple things: caption images, chat with people, play games, etc.

That means you train the model once, and it uses the same weights to perform all of those tasks.

It gets even better

Gato is not just a transformer but also an agent. You can think of it as a transformer combined with an RL agent for multi-task reinforcement learning, with the ability to perform multiple tasks, hence game-changing.

There are some similarities with GPT-3 (both are large transformer sequence models, although GPT-3 works on text only), but the difference is that here you have an agent based on the transformer model.

And it does all this with only 1.2 billion parameters (in contrast to 175 billion for GPT-3).

More about the concept, from the paper:

While no agent can be expected to excel in all imaginable control tasks, especially those far outside of its training distribution, we here test the hypothesis that training an agent which is generally capable on a large number of tasks is possible; and that this general agent can be adapted with little extra data to succeed at an even larger number of tasks. We hypothesize that such an agent can be obtained through scaling data, compute and model parameters, continually broadening the training distribution while maintaining performance, towards covering any task, behavior and embodiment of interest. In this setting, natural language can act as a common grounding across otherwise incompatible embodiments, unlocking combinatorial generalization to new behaviors.

Gato’s tokenization scheme is a game changer. From the paper:

The guiding design principle of Gato is to train on the widest variety of relevant data possible, including diverse modalities such as images, text, proprioception, joint torques, button presses, and other discrete and continuous observations and actions. To enable processing this multi-modal data, we serialize all data into a flat sequence of tokens. In this representation, Gato can be trained and sampled from akin to a standard large-scale language model. During deployment, sampled tokens are assembled into dialogue responses, captions, button presses, or other actions based on the context. In the following subsections, we describe Gato’s tokenization, network architecture, loss function, and deployment.
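To make the "flat sequence of tokens" idea concrete, here is a minimal sketch of how continuous values (say, joint torques) could share one integer vocabulary with text. The constants are assumptions based on my reading of the paper (a 32,000-entry text vocabulary, 1024 bins for continuous values, mu-law parameters of 100 and 256), and the function names are made up for illustration. It skips images and separator tokens entirely, so treat it as a toy, not as DeepMind's implementation.

```python
import math

# Assumed constants (illustrative; check the paper before relying on them).
TEXT_VOCAB = 32000      # text token ids occupy [0, 32000)
NUM_BINS = 1024         # continuous values get ids in [32000, 33024)
MU, M = 100.0, 256.0    # mu-law companding parameters

def mu_law(x: float) -> float:
    """Compress x with mu-law companding, then clip to [-1, 1]."""
    y = math.copysign(math.log(abs(x) * MU + 1.0) / math.log(M * MU + 1.0), x)
    return max(-1.0, min(1.0, y))

def tokenize_continuous(values):
    """Map floats to integer ids placed right after the text vocabulary."""
    tokens = []
    for v in values:
        y = mu_law(v)
        # Uniformly bin [-1, 1] into NUM_BINS buckets; clamp the top edge.
        bin_index = min(int((y + 1.0) / 2.0 * NUM_BINS), NUM_BINS - 1)
        tokens.append(TEXT_VOCAB + bin_index)
    return tokens

def detokenize_continuous(tokens):
    """Invert tokenize_continuous up to quantization error (uses bin centers)."""
    values = []
    for t in tokens:
        y = (t - TEXT_VOCAB + 0.5) / NUM_BINS * 2.0 - 1.0
        x = math.copysign((math.exp(abs(y) * math.log(M * MU + 1.0)) - 1.0) / MU, y)
        values.append(x)
    return values

def serialize_timestep(text_ids, observation, action):
    """Flatten one timestep into a single sequence: text, observation, action.

    Hypothetical helper: the ordering here is a simplification for illustration.
    """
    return list(text_ids) + tokenize_continuous(observation) + tokenize_continuous(action)

if __name__ == "__main__":
    seq = serialize_timestep([5, 17], [0.1, -0.3], [0.5])
    print(seq)                                  # one flat list of integer ids
    print(detokenize_continuous(seq[2:]))       # approximate original floats
```

The point of the sketch is that once everything is an integer in a shared id space, a language-model-style transformer can be trained on it with the usual next-token objective, and at deployment the sampled ids are mapped back to text, button presses, or torques depending on which range they fall in.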

I am sure that a lot more will be coming soon on this front.

One aspect I find very intriguing is this: because Gato is an agent based on a transformer, it could learn as it goes along. In this sense, it's radically better than GPT-3 (for which everyone is still waiting!), and if more people adopt the Gato agent, it learns more, much like PageRank.

The original paper from DeepMind

DeepMind blog post

DeepMind video