Finding the Elusive ‘U’ in NLU

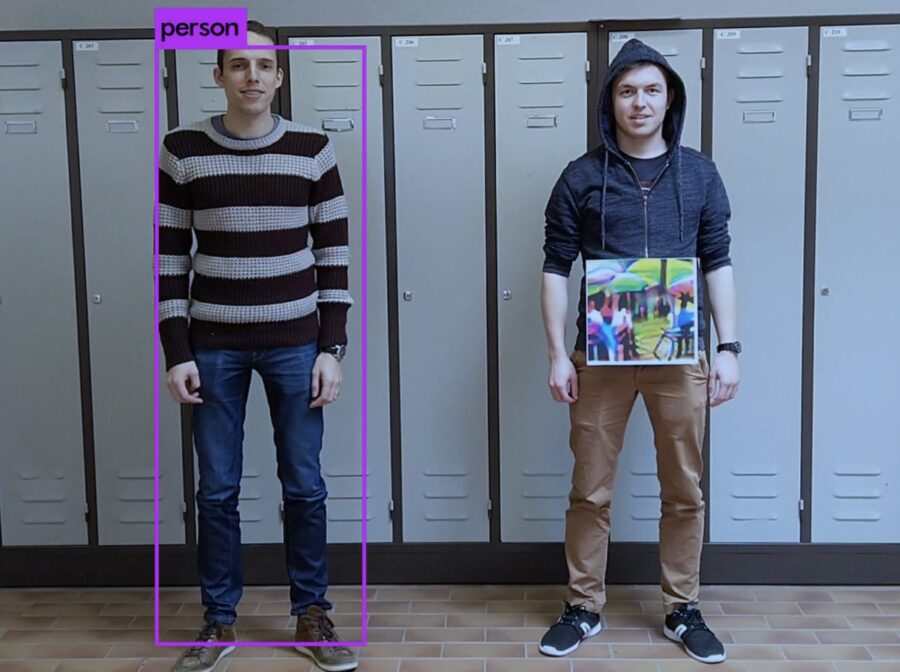

Meet Neo! Neo is a talented developer who loves building stuff. One fine morning, Neo decides to take the road less travelled: he decides to build a chatbot! After a couple of keyword searches and skimming through dozens of articles with titles like “build a chatbot in 5 mins” and “chatbot from scratch”, Neo figures out that the basic components are intent detection, Named Entity Recognition (NER) and text matching for QnA. Another 30 minutes of Google search and Neo has collected his arsenal: state-of-the-art implementations for these 3 components. His arsenal has the almighty BERT for NER, ULMFiT for text classification and RoBERTa for text matching.

Excited to have decoded the path to greatness, Neo arranges annotated data, sets up the pipeline and calls it ‘The Matrix’. Little did he know that the name would turn out to be his nemesis. Neo tests out some happy flows and deploys the system, gleefully awaiting well-behaved users.

To Neo’s horror, the AI system proved to be poles apart from the promise of natural language understanding. ‘The Matrix’ was as awkward as a cow on roller skates.

Neo’s users asked about everything ‘The Matrix’ was not trained for, fooling it more often than not. Happy flows turned out to be a myth. We humans, as always, beat the AI bot down to death, as if our intellectual supremacy were being challenged. Battered and defeated, Neo decides to fix ‘The Matrix’ by hook or by crook, without getting into the debate of enhancing NLP (Natural Language Processing) versus plugging the gaps using CI (Conversational Interfaces).

Neo decides to put together a checklist for his developer friends: the core issues in an NLU system that nobody had told him about before he walked into a mirage he had thought was remarkably easy to put together.

1. Query Absoluteness Detection/ Fragment detection

Objective : To identify whether a query is a fragment or a complete sentence. A query can be incomplete either syntactically or semantically. As long as the intent is clear and the query does not lead to multiple possible answers, the query may be considered ‘complete’.

User query : “Virat Kohli”

Query absoluteness index: Fragment

User query : “Virat Kohli test average”

Query absoluteness index: sentence

In the first case, there is no ambiguity as such, but the query is too incomplete to be answered correctly. Thus the user either has to be asked “What do you want to know about Virat Kohli?”, or a fallback has to be triggered, which can be a simple definition when the term in the query is an object or a brief intro when it is a person. Alternatively, do the latter and then ask the question.

Now consider the query : User : tell me about cricket

This query has to be disambiguated because it doesn’t clarify if the cricket is the insect or the sport.

Fragment detection can trigger either query elucidation or probing, based on the detected intent type (factoid or non-factoid), to disambiguate the query or to complete it when it is incomplete.
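To make the three outcomes above concrete, here is a toy, rule-based sketch. The lexicons `INTENT_CUES`, `ATTRIBUTE_WORDS` and `AMBIGUOUS_TERMS` and the function `absoluteness` are hypothetical stand-ins, assuming a real system would replace them with a POS tagger, an entity/attribute knowledge base and a sense inventory.

```python
# Toy query-absoluteness check. All lexicons below are hypothetical placeholders
# for what a POS tagger, an attribute KB and a sense inventory would provide.
INTENT_CUES = {"who", "what", "when", "where", "why", "how", "tell", "show", "define"}
ATTRIBUTE_WORDS = {"average", "runs", "wickets", "centuries", "age"}
AMBIGUOUS_TERMS = {"cricket": ["the sport", "the insect"]}


def absoluteness(query: str) -> str:
    """Label a query as 'ambiguous', 'sentence' or 'fragment'."""
    tokens = query.lower().split()
    # A term with several senses must be disambiguated before anything else.
    if any(t in AMBIGUOUS_TERMS for t in tokens):
        return "ambiguous"
    # An intent cue or an attribute of the entity tells us what is being asked.
    if any(t in INTENT_CUES or t in ATTRIBUTE_WORDS for t in tokens):
        return "sentence"
    # Nothing signals what the user wants to know, e.g. a bare entity name.
    return "fragment"


for q in ["Virat Kohli", "Virat Kohli test average", "tell me about cricket"]:
    print(q, "->", absoluteness(q))
```

A "fragment" would route to probing or a definition/intro fallback, and "ambiguous" to elucidation; the keyword rules are only there to show the decision flow.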

2. Adversarial Query detection

Objective : To identify whether a query is valid/appropriate within the defined premises of the domain, project, bot type and set standards.

User query : “How Many goals has Sachin Tendulkar scored in his international cricket career”

Query adversity index: adversarial

User query : “How Many runs has Sachin Tendulkar scored in his international cricket career”

Query adversity index: Valid

Answering invalid queries can be embarrassing, and as we add domains, the system becomes vulnerable to being fooled by subtle adversarial perturbations in queries that closely resemble valid queries in the domain.

Adversarial attacks are not uncommon.
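One simple way to catch the Sachin Tendulkar example is to check whether the query asks for an attribute that belongs to a different domain. This is a minimal sketch, assuming a hypothetical per-domain attribute inventory `DOMAIN_ATTRIBUTES` and a hypothetical function `adversity`; it is not a general adversarial-robustness technique.

```python
import re

# Hypothetical attribute inventory per domain; a real bot would derive this
# from its knowledge base or ontology.
DOMAIN_ATTRIBUTES = {
    "cricket": {"runs", "wickets", "centuries", "average"},
    "football": {"goals", "assists", "tackles"},
}


def adversity(query: str, active_domain: str = "cricket") -> str:
    """Flag queries that ask for an attribute belonging to another domain."""
    tokens = set(re.findall(r"[a-z]+", query.lower()))
    own = DOMAIN_ATTRIBUTES[active_domain] & tokens
    foreign = set()
    for domain, attributes in DOMAIN_ATTRIBUTES.items():
        if domain != active_domain:
            foreign |= attributes & tokens
    # "goals" in a cricket query is a near-miss of a valid query, not a valid one.
    return "adversarial" if foreign and not own else "valid"


print(adversity("How Many goals has Sachin Tendulkar scored in his international cricket career"))
print(adversity("How Many runs has Sachin Tendulkar scored in his international cricket career"))
```

Queries with no recognised attribute at all fall through to "valid" here; a fuller system would send those to the other checks in this list.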

3. Query compression (Noise cancellation)

Objective : To cancel out noise in long, multi-line queries so that the intent can be detected with higher precision. Compression also makes the query concise, which improves accuracy in open-ended question answering.

User query : “can you please help me to get some information regarding the symptoms that may surface up when a person may have been infected with flu causing virus ”

Query compression index: Very noisy

Compressed query : “symptoms , flu”

User query : “i have lost my wallet I want to block my card what do i do”

Query compression index: noisy

Compressed query: “how do i block my card”

User queries can be noisy; it is important for the conversational AI system to separate salient pieces of information from the noise. Noise can be linguistic in nature, e.g. opening phrases like “can you please”, or caused by linguistic phenomena such as pied-piping or preposition stranding.

In some cases, it can simply be an overload of information, as in the wallet example, where the actual intent may get lost in the scramble of words.
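The “symptoms, flu” style of compression can be approximated by keyword extraction against a domain lexicon. This is a minimal sketch of that swapped-in technique, assuming a hypothetical `DOMAIN_LEXICON` and function `compress`; it does not produce fluent rewrites like “how do i block my card”, which would need an abstractive model.

```python
import re

# Hypothetical domain lexicon: the only terms considered salient.
DOMAIN_LEXICON = {"symptoms", "flu", "block", "card", "balance", "recharge"}


def compress(query: str) -> list[str]:
    """Keep domain terms in their original order, dropping filler and duplicates."""
    kept = []
    for token in re.findall(r"[a-z]+", query.lower()):
        if token in DOMAIN_LEXICON and token not in kept:
            kept.append(token)
    return kept


print(compress("can you please help me to get some information regarding the symptoms "
               "that may surface up when a person may have been infected with flu causing virus"))
print(compress("i have lost my wallet I want to block my card what do i do"))
```

The compressed keywords can then be fed to intent detection or used as the retrieval query, while the original text is kept for anything that needs full context.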

4a. Explicit compound-ness in User queries

Objective : User queries can have compound statements: a single intent spread across multiple statements, or multiple intents.

This segment only caters to scenarios where the compound behaviour is explicit (for example, the presence of conjunctions).

User query : “i want to buy a pen and pencil”

Query compoundness index: explicit conjunctive compound statement

Split type : split and inherit

Split queries : I want to buy a pen, I want to buy a pencil

User query : “add a burger and remove fries”

Query compoundness index: explicit conjunctive compound statement

Split type : split at conjunction

Split queries : “add burger, remove fries”

User query : “i have an offer from Bain & Company”

Query compoundness index: explicit conjunctive compound statement

Split type : no split (the conjunction is part of the entity name)

Processed query: “i have an offer from Bain & Company”

User queries can have compound statements, which need to be resolved in order to handle them properly.
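The three split types above can be sketched with a few rules. This assumes hypothetical lexicons (`ACTION_VERBS`, `DETERMINERS`, `PROTECTED_ENTITIES`) and a hypothetical function `split_compound`; a production system would use a parser and an NER model instead of word lists.

```python
# Hypothetical lexicons standing in for a POS tagger and an NER model.
ACTION_VERBS = {"add", "remove", "buy", "book", "cancel", "block"}
DETERMINERS = {"a", "an", "the"}
PROTECTED_ENTITIES = ("bain & company", "johnson and johnson")


def split_compound(query: str) -> list[str]:
    q = query.strip().lower()
    # Mask known entity names so a conjunction inside them is not a clause boundary.
    masked = q
    for entity in PROTECTED_ENTITIES:
        masked = masked.replace(entity, "_" * len(entity))  # keeps offsets aligned
    idx = masked.find(" and ")
    if idx == -1:
        return [q]  # no split
    left, right = q[:idx], q[idx + len(" and "):]
    if right.split()[0] in ACTION_VERBS:
        return [left, right]  # split at conjunction: right half is a full clause
    # Split and inherit: the right half borrows the left half's predicate.
    words = left.split()
    det_positions = [i for i, w in enumerate(words) if w in DETERMINERS]
    cut = det_positions[-1] + 1 if det_positions else len(words) - 1
    return [left, " ".join(words[:cut] + right.split())]


print(split_compound("i want to buy a pen and pencil"))
print(split_compound("add a burger and remove fries"))
print(split_compound("i have an offer from Bain & company"))
```

Masking with underscores of the same length keeps the index found in `masked` valid for slicing the original string.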

4b. Implicit compound-ness handling in User queries : Sentence boundary segmentation, punctuation restoration

Objective : User queries can have compound statements: a single intent spread across multiple statements, or multiple intents.

This segment only caters to scenarios where the compound behaviour is implicit (for example, the query is compound even though no conjunction is present).

User query : “I want to buy a pen I want to buy a pencil ”

Query compoundness index: implicit compound statement, punctuation restored

Processed query : “I want to buy a pen. I want to buy a pencil.”

User query : “add a burger remove fries”

Query compoundness index: implicit compound statement, punctuation restored

Processed query: “add a burger. remove fries.”

User query : “hi how are you can you book a flight to delhi”

Query compoundness index: implicit compound statement, punctuation restored

Processed query: “hi. how are you? can you book a flight to delhi?”

User queries can have compound statements, which need to be resolved in order to handle them properly. Implicit compound statements can be handled by segmenting the sentences correctly through punctuation restoration.
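A minimal rule-based sketch of sentence boundary segmentation with punctuation restoration follows. It assumes a hypothetical list of clause-starting words (`CLAUSE_STARTERS`) and question openers (`QUESTION_OPENERS`) and a hypothetical function `segment`; a real system would more likely use a trained sequence-labelling model.

```python
# Hypothetical cue lists; a trained tagger would predict boundaries instead.
CLAUSE_STARTERS = {"i", "hi", "how", "can", "what", "add", "remove"}
QUESTION_OPENERS = {"how", "can", "what", "why", "when"}


def segment(query: str) -> list[str]:
    """Insert sentence boundaries before clause starters and restore end punctuation."""
    segments, current = [], []
    for token in query.lower().split():
        if token in CLAUSE_STARTERS and current:
            segments.append(current)  # close the running clause
            current = []
        current.append(token)
    if current:
        segments.append(current)
    # Questions get '?', everything else gets '.'.
    return [" ".join(s) + ("?" if s[0] in QUESTION_OPENERS else ".") for s in segments]


print(segment("I want to buy a pen I want to buy a pencil"))
print(segment("add a burger remove fries"))
print(segment("hi how are you can you book a flight to delhi"))
```

Word lists like these over-split easily (e.g. “what i want is …”), which is why the boundary decision is usually learned from data.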

5. Query form Identification

Objective : To identify whether a query is informative, contains an enquiry or has instructions, and, if it has instructions, whether they imply a read-write operation or only fetching information.

E.g.

“I like Indian cricket team” can’t trigger an FAQ/open-ended QA flow. It is informative and doesn’t contain any instruction.

“I want to know about Indian cricket team” has a declarative speech act but still requires some information retrieval. Note that the previous query was also declarative; still, the communicative action has to change.

“tell me about Indian cricket team” has a totally different speech act, i.e. imperative, but can still have the same response as the previous one.

“show me this year’s stats of Indian cricket team”

Speech act : imperative

Query form : instruction

Still, the response changes, because the response type has changed.
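The point of this section is that speech act and query form are two separate labels. This sketch computes both for the four examples, assuming hypothetical cue lists and hypothetical functions `speech_act` and `query_form`; a trained speech-act classifier would replace the prefix rules.

```python
# Hypothetical cue lists; prefix matching stands in for a trained classifier.
IMPERATIVE_VERBS = {"tell", "show", "book", "transfer", "block"}
INSTRUCTION_CUES = ("show me", "book", "transfer", "block")
ENQUIRY_CUES = ("i want to know", "tell me about", "what", "who", "how")


def speech_act(query: str) -> str:
    q = query.lower().strip()
    words = q.split()
    if words and words[0] in IMPERATIVE_VERBS:
        return "imperative"
    if q.endswith("?"):
        return "interrogative"
    return "declarative"


def query_form(query: str) -> str:
    q = query.lower().strip()
    if q.startswith(INSTRUCTION_CUES):
        return "instruction"  # needs an action or a structured lookup
    if q.startswith(ENQUIRY_CUES):
        return "enquiry"      # can go to an FAQ / open-ended QA flow
    return "informative"      # nothing to retrieve or execute


for q in ["I like Indian cricket team",
          "I want to know about Indian cricket team",
          "tell me about Indian cricket team",
          "show me this year’s stats of Indian cricket team"]:
    print(f"{q!r}: {speech_act(q)} / {query_form(q)}")
```

Routing on `query_form` rather than `speech_act` is what lets “I want to know about …” and “tell me about …” share a response while “show me … stats” goes elsewhere.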

6. Context change detection in conversations

Objective : During the conversation, users tend to digress temporarily or transition completely to a new intent or domain. It is important to detect such changes for smooth conversations.

Bot utterance : “please tell me the destination city to book your flight”

User utterance : “can you book a movie ticket instead”

Context : Intent transition

Bot utterance : “please tell me the destination city to book your flight”

User utterance : “can you first check the status of a flight”

Context : Digression within domain

Bot utterance : “please tell me the destination city to book your flight”

User utterance : “i do not want to book ticket now”

Context : cancellation

Bot utterance : “please tell me the destination city to book your flight”

User utterance : “i do not remember the city name, can I give you the code”

Context : Non-cancellation negation, digression
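The four replies above can be told apart with an ordered set of checks. This is a minimal sketch assuming hypothetical cue lists and a hypothetical function `classify_reply`; it returns only the first matching label, so the last example comes out as “non-cancellation negation” without the extra “digression” tag.

```python
import re

# Hypothetical cue lists for replies to a "book flight" slot-filling prompt.
CANCEL_CUES = ("do not want", "don't want", "cancel", "stop")
TRANSITION_CUES = ("instead",)
DIGRESSION_CUES = ("first", "check", "status")
NEGATIONS = {"not", "no", "never", "don't", "can't"}


def classify_reply(user_utterance: str) -> str:
    """Classify a user reply to the bot's 'destination city' prompt."""
    u = user_utterance.lower()
    tokens = set(re.findall(r"[a-z']+", u))
    # Order matters: cancellation is a negation too, so it is checked first.
    if any(cue in u for cue in CANCEL_CUES):
        return "cancellation"
    if any(cue in tokens for cue in TRANSITION_CUES):
        return "intent transition"
    if tokens & NEGATIONS:
        return "non-cancellation negation"
    if any(cue in tokens for cue in DIGRESSION_CUES):
        return "digression"
    return "slot answer"


for reply in ["can you book a movie ticket instead",
              "can you first check the status of a flight",
              "i do not want to book ticket now",
              "i do not remember the city name, can I give you the code",
              "delhi"]:
    print(reply, "->", classify_reply(reply))
```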

7. Context Expansion in conversations

Objective : During the conversation, an NLU system cannot always make complete sense of the user query just by processing the most recent query. Often, the most recent query is a follow-on to previous turns in the conversation. Understanding and expanding the user query based on relevant information in previous turns is an important aspect of a good conversational AI system.

User utterance 1 : “how many flights do we have before 7 pm today to delhi”

Bot utterance : ……………

User utterance 2: “can you book the cheapest one”

Context Expanded user query : “can you book the cheapest flight to delhi before 7 pm today”

User utterance 1 : “who was mahatma gandhi”

Bot utterance : ……………

User utterance 2: “when did he die”

Context Expanded user query : “ when did mahatma Gandhi die”

Bot utterance : “it will take us 5 days to process your application”

User utterance : “why 5”

Context Expanded user query : “why does it take 5 days to process the application”
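Part of context expansion is substituting anaphors with what they point to in earlier turns. This sketch assumes a hypothetical gazetteer `KNOWN_PEOPLE`, a hypothetical noun map `ITEM_NOUNS` and a hypothetical function `expand`; it resolves “he” and “one” but does not carry over constraints such as “before 7 pm today”, which needs slot carry-over on top.

```python
import re

KNOWN_PEOPLE = ["mahatma gandhi", "virat kohli"]            # hypothetical gazetteer
ITEM_NOUNS = {"flights": "flight", "flight": "flight", "cards": "card"}  # plural -> singular
PERSON_PRONOUNS = {"he", "she", "him", "her"}


def expand(history: list[str], query: str) -> str:
    """Replace person pronouns and 'one' with the latest matching entity from history."""
    last_person, last_item = None, None
    for turn in history:  # later turns overwrite earlier ones
        t = turn.lower()
        for person in KNOWN_PEOPLE:
            if person in t:
                last_person = person
        for word in re.findall(r"[a-z]+", t):
            if word in ITEM_NOUNS:
                last_item = ITEM_NOUNS[word]
    out = []
    for word in query.lower().split():
        if word in PERSON_PRONOUNS and last_person:
            out.append(last_person)
        elif word == "one" and last_item:
            out.append(last_item)
        else:
            out.append(word)
    return " ".join(out)


print(expand(["who was mahatma gandhi"], "when did he die"))
print(expand(["how many flights do we have before 7 pm today to delhi"], "can you book the cheapest one"))
```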

8. Text matching, textual entailment understanding

1. Low overlap, yet same meaning:

how do i recharge mobile

v/s

can you please tell me the process to recharge cellphone

2. High overlap, yet different meaning:

can you please tell me the process to recharge mobile

v/s

can you please tell me the process to recharge battery

Robust against :

a. Pronoun reflections:

What is my name

v/s

What is your name

b. Tense shifts:

i would like to book a flight

v/s

i booked a flight

c. Unknown word handling: Most systems fail to treat unknown words correctly and tend to score both sentences as similar, even though they ask about two different terms.

define xyzuyzyyz

v/s

define abhkjk

Handles complex semantic variations:

define xyz

v/s

can you please tell me what is the definition of xyz
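To see why these cases are hard, it helps to score them with a naive lexical baseline. This sketch uses word-level Jaccard similarity (intersection over union of word sets) as that baseline; the function name `jaccard` is ours, not part of any particular matcher.

```python
def jaccard(a: str, b: str) -> float:
    """Word-level Jaccard similarity: |A ∩ B| / |A ∪ B|."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    return len(set_a & set_b) / len(set_a | set_b)


pairs = [
    ("how do i recharge mobile",
     "can you please tell me the process to recharge cellphone"),  # same meaning
    ("can you please tell me the process to recharge mobile",
     "can you please tell me the process to recharge battery"),    # different meaning
    ("what is my name", "what is your name"),                      # pronoun reflection
    ("define xyzuyzyyz", "define abhkjk"),                         # unknown words
]
for a, b in pairs:
    print(f"{jaccard(a, b):.2f}  {a!r} vs {b!r}")
```

The paraphrase pair scores 1/14 (about 0.07) while the different-meaning pair scores 9/11 (about 0.82), exactly the inversion a robust matcher has to avoid; the pronoun-reflection pair also gets a high 0.6.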

8. Implicit presumptions:

I want an American Express Gold card

The user’s presupposition that the “American Express Gold card” is a valid card may be right or wrong. If the card is invalid, not eligible or not supported, the user has to be informed accordingly.
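Checking a presupposition amounts to validating the referenced product against what the bot actually supports before acting on it. This is a minimal sketch assuming a hypothetical catalogue (`SUPPORTED_PRODUCTS`, `KNOWN_PRODUCTS`) and a hypothetical function `check_presupposition`.

```python
# Hypothetical catalogue: products the bot can act on, plus products it
# recognises but does not support.
SUPPORTED_PRODUCTS = {"regalia card", "imperia card", "iconia card"}
KNOWN_PRODUCTS = SUPPORTED_PRODUCTS | {"american express gold card"}


def check_presupposition(query: str):
    """Return (product, status) so the bot can correct a false presupposition."""
    q = query.lower()
    for product in sorted(KNOWN_PRODUCTS):
        if product in q:
            status = "supported" if product in SUPPORTED_PRODUCTS else "not supported"
            return product, status
    return None, "unknown product"


print(check_presupposition("I want an American Express Gold card"))
print(check_presupposition("I want a Regalia card"))
```

Anything other than "supported" should produce an informative reply instead of silently starting the application flow.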

9. Identify atomic and complex sentences:

Agent: You cannot update your address online.

User: why not?

The user’s response should not be treated as atomic but as a complex sentence (“why can’t I update my address online?”), and the logical connectives have to be identified.

10. Identical entities: Anaphoric reference

How much do I have in my account? Transfer it to Amit.

“it” should be recognised as the anaphoric reference to the “account balance”

11. Entity segment:

I applied for personal loan

I want a lower rate of interest

“rate of interest” should be recognised as a property of “apply personal loan”

12. Action extension:

I applied for personal loan.

I wanted to check the status.

“check the status” should be recognised as part of the action “apply personal loan”

13. Entities involved in Action:

I contacted the bank.

They informed me it has not yet been approved.

“They” should be recognised as referring to the “customer care rep”

14. Quantify Elements:

I checked the Imperia, Iconia and Regalia cards. I will go with 2 Regalia cards.

“Regalia cards” should be understood as one of the alternatives mentioned in the previous statement, with the number quantifying it.
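Quantifying elements means linking “N &lt;item&gt;” back to the alternatives the user just listed. This is a minimal sketch assuming a hypothetical card list `CATALOGUE` and a hypothetical function `resolve_quantity`; the prefix match is a crude stand-in for proper lemmatisation.

```python
import re

CATALOGUE = ["imperia", "iconia", "regalia"]  # hypothetical card names


def resolve_quantity(context: str, utterance: str):
    """Link 'N <item>' in the utterance to one of the alternatives named in context."""
    alternatives = [c for c in CATALOGUE if c in context.lower()]
    match = re.search(r"(\d+)\s+([a-z]+)", utterance.lower())
    if not match:
        return None
    quantity, word = int(match.group(1)), match.group(2)
    for alt in alternatives:
        # Crude match: a plural such as 'regalias' still starts with 'regalia'.
        if word.startswith(alt):
            return {"item": alt, "quantity": quantity}
    return None


print(resolve_quantity("I checked the Imperia, Iconia and Regalia cards.",
                       "I will go with 2 Regalia cards."))
```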

15. Object identity elicitation:

I applied for loan

The object “loan” has multiple hyponyms in the hierarchy, so the specific hyponym (the loan type) has to be identified.
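Object identity elicitation can be driven by a product taxonomy: if the user names a hypernym but none of its hyponyms, the bot asks which one. This sketch assumes a hypothetical `TAXONOMY` and a hypothetical function `elicit`.

```python
import re

# Hypothetical product taxonomy: hypernym -> hyponyms the bot can act on.
TAXONOMY = {
    "loan": ["personal loan", "home loan", "auto loan"],
    "card": ["credit card", "debit card"],
}


def elicit(query: str):
    """Return the hyponym options to ask about, or None if the object is specific."""
    q = query.lower()
    tokens = re.findall(r"[a-z]+", q)
    for hypernym, hyponyms in TAXONOMY.items():
        if hypernym in tokens and not any(h in q for h in hyponyms):
            return hyponyms
    return None


options = elicit("I applied for loan")
if options:
    print("Which one did you apply for: " + ", ".join(options) + "?")
```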

16. Causality chaining:

There were mistakes in my application. It got rejected.

“getting rejected” and “mistakes in application” have a cause-effect relation.

17. Sequence planning:

I want a car.

I will apply for loan

“apply loan” is to be recognised as a step arising from the “need for money” to “buy a car”.
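Sequence planning can be modelled as finding a path from the stated goal to the action the user mentions in a graph of “this enables that” links. This sketch runs a breadth-first search over a hypothetical `ENABLES` graph with a hypothetical function `plan_path`; building such a graph is the hard part and is not shown.

```python
from collections import deque

# Hypothetical "goal -> enabling sub-goals/actions" graph for a banking bot.
ENABLES = {
    "buy car": ["need money"],
    "need money": ["apply loan", "withdraw savings"],
}


def plan_path(goal: str, action: str):
    """Breadth-first search for the shortest chain linking a goal to an action."""
    queue = deque([[goal]])
    seen = {goal}
    while queue:
        path = queue.popleft()
        if path[-1] == action:
            return path
        for nxt in ENABLES.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # the action does not serve the goal in this graph


print(plan_path("buy car", "apply loan"))
```

If no path exists, the two utterances are probably unrelated and should not be merged into one plan.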

18. Illocutionary act:

I want a car.

The intended effect to be recognised is “apply auto loan”.

19. Conversational postulates:

a. Feasibility conditions:

Can you please open a savings account?

b. Reasonability conditions:

User: Can you please block my credit card?

Agent: Why do you want to block your card?

Or

Agent: Can you please provide your PAN card number?

User: Why do you need my PAN card?

c. Appropriateness conditions:

Has the requested information been appropriately provided by the user, or does it have to be asked for again?

Agent: Can you please provide your PAN card number?

User: I thought I already provided you all the information

Or

User: I think it ends with ‘2E’

21. End Digression:

I think I have provided all the information you were seeking. Anyway, I do not want to apply now.

22. Start digression:

By the way, do you have any dining offers on credit cards?

23. Subtopic/ itemization :

First, I would like to check my eligibility

24. Next subtopic itemization:

Next, I would want to check the EMI calculator.

25. Dialogue closure:

Okay, ok, k

A few discourse relations:

26. Continuation discourse:

I want to know about the rate of interest for personal loan. And bike loan

27. Contrast:

Transfer 5000 to amit.

Instead, send 6000 to him.

28. Elaboration/expansion constructs

In particular, For example, In general, In addition,

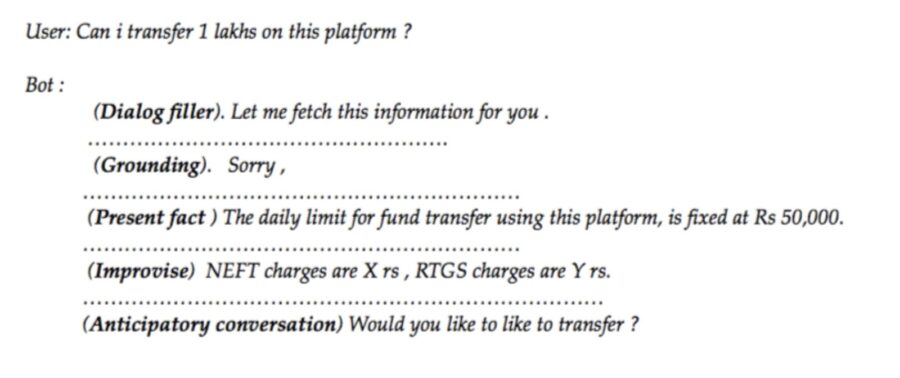

29. Multi-initiative conversations

- Ability to gain user attention

- Provide relevant facts which might help the user (it will cost you Rs X to transfer funds)

- Educate the user about bot functionalities (we can help you with your banking requirements)

- Perform error mitigation when the interaction is not going in the right direction (a user asking the same question in different ways, multiple times, means the previous bot responses might have been incorrect)

- Be aware when the conversation is not taking the natural route (try “hello” 5 times in a row on any bot: it will respond with greetings every time; try the same with a human 🙂), etc.
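The error-mitigation trigger (a user repeating or rephrasing the same thing) can be approximated with string similarity over recent turns. This sketch uses Python’s `difflib.SequenceMatcher` ratio as the similarity measure; the function `needs_repair` and its `threshold` and `max_repeats` values are illustrative assumptions, not tuned settings.

```python
from difflib import SequenceMatcher


def needs_repair(user_turns: list[str], threshold: float = 0.8, max_repeats: int = 3) -> bool:
    """True if the latest turn closely matches enough earlier turns to suggest a loop."""
    if not user_turns:
        return False
    latest = user_turns[-1].lower().strip()
    similar = sum(
        1
        for turn in user_turns[:-1]
        if SequenceMatcher(None, latest, turn.lower().strip()).ratio() >= threshold
    )
    # Count the latest turn itself as one occurrence.
    return similar + 1 >= max_repeats


print(needs_repair(["hello"] * 5))
print(needs_repair(["how do i block my card", "how can i block my card", "how do i block my card"]))
```

When this fires, the bot can switch strategy (rephrase, offer options, hand off to a human) instead of repeating the same response.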

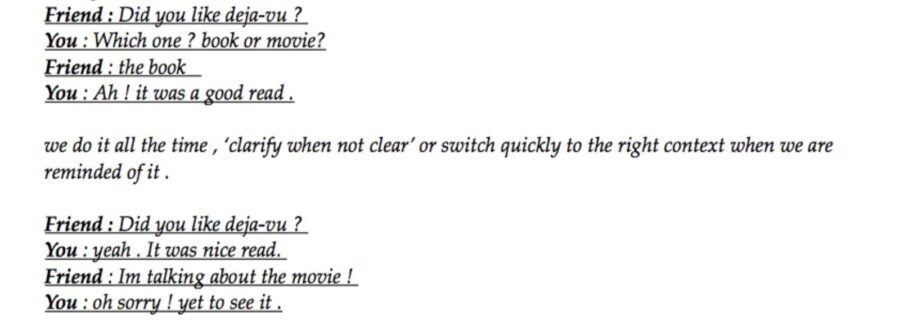

30. Conversational Grounding

31. Query Elucidation

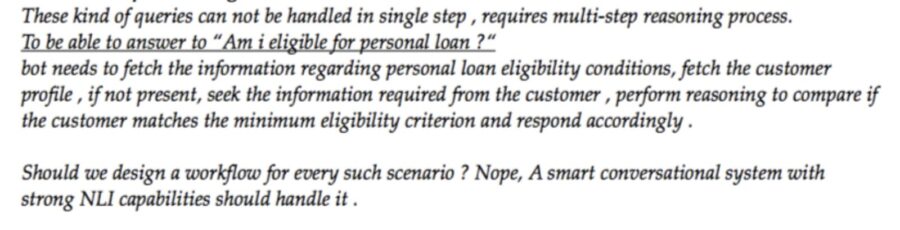

32. Handle Multi-step reasoning

33. Predictive conversations

Hopefully, Neo’s tryst with conversational AI and his findings help other developers travel the uncharted path of building the perfect NLU system, one that does not disappoint like ‘The Matrix’!
