By JIANG Buxing

In conventional reporting architecture, a reporting tool is connected directly to data sources, without a data computing layer in between. Most of the time, the middle layer isn’t needed, and the computing purpose can be realized within the data source and by the reporting tool respectively. But development experience has taught us that there are certain types of reports for which the computations are not suitable to be handled either by data source or the reporting tool. The number of such types of reports is small, but the development workload for them is huge.

The issue of procedure-mode computation

All reporting tools are capable of handling computed columns and performing grouping and sorting. Some even provide strategies for performing inter-row operations and for referencing cells and sets with one step of computation, making complex computations possible.

Reporting tools perform computations in a descriptive mode. This mode lists all expressions on the reporting interface, and executes them in an order automatically determined by their dependency relationship. This is intuitive. The computational target of each cell is clear when the relationship between expressions is simple. The descriptive mode becomes awkward when the dependency relationships are complex and the data preparation involves multiple steps. But to make a reporting tool perform the procedure-mode computation, hidden cells have to be used, which will both hurt the descriptive-mode computation’s intuitiveness and cause a lot of extra memory usage.

For example, you might want to list clients whose sales amount accounts for half of the total sales. Without the data preparation stage, you have to hide certain records through the functionality of hidden rows or columns, but can’t really filter them away. Another example is sorting a grouped report having detailed data by aggregate values. You need to first group data and then sort it, but many reporting tools can’t control the order of grouping and sorting.

Round-off error control is particularly typical. The total of rounded detailed values probably doesn’t equate to the rounded total value of the original detailed values, causing disagreement between the detailed data and the totals. In that case you need to find the appropriate round-off values for the detailed values according to the round-off value of the totals. Though the logic isn’t complicated, reporting tools are helpless even using the hidden cells.

The issue of heterogeneous data sources

Years ago, relational databases were the only report data source. Today, the report data source could also come from NoSQL databases, local files, and data downloaded through the web server, etc. These non-relational data sources lack standard interface and syntax for retrieval of data; some even don’t have the basic filtering ability. But the filtering operation and even the associative operation are necessary during report development. Reporting tools normally support the two types of in-memory operation, but they can only handle them well when data amount is relatively small. With a large amount of data, the memory will become overloaded. Also, most reporting tools are not good at processing multi-level data such as JSON and XML, and are not able to create dynamic code to access remote web server to get data.

Dynamic data sources are another common demand. Generally the data source the reporting tool uses is pre-configured, and can’t be dynamically changed according to the parameter directly within the reporting tool. For a standard query, reporting tools don’t support using parameters to control the query condition in the SQL statement for retrieving data. Instead, a sub-clause may need to be replaced. Some reporting tools support macro replacement, which makes up for the lack of support for conditional parameters. But the parameter-based calculation of macro value needs a procedure-mode programming containing a condition, which is difficult to be handled by the reporting tool alone.

The issue of performance optimization

In previous articles, we mentioned that most of the time the reporting performance issue can be addressed through the optimization of data preparation but many operations can’t be handled within the data source. For example, parallel data retrieval should be performed outside of the data source in order to increase its I/O performance; buffer information needs to be written to an external storage device to achieve the controllable buffer, which can’t be handled within a data source; and the asynchronous data buffering and loading data by random page number for building a list report can’t be handled by a data source. Even for the associated query over multiple data sets that a data source can deal with, it would be necessary to get it done outside it when multiple databases or a non-database source is involved, and when the database load needs to be reduced. Obviously, these scenarios that are not able to be handled within a data source also can’t be handled by a reporting tool.

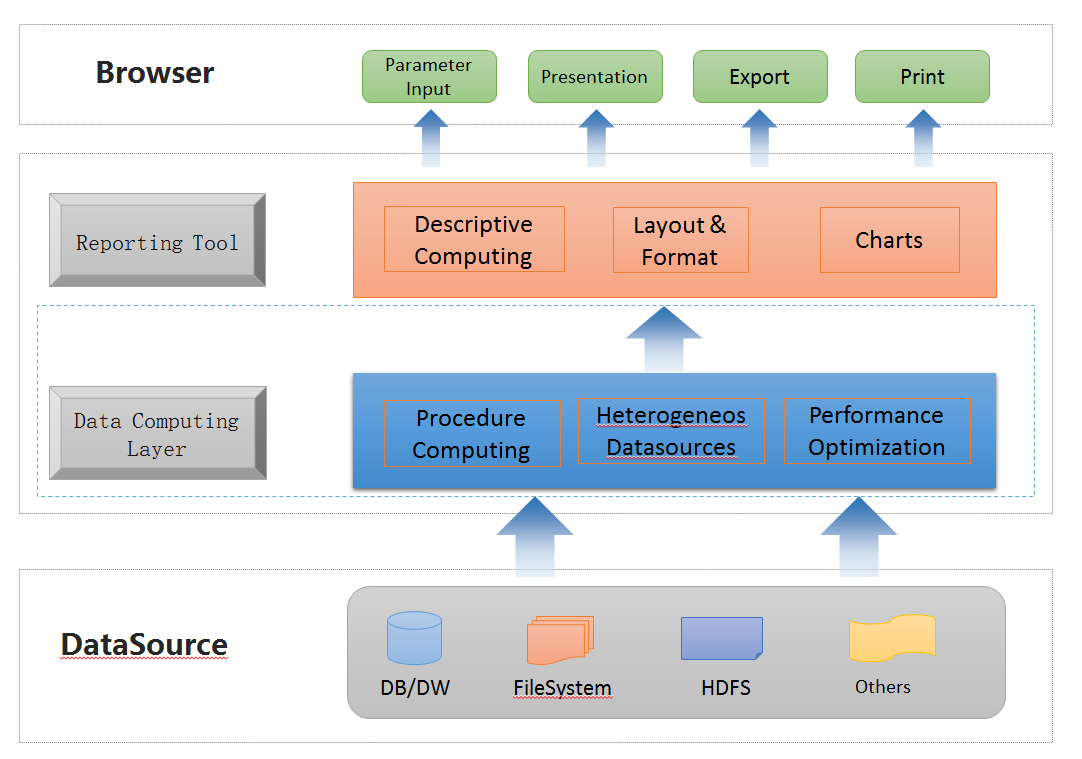

Data computing layer

All the above issues can be solved by adding a middle layer, a data computing layer, to the conventional double-layer reporting architecture.

So a data computing layer can deal with all those computations, leaving a reporting tool to handle the data presentation and a small number of intuitive computing scenarios that the process mode is good at handling.

Though invisible, the data computing layer really exists in the conventional reporting architecture. The proofs are that computations would be performed using the stored procedure of the data source and the reporting tool’s user-defined data source interface would be used. The stored procedure can perform some procedure-mode computations and performance optimizations, but its working zone is within a single database, which means computations can only be performed within the data source. Handling computing targets that need to be handled outside of the data source is beyond its ability. Theoretically, all problems can be solved by using a user-defined data source, for which almost all reporting tools provide the interface, so the method is widely used.

Well, is the reporting tool’s user-defined data source convenient enough to replace a data computing layer? That’s our next topic.