1. Introduction

Many tasks in Machine Learning can be framed as classification tasks. For example, given a medical dataset, we may want to classify who has diabetes (positive class) and who doesn’t (negative class). Given a dataset from the financial world, we may want to know which customers will default on their credit (positive class) and which will not (negative class).

To do this, we can train a Classifier on a ‘training dataset’. Once the Classifier is trained (i.e. its model parameters have been determined) and can accurately classify the training set, we can use it to classify new data (the test set). If the training is done properly, the Classifier should predict the classes of the new data with similar accuracy.
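To make the train/test idea concrete, here is a minimal sketch in plain Python. The data generator, the one-feature threshold "Classifier" and all function names are made up for illustration; the point is only that the parameter is determined on the training set and then evaluated on data it has never seen.

```python
import random

def make_data(n, seed):
    """Hypothetical toy data: one feature; class 1 is centred at +2, class 0 at -2."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        x = rng.gauss(2.0 if label else -2.0, 1.0)
        data.append((x, label))
    return data

def train(data):
    """'Training' here means fixing one parameter: the midpoint between the class means."""
    pos = [x for x, y in data if y == 1]
    neg = [x for x, y in data if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def accuracy(threshold, data):
    """Fraction of examples whose side of the threshold matches their label."""
    correct = sum((x > threshold) == bool(y) for x, y in data)
    return correct / len(data)

train_set = make_data(200, seed=0)
test_set = make_data(200, seed=1)   # new data, not used during training

threshold = train(train_set)
train_acc = accuracy(threshold, train_set)
test_acc = accuracy(threshold, test_set)
print(f"train accuracy: {train_acc:.3f}, test accuracy: {test_acc:.3f}")
```

If the two accuracies are close, the Classifier generalizes well to new data; a large gap would suggest it has merely memorized the training set.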

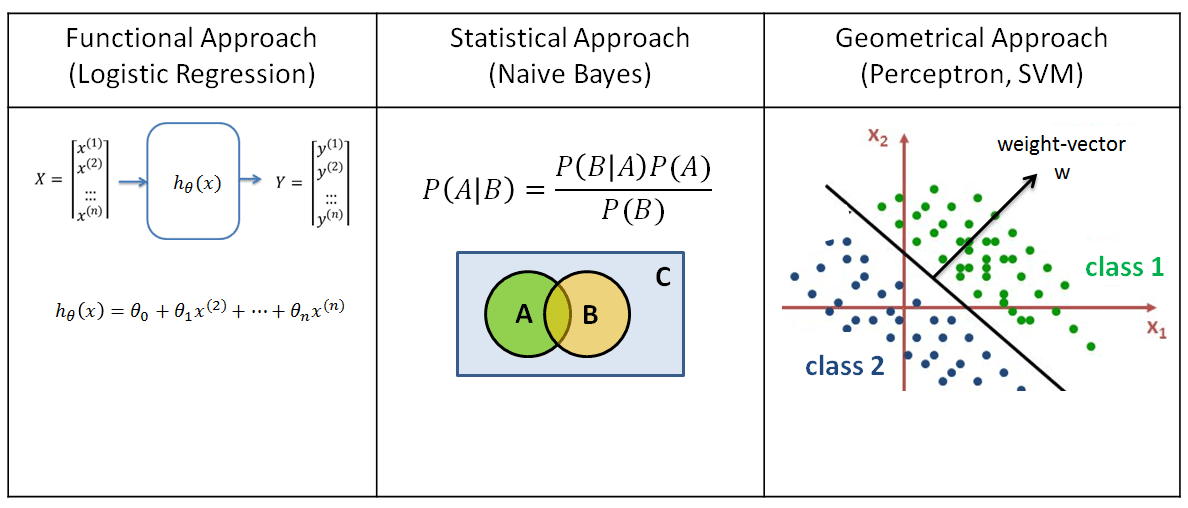

There are three popular Classifiers which use three different mathematical approaches to classify data. Previously we looked at the first two of these: Logistic Regression and the Naive Bayes classifier. Logistic Regression uses a functional approach to classify data, and the Naive Bayes classifier uses a statistical (Bayesian) approach.

Logistic Regression assumes there is some function which forms a correct model of the dataset (i.e. it maps the input values correctly to the output values). This function is defined by its parameters. We can use the gradient descent method to find the optimal values of these parameters.
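As a reminder of what this looks like in practice, here is a rough sketch of gradient descent for a one-feature Logistic Regression. The names `w` and `b`, the learning rate, the number of steps and the toy data are all assumptions chosen for illustration, not values from the earlier posts.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.1, steps=2000):
    """Gradient descent on the average log-loss for the model p(y=1|x) = sigmoid(w*x + b)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the average log-loss with respect to w and b.
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum((sigmoid(w * x + b) - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical toy data: larger x tends to mean class 1, with some overlap.
xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = fit_logistic(xs, ys)
boundary = -b / w   # the x value at which the predicted probability is 0.5
print(f"w = {w:.3f}, b = {b:.3f}, decision boundary at x = {boundary:.3f}")
```

The two classes overlap on purpose: on perfectly separable data the parameters would keep growing instead of settling on finite values.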

The Naive Bayes method is much simpler than that; we do not have to optimize a function, but can calculate the Bayesian (conditional) probabilities directly from the training dataset. This can be done quite fast (by creating a hash table containing the probability distributions of the features), but it is generally less accurate.
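The hash-table idea can be sketched with Python dictionaries. The tiny credit dataset, the feature values and the add-one (Laplace) smoothing below are assumptions made for this example; the smoothing in particular is a common addition that is not mentioned above.

```python
from collections import Counter, defaultdict

def train_naive_bayes(rows, labels):
    """Count how often each feature value occurs per class; no optimization needed."""
    class_counts = Counter(labels)
    # Hash table: (feature index, class) -> Counter of observed feature values.
    feature_counts = defaultdict(Counter)
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            feature_counts[(i, label)][value] += 1
    return class_counts, feature_counts

def predict(row, class_counts, feature_counts, alpha=1.0, n_values=2):
    """Return normalized class probabilities for one row.

    alpha is the smoothing constant; n_values assumes every feature
    takes one of two possible values (true for the toy data below).
    """
    total = sum(class_counts.values())
    scores = {}
    for label, count in class_counts.items():
        score = count / total  # prior P(class)
        for i, value in enumerate(row):
            seen = feature_counts[(i, label)][value]
            score *= (seen + alpha) / (count + alpha * n_values)  # P(value | class)
        scores[label] = score
    norm = sum(scores.values())
    return {label: s / norm for label, s in scores.items()}

# Hypothetical data: (income, owns_home) -> credit outcome.
rows = [("low", "no"), ("low", "no"), ("low", "yes"),
        ("high", "yes"), ("high", "yes"), ("high", "no")]
labels = ["default", "default", "repaid", "repaid", "repaid", "repaid"]

class_counts, feature_counts = train_naive_bayes(rows, labels)
probs = predict(("low", "no"), class_counts, feature_counts)
print(probs)
```

Training is a single pass over the data that only increments counters, which is why this method is fast compared to an iterative optimization.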

Classification of data can also be done in a third way, by using a geometrical approach. The main idea is to find a line, or a plane, which separates the two classes in their feature space. Classifiers that use a geometrical approach include the Perceptron and SVM (Support Vector Machine) methods.
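To illustrate the geometrical idea, the sketch below classifies points by checking on which side of a line they fall. The line (given by the hypothetical weights `w` and offset `b`) is fixed by hand here; how to find such a line from the data is exactly what the Perceptron and SVM algorithms address.

```python
def side_of_line(w, b, point):
    """Return +1 or -1 depending on which side of the line w.x + b = 0 the point lies."""
    value = sum(wi * xi for wi, xi in zip(w, point)) + b
    return 1 if value >= 0 else -1

# Hypothetical separating line x1 + x2 = 3 in a two-dimensional feature space.
w, b = (1.0, 1.0), -3.0

positives = [(3.0, 2.0), (2.5, 1.5)]   # made-up points from the positive class
negatives = [(0.5, 1.0), (1.0, 0.5)]   # made-up points from the negative class

for p in positives + negatives:
    print(p, "->", side_of_line(w, b, p))
```

In three or more dimensions the same expression describes a plane or hyperplane, so the code does not change when more features are added.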

Below we will discuss the Perceptron classification algorithm. Although Support Vector Machines are used more often, I think a good understanding of the Perceptron algorithm is essential to understanding Support Vector Machines and Neural Networks.
