In the blog “How Can Your Organization Manage AI Model Biases?”, I wrote:

“In a world more and more driven by AI models, Data Scientists cannot effectively ascertain on their own the costs associated with the unintended consequences of False Positives and False Negatives. Mitigating unintended consequences requires the collaboration across a diverse set of stakeholders in order to identify the metrics against which the AI Utility Function will seek to optimize.”

I’ve been fortunate enough to have had some interesting conversations since publishing that blog, especially with an organization that is championing data ethics and “Responsible AI” (love that term). As Cathy O’Neil covered so well in her book “Weapons of Math Destruction”, the biases built into many of the AI models that are being used to approve loans and mortgages, hire job applicants, and decide university admissions are yielding unintended consequences that severely impact both individuals and society.

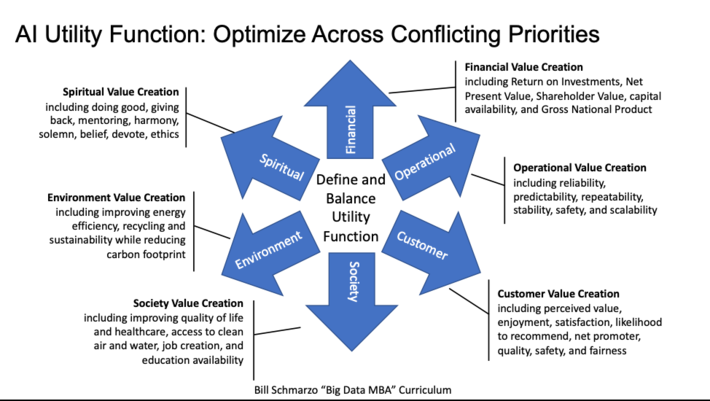

An AI model only optimizes against the metrics it has been programmed to optimize. If the AI model yields unintended consequences, that’s not the AI model’s fault. It’s the fault of the data science team and the operational stakeholders who are responsible for defining the AI Utility Function against which the AI model will judge model progress and success (see Figure 1).

Figure 1: Critical Importance of Crafting a Holistic AI Utility Function

But crafting a holistic AI Utility Function is only the first step in creating Responsible AI. Before we dive into Responsible AI, let’s do a quick review of the importance of Explainable AI (XAI).

Explainable AI (XAI)

In the blog “What is Explainable AI and Why is it Needed?”, I discussed Explainable AI (XAI). XAI refers to methods and techniques used in the development of AI models such that the impact that individual metrics have in driving the AI model results can be understood and quantified by humans. Being able to quantify the predictive contribution of a particular variable to the results of an AI model is critically important in situations such as:

- XAI is a legal mandate in regulated verticals such as banking, insurance, telecommunications and others. XAI is critical in ensuring that there are no age, gender, race, disability or sexual orientation biases in decisions governed by regulations such as the European General Data Protection Regulation (GDPR), the Fair Credit Reporting Act (FCRA) and the new California Consumer Privacy Act (CCPA).

- XAI is critical for physicians, engineers, technicians, physicists, chemists, scientists and other specialists whose work is governed by the exactness of the model’s results, and who simply must understand and trust the models and modeling results.

- For AI to take hold in healthcare, it has to be explainable. The black-box paradox is particularly problematic in healthcare, where the method used to reach a conclusion is vitally important. Responsible for making life and death decisions, physicians are unwilling to entrust those decisions to a black box.

- No less than the Defense Advanced Research Projects Agency (DARPA), through its Explainable AI project, is interested in understanding why an AI model recommended a particular action that could inadvertently lead to conflict or even war. (NOTE: watch the movie “Colossus: The Forbin Project” for a reminder of the ramifications of black box AI systems).

To address the Explainable AI (XAI) challenge, a framework for interpreting predictions, called SHAP (SHapley Additive exPlanations), assigns each feature an importance value for a particular prediction. SHAP estimates the importance of a particular metric by comparing the model’s output with and without that metric across the combinations of the other metrics. I had the fortunate opportunity to review Denis Rothman’s book titled “Hands-On Explainable AI (XAI) with Python.” It’s an invaluable source for those seeking to understand XAI and its associated challenges. XAI is going to be fundamental to any organization that is building AI models to guide its operational decisions.
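To make the “with and without that metric for every combination” idea concrete, here is a minimal sketch of an exact Shapley value calculation in plain Python. It is not the SHAP library itself, which relies on approximations because exact enumeration grows exponentially with the number of features. The `toy_score` payoff function and the feature names are hypothetical, invented purely for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, features):
    """Exact Shapley values by enumerating every coalition of the other features.

    value_fn takes a frozenset of feature names and returns the model payoff
    when only those features are available.
    """
    n = len(features)
    result = {}
    for feature in features:
        others = [f for f in features if f != feature]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                with_feature = value_fn(coalition | {feature})
                without_feature = value_fn(coalition)
                total += weight * (with_feature - without_feature)
        result[feature] = total
    return result


def toy_score(available):
    """Hypothetical payoff: a made-up applicant score, not a real model."""
    score = 0.0
    if "experience" in available:
        score += 10.0
    if "degree" in available:
        score += 4.0
    if "experience" in available and "degree" in available:
        score += 2.0  # interaction between the two features
    return score


values = shapley_values(toy_score, ["experience", "degree", "zip_code"])
for name, contribution in values.items():
    print(f"{name}: {contribution:.2f}")
```

In this toy setup the interaction bonus is split evenly between the two features that create it, and a feature the payoff never uses (`zip_code`) receives a contribution of zero. The contributions also add up to the difference between the full-feature payoff and the empty payoff.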

Unfortunately, XAI does not address the issue of confirmation bias[1] that permeates the AI monetization strategies of most social media, news and ecommerce sites. That is, the AI models that power the monetization of social media, news and ecommerce sites default to showing you more of what you have already indicated you are interested in viewing, so that these sites can maximize views, impressions and engagement time. Confirmation bias feeds upon itself through the biased data that feeds the AI models, as the AI models that drive engagement just continue to reinforce preexisting beliefs, values and biases.

Consequently, organizations must go beyond Explainable AI and take the next step to Responsible AI, which focuses on ensuring the ethical, transparent and accountable use of AI technologies in a manner consistent with user expectations, organizational values and societal laws and norms[2].

Let’s walk through a real-world example of the importance of Responsible AI, an example where AI biases are having a severe negative impact on society today: the hiring of job applicants.

AI in the World of Job Applicants

The article “Artificial Intelligence will help determine if you get your next job” highlights how prevalent AI has become in the job applicant hiring process. In 2018, about 67% of hiring managers and recruiters were using AI to pre-screen job applicants. By 2020, that percentage had increased to 88%[3].

Critics are rightfully concerned that AI models introduce bias, lack accountability and transparency, and aren’t gu… into the hiring process. For example, these AI hiring models may be rejecting highly-qualified candidates whose resume and job experience don’t closely match (think k-nearest neighbors algorithm) the metrics, behavioral characteristics and operational assumptions that underpin the AI models.

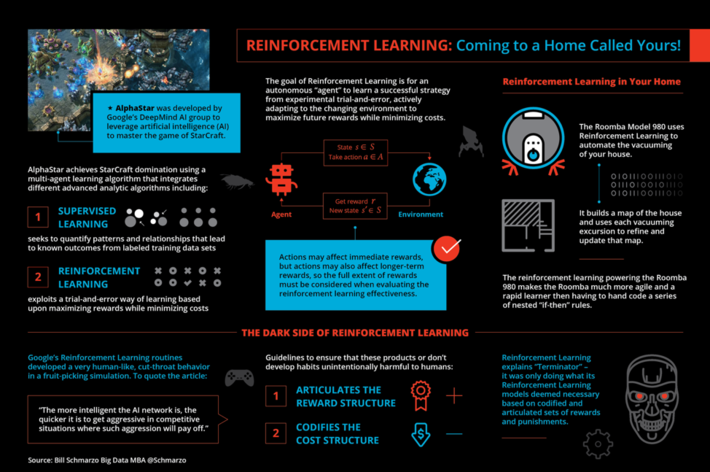

The good news is that this problem is solvable. We can construct AI models that continuously learn and adapt based upon the effectiveness of the model’s predictions. If the data science team can properly construct and instrument a feedback loop to measure the effectiveness of the model’s predictions, then the AI model can get smarter with each prediction it makes (see Figure 2).

Figure 2: “Reinforcement Learning: Coming to a Home Called Yours!”
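As a rough illustration of such a feedback loop, the sketch below uses a tiny online logistic regression that nudges its weights every time an observed outcome is fed back. The class name `OnlineScreeningModel`, the two-feature applicant encoding and the learning rate are all assumptions made for this example; a production system would need far more care around data quality and fairness checks.

```python
import math


class OnlineScreeningModel:
    """Hypothetical applicant-scoring model that learns from one outcome at a time."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        """Estimated probability that the applicant turns out to be successful."""
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def learn(self, x, outcome):
        """Feedback step: move the weights toward the observed outcome (1 or 0)."""
        error = outcome - self.predict_proba(x)
        self.weights = [
            w + self.learning_rate * error * xi
            for w, xi in zip(self.weights, x)
        ]
        self.bias += self.learning_rate * error


model = OnlineScreeningModel(n_features=2)
before = model.predict_proba([1.0, 0.0])

# Made-up feedback: applicants with the first trait succeeded,
# applicants with the second trait did not.
feedback = [([1.0, 0.0], 1), ([0.0, 1.0], 0)]
for _ in range(200):
    for features, outcome in feedback:
        model.learn(features, outcome)

after_good = model.predict_proba([1.0, 0.0])
after_bad = model.predict_proba([0.0, 1.0])
print(before, after_good, after_bad)
```

The point of the sketch is the `learn` call: the model only improves if someone instruments the process so that real outcomes actually flow back into it.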

Yes! We can build feedback loops that help mitigate the AI model biases, but it takes a lot of work. Here’s how we start.

Solving the AI Biases Problem

Creating AI models that overcome biases takes a lot of upfront work, and some creativity. That work starts by 1) understanding the costs associated with AI model False Positives and False Negatives and 2) building a feedback loop where the AI model is continuously learning and adapting from the False Positives and False Negatives. For example, in our job applicant example:

- A Type I Error, or False Positive, is hiring someone who the AI model believes will be successful, but who ultimately fails. The False Positive situations are easier to track because most HR systems track the performance of every employee and one can identify (tag) when a hire is not meeting performance expectations. The data about the “bad hire” can be fed back into the AI training process so that the AI model can learn how to avoid future “bad hires”.

- A Type II Error, or False Negative, is not hiring someone who the model believes will not be successful at the company, but who ultimately goes on to be successful elsewhere. We’ll call this a “missed opportunity”. These False Negatives are very hard to track, but tracking these False Negatives / “missed opportunities” is absolutely necessary if we are going to leverage the False Negatives to improve the AI model’s effectiveness. And this is where data gathering, instrumentation and creativity come to bear!
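To show why the cost of each error type matters, here is a small sketch that counts False Positives and False Negatives at a given hiring threshold and then picks the threshold with the lowest total cost. The scores, outcomes and cost figures are invented for illustration, and the sketch assumes the outcomes of rejected applicants have somehow been tracked, which is exactly the hard part described above.

```python
def confusion_counts(scores, outcomes, threshold):
    """Count TP, FP, TN, FN when applicants scoring >= threshold are hired."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for score, succeeded in zip(scores, outcomes):
        hired = score >= threshold
        if hired and succeeded:
            counts["tp"] += 1
        elif hired and not succeeded:
            counts["fp"] += 1  # "bad hire"
        elif not hired and succeeded:
            counts["fn"] += 1  # "missed opportunity"
        else:
            counts["tn"] += 1
    return counts


def total_cost(counts, cost_fp, cost_fn):
    """Total cost of the errors, given a cost per bad hire and per missed opportunity."""
    return counts["fp"] * cost_fp + counts["fn"] * cost_fn


def best_threshold(scores, outcomes, thresholds, cost_fp, cost_fn):
    """Return the candidate threshold with the lowest total error cost."""
    return min(
        thresholds,
        key=lambda t: total_cost(
            confusion_counts(scores, outcomes, t), cost_fp, cost_fn
        ),
    )


# Hypothetical model scores and eventual outcomes (True = successful).
scores = [0.9, 0.8, 0.6, 0.4, 0.3, 0.2]
outcomes = [True, True, False, True, False, False]
thresholds = [0.25, 0.5, 0.75]

equal_costs = best_threshold(scores, outcomes, thresholds, cost_fp=1, cost_fn=1)
costly_misses = best_threshold(scores, outcomes, thresholds, cost_fp=1, cost_fn=5)
print(equal_costs, costly_misses)
```

With equal costs this toy data favors the strictest threshold, but once a missed opportunity is treated as five times as costly as a bad hire, the most lenient threshold wins. Which costs to use is a stakeholder decision, not something the data science team can settle alone.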

I am currently working with the DataEthics4All organization to address the AI bias / False Negatives challenge. The mission of DataEthics4All is to break down barriers of entry and build cognitive diversity across the technology industry. We are currently in the process of building the “Ethics4NextGen Quotient”, a benchmark that will measure an organization’s Diversity and Inclusion practices and provide insights into an organization’s end-to-end Data Governance Practices while building Responsible AI practices.

While we have only started to develop the Ethics4NextGen Quotient, it is clear that both manual (follow-up phone calls, surveys, emails) as well as automated (scanning professional applicant sites like LinkedIn and Indeed) processes will be necessary to track the ultimate success of the job applicants that companies did not hire. Again, lots of work to be done here, but solving the AI bias / False Negatives challenge is critical to ensuring AI model fairness in areas such as job applications, loans, mortgages, and college admissions.

Summary: Achieving Responsible AI

In order to create AI models that can overcome model bias, organizations must address the False Negatives measurement challenge. Organizations must be able to 1) track and measure False Negatives in order to 2) facilitate the continuously-learning and adapting AI models that mitigate AI model biases.

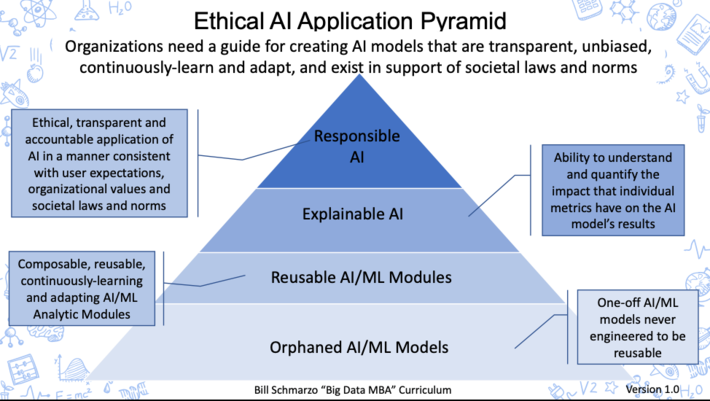

Organizations need a guide for creating AI models that are transparent and unbiased, that continuously learn and adapt, and that exist in support of societal laws and norms. That’s the role of the Ethical AI Application Pyramid (see Figure 3).

Figure 3: Ethical AI Application Pyramid

The Ethical AI Application Pyramid helps guide an organization’s AI strategy from Orphaned AI/ML models to reusable, continuously-learning and adapting Analytic Modules to Explainable AI (which most organizations do poorly today) to the ultimate goal of AI model maturity with Responsible AI.

Note: I reserve the right to continue to morph the Ethical AI Application Pyramid based upon customer engagements, as well as feedback from the outstanding field of AI practitioners already wrestling with these problems. Feel free to use and test it with your customers. Just do me the favor of sharing whatever you learn about this journey with all of us!

The Ethical AI Application Pyramid embraces the aspirations of Responsible AI by ensuring the ethical, transparent and accountable application of AI models in a manner consistent with user expectations, organizational values and societal laws and norms. And to ensure the goals of Ethical AI, organizations must address the False Negative challenge. Organizations must invest the effort and the brain power to not only track, measure and learn from the False Positives or “bad hires” (or bad loans or bad admissions or bad treatments), but even more importantly, organizations must figure out how to track, measure and learn from the False Negatives or the “missed opportunities.”

Yes, this will be hard, but then again, if we want AI to do our bidding in the most responsible, accountable and ethical manner, it is an investment that we MUST make.

[1] Confirmation bias is the tendency to search for, interpret, favor, and recall information in a way that confirms or supports one’s preexisting beliefs or values.

[2] Responsible AI: A Framework for Building Trust in Your AI Solutions https://www.accenture.com/_acnmedia/pdf-92/accenture-afs-responsibl…

[3] Employers Embrace Artificial Intelligence for HR https://www.shrm.org/ResourcesAndTools/hr-topics/global-hr/Pages/Em…