Why is change so hard, especially from a business perspective? Why is it so hard to understand that new technology capabilities can enable new technology approaches? Nowhere is that more evident than in how companies are trying (and failing) to apply the old-school “Big Bang” approach to Big Data Analytics projects.

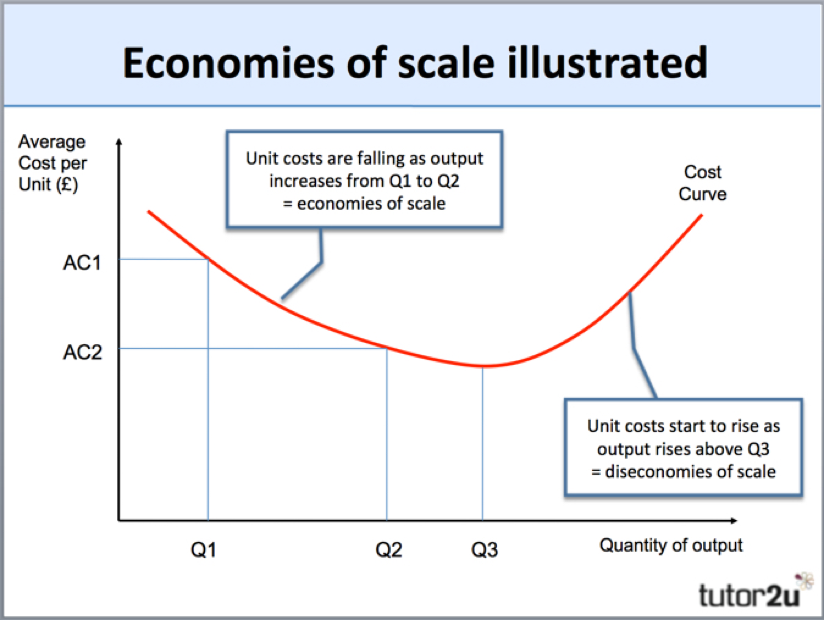

The “Big Bang” approach likely comes from how businesses have historically gained competitive advantage – through “economies of scale.” Economies of scale refers to the concept that costs per unit of a product decline as there is an increase in total output of said product (see Figure 1).
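To make the cost relationship concrete, here is a minimal sketch. It assumes a deliberately simple cost model (one fixed cost spread across all units, plus a constant variable cost per unit); the function name and the dollar figures are illustrative assumptions, not data from any real company.

```python
def average_unit_cost(fixed_cost: float, variable_cost_per_unit: float, units: int) -> float:
    """Average cost per unit under an assumed fixed-plus-variable cost model."""
    if units <= 0:
        raise ValueError("units must be positive")
    # The fixed cost is shared by every unit, so its per-unit share shrinks as output grows.
    return fixed_cost / units + variable_cost_per_unit


if __name__ == "__main__":
    # Illustrative numbers only: $1,000,000 fixed cost, $5 variable cost per unit.
    for units in (1_000, 10_000, 100_000, 1_000_000):
        cost = average_unit_cost(1_000_000, 5.0, units)
        print(f"{units:>9,} units -> ${cost:,.2f} per unit")
```

In this toy model the per-unit cost keeps falling toward the variable cost as output grows, which is why the player with the largest output has historically enjoyed the lowest unit cost.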

Historically, large organizations have leveraged economies of scale to lock smaller players and new competitors out of markets (yes, we’re talking economics again…).

The economies of scale concept morphed into an economic concept called the Learning Curve, which was employed by companies such as Texas Instruments and DuPont in the 1970s. Like economies of scale, a learning curve exploits the relationship between cost and output over a defined period of time; that is, the more often a person or an organization repeats a task, the more they learn and the more effective they become in executing that task.
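One common way to formalize a learning curve is Wright's law, in which unit cost falls by a constant percentage every time cumulative output doubles. The sketch below assumes that model and an 80% learning rate purely for illustration; nothing here says which model or rate Texas Instruments or DuPont actually used.

```python
import math


def unit_cost(first_unit_cost: float, cumulative_units: int, learning_rate: float = 0.8) -> float:
    """Cost of the n-th unit under Wright's law (an assumed model).

    learning_rate=0.8 means each doubling of cumulative output
    brings the unit cost down to 80% of its previous level.
    """
    if cumulative_units < 1:
        raise ValueError("cumulative_units must be at least 1")
    if not 0 < learning_rate <= 1:
        raise ValueError("learning_rate must be in (0, 1]")
    exponent = math.log2(learning_rate)  # negative when learning_rate < 1
    return first_unit_cost * cumulative_units ** exponent


if __name__ == "__main__":
    # Illustrative numbers only: the first unit costs $100.
    for n in (1, 2, 4, 8, 16):
        print(f"unit {n:>2}: ${unit_cost(100.0, n):.2f}")
```

Note that the savings in this model come from repetition, not from size: every completed unit is a chance to learn something that makes the next unit cheaper.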

The popular book “The Lean Startup” by Eric Ries expands upon the Learning Curve concept by introducing incremental learning as a way to become more efficient more quickly. “The Lean Startup” shares a story about stuffing 100 envelopes. From the story, we learn that the optimal way to stuff newsletters into envelopes turns out to be one at a time, versus folding all the newsletters first, then stuffing all the newsletters into the envelopes, then sealing all the envelopes, and finally stamping all of them. The “one at a time” or incremental learning approach is more effective because:

- From a process perspective, you learn what’s more efficient by stuffing one envelope at a time and can immediately reapply any learnings to the next envelope.

- You don’t have to wait until the end to realize that you have made a costly or fatal error (like folding all of the newsletters before you realize that they won’t fit into the envelopes because of the way that they are folded).
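The two points above can be sketched with a toy model. It assumes four steps per envelope (fold, stuff, seal, stamp), that each step costs one unit of work, and that a bad fold is only noticed the first time someone tries to stuff a folded newsletter. The function names and the model itself are assumptions made for illustration; they are not taken from the book.

```python
def wasted_folds(total_envelopes: int, batch_size: int) -> int:
    """Folds that must be redone when a bad fold is first noticed at stuffing time.

    Assumed model: newsletters are folded in batches of `batch_size`, and
    everything folded before the first stuffing attempt has to be refolded.
    """
    if total_envelopes < 1 or batch_size < 1:
        raise ValueError("total_envelopes and batch_size must be at least 1")
    return min(batch_size, total_envelopes)


def steps_until_first_finished(batch_size: int, steps_per_envelope: int = 4) -> int:
    """Units of work done before the first envelope is completely finished.

    Each step is applied to the whole batch before the next step starts,
    so feedback on the full process arrives later as the batch grows.
    """
    if batch_size < 1 or steps_per_envelope < 1:
        raise ValueError("batch_size and steps_per_envelope must be at least 1")
    return (steps_per_envelope - 1) * batch_size + 1


if __name__ == "__main__":
    for batch in (100, 10, 1):
        print(
            f"batch of {batch:>3}: "
            f"{wasted_folds(100, batch):>3} folds to redo, "
            f"{steps_until_first_finished(batch):>3} steps until the first finished envelope"
        )
```

Under these assumptions, the all-at-once approach does 301 units of work before a single envelope is finished and risks redoing all 100 folds, while the one-at-a-time approach gets complete feedback after 4 units of work and risks a single fold.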

From an economics perspective, we have to ask a very hard question:

Is “incremental learning” (and the immediate application of that incremental learning) more important than “economies of scale”?

Lessons from The World of ERP Projects

“Big Bang” Big Data Analytics projects are poisonous (in my humble opinion) to the success of your Big Data and Digital Transformation journey. Organizations do not have to spend $20M+ on a 3-year Big Data Analytics project and then hope that they get what they thought they bought at the end of the project. And that’s not even considering how much the technology world can change during those 3 years (for example, Google’s open source TensorFlow machine learning framework didn’t even exist 3 years ago).

For a quick refresher on the dangers associated with the “Big Bang” approach, we only need to go back to the heyday of “Big Bang”…the days of ERP implementations.

History is littered with “Big Bang” ERP projects that either didn’t deliver as promised or just plain failed.

- “You can find out how crucial an enterprise resource planning (ERP) software rollout can be for a company from a single word: billions—as in, lawsuits over failed ERP and customer relationship management (CRM) implementations are now being denominated in the billions of dollars.”

- “21% of companies who responded to a 2015 Panorama Consulting Solutions survey characterized their most recent ERP rollout as a failure.”

For example, what did a $400 million upgrade to Nike’s supply chain and ERP systems get the world-renowned shoe- and athletic gear-maker? $100 million in lost sales, a 20% stock dip and a collection of class-action lawsuits.

And now into the world of Big Data and Analytics (or Artificial Intelligence, if you bit on that marketing worm) come the IT implementation strategies of yore.

“By all accounts, the [IBM Watson] electronic brain was never used to treat patients at M.D. Anderson. A University of Texas audit reported the product doesn’t work with Anderson’s new electronic medical records system, and the cancer center is now seeking bids to find a new contractor.”

Enter the “Economies of Un-scale”

The era in which large organizations held economies of scale advantages over smaller organizations is over. Nowadays, it’s not the larger companies that are winning; it’s the companies that are more nimble and responsive to rapidly changing customer expectations and market dynamics.

“Business in the century ahead will be driven by economies of un-scale, in which the traditional competitive advantages of size are turned on their head. These developments have eroded the powerful inverse relationship between fixed costs and output that defined economies of scale. Now, small, unscaled companies can pursue niche markets and successfully challenge large companies that are weighed down by decades of investment in scale — in mass production, distribution, and marketing.”

The “Economies of Un-scale” are being driven by the following economic factors:

- Powerful open source technologies (like Hadoop, TensorFlow, and Spark ML) are available to organizations of all sizes

- Scale-out (versus scale-up) commodity storage and compute architectures (like Hadoop) support incremental technology implementations (you don’t need to buy all the technology upfront)

- Commodity storage and compute hardware is lowering the hardware barriers to adoption (though hyper-converged hardware environments and GPU and TPU processors are certainly trying to change that play)

- The wide availability of low-cost/high-quality on-line training and education

- Cloud rental models that allow any business to rent only the compute and storage it needs “by the drink”

There is no need for organizations to commit to huge technology investments in the hope that they get what they were promised at the end. The “Economies of Un-scale” will reward those organizations that are more focused and nimble, and that take an incremental implementation approach in order to 1) accelerate time to value (by monetizing incremental learning) while 2) mitigating technology investment risk.

The world of technology implementations is changing, and we need to ask the very hard economics question:

Is “incremental learning” more important than “economies of scale”?