This article was written by Jacob Joseph.

Unlike evaluating the accuracy of models that predict a continuous dependent variable, such as Linear Regression models, evaluating the accuracy of a classification model can be more complex and time-consuming. Before measuring the accuracy of a classification model, an analyst would first measure its robustness with the help of metrics such as AIC-BIC, AUC-ROC, AUC-PR, the Kolmogorov-Smirnov chart, etc. The next logical step is to measure its accuracy. To understand the complexity behind measuring accuracy, we need to know a few basic concepts.

Model Output

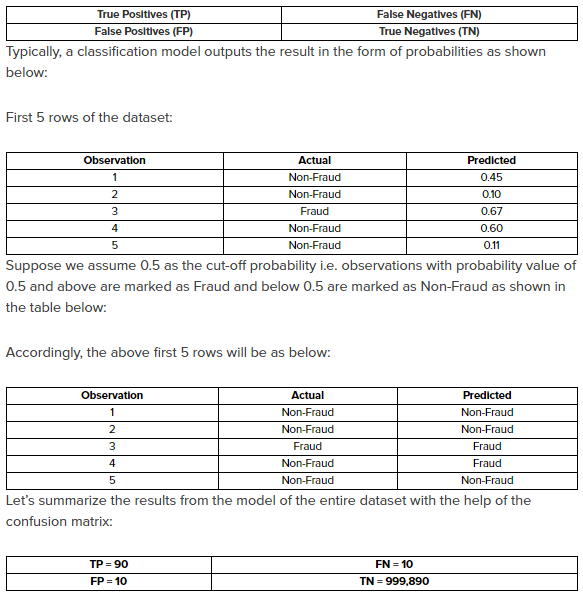

Most classification models output a probability for each observation in the dataset.

E.g., a classification model like Logistic Regression will output a probability between 0 and 1 instead of the desired value of the actual target variable, such as Yes/No.
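As a rough illustration of why the raw output is a probability rather than a label, here is a minimal sketch of how a fitted logistic regression scores an observation. The coefficients, bias, and observations are hypothetical values chosen only for this example; they do not come from any real model.

```python
import math

def predict_probability(weights, bias, features):
    """Return the logistic regression estimate of P(target = Yes | features)."""
    # Linear score: bias plus the weighted sum of the features.
    z = bias + sum(w * x for w, x in zip(weights, features))
    # The logistic (sigmoid) function squeezes any score into (0, 1).
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical fitted coefficients and three hypothetical observations.
weights = [0.8, -1.2]
bias = -0.5
observations = [[2.0, 0.5], [0.1, 1.5], [1.0, 1.0]]

probabilities = [predict_probability(weights, bias, x) for x in observations]
for features, p in zip(observations, probabilities):
    print(features, round(p, 3))
```

Every value printed lies strictly between 0 and 1; none of them is a Yes/No label yet.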

The next logical step is to translate this probability into the target/dependent variable of the model and test the accuracy of the model. To understand the implications of this translation, let’s cover a few basic concepts for evaluating a classification model with the help of the example given below.

- Goal: Create a classification model that predicts fraud transactions

- Output: Transactions that are predicted to be Fraud and Non-Fraud

- Testing: Comparing the predicted result with the actual results

- Dataset: Number of Observations: 1 million; Fraud: 100; Non-Fraud: 999,900

The fraud observations constitute just 0.01% of the entire dataset (100 out of 1 million), a typical case of imbalanced classes. Imbalanced classes arise in classification problems where the classes are not represented equally. Suppose you created a model that predicted 95% of the transactions as Non-Fraud, and all of those Non-Fraud predictions turned out to be accurate. That high accuracy for Non-Frauds shouldn’t get you excited: actual Frauds are just 0.01% of the observations, whereas the predicted Frauds constitute 5%, so the vast majority of the transactions flagged as Fraud are false alarms.
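The arithmetic behind this scenario can be checked with a few lines of Python. This is only a sketch of the example as stated: it assumes that because every Non-Fraud prediction is correct, all 100 actual frauds fall among the transactions predicted as Fraud. The variable names are chosen for illustration.

```python
total = 1_000_000
actual_fraud = 100
actual_non_fraud = total - actual_fraud

# The model predicts 95% of the transactions as Non-Fraud, all correctly.
predicted_non_fraud = total * 95 // 100
predicted_fraud = total - predicted_non_fraud

true_negatives = predicted_non_fraud   # every Non-Fraud prediction is right
false_negatives = 0                    # so no fraud was missed
true_positives = actual_fraud - false_negatives
false_positives = predicted_fraud - true_positives

accuracy = (true_positives + true_negatives) / total
fraud_share = actual_fraud / total
flagged_share = predicted_fraud / total
frauds_among_flagged = true_positives / predicted_fraud

# Baseline for comparison: label every transaction Non-Fraud.
baseline_accuracy = actual_non_fraud / total

print(f"Actual fraud share:        {fraud_share:.4%}")
print(f"Share predicted as fraud:  {flagged_share:.4%}")
print(f"False positives:           {false_positives:,}")
print(f"Frauds among flagged:      {frauds_among_flagged:.4%}")
print(f"Model accuracy:            {accuracy:.4%}")
print(f"All-Non-Fraud baseline:    {baseline_accuracy:.4%}")
```

Note that the trivial baseline, which never detects a single fraud, scores a higher overall accuracy than the model. This is why plain accuracy is a weak yardstick on imbalanced data.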

Assuming you were able to translate the output of your model to Fraud/Non-Fraud, the predicted results can be compared with the actual results and summarized as follows:

- True Positives: Observations where the actual and predicted transactions were fraud

- True Negatives: Observations where the actual and predicted transactions weren’t fraud

- False Positives: Observations where the actual transactions weren’t fraud but were predicted to be fraud

- False Negatives: Observations where the actual transactions were fraud but weren’t predicted to be fraud
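The four categories above can be tallied with a short function. The sketch below assumes a simple cutoff rule (a probability at or above the threshold is labeled Fraud) and uses a default threshold of 0.5; both the rule and the toy data are assumptions made for illustration, not something the article prescribes.

```python
def confusion_counts(actual, probabilities, threshold=0.5):
    """Count TP, TN, FP and FN, treating 1 as Fraud and 0 as Non-Fraud."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for is_fraud, p in zip(actual, probabilities):
        predicted_fraud = p >= threshold  # translate probability to a label
        if predicted_fraud and is_fraud:
            counts["TP"] += 1
        elif predicted_fraud and not is_fraud:
            counts["FP"] += 1
        elif not predicted_fraud and is_fraud:
            counts["FN"] += 1
        else:
            counts["TN"] += 1
    return counts

# Hypothetical labels and model probabilities for eight transactions.
actual = [1, 0, 0, 1, 0, 0, 1, 0]
probabilities = [0.9, 0.2, 0.6, 0.4, 0.1, 0.7, 0.8, 0.3]

default_counts = confusion_counts(actual, probabilities)
lenient_counts = confusion_counts(actual, probabilities, threshold=0.35)
print(default_counts)
print(lenient_counts)
```

Lowering the threshold from 0.5 to 0.35 turns the one missed fraud into a detected one in this toy data, which shows that the counts depend on how the probability is translated into a label.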

A confusion matrix, a table that cross-tabulates actual against predicted classes, is a popular way to represent these summarized findings.

We have all non-zero cells in the above matrix. So is this result ideal?

Wouldn’t we love a scenario wherein the model accurately identifies all the Frauds and the Non-Frauds, i.e., zero entries in the FP and FN cells?

A BIG YES.

However, zero FP and FN entries are not always the goal. Consider a scenario wherein, as a marketing analyst, you would like to identify users who are likely to buy but haven’t bought yet. This class of users would share the characteristics of the users who bought. Such users would fall under False Positives: users who were predicted to transact but didn’t transact in reality. Hence, in addition to non-zero entries in TP and TN, you would prefer a non-zero entry in FP too. Thus, how you judge model accuracy depends on the goal of the prediction exercise.

Key Testing Metrics and Metric Comparison

Since we are now comfortable with the interpretation of the Confusion Matrix, let’s look at some popular metrics used for testing the classification models:

- Sensitivity/Recall

- Specificity

- Precision

- F1 score

- Matthews Correlation Coefficient (MCC)
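For orientation, the standard textbook definitions of these five metrics can be sketched in terms of the confusion matrix counts. The function name is hypothetical, and the sketch assumes none of the denominators is zero. The counts passed in are the ones implied by the fraud scenario described earlier (1 million transactions, 100 frauds, 95% predicted as Non-Fraud with all of those predictions correct).

```python
import math

def classification_metrics(tp, tn, fp, fn):
    """Compute five common metrics from confusion matrix counts."""
    recall = tp / (tp + fn)          # sensitivity: share of frauds caught
    specificity = tn / (tn + fp)     # share of non-frauds correctly cleared
    precision = tp / (tp + fp)       # share of fraud flags that are right
    f1 = 2 * precision * recall / (precision + recall)
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    return {
        "recall": recall,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
        "mcc": mcc,
    }

# Counts implied by the fraud scenario: TP=100, TN=950,000, FP=49,900, FN=0.
metrics = classification_metrics(tp=100, tn=950_000, fp=49_900, fn=0)
for name, value in metrics.items():
    print(f"{name:12s} {value:.6f}")
```

On these counts recall is perfect and specificity is high, yet precision, F1 and MCC are all close to zero, which reflects the large number of false alarms among the flagged transactions.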

The full original article includes definitions and computation examples for these five metrics, as well as a section on metric comparison.
