“What you see is what you buy”

It is believed that 95% of purchase decisions happen in the subconscious (let’s call it the reptile brain). The decisions taken by the reptile brain are strongly influenced by what we see. Walking down a supermarket aisle, we see hundreds of packs of different products, but only a few of them capture our attention. When we see such a product, the reptile brain kicks into action: it fixates on the packaging that looks interesting (“ooh shiny!!!”) and, before we know it, we are seriously considering buying that product.

Eye-tracking is a well-known tool for implicitly measuring how people respond to different product packaging, advertisement copy, web-page layouts, banner placements and more. Eye-tracking studies have the potential to generate tremendous RoI on product packaging, advertisement creatives and placement decisions. But we (and the clients we speak to) believe that the industry is only beginning to scratch the surface when it comes to using this technique for generating insights. The reasons cited are that it is expensive, slow and not objective (as participants get influenced by the process). However, we believe that the key reason is that the technology is not yet mature enough.

With this whitepaper, we intend to demonstrate how embedding Artificial Intelligence (AI) into current eye-tracking technology can make eye-tracking analysis much more powerful, faster and more cost-effective. Note that we assume the reader is familiar with the eye-tracking process for physical environments.

Eye-Tracking at a Glance

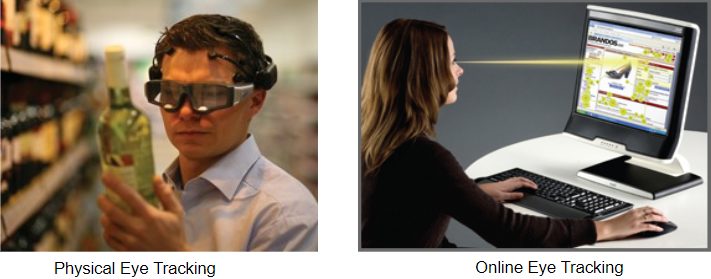

There are two primary methodologies associated with eye tracking: Physical and Online (virtual). In Physical eye tracking, research participants wear eye-tracking hardware (glasses) and walk around a physical space (usually a retail shelf) where actual copies of the research subject are kept. In Online eye tracking, by contrast, participants look at the research subject (typically a virtual shelf, website layout or video ad) on a computer screen, and a webcam is used to track their gaze movements.

Source — EyeGaze, Threesixtyone

While virtual eye-tracking is faster and cheaper, it has the disadvantages of being less accurate (a webcam is not as accurate as eye-tracking hardware) and further from reality (a virtual shelf is very different from what it would look like in real life). In this whitepaper, we focus specifically on Physical Eye Tracking for the purpose of testing the visual appeal of product packages on retail shelves.

Coding in Physical Eye Tracking

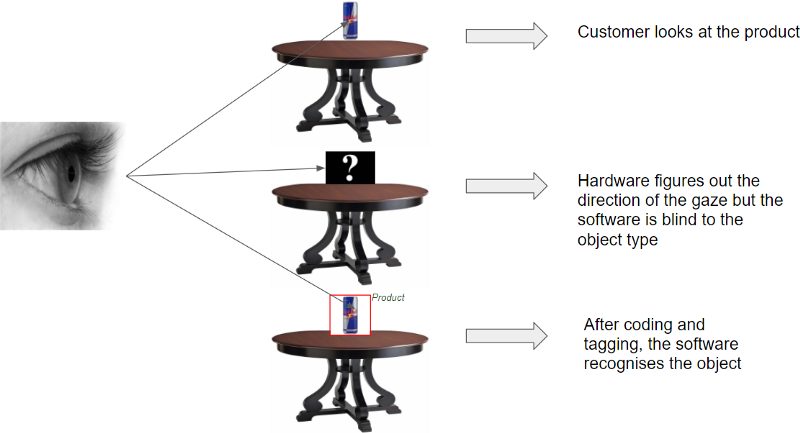

The key constraint of eye tracking technology is that it can tell you ‘where’ the customer is looking, but not ‘what’ the customer is looking at. The hardware works out the direction and location on which a person’s gaze is fixated. However, it has no knowledge of what exactly the person is seeing. It is blind to whether the person is looking at a price tag, a Red Bull can, a Gatorade can, her mobile phone, etc.
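To make the ‘where’ versus ‘what’ gap concrete, here is a minimal Python sketch of what coding a single video frame amounts to: taking the gaze coordinate reported by the glasses and checking which labelled region it falls in. The `AOIBox` structure, the `code_gaze_point` function, the pixel coordinates and the rule that the smallest overlapping box wins are all illustrative assumptions, not part of any eye-tracking vendor's software. In manual coding, a human supplies these labels frame by frame.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AOIBox:
    """A labelled rectangle in frame pixel coordinates (hypothetical structure)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        """True if the point (x, y) lies inside or on the edge of the box."""
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max

    def area(self) -> float:
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


def code_gaze_point(x: float, y: float, boxes: List[AOIBox]) -> Optional[str]:
    """Return the label of the AOI under the gaze point, or None if there is none.

    Assumption: when boxes overlap, the smallest one wins, because it is
    likely the more specific object (a can on a shelf, not the shelf itself).
    """
    hits = [box for box in boxes if box.contains(x, y)]
    if not hits:
        return None
    return min(hits, key=AOIBox.area).label


# Made-up boxes for one 1280x720 frame, for illustration only.
frame_boxes = [
    AOIBox("shelf", 0, 0, 1280, 720),
    AOIBox("Red Bull can", 400, 200, 460, 330),
    AOIBox("price tag", 400, 335, 460, 360),
]

print(code_gaze_point(430, 250, frame_boxes))  # Red Bull can
print(code_gaze_point(430, 345, frame_boxes))  # price tag
print(code_gaze_point(2000, 50, frame_boxes))  # None
```

The hardware only provides the `(x, y)` input. The labelled boxes are the missing piece, and producing them for every frame of every video is what makes coding expensive.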

Manual Coding has many challenges

The coding solutions currently on the market for physical eye tracking don’t offer fully automated coding of gaze videos. They are essentially annotation/tagging software designed to make manual coding more efficient. Manual coding creates the following challenges in a typical physical eye tracking project:

- It is labour-intensive and does not scale across a large number of videos

- It is a slow process, which pushes up the turnaround time of the entire research exercise (a big roadblock in today’s fast-paced business environment)

- Manual coding can also lead to human errors that affect the accuracy of the final analysis

- The logistics of managing a manual coding pipeline are challenging

- Sometimes researchers influence the behaviour of the respondents in order to make coding easier, which takes the exercise away from objectivity

Smart-Gaze — AI Solution for Eye Tracking Coding

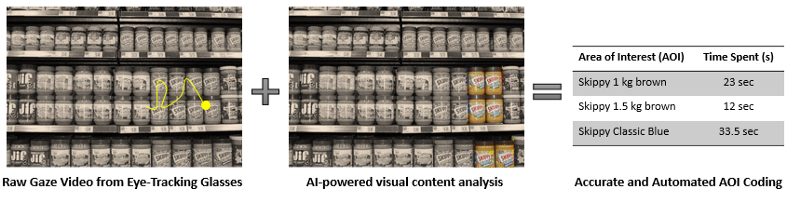

Smart-Gaze uses a deep neural network-based architecture to analyse raw gaze videos. With some training, the algorithm learns what the key areas of interest (AOIs) look like and, once that is done, it does the coding automatically, with accuracy comparable to what a human coder would achieve.
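One simple way to check a claim like "comparable to a human coder" is to have a human code a sample of frames and measure how often the automated labels match. The Python sketch below computes that frame-level agreement. It is an illustrative assumption about how such a comparison could be run, not a description of how Smart-Gaze is evaluated. The function name and the sample labels are made up.

```python
from typing import Optional, Sequence


def percent_agreement(
    human: Sequence[Optional[str]], automated: Sequence[Optional[str]]
) -> float:
    """Fraction of frames where the automated AOI label equals the human label.

    Each sequence holds one label per frame; None means "no AOI looked at".
    """
    if len(human) != len(automated):
        raise ValueError("Both codings must cover the same number of frames")
    if not human:
        raise ValueError("At least one coded frame is required")
    matches = sum(h == a for h, a in zip(human, automated))
    return matches / len(human)


# Made-up labels for five frames, for illustration only.
human_codes = ["Red Bull can", "Red Bull can", None, "price tag", "Gatorade can"]
auto_codes = ["Red Bull can", "Red Bull can", None, "Gatorade can", "Gatorade can"]

print(percent_agreement(human_codes, auto_codes))  # 0.8
```

Raw agreement is easy to read but does not correct for matches that happen by chance, so a real study might prefer a chance-corrected statistic.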

Smart-Gaze makes coding for physical eye tracking projects much more effective thanks to the following advantages:

- It is much faster than human coding, leading to faster insights and more RoI.

- It is much more cost-effective than human coding.

- It is highly scalable due to automation.

- It removes the possibility of human errors caused by fatigue or boredom.

- There is no need to worry about coding logistics: send the raw data and get the coded data in return.

- There is no need to influence research participant behaviour, as coding is no longer a worry.

How Does It Work?

At the moment, Smart-Gaze is not a self-serve product that a researcher can log into and use. Instead, it is a solution where the researcher captures raw gaze videos from eye-tracking glasses (any brand works, no constraints here), briefs us on the key Areas of Interest, and then we take over. Within 3 days, our team at Karna AI trains an AI algorithm and codes the data as per the client’s needs.

The accuracy can be assessed through a coding visualisation tool. Some other key benefits of this model include:

- There is no constraint of eye-tracking hardware brand (our system works for any type of glasses)

- There is no need for up-front investment in an eye-tracking software license

- This is a pay-as-you-go model where the client is charged on a per-video basis

- The process and nature of output are completely customizable.