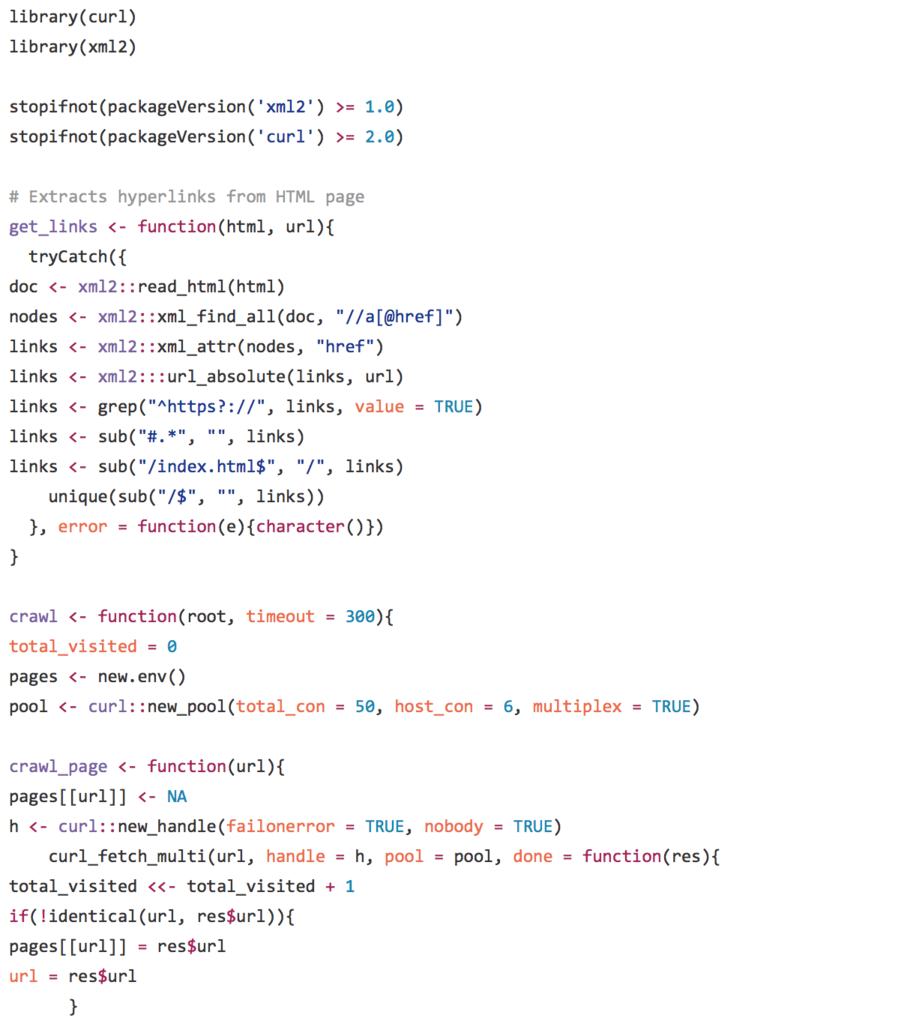

Try the new non-blocking HTTP API in curl 2.1:

R sitemap example, Jeroen Ooms, 2016

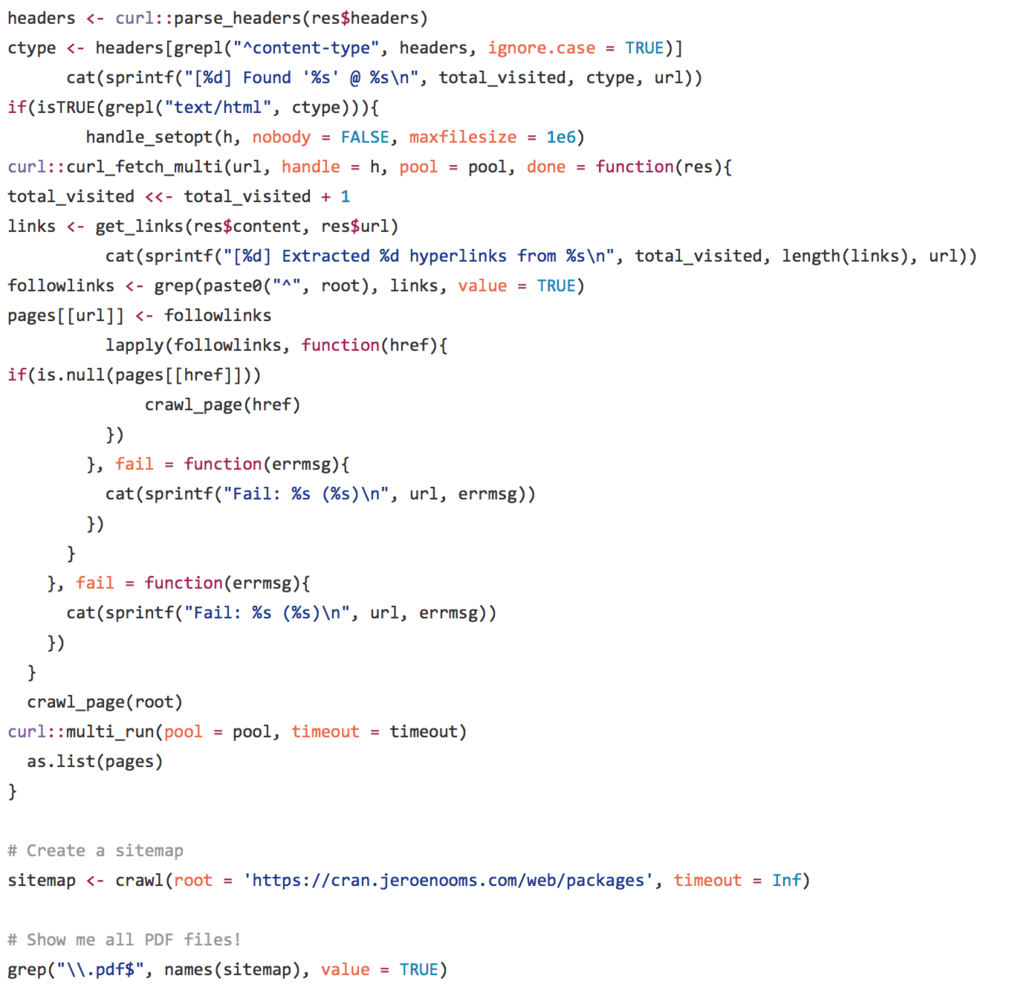

This code demonstrates the new multi-request features in curl 2.0. It creates an index of all files on a web server with a given prefix by recursively following hyperlinks that appear in HTML pages.

For each URL, we first perform an HTTP HEAD request (via curlopt_nobody) to retrieve the content-type header of the URL. If the server returns ‘text/html’, we then perform a second request that downloads the page so we can look for hyperlinks.
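The HEAD-then-GET step can be sketched roughly as follows. This is a minimal illustration, not the code from the original example: the helper names `is_html` and `fetch_if_html` and the `on_page` callback are hypothetical, and the sketch assumes the curl package's `new_handle()`, `curl_fetch_multi()`, `parse_headers()` and `multi_run()` functions.

```r
# Hypothetical helper: TRUE if any Content-Type header line mentions text/html.
is_html <- function(header_lines) {
  ctype <- grep("^content-type:", header_lines, ignore.case = TRUE, value = TRUE)
  length(ctype) > 0 && any(grepl("text/html", tolower(ctype), fixed = TRUE))
}

# Hypothetical helper: queue a HEAD request on the pool; if the response is
# HTML, queue a second request that downloads the body and passes it on.
fetch_if_html <- function(url, pool, on_page) {
  # nobody = TRUE sets CURLOPT_NOBODY, so only headers are requested
  head_handle <- curl::new_handle(nobody = TRUE, failonerror = TRUE)
  curl::curl_fetch_multi(url, handle = head_handle, pool = pool,
    done = function(res) {
      if (is_html(curl::parse_headers(res$headers))) {
        # Use a fresh handle for the full download
        curl::curl_fetch_multi(url, pool = pool,
          handle = curl::new_handle(failonerror = TRUE),
          done = function(res) on_page(url, rawToChar(res$content)),
          fail = function(msg) message("GET failed: ", url))
      }
    },
    fail = function(msg) message("HEAD failed: ", url))
}

# Usage (needs network access, so left commented out):
# pool <- curl::new_pool()
# fetch_if_html("https://example.org/", pool,
#               function(url, html) message("fetched ", url))
# curl::multi_run(pool = pool)
```

Nothing is sent until `multi_run()` is called; requests added from inside a callback join the same pool, which is what lets the crawl proceed without blocking on each URL.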

The network is stored in an environment like this: env[url] = (vector of links)
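As a small sketch of that data structure (the helper names `record_links` and `unvisited` and the example.org URLs are made up for illustration), note that R environments are indexed with `[[`, so the `env[url]` notation above corresponds to `env[[url]]` in actual code:

```r
# The network: each visited URL maps to the character vector of links found on it.
pages <- new.env()

# Hypothetical helper: store the de-duplicated links found on a page.
record_links <- function(env, url, links) {
  env[[url]] <- unique(links)
  invisible(env)
}

# Hypothetical helper: keep only links that have no entry in the environment yet.
unvisited <- function(env, links) {
  seen <- vapply(links, exists, logical(1), envir = env, inherits = FALSE)
  unique(links[!seen])
}

record_links(pages, "https://example.org/",
             c("https://example.org/a", "https://example.org/b",
               "https://example.org/a"))

# Links still to crawl among a set of candidates
todo <- unvisited(pages, c("https://example.org/", "https://example.org/a"))
```

Because environments have reference semantics, callbacks running inside the request pool can all update the same `pages` object without passing it back and forth.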

WARNING: Don’t target small servers; you might accidentally take them down and get banned for DoS. This code hits up to 300 req/sec on my home wifi.


