Heating, ventilation, and air conditioning (HVAC) of buildings alone accounts for nearly 40% of global energy demand [1].

The need for energy savings has become increasingly fundamental in the fight against climate change. We have been working on a cloud-based RL algorithm that can retrofit existing HVAC controls to achieve substantial energy savings.

In the last decade, a new class of controls relying on Artificial Intelligence has been proposed. In particular, we focus on data-driven controls based on Reinforcement Learning (RL), since they have shown promising results as HVAC controls from the very beginning [2].

There are two main ways to upgrade air-conditioning systems with RL: implementing RL in new systems or retrofitting existing ones. The first approach suits manufacturers of heating and air-conditioning systems, while the second can be applied to any existing plant that can be controlled remotely.

We designed a cloud-based RL algorithm that continuously learns how to optimize power consumption by remotely reading environmental data and setting the HVAC set-points accordingly. Being cloud-based, the solution can scale to a large number of buildings.
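To make the retrofit architecture more concrete, here is a minimal Python sketch of one cloud-side control step, assuming each building exposes a remote gateway that returns sensor readings and accepts a new set-point. `BuildingGateway`, `read_environment`, `write_setpoint`, `placeholder_policy` and `control_step` are hypothetical names; the gateway simulates the room with a crude first-order response, and the placeholder policy only stands in for the learned RL policy.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class BuildingGateway:
    """Hypothetical remote interface to one building's existing HVAC controller.

    Simulated in memory here; a real deployment would wrap a remote API.
    """
    name: str
    indoor_temp: float = 22.0
    outdoor_temp: float = 30.0
    setpoint: float = 24.0

    def read_environment(self) -> Dict[str, float]:
        # Remote read of the environmental data (simulated).
        return {"indoor": self.indoor_temp, "outdoor": self.outdoor_temp}

    def write_setpoint(self, value: float) -> None:
        # Remote write of the new set-point; the simulated room moves halfway toward it.
        self.setpoint = value
        self.indoor_temp += 0.5 * (value - self.indoor_temp)


def placeholder_policy(obs: Dict[str, float], low: float = 22.0, high: float = 26.0) -> float:
    """Hypothetical stand-in for the learned policy: outdoor temperature minus 6 °C,
    clamped to a comfort band."""
    return min(high, max(low, obs["outdoor"] - 6.0))


def control_step(
    buildings: List[BuildingGateway],
    policy: Callable[[Dict[str, float]], float] = placeholder_policy,
) -> Dict[str, float]:
    """One cloud-side iteration: read every building, decide, write set-points back."""
    decisions = {}
    for building in buildings:
        obs = building.read_environment()
        setpoint = policy(obs)
        building.write_setpoint(setpoint)
        decisions[building.name] = setpoint
    return decisions
```

Because the cloud service only needs read/write access to each site's existing controller, adding a building amounts to adding one more gateway to the list rather than building a new model.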

Our tests showed a reduction in primary energy consumption of between 5.4% and 9.4% at two different locations, while guaranteeing the same thermal comfort as state-of-the-art controls.

Traditionally, HVAC systems are controlled by model-based controls (e.g., Model Predictive Control) and rule-based controls:

Model Predictive Control (MPC)

The basic MPC concept can be summarized as follows. Suppose we wish to control a multiple-input, multiple-output process while satisfying inequality constraints on the input and output variables. If a reasonably accurate dynamic model of the process is available, the model and current measurements can be used to predict future values of the outputs. The appropriate changes in the input variables can then be computed from both the predictions and the measurements.
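The following Python sketch illustrates this concept on a single zone, under strong simplifying assumptions: a hypothetical first-order thermal model with made-up parameters `a` and `b`, a small set of discrete heating levels as the input constraint, and a minimum indoor temperature `t_min` as the output constraint. Real MPC implementations use a numerical optimizer rather than the exhaustive search shown here; as is usual in MPC, only the first input of the best plan is applied before re-planning.

```python
from itertools import product
from typing import List, Sequence, Tuple


def predict(t0: float, t_out: float, inputs: Sequence[float],
            a: float = 0.9, b: float = 0.5) -> List[float]:
    """Roll a hypothetical first-order thermal model forward over the horizon.

    T[k+1] = a*T[k] + (1 - a)*T_out + b*u[k]   (u = heating power, arbitrary units)
    """
    temps = []
    t = t0
    for u in inputs:
        t = a * t + (1 - a) * t_out + b * u
        temps.append(t)
    return temps


def mpc_step(t0: float, t_out: float, horizon: int = 3,
             levels: Tuple[float, ...] = (0.0, 1.0, 2.0, 3.0),
             t_min: float = 20.0) -> float:
    """Return the first input of the cheapest input sequence that keeps T >= t_min."""
    best_cost, best_seq = None, None
    # Exhaustive search over all discrete input sequences (only viable for tiny horizons).
    for seq in product(levels, repeat=horizon):
        if min(predict(t0, t_out, seq)) < t_min:
            continue  # violates the output (comfort) constraint
        cost = sum(seq)  # energy used over the horizon
        if best_cost is None or cost < best_cost:
            best_cost, best_seq = cost, seq
    if best_seq is None:
        return max(levels)  # no feasible plan: fall back to full heating
    # Receding horizon: apply only the first move, then re-plan at the next step.
    return best_seq[0]
```

Note that everything hinges on `predict`: the quality of the plan is only as good as the building model behind it.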

In essence, MPC can capture complex thermodynamics and achieve excellent energy savings in a single building. However, this raises a significant issue: retrofitting this kind of control requires developing a thermo-energetic model for each existing building. Moreover, the performance of the controller depends on the quality of the model, and building a sufficiently accurate model is usually expensive. High initial investment is one of the main problems of model-based approaches [3]. Likewise, after any energy-efficiency intervention on the building, the model has to be rebuilt or retuned, again requiring the costly involvement of a domain expert.

Rule-Based Controls (RBC)

Rule-based modeling is an approach that uses a set of rules to specify a mathematical model indirectly. This methodology is especially effective when the rule set is significantly simpler than the model it implies, i.e., when the model is the repeated manifestation of a limited number of patterns.

RBCs are the state-of-the-art model-free controls and represent an industry standard. A model-free solution can potentially scale up, because the absence of a model makes it easy to apply to different buildings without a domain expert. The main drawback of RBCs is that they are difficult to tune optimally, since they cannot adapt well to the intrinsic complexity of the coupled building and plant thermodynamics.
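A typical RBC can be sketched in Python as two simple rules: a schedule that picks the set-point, and an on/off thermostat with a hysteresis band. The opening hours, the 21 °C / 16 °C set-points and the 0.5 °C band below are hypothetical values. No building model is needed, which is what makes such rules portable, but every constant is fixed by hand, which is exactly what makes optimal tuning hard.

```python
def rbc_setpoint(hour: int, open_hour: int = 8, close_hour: int = 21,
                 comfort: float = 21.0, setback: float = 16.0) -> float:
    """Rule 1: comfort set-point during (hypothetical) opening hours, setback otherwise."""
    return comfort if open_hour <= hour < close_hour else setback


def rbc_heating_on(temp: float, setpoint: float, currently_on: bool,
                   band: float = 0.5) -> bool:
    """Rule 2: on/off thermostat with a hysteresis band around the set-point."""
    if temp < setpoint - band:
        return True
    if temp > setpoint + band:
        return False
    return currently_on  # inside the band: keep the previous state
```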

Reinforcement Learning (RL) Controls

Before introducing the advantages of RL controls, let us briefly describe RL itself. In the words of Sutton and Barto [4]:

Reinforcement learning is learning what to do — how to map situations to actions — so as to maximize a numerical reward signal. The learner is not told which actions to take, but instead must discover which actions yield the most reward by trying them. In the most interesting and challenging cases, actions may affect not only the immediate reward but also the next situation and, through that, all subsequent rewards. These two characteristics — trial-and-error search and delayed reward — are the two most important distinguishing features of reinforcement learning.
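Sutton and Barto formalize delayed reward through the discounted return, G_t = R_{t+1} + γR_{t+2} + γ²R_{t+3} + ..., where the discount factor γ in [0, 1] weights future rewards. A minimal Python sketch, with γ = 0.9 chosen arbitrarily for illustration:

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float = 0.9) -> float:
    """G_t = R_{t+1} + gamma*R_{t+2} + gamma^2*R_{t+3} + ..., computed backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g
```

For example, `discounted_return([0.0, 0.0, 1.0])` is about 0.81, whereas the same reward received at the first step is worth 1.0: an action is credited for rewards that arrive later, only less so.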

In our case, an RL algorithm interacts directly with the HVAC control system. It adapts continuously to the controlled environment using real-time data collected on site, without needing access to a thermo-energetic model of the building. In this way, an RL solution can achieve primary energy savings and reduce operating costs while remaining suitable for large-scale applications.
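To show what "model-free" means in practice, here is a Python sketch of tabular Q-learning, a classic model-free RL algorithm. It is an illustrative choice, not necessarily the algorithm used in this work. The action set of candidate set-points, the state labels, and `alpha`/`gamma` are all hypothetical. The update uses only an observed transition (state, action, reward, next state) and never consults a thermo-energetic model of the building.

```python
import random
from collections import defaultdict
from typing import DefaultDict, Hashable, Tuple

# Hypothetical discrete action set: candidate cooling set-points in °C.
ACTIONS: Tuple[float, ...] = (22.0, 24.0, 26.0)

QTable = DefaultDict[Tuple[Hashable, float], float]


def choose_action(q: QTable, state: Hashable, epsilon: float,
                  rng: random.Random) -> float:
    """Epsilon-greedy: explore with probability epsilon, otherwise exploit the table."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[(state, a)])


def q_update(q: QTable, state: Hashable, action: float, reward: float,
             next_state: Hashable, alpha: float = 0.1, gamma: float = 0.9) -> float:
    """One Q-learning step, driven only by an observed transition (no building model)."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]
```

Usage: create the table with `q = defaultdict(float)`, then after each control step call `q_update` with the data read from the site; exploration via `epsilon` is what implements the trial-and-error search described above.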

It is therefore desirable to introduce RL controls in large-scale applications on HVAC systems with high operating costs, such as those responsible for the thermoregulation of large volumes.

One building class in which an RL solution could be particularly advantageous is the supermarket. Supermarkets are, by definition, widespread buildings with variable thermal loads and complex occupancy patterns, which introduce a non-negligible stochastic component from the HVAC control point of view.

We will formalize this problem using the framework of Reinforcement Learning; first, let us give it some more context.

A Reinforcement Learning Solution

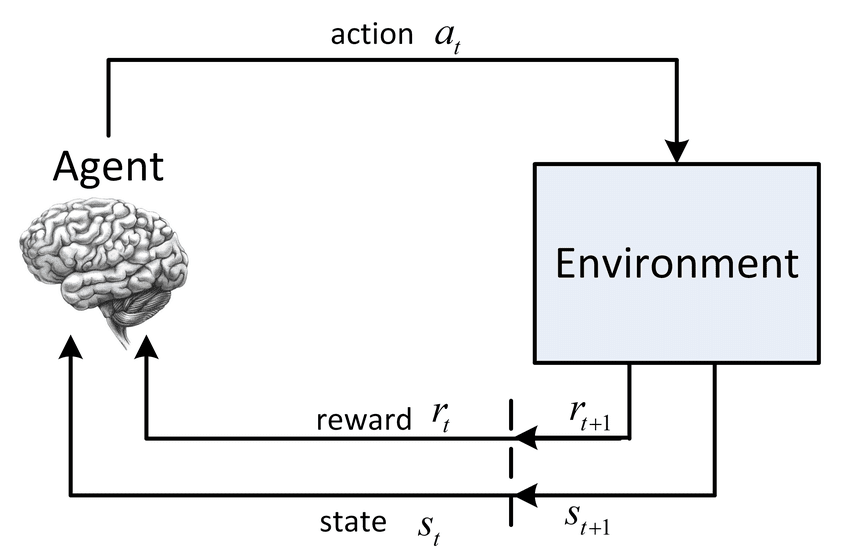

In RL, an agent interacts with an environment and learns the optimal sequence of actions, represented by a policy, to reach the desired goal. As reported in [4]:

The learner and decision maker is called the agent. The thing it interacts with, comprising everything outside the agent, is called the environment. These interact continually, the agent selecting actions and the environment responding to these actions and presenting new situations to the agent.

Figure 1 (source: ResearchGate)
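The agent-environment loop described above can be sketched in Python as follows. `ToySupermarketEnv` is a hypothetical, uncalibrated stand-in for the building: it adds a random internal load to mimic stochastic occupancy, and its `reset`/`step` methods loosely follow common RL-library naming but are a simplified interface, not any library's actual API. Fixed set-point policies stand in for the agent, and the reward is simply the negative cooling effort.

```python
import random
from typing import Callable, Tuple


class ToySupermarketEnv:
    """Hypothetical, uncalibrated stand-in for the building (the environment)."""

    def __init__(self, outdoor_temp: float = 30.0, seed: int = 0):
        self.outdoor_temp = outdoor_temp
        self.rng = random.Random(seed)  # stochastic internal loads (e.g. customers)
        self.indoor_temp = 24.0

    def reset(self) -> float:
        self.indoor_temp = 24.0
        return self.indoor_temp

    def step(self, setpoint: float) -> Tuple[float, float]:
        # Heat gain from outdoors plus a random internal load (arbitrary units).
        gain = 0.1 * (self.outdoor_temp - self.indoor_temp) + self.rng.uniform(0.0, 0.5)
        # Cooling needed to bring the room back to the set-point (never negative).
        cooling = max(0.0, gain + self.indoor_temp - setpoint)
        self.indoor_temp += gain - cooling
        return self.indoor_temp, -cooling  # toy reward: less cooling is better


def run_episode(env: ToySupermarketEnv, policy: Callable[[float], float],
                steps: int = 24) -> float:
    """Agent and environment interact for a fixed number of steps."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(steps):
        action = policy(state)            # the agent selects an action
        state, reward = env.step(action)  # the environment responds
        total_reward += reward
    return total_reward
```

With the same seed, `run_episode(ToySupermarketEnv(), lambda s: 26.0)` collects a higher (less negative) total reward than a 24 °C policy, because it spends less cooling; a learning agent would discover such trade-offs by interaction instead of being handed a fixed rule.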

In our work, the environment is a supermarket building. The reward expresses the agent's learning goal: it is a scalar value returned to the agent that indicates how well the agent is behaving with respect to the learning objective.
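The specific reward used in this work is not given here, so the following Python sketch shows only one common way to encode the goal of saving energy without sacrificing comfort: penalize energy use plus the number of degrees outside a comfort band. The band limits and `comfort_weight` are hypothetical values.

```python
def hvac_reward(energy_kwh: float, indoor_temp: float,
                comfort_low: float = 20.0, comfort_high: float = 25.0,
                comfort_weight: float = 10.0) -> float:
    """Scalar reward: penalize energy use and degrees outside a hypothetical comfort band."""
    # Distance from the comfort band in °C (0 when the temperature is inside it).
    violation = max(0.0, comfort_low - indoor_temp, indoor_temp - comfort_high)
    return -(energy_kwh + comfort_weight * violation)
```

The weight sets the trade-off: a larger `comfort_weight` makes the agent less willing to save energy at the expense of comfort.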
