Source: ArabianBusiness

Takeaways

- Learn about the innovative method introduced by Program-Aided Language models (PAL), which rethinks natural language reasoning in LLMs by offloading the solving and calculating steps to an external Python interpreter.

- Explore how PAL significantly mitigates the inaccurate arithmetic and flawed complex reasoning common in conventional models by leveraging a Python interpreter. Also see how PAL goes beyond improving the standard chain-of-thought approach and enhances other prompting strategies.

- Discover how PAL offers impressive accuracy across a variety of benchmarks, demonstrating substantial improvements over existing models and setting new state-of-the-art records.

- Understand how PAL provides a broader perspective on semantic parsing, allowing models to generate free-form Python code rather than parsing into strict domain-specific languages.

Artificial Intelligence (AI) continues to evolve at a rapid pace, with groundbreaking strides in generative capabilities shaping the field. One such leap is Program-Aided Language models (PAL), a technique that changes how Large Language Models (LLMs) tackle reasoning problems. This article examines how PAL works and how it improves LLM performance on reasoning tasks.

Source: Cobus Greyling via Medium

PAL’s synergy with LLMs

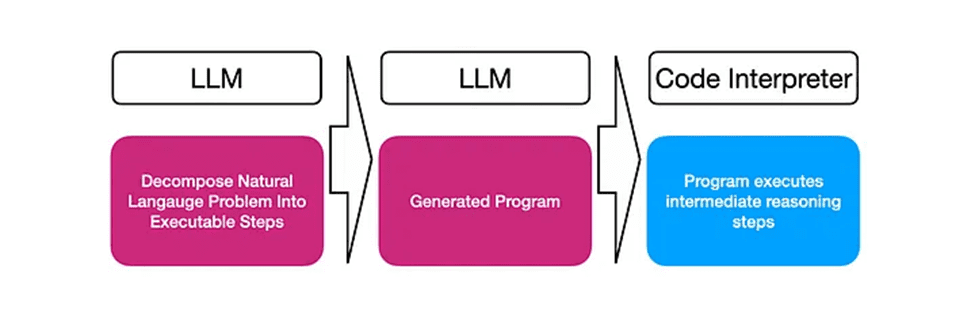

PAL introduces a new method for natural language reasoning, using programs as intermediate reasoning steps. This stands in stark contrast to existing LLM-based reasoning approaches, which typically use the LLM both to understand the problem and to solve it. In the PAL paradigm, the LLM writes a program that breaks the problem into steps, and the actual solving and calculating is offloaded to an external Python interpreter. The final answer is therefore guaranteed to be accurate, provided the predicted programmatic steps are correct.
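The division of labor described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: `FEW_SHOT_PROMPT`, `build_prompt`, `fake_llm`, and `pal_answer` are hypothetical names, and `fake_llm` stands in for a real code-generating model by returning a fixed program. The prompt format (a question followed by a `solution()` function) is only loosely modeled on the style of PAL prompts.

```python
from typing import Callable

# Hypothetical few-shot prompt: each example pairs a question with Python code.
FEW_SHOT_PROMPT = '''Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

# solution in Python:
def solution():
    tennis_balls = 5
    bought_balls = 2 * 3
    answer = tennis_balls + bought_balls
    return answer
'''


def build_prompt(question: str) -> str:
    """Append the new question so the model continues with a program."""
    return f"{FEW_SHOT_PROMPT}\nQ: {question}\n\n# solution in Python:\n"


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; it ignores the prompt and
    returns a fixed program, where a real model would condition on it."""
    return (
        "def solution():\n"
        "    apples = 23\n"
        "    used = 20\n"
        "    bought = 6\n"
        "    answer = apples - used + bought\n"
        "    return answer\n"
    )


def pal_answer(question: str, llm: Callable[[str], str]) -> object:
    program = llm(build_prompt(question))  # LLM: express the reasoning as code
    namespace: dict = {}
    exec(program, namespace)               # interpreter: define solution()
    return namespace["solution"]()         # the answer comes from execution


question = ("The cafeteria had 23 apples. If they used 20 to make lunch "
            "and bought 6 more, how many apples do they have?")
print(pal_answer(question, fake_llm))  # 9
```

In a real system the generated program is untrusted, so it should run in a sandboxed process with time and memory limits rather than through a bare `exec` as in this sketch.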

The PAL approach can be viewed as a seamless collaboration between an LLM and a Python interpreter. In practice, PAL has demonstrated its effectiveness across a broad range of tasks, consistently outperforming larger LLMs such as PaLM-540B prompted with the popular “chain-of-thought” (CoT) method.

PAL in action: A leap forward in Generative AI

While conventional models have shown remarkable capability in a variety of tasks, from text generation to code generation, they often falter when it comes to accurate arithmetic and complex reasoning. PAL significantly mitigates these issues by leveraging the strength of a Python interpreter. This is a significant advantage over approaches that rely on ad-hoc solutions or specialized modules for these tasks.
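As a hypothetical illustration of the arithmetic point, consider a word problem with larger numbers. In a PAL-style program the model only has to get the structure right (which quantities are multiplied together), and the interpreter carries out the multiplication exactly. The problem and program below are invented for illustration.

```python
def solution():
    """A warehouse ships 4,387 crates per day, and each crate holds 276 bottles.
    How many bottles does it ship in a 31-day month?"""
    crates_per_day = 4387
    bottles_per_crate = 276
    days = 31
    # Python integers have arbitrary precision, so this product is exact.
    return crates_per_day * bottles_per_crate * days


print(solution())  # 37535172
```

A model reasoning purely in text would have to produce every intermediate digit itself; here a single slip in the multi-digit products cannot happen, because no digits are generated by the model at all.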

Moreover, PAL isn’t limited to improving the standard chain-of-thought approach; it also shows promising results when combined with other prompting strategies, such as least-to-most prompting. This versatility points to how broadly the approach can be applied.
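Least-to-most prompting breaks a problem into simpler sub-questions and answers them in order, feeding earlier answers into later ones. The sketch below is a hypothetical illustration of how that idea can pair with PAL, not the setup used in the paper: each sub-question comes with a small program (hard-coded here in place of model output), and all programs share one namespace so later steps can reuse earlier variables. The `steps` data and `solve_least_to_most` are assumed names.

```python
# Hypothetical decomposition of the question:
# "It takes Amy 4 minutes to climb to the top of a slide and 1 minute to slide
# down. The slide closes in 15 minutes. How many times can she slide before it closes?"
steps = [
    ("How long does one climb-and-slide trip take?",
     "time_per_trip = 4 + 1", "time_per_trip"),
    ("How many full trips fit before the slide closes?",
     "trips = 15 // time_per_trip", "trips"),
]


def solve_least_to_most(steps):
    """Answer sub-questions in order; later programs see earlier variables."""
    namespace = {}
    answer = None
    for subquestion, code, result_name in steps:
        exec(code, namespace)  # run the program for this sub-question
        answer = namespace[result_name]
        print(f"{subquestion} -> {answer}")
    return answer  # the last sub-answer answers the original question


final = solve_least_to_most(steps)
# How long does one climb-and-slide trip take? -> 5
# How many full trips fit before the slide closes? -> 3
```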

Source: PAL: Program-aided Language Models (ArXiv)

COT: chain-of-thought prompting

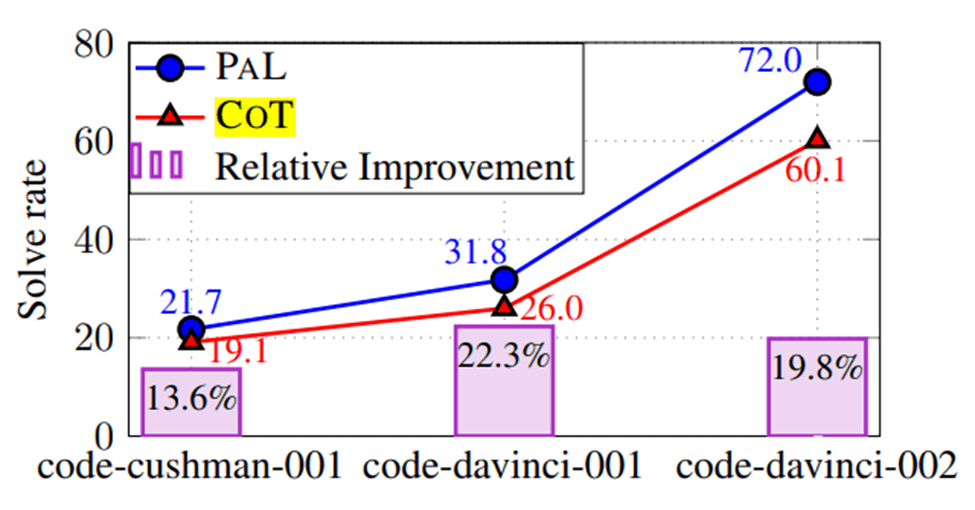

Figure: PAL with different models on GSM8K: though the absolute accuracies with code-cushman-001 and code-davinci-001 are lower than code-davinci-002, the relative improvement of PAL over COT is consistent across models.

Delivering superior value in enhancing AI performance

PAL’s true value lies in its accuracy across a variety of benchmarks, where it has shown substantial improvements over existing models and set new state-of-the-art results. For example, it improved the GSM8K accuracy of Codex (an LLM that can parse and generate code) by 6.4 percentage points over chain-of-thought prompting, far more than the 2.3-point gain achieved by using an external calculator [1].
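Accuracy figures for PAL-style methods can be computed by executing each generated program and comparing its output with the reference answer. The sketch below shows one hypothetical way to score such programs; `run_program`, `accuracy`, the numeric tolerance, and the toy programs are assumptions for illustration, not the paper's evaluation code. An improvement like the one reported above is then the difference between two such accuracy scores on the same test set.

```python
import math


def run_program(program: str):
    """Run a generated program and return solution(), or None if it fails."""
    namespace = {}
    try:
        exec(program, namespace)
        return namespace["solution"]()
    except Exception:
        return None  # crashing or malformed programs are scored as wrong


def accuracy(programs, gold_answers, abs_tol=1e-6):
    """Percentage of programs whose executed result matches the gold answer."""
    correct = 0
    for program, gold in zip(programs, gold_answers):
        pred = run_program(program)
        if isinstance(pred, (int, float)) and math.isclose(pred, gold, abs_tol=abs_tol):
            correct += 1
    return 100.0 * correct / len(gold_answers)


# Toy "generated" programs: one correct, one wrong, one that raises NameError.
programs = [
    "def solution():\n    return 5 + 2 * 3",
    "def solution():\n    return (5 + 2) * 3",
    "def solution():\n    return undefined_name",
]
gold_answers = [11, 11, 11]
print(round(accuracy(programs, gold_answers), 1))  # 33.3
```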

The PAL approach also offers a broader perspective on semantic parsing: instead of parsing into a strict domain-specific language, the model generates free-form Python code. Combined with the fact that many LLMs are pretrained on large amounts of Python code, this makes Python a natural intermediate representation for reasoning tasks.
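The date-arithmetic program below is a hypothetical example of what free-form Python offers. A semantic parser targeting a fixed domain-specific language would need a dedicated operator for adding days to a date, while a generated Python program can simply call the standard library's `datetime` module. The question and code are illustrative, written in a `solution()` style similar to PAL-style prompts.

```python
from datetime import date, timedelta


def solution():
    # "Yesterday was April 30, 2021. What is the date tomorrow in MM/DD/YYYY?"
    yesterday = date(2021, 4, 30)
    today = yesterday + timedelta(days=1)   # month rollover handled by datetime
    tomorrow = today + timedelta(days=1)
    return tomorrow.strftime("%m/%d/%Y")


print(solution())  # 05/02/2021
```

Calendar details such as the end of April are handled by the library rather than by the model or a hand-written grammar, which is the kind of flexibility a strict DSL would have to anticipate in advance.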

Source: LinkedIn Post by Arjun Majumdar

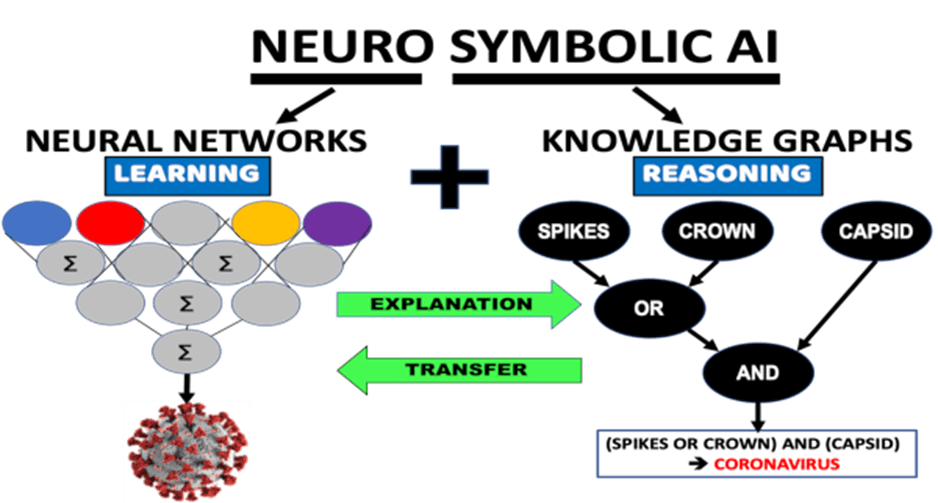

Looking forward: The future of neuro-symbolic AI reasoners

As AI continues to advance, the synergy between neural networks and symbolic reasoning exemplified by PAL offers an exciting direction for the future. The method illustrates how AI can combine the strengths of different systems, in this case an LLM and a Python interpreter, to achieve results that neither delivers on its own.

In conclusion, PAL pushes the boundaries of what’s achievable with generative AI. By seamlessly combining the power of LLMs with Python interpreters, PAL not only delivers superior AI performance but also sets the stage for the future of neuro-symbolic AI reasoners. As we move forward, the advent of techniques like PAL reaffirms that the possibilities with AI are endless, and we are just scratching the surface of its potential.

References

1. Gao, L., Madaan, A., Zhou, S., Alon, U., Liu, P., Yang, Y., … & Neubig, G. (2023, July). PAL: Program-aided Language Models. In International Conference on Machine Learning (pp. 10764-10799). PMLR.

About the Authors

Rudrendu Kumar Paul https://www.linkedin.com/in/rudrendupaul/

Rudrendu Kumar Paul is an AI expert and applied ML industry professional with over 15 years of experience across multiple sectors. He has held significant roles at top Fortune 50 companies, including PayPal and Staples. His professional proficiency spans Artificial Intelligence, Applied Machine Learning, Data Science, and Advanced Analytics Applications. He has applied AI to use cases in diverse sectors such as advertising, retail, e-commerce, fintech, logistics, power systems, and robotics.

In addition to his professional accomplishments, Rudrendu actively contributes to the startup ecosystem as a judge and expert at several global startup competitions. He reviews for prestigious academic journals published by IEEE, Elsevier, and Springer Nature. Rudrendu holds an MBA, an MS in Data Science from Boston University, and a Bachelor’s in Electrical Engineering.