Last week I read a paper from ETSI on protecting Artificial Intelligence models against threats and attacks.

This topic interests me because we cover it in the artificial intelligence for cybersecurity course at the University of Oxford. Protecting AI models from threats is becoming increasingly important but is not yet fully understood, so the paper is useful.

I summarise the key ideas here (link to the paper below).

First, some terminology (from the paper):

- adversarial examples: carefully crafted samples which mislead a model to give an incorrect prediction

- conferrable adversarial examples: subclass of transferable adversarial examples that exclusively transfer with a target label from a source model to its surrogates

- distributional shift: distribution of input data changes over time

- inference attack: attacks launched from deployment stage

- model-agnostic mitigation: mitigations which do not modify the addressed machine learning model

- model enhancement mitigation: mitigations which modify the addressed machine learning model

- training attack: attacks launched from development stage

- transferable adversarial examples: adversarial examples which are crafted for one model but also fool a different model with a high probability
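To make the first definition concrete, here is a minimal sketch of an adversarial example against a toy linear classifier. It is not taken from the paper: the weights, bias, input and the `craft_adversarial` helper are all made-up illustrative values and names, and the perturbation rule is a simple sign-based step in the spirit of gradient-sign attacks.

```python
# Toy linear classifier: predicts class 1 if w.x + b > 0, else class 0.
# WEIGHTS, BIAS and the input below are hypothetical values for illustration.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.25


def score(x):
    """Raw decision score of the linear model."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    """Class label derived from the sign of the score."""
    return 1 if score(x) > 0 else 0


def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def craft_adversarial(x, epsilon):
    """Move each feature by at most epsilon in the direction that pushes
    the score towards the opposite class (hypothetical helper)."""
    direction = -1 if predict(x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


original = [0.5, 0.2, 0.1]
adversarial = craft_adversarial(original, epsilon=0.2)

print("original prediction:   ", predict(original))
print("adversarial prediction:", predict(adversarial))
```

Each feature changes by no more than 0.2, yet the predicted class flips. Real attacks work on far larger models, but the idea is the same: a small, deliberately chosen change to the input misleads the model.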

While AI is a central part of life and business, it is also under increasing attack. The paper lists the threats and the mitigation strategies for AI-related cyber threats.

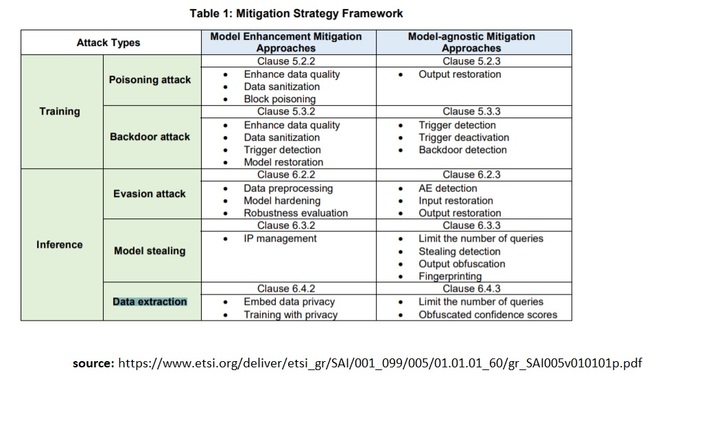

Mitigation approaches can be model enhancement or model-agnostic. As threats evolve, mitigation strategies should evolve too.

The threats can be summarised as follows:

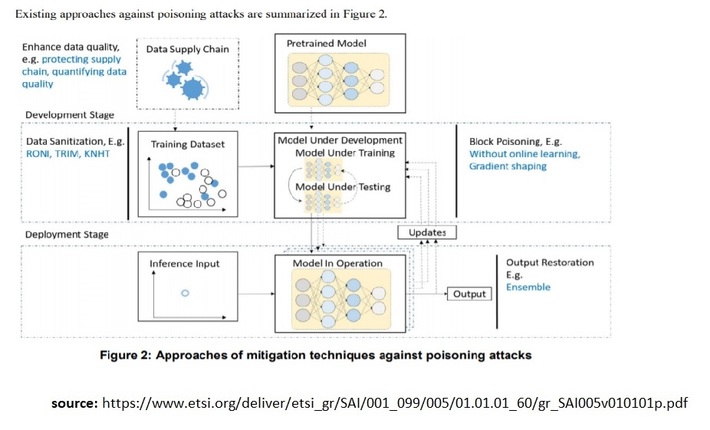

Specific threats have their own mitigation strategies, for example for poisoning attacks:

Poisoning attacks are designed to impair model performance over a wide range of inputs, and they often include attacks on availability. Poisoning attacks have been reported in inherently adversarial environments (such as spam filters), where they often aim to raise misclassification to the point where the system is barely usable, so that its intended functionality is no longer available. Such attacks are especially significant if the distribution of input data changes over time (distributional shift) and frequent model updates are used to keep up with the shift.
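As a rough illustration of the spam-filter case, the sketch below retrains a deliberately simple one-feature classifier on data that an attacker has poisoned. Nothing here comes from the paper: the feature (say, a count of suspicious words per message), the data points and the `train_threshold` and `accuracy` helpers are all hypothetical.

```python
def train_threshold(samples):
    """Fit a one-feature classifier: the decision threshold sits halfway
    between the mean of the ham samples (label 0) and the spam samples (label 1)."""
    ham = [x for x, label in samples if label == 0]
    spam = [x for x, label in samples if label == 1]
    return (sum(ham) / len(ham) + sum(spam) / len(spam)) / 2


def accuracy(threshold, samples):
    """Fraction of samples classified correctly (spam if x > threshold)."""
    correct = sum((x > threshold) == bool(label) for x, label in samples)
    return correct / len(samples)


# Hypothetical data: (feature value, label), label 1 = spam, 0 = ham.
clean_train = [(1, 0), (2, 0), (3, 0), (7, 1), (8, 1), (9, 1)]
test_set = [(2, 0), (3, 0), (6, 1), (7, 1), (8, 1)]

# Poisoning: the attacker injects spam-like messages labelled as ham,
# for example through a feedback channel used for frequent retraining.
poison = [(9, 0)] * 6
poisoned_train = clean_train + poison

clean_threshold = train_threshold(clean_train)
poisoned_threshold = train_threshold(poisoned_train)

print("accuracy after clean training:   ", accuracy(clean_threshold, test_set))
print("accuracy after poisoned training:", accuracy(poisoned_threshold, test_set))
```

The injected samples drag the threshold upwards, so genuine spam in the test set starts slipping through. A model that is retrained often on incoming data gives the attacker repeated opportunities to do this.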

The threats and mitigation strategies are summarised below as per the paper

Paper link: Securing Artificial Intelligence (SAI); Mitigation Strategy Report …

Many thanks to my student at #universityofoxford, François Ortolan, for recommending the paper.