I am frequently asked about the tools for Machine Learning projects. There are a lot of them on the market, so in this post you will find my view on them. The question usually goes like this: "I would like to start my first Machine Learning project, but I do not have the tools. What should I do? What tools could I use?"

I will give you some hints and advice based on the toolbox I use. Of course there are more great tools, but you should pick the ones you like. You should also use the tools that make your work productive, which sometimes means paying for them (though not always – I use free tools as well).

The first and most important thing is that there are lots of options! Just pick what works for you!

I have divided this post into several parts: the environments, the languages and the libraries.

THE ENVIRONMENT

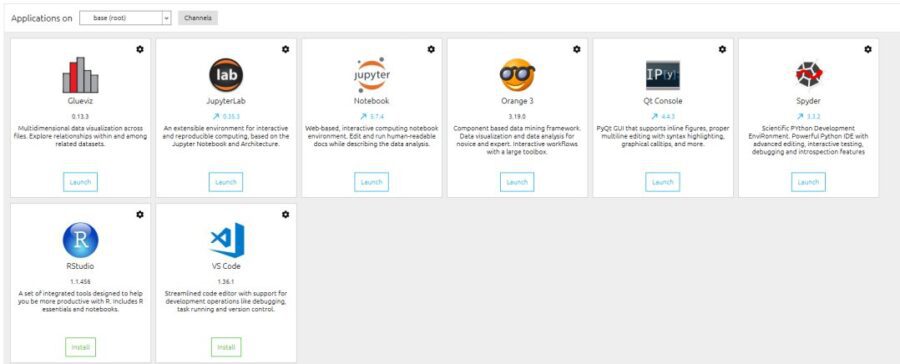

The decision about which environment to choose is really fundamental. I have three environments and use them as needed. The first, and the one I like, is Anaconda. It is an enterprise data science platform with lots of tools. It is designed for data scientists, IT professionals and business leaders alike. You can configure it for your project so that it contains only the tools and libraries you need. This can make your deployments easier (I am not saying it will be easy).

Anaconda – home page and the tools

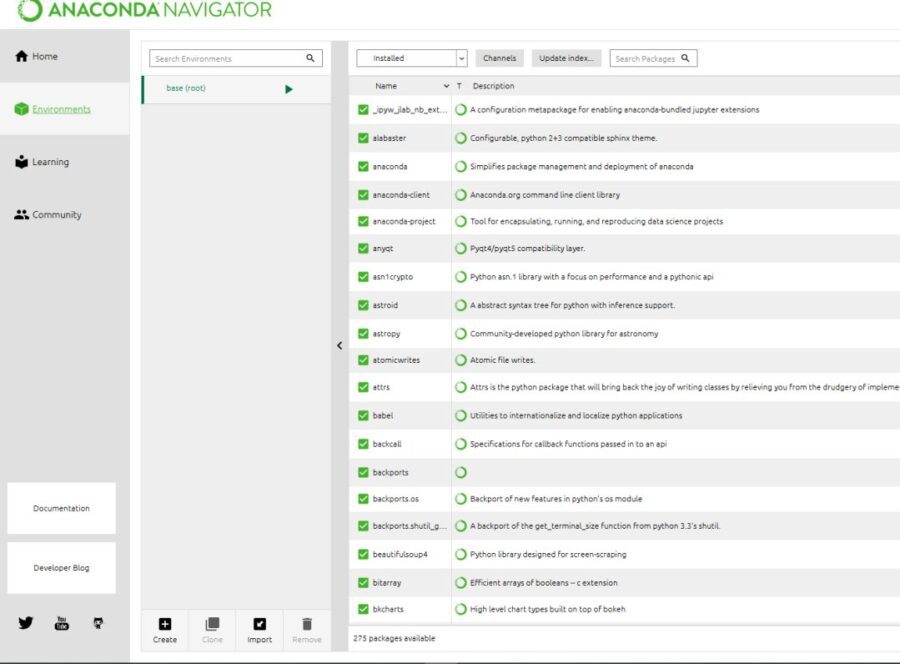

Creating an environment is super easy, assuming you know what you need! It is also possible to reconfigure the environment later. I think of an environment as a project.

The Anaconda environments

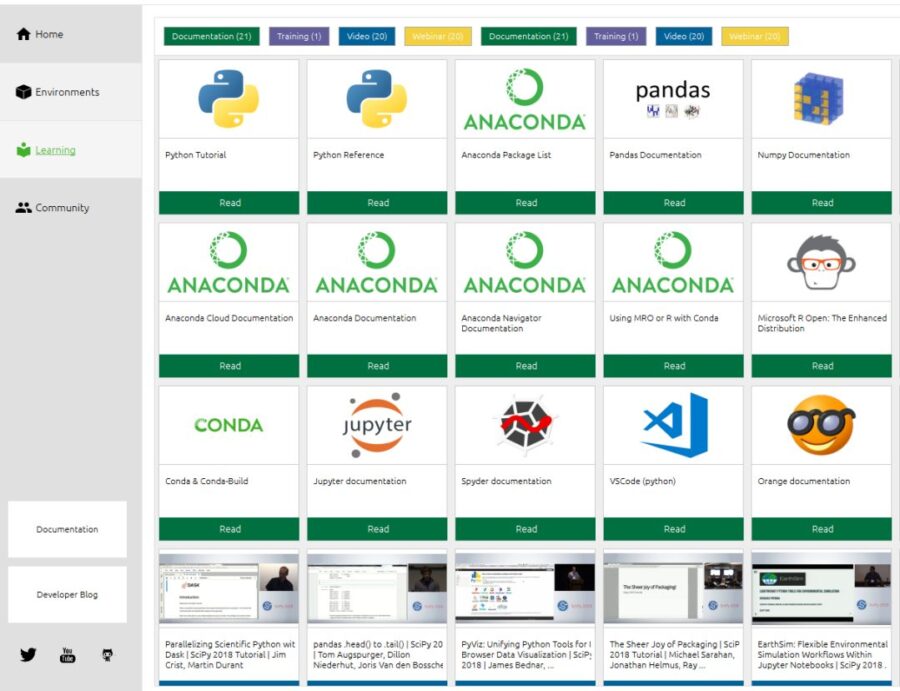

Anaconda also offers shortcuts to the Learning portal, where you can find not only the documentation but also a lot of useful materials like videos and blog posts. This is a great place to learn how to start working with a tool or to gain more knowledge.

The Anaconda learning

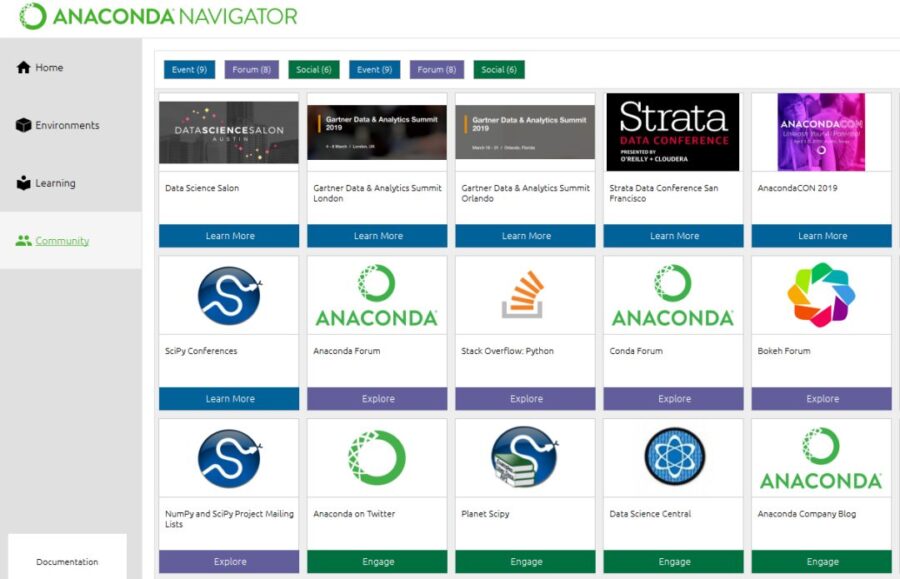

The last thing I would like to show here is the Anaconda Community tab. The community is really what makes our lives easier. You can share thoughts, learn, or just ask questions. As a proud member of the #SQLFamily community I know what I am saying… The community is the heart of the whole learning process, so do not forget to take part and share your knowledge!

The Anaconda community tab

By the way – you can install Miniconda (a minimal installation of Anaconda) and install everything else from the command line, as I have shown here:

cmd

cd documents

md project_name

cd project_name

conda create --name project_name

conda activate project_name

conda install --name project_name spyder

What have I done with the commands above? I started the cmd tool, then created a new project folder named project_name in the documents folder. Then I created an environment with the same name and activated it. The last line shows an example of how to install libraries or tools, in this case Spyder.

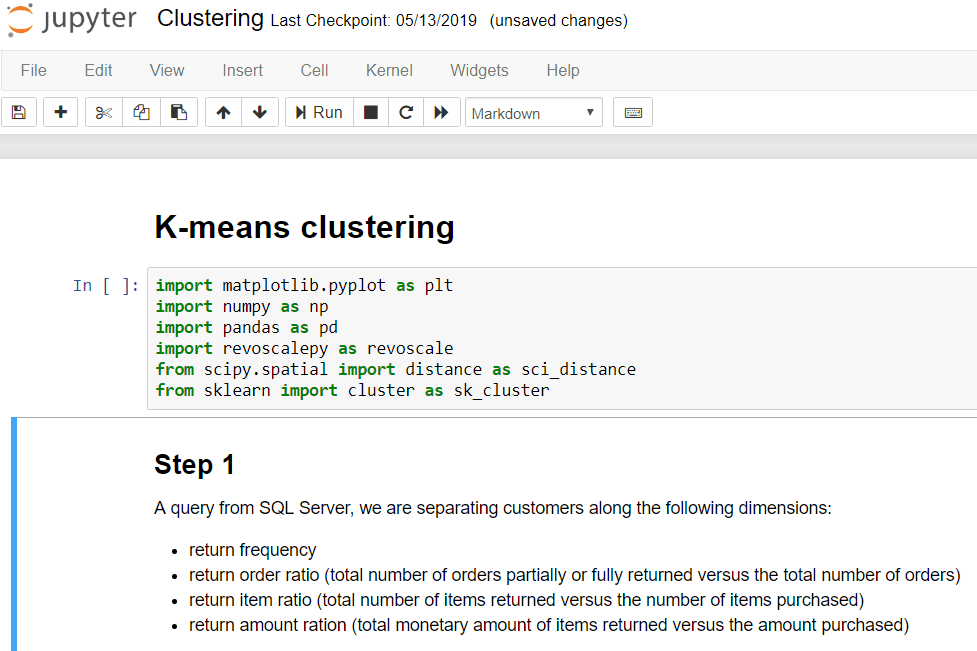

I use the Jupyter Notebook along with other tools (Orange, Spyder etc.) to do the modelling. The advantage of Jupyter Notebooks over the other tools is that you can write code and run it immediately, cell by cell, without compiling anything. Looks great, doesn't it? That is not all: I always like to document my code, and this is what you can do here. Take a look at the picture below – code and documentation live together peacefully!

Jupyter Notebook in action

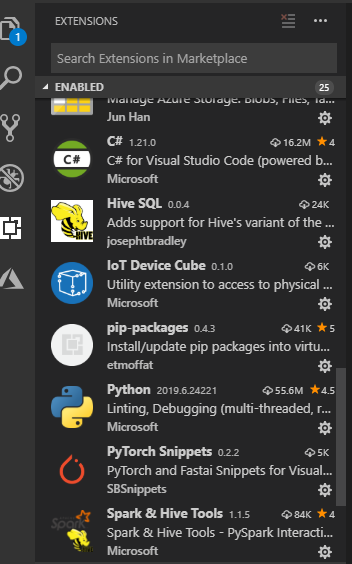

Now let’s move on to Visual Studio Code. I have been using Visual Studio since its very first release, so you will not be surprised that Visual Studio Code is my natural choice for many projects, including Machine Learning and AI.

A new version of Visual Studio Code is released roughly once a month, which makes this product unique.

You can customize your Visual Studio Code the way you need – just install all the extensions and start working with the code.

Visual Studio Code – my installed extensions

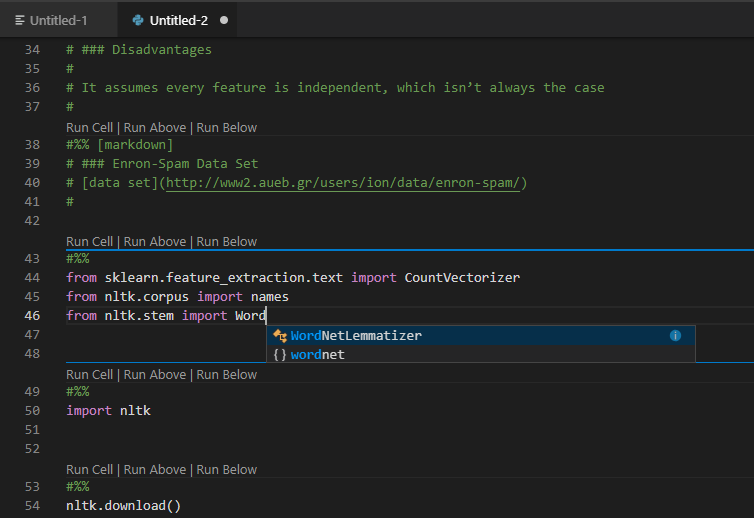

But this is not all. With Visual Studio Code you also get a powerful debugger, IntelliSense (!!!!) and built-in Git.

Visual Studio Code intellisense for Machine Learning project

What about the Visual Studio Code community? Yes, there is one! It is also powerful, so you will not get lost and you can get help if needed.

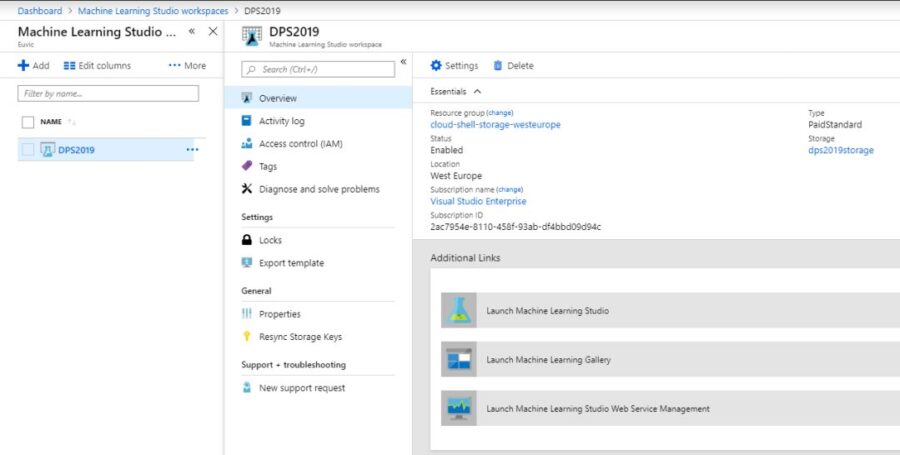

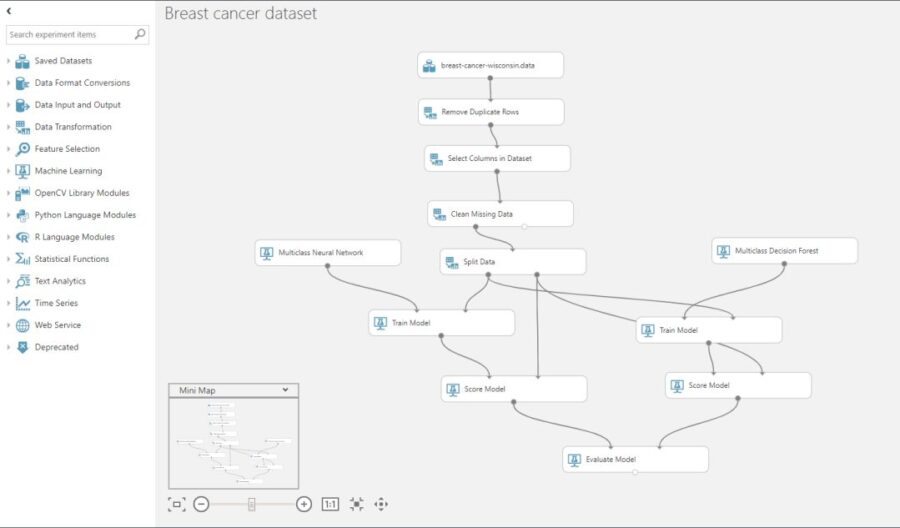

The last tool I would like to present is the Azure Machine Learning Studio. This is a graphical tool and it does not require any programming knowledge at all. You need to log in to the Azure Portal and create a Machine Learning Workspace.

Machine Learning Studio Workspace

There is a free version for developers, so you can start immediately. I suggest you start with the examples that are in the Gallery. Take a look at the one I have just picked and opened in the Studio:

Machine Learning Studio

As you can see, the Machine Learning Studio is oriented more towards the Machine Learning process (take a look at my recent article) than towards coding. Of course you can add as much code as you wish there as well.

THE LANGUAGES

I prefer to use Python, but the R language is also in scope. What I see is that R is mostly used by people from universities, whilst Python is used by data engineers and programmers. This is how it usually looks, but I am not making any assumptions. Please use the language you like and feel comfortable coding in. I will use both of them on the blog.

Both Python and R are powerful languages. They can easily manipulate data sets and perform complex operations on them.

Wait, do you know any other language that can handle data sets? Yes – it is the good, old T-SQL! I think you should at least know that SQL Server can mix T-SQL, Python and R! You can create powerful Machine Learning and AI solutions using SQL Server, and I will definitely show you how to do this later!

THE LIBRARIES

Now we move to the heart of Machine Learning modelling: the libraries that give you everything you need. You can prepare your data set, clean it, standardize it, perform regularization, pick an algorithm, create training/testing splits, train the model, perform scoring, plot the data and much more…

The decision about which library to use is really important. It is also driven by the language you use, as libraries are not transferable between Python and R.

I am going to describe some well-known (free of charge) libraries below, but we will learn more about them in the next posts, where I will be discussing the code itself.

PANDAS

This is one of the most popular libraries for data loading and preparation. It is frequently used with scikit-learn. It supports loading data from different sources like SQL databases, flat files (text, CSV, JSON, XML, Excel) and many more. It can do SQL-like operations, for example joining, grouping, aggregating and reshaping. You can also clean the data set, perform transformations and deal with missing values.
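To make the SQL-like operations a bit more concrete, here is a minimal sketch. The two tables, their column names and their values are made up for illustration only; in a real project you would load them from a file or a database instead.

```python
import pandas as pd

# Hypothetical sample data; table and column names are illustrative only.
customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "country": ["PL", "DE", "PL"],
})
orders = pd.DataFrame({
    "order_id": [10, 11, 12, 13],
    "customer_id": [1, 1, 2, 3],
    "amount": [100.0, None, 50.0, 70.0],
})

# Deal with missing values: replace the missing amount with 0.
orders["amount"] = orders["amount"].fillna(0.0)

# SQL-like JOIN on the shared key.
joined = orders.merge(customers, on="customer_id", how="inner")

# SQL-like GROUP BY with an aggregate.
total_per_country = joined.groupby("country")["amount"].sum()
print(total_per_country)
```

Filling the missing amount with 0 is just one possible choice here; depending on the data you may prefer to drop such rows or fill them with a mean or median.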

NUMPY

This is all about multidimensional arrays and matrices, and it is used for linear algebra operations. It is a core dependency of pandas and scikit-learn.
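As a small illustration of what "arrays and linear algebra" means in practice, the sketch below builds a 2x2 matrix and solves a system of linear equations with it. The numbers are arbitrary and chosen only for the example.

```python
import numpy as np

# A small matrix and a vector; the values are arbitrary.
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([3.0, 5.0])

# Basic array properties and element-wise arithmetic.
print(A.shape)   # (2, 2)
doubled = A * 2  # every element multiplied by 2, no loop needed

# Linear algebra: solve A @ x = b for x.
x = np.linalg.solve(A, b)
print(x)

# Check the solution by multiplying back.
print(np.allclose(A @ x, b))
```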

SCIKIT-LEARN

This is one of the most popular Machine Learning libraries today. You can find a lot of both supervised and unsupervised learning algorithms, such as linear and logistic regression, gradient boosting, SVM, Naive Bayes, k-means clustering and many more.

It also provides helpful functions for data preprocessing and scoring.

It is not the right choice for deep neural networks, as it is designed for classical Machine Learning: it offers only basic multi-layer perceptron models and has no GPU support.
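The typical steps (split the data, standardize it, train a model, score it) can be sketched in a few lines. This is only an illustration, assuming the Iris sample data set that ships with scikit-learn and a logistic regression model; the split size and the random seed are arbitrary choices of mine.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Load a small built-in sample data set (features X, labels y).
X, y = load_iris(return_X_y=True)

# Create the training/testing split; 25% of the rows are held out for testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Standardize the features, then fit a logistic regression
# (which applies L2 regularization by default).
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

# Score the model on data it has not seen during training.
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"Test accuracy: {accuracy:.2f}")
```

Putting the scaler and the model into one pipeline means the scaling parameters are learned from the training rows only, so nothing leaks from the test set.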

PYTORCH

This is the Deep Learning library built by Facebook. It supports both CPU and GPU computations. It can help you solve problems from the Deep Learning area, like medical image analysis, recommender systems, bioinformatics, image restoration etc.

PyTorch provides features like interactive debugging and dynamic graph definition.
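Dynamic graph definition means that the computation graph is built while your Python code runs, so ordinary `if` statements and loops can decide which operations are recorded. Here is a tiny sketch; the function `f` is a made-up example of mine, not something from the PyTorch library.

```python
import torch


def f(x):
    # Ordinary Python control flow decides which operations get recorded.
    if x.item() > 0:
        return x ** 2
    return -x


# A scalar tensor that tracks gradients.
x = torch.tensor(3.0, requires_grad=True)

y = f(x)      # for x = 3 the branch x ** 2 is taken
y.backward()  # compute dy/dx through the recorded operations

grad_value = x.grad.item()
print(grad_value)  # 6.0, because d(x^2)/dx = 2x = 6 at x = 3
```

Because the graph is just the result of running normal Python code, you can step through it with a regular debugger, which is what makes interactive debugging possible.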

TENSORFLOW

It has been built by Google. This is both a Machine Learning and a Deep Learning library. It supports many Machine Learning algorithms for classification and regression analysis. The great benefit is that it also supports Deep Learning tasks.

KERAS

It is a popular high-level Deep Learning library which uses various low-level libraries like TensorFlow, CNTK, or Theano as its backend. It should be easier to learn than TensorFlow and can use TensorFlow under the hood (which, for example, PyTorch cannot do).

XGBOOST

This library implements algorithms under the Gradient Boosting framework. It provides parallel tree boosting (also known as GBDT or GBM) that solves many data science problems in a fast and accurate way.

WEKA

I have used the Weka library in my R code when testing how association rules work. But it is also a powerful library for data preparation and for many types of algorithms, like classification and regression. It can also do clustering and perform visualization.

MATPLOTLIB AND SEABORN

These two libraries are used for data visualization. They are easy to use and help you create both very basic and very complex plots. You do not need to be an artist or a talented coder to make beautiful visualizations anymore.
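To give you a feeling for how little code a basic plot needs, here is a minimal sketch using Matplotlib only (Seaborn builds on top of it). The data is generated with NumPy, and the output file name `example_plot.png` is just a placeholder I picked for this example.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend, so this also runs without a display

import matplotlib.pyplot as plt
import numpy as np

# Generate some sample data to plot.
x = np.linspace(0, 2 * np.pi, 100)

fig, ax = plt.subplots()
ax.plot(x, np.sin(x), label="sin(x)")
ax.plot(x, np.cos(x), label="cos(x)")

# Label the axes and add a title and a legend.
ax.set_xlabel("x")
ax.set_ylabel("value")
ax.set_title("A basic line plot")
ax.legend()

# Save the figure to a file (placeholder file name).
fig.savefig("example_plot.png")
```

In a Jupyter Notebook you can skip the backend line and the `savefig` call, as the plot is shown right below the cell.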

WHAT ABOUT THE CLOUD SOLUTIONS?

Everything lives in the cloud now. This is also true for Machine Learning solutions. There are many cloud providers to choose from, but I will be showing most of my cloud solutions on Microsoft Azure. It has everything you need to get started. You can start from scratch and build your solution step by step, keeping control over everything. But you can also use the so-called Automated Machine Learning (yes, I will show you both ways!) to concentrate on the solution and not on the infrastructure. Think about how powerful this can be – you develop a model and Azure will deploy it for you as a containerized solution!

SUMMARY

Now you know the tools: the environments, the languages and the libraries. We can move forward to Machine Learning. The next post will be dedicated to a very simple but powerful example of a Machine Learning solution.

Please let me know if you would like me to elaborate on a specific tool. I will be very happy to do so in one of the future posts!

Originally posted here.