Summary: Quantum computing is now a commercial reality. Here’s the story of the companies that are currently using it in operations and how this will soon disrupt artificial intelligence and deep learning.

Like a magician distracting us with one hand while pulling a fast one with the other, Quantum computing has crossed over from research to commercialization almost without us noticing.

Is that right? Has the dream of Quantum computing actually stepped out of the lab into the world of actual application? Well, Lockheed Martin has been using it for seven years. Temporal Defense Systems, a leading-edge cyber security firm, is using one. And two Australian banks, Westpac and Commonwealth, and Telstra, an Australian mobile telecom company, are also in. Add to that the new IBM Q program offering commercial quantum compute time via API, where IBM says, “To date users have run more than 300,000 quantum experiments on the IBM Cloud”.

Of all the exotic developments to come out of the lab and into the world of data science, none can beat Quantum computing. Using the state or spin of photons or electrons (qubits) and exploiting entanglement, Einstein’s ‘spooky action at a distance’, these futuristic computers look like and program like nothing we have seen before. And their proponents claim they can consider all the possible solutions to a complex problem at once.

Has the Commercialization Barrier Really Been Breached?

When we look back at other technologies that have moved out of the lab and entered commercialization it’s usually possible to spot at least one key element of the technology that allowed for explosive takeoff.

In genomics it was Illumina’s low cost gene sequencing devices. In IoT I would point at Spark. And in deep learning I would look at the use of GPUs and FPGAs to dramatically accelerate the speed of processing in deep nets.

In Quantum it’s not yet clear what that single technology driver might be. What is clear is that within a very short period of time we have gone from one- and two-Qubit machines to 2,000-Qubit machines that are being sold commercially by D-Wave. Incidentally, D-Wave is on track to double that every year, with expectations of a 4,000-Qubit machine next year. The research firm MarketsandMarkets reports that Chinese researchers have developed a 24,000-Qubit machine, but it’s not available commercially.

What Commercial Applications are Being Adopted?

It appears that, while this is going to expand rapidly, current commercial applications are fairly narrow.

Lockheed Martin: For all its diversity, Lockheed Martin spends most of its time writing code for pretty exotic applications like missile and fire control and space systems. Yet, on average, half the cost of creating these programs goes to verification and validation (V and V).

In 2010 Lockheed became D-Wave’s first commercial customer after testing whether (now seven-year-old) Quantum computers could spot errors in complex code. Even that far back, D-Wave’s earliest machine found the errors in six weeks, compared to the many man-months Lockheed Martin’s best engineers had required.

Today, after having upgraded twice to D-Wave’s newest, largest machines, Lockheed has several applications, but chief among them is instantly debugging millions of lines of code.

Temporal Defense Systems (TDS): TDS is using the latest D-Wave 2000Q to build its advanced cyber security system, the Quantum Security Model. According to James Burrell, TDS Chief Technology Officer and former FBI Deputy Assistant Director, this new system will operate at a wholly new level, with real-time security level rating, device-to-device authentication, identification of long-term persistent threats, and detection and prevention of insider threats before network compromise and data theft occur.

Westpac, Commonwealth, and Telstra: While the Australians are committed to getting out ahead, their approach has been a little different. Commonwealth recently announced a large investment in a Quantum simulator, while Westpac and Telstra have made sizable ownership investments in Quantum computing companies focused on cyber security. The Commonwealth simulator is meant to give the bank a head start on understanding how the technology can be applied to banking, which is expected to include at least these areas:

- Ultra-strong encryption of confidential data.

- Continuously run Monte Carlo simulations which provide a bank with a picture of its financial position; currently these are run twice a day.

- Move anti-money-laundering compliance checks to real time.

The Australian efforts are all focused on a home-grown system being developed at the University of New South Wales, which expects to have a commercially available 10 Qubit machine by 2020, about three years from now.

QuantumX: There is now even an incubator focusing solely on Quantum computing applications called QuantumX with offices in Cambridge and San Francisco.

As for operational business uses, these applications are not overwhelmingly diverse, but this harkens back to about 2005, when Google was using the first NoSQL DB to improve its internal search algorithms. Only two years later the world had Hadoop.

Quantum Computing and Deep Learning

Here’s where it gets interesting. All these anomaly-detecting cybersecurity, IV&V, and Monte Carlo simulation applications are indeed part of data science, but what about deep learning? Can Quantum computing be repurposed to dramatically speed up Convolutional and Recurrent Neural Nets, and Adversarial and Reinforcement Learning, with their multitude of hidden layers that just slow everything down? As it turns out, yes it can. And the results are quite amazing.

The standard description of how Quantum computers can be used falls into these three categories:

- Simulation

- Optimization

- Sampling

This might throw you off the track a bit until you realize that pretty much any gradient descent problem can be seen as either an optimization or a sampling problem. So while the press over the last year has been feeding us examples about solving complex traveling salesman optimization problems, the most important action for data science has been in plotting how Quantum will disrupt the way we approach deep learning.

The exact scope of improvement and the methods being employed will have to wait for the next article in this series.

Does Size Count?

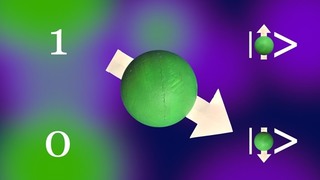

You may have read that IBM Q’s Quantum machine, available in the cloud via API, is 17 Qubits while D-Wave’s is now 2,000 Qubits. Does this mean IBM’s is tiny by comparison? Actually, no. IBM and D-Wave use two completely different architectures in their machines, and their compute capability is roughly equal.

D-Wave’s system is based on the concept of quantum annealing and uses a magnetic field to perform qubit operations.
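
To make the annealing idea concrete: an annealer is usually programmed by writing the problem as an energy function over binary "spins" (an Ising model), so that the lowest-energy configuration encodes the answer. The sketch below is a purely classical illustration of that kind of objective, solved by brute force on a toy problem. It is not D-Wave's API, and the function names and the three-spin coefficients `h` and `J` are hypothetical values chosen only for the example.

```python
from itertools import product


def ising_energy(spins, h, J):
    """Energy E(s) = sum_i h[i]*s[i] + sum_(i,j) J[(i,j)]*s[i]*s[j]."""
    linear = sum(h[i] * s for i, s in enumerate(spins))
    pairwise = sum(c * spins[i] * spins[j] for (i, j), c in J.items())
    return linear + pairwise


def brute_force_ground_state(h, J):
    """Try every assignment of +1/-1 spins and return the lowest-energy one.

    This costs 2**n evaluations for n spins, which is exactly why
    specialised hardware is attractive for larger problems.
    """
    best = min(product((-1, 1), repeat=len(h)),
               key=lambda s: ising_energy(s, h, J))
    return best, ising_energy(best, h, J)


# Hypothetical toy problem: three spins in a chain.
h = [0.5, 0.0, 0.5]               # per-spin bias
J = {(0, 1): 1.0, (1, 2): 1.0}    # positive coupling favours opposite spins

state, energy = brute_force_ground_state(h, J)
print(state, energy)  # (-1, 1, -1) -3.0
```

A physical annealer does not enumerate states this way; the brute-force loop only shows what "finding the minimum" means for this class of problem.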

IBM’s system is based on a ‘gate model’, which is considered both more advanced and more complicated. So when IBM moves from 16 qubits to 17 qubits, its computational ability doubles.
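
The doubling follows from simple arithmetic: describing the joint state of n qubits takes 2^n complex amplitudes, so each added qubit doubles the size of the state space. The short sketch below only counts amplitudes. It says nothing about noise, connectivity, or how much of that space a real device can use, and the function name is made up for this illustration.

```python
def state_vector_size(num_qubits: int) -> int:
    """Number of complex amplitudes needed to describe num_qubits qubits."""
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return 2 ** num_qubits


for n in (1, 2, 16, 17):
    print(f"{n:>2} qubits -> {state_vector_size(n):,} amplitudes")

# Going from 16 to 17 qubits doubles the count: 65,536 -> 131,072.
```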

There are even other architectures in development. The Australians for example made an early commitment to do this all in silicon. Microsoft is believed to be using a new topology and a particle that is yet to be discovered.

Then there’s the issue of reliability. Factors such as the number and quality of the qubits, circuit connectivity, and error rates all come into play. IBM has proposed a new metric to measure the computational power of Quantum systems which they call ‘Quantum Volume’ that takes all these factors into account.

So there’s plenty of room for the research to run before we converge on a common sense of what’s best. That single view of what’s best may be several years off, but, as with Hadoop in 2007, you can buy or rent one today and get ahead of the crowd.

Next time, more specifics on how this could disrupt our path to artificial intelligence and deep learning.

Other articles in this series:

Understanding the Quantum Computing Landscape Today – Buy, Rent, or Wait

Quantum Computing, Deep Learning, and Artificial Intelligence

The Three Way Race to the Future of AI. Quantum vs. Neuromorphic vs. High Performance Computing

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist and commercial predictive modeler since 2001. He can be reached at: