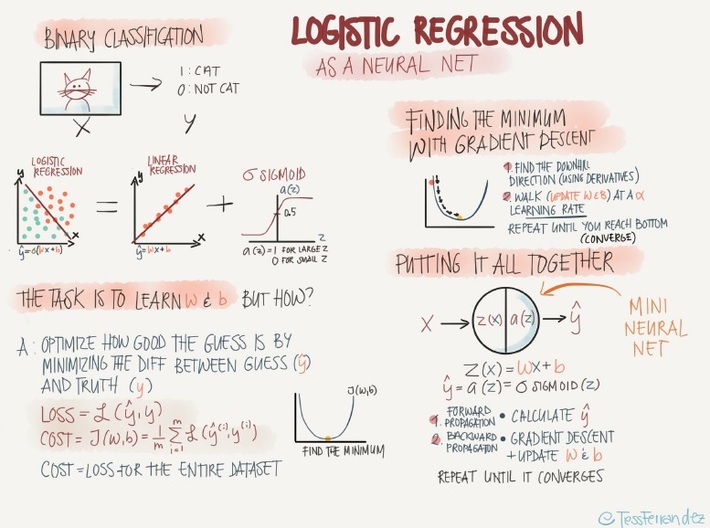

As a teacher of Data Science (Data Science for Internet of Things course at the University of Oxford), I am always fascinated by the connections between concepts. I noticed an interesting image on Tess Fernandez's SlideShare (which I very much recommend you follow) that presents logistic regression as a neural network.

Image source: Tess Fernandez

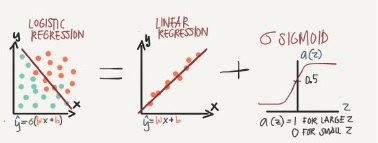

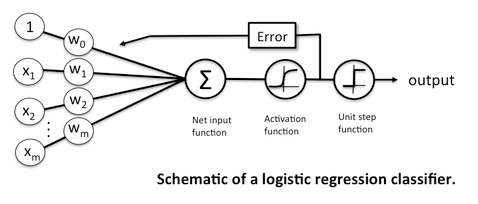

These pictures are self-explanatory once you break logistic regression down into a linear regression followed by a sigmoid function.
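To make the decomposition concrete, here is a minimal sketch in Python with NumPy. The function names (`sigmoid`, `predict_proba`), the bias term `b`, and the numeric values are assumptions chosen for illustration; they do not come from the slides.

```python
import numpy as np

def sigmoid(z):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def predict_proba(x, w, b):
    """Linear step (w . x + b) followed by the sigmoid activation."""
    z = np.dot(w, x) + b   # the "linear regression" part
    return sigmoid(z)      # the activation of the single output neuron

# Hypothetical input, weights, and bias, for illustration only
x = np.array([2.0, -1.0])
w = np.array([0.5, 1.0])
b = 0.0

y_hat = predict_proba(x, w, b)
print(y_hat)  # z = 0.5*2 + 1.0*(-1) + 0 = 0, and sigmoid(0) = 0.5
```

Viewed this way, the weights `w` are the connections into a single neuron and the sigmoid is its activation function.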

Image source: Tess Fernandez

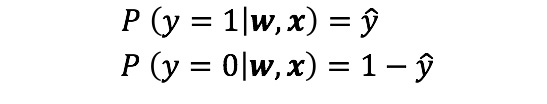

To recap, logistic regression is a binary classification method. It can be modelled as a function that takes any number of inputs and constrains the output to lie between 0 and 1. This means we can think of logistic regression as a one-layer neural network. For a binary output, if the true label is y (y = 0 or y = 1) and y_hat is the predicted output, then y_hat represents the probability that y = 1 given the input x and the weights w. Therefore, the probability that y = 0 given x and w is (1 - y_hat), as shown below.
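In symbols, the two cases can be written as follows. This is a sketch in standard notation: the bias term $b$ and the symbol $\sigma$ for the sigmoid are assumptions that do not appear in the text above.

$$
\hat{y} = \sigma(w^\top x + b), \qquad \sigma(z) = \frac{1}{1 + e^{-z}}
$$

$$
P(y = 1 \mid x; w) = \hat{y}, \qquad P(y = 0 \mid x; w) = 1 - \hat{y}
$$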

Images source: @melodious

Which gives us

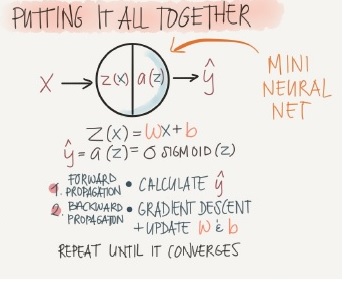

Image source: Tess Fernandez

Sebastian Raschka also provides a good explanation: we can think of logistic regression as a one-layer neural network, where applying a threshold function in the output layer gives us a binary classification.
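The thresholding step can be sketched in a few lines of Python. The function name `predict_label`, the 0.5 cut-off, and the sample data are assumptions for illustration, not taken from Raschka's explanation.

```python
import numpy as np

def predict_label(X, w, b, threshold=0.5):
    """One-layer network: linear step, sigmoid, then a threshold on the output."""
    z = X @ w + b                     # one linear score per row of X
    y_hat = 1.0 / (1.0 + np.exp(-z))  # probability that y = 1
    return (y_hat >= threshold).astype(int)

# Hypothetical data: two samples with two features each
X = np.array([[3.0, 1.0],
              [-2.0, 0.5]])
w = np.array([1.0, -1.0])
b = 0.0

labels = predict_label(X, w, b)
print(labels)  # scores are 2.0 and -2.5, so the predicted labels are [1 0]
```

With a cut-off of 0.5, thresholding the probability is the same as checking the sign of the linear score, since sigmoid(0) = 0.5.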

I hope you found this analysis useful as well.