This article was written by Alexandr Honchar.

People use deep learning for almost everything today, and the “sexiest” application areas are computer vision, natural language processing, speech and audio analysis, recommender systems and predictive analytics. But there is also one field that is unfairly forgotten in terms of machine learning: signal processing (and, of course, time series analysis). In this article, I want to show several areas where signals or time series are vital, then briefly review classical approaches, and finally move on to my experience applying deep learning to biosignal analysis at Mawi Solutions and to algorithmic trading. I have already given a couple of talks on this topic in Barcelona and Lviv, but I would like to make the materials a bit more accessible.

I am sure that people working with time series data are not the only ones who will benefit from this article. Computer vision specialists will learn how similar their domain expertise is to signal processing, NLP people will get some insights into sequential modeling, and other professionals can find interesting takeaways as well. Enjoy!

Sources of signals and time series

First of all, planet Earth and the space bodies around it are sources of signals: we measure the number and intensity of sunspots, temperature changes in different regions, wind speed, asteroid speeds and a lot of other things.

Of course, the most common examples of time series are related to business and finance: stock prices and all possible derivatives, sales in big and small businesses, manufacturing, website activity, energy production, political and sociological factors and many others.

We also can’t forget about humans as a great source of biosignals: brain activity (EEG), heart activity (ECG), muscle tension (EMG), and data from wearables such as pulse, accelerometer-based activity, sleep and stress indices. All these vital signals are becoming very popular today, and they need to be analyzed.

Surely, there are also other examples, such as data from vehicles, but I hope you can already see a very wide range of applications. I am personally very involved in biosignal analysis, in particular cardiograms: I’m responsible for ML at Mawi Band, a company that has developed its own medical-grade portable cardiograph that measures a first-lead ECG.

The coolest part is that ECG data shows not just the state of your heart as such: you can also track emotional state and stress level, physical state, drowsiness and energy, the influence of alcohol or smoking on your heart and a lot of other things. If you’re doing research and need to gather biomedical data to test your hypotheses (even the craziest ones), we have launched a platform to democratize the data collection and analysis process.

Classical approaches

Before the machine learning and deep learning era, people created mathematical models and approaches for time series and signal analysis. Here is a summary of the most important ones:

- Time domain analysis: this is all about “looking” at how a time series evolves over time. It can include analysis of the widths and heights of peaks, statistical features and other “visual” characteristics.

- Frequency domain analysis: many signals are better represented not by how they change over time, but by which frequencies they contain, with what amplitudes, and how those amplitudes change. Fourier analysis and wavelets are the tools to go with.

- Nearest neighbors analysis: sometimes we just need to compare two signals or measure the distance between them, and we can’t do this with regular metrics like Euclidean distance, because signals can be of different lengths and the notion of similarity is a bit different as well. A great example of a metric for time series is dynamic time warping.

- (S)AR(I)MA(X) models: a very popular family of mathematical models based on linear self-dependence within a time series (autocorrelation) that can explain future fluctuations.

- Decomposition: another important approach for prediction is decomposing a time series into logical parts that can be summed or multiplied to obtain the initial time series: a trend part, a seasonal part, and residuals.

- Nonlinear dynamics: we always forget about differential equations (ordinary, partial, stochastic and others) as a tool for modeling dynamical systems that are in fact signals or time series. It’s rather unconventional today, but features from DEs can be very useful for…

- Machine learning: everything above can provide features for any machine learning model we have. But in 2018 we don’t want to rely on human-biased mathematical models and features. We want this done for us by AI, which today means deep learning.
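To make a few of the classical tools above concrete, here is a minimal NumPy sketch. It is my own illustration, not code from the article, and the function names are hypothetical. It covers three of the tools: picking the dominant frequency with an FFT, dynamic time warping between sequences of different lengths, and a least-squares fit of a plain AR(p) model. AR(p) is only the simplest member of the (S)AR(I)MA(X) family, and a real project would more likely use a dedicated statistics library.

```python
import numpy as np


def dominant_frequency(x: np.ndarray, fs: float) -> float:
    """Frequency-domain analysis: return the strongest non-DC frequency in Hz."""
    spectrum = np.abs(np.fft.rfft(x - np.mean(x)))  # remove the mean so DC does not dominate
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return float(freqs[np.argmax(spectrum[1:]) + 1])  # skip the 0 Hz bin


def dtw_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Classic O(n*m) dynamic time warping with an absolute-difference cost."""
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # extend the cheapest of: insertion, deletion, or match
            cost[i, j] = d + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    return float(cost[n, m])


def fit_ar(x: np.ndarray, p: int) -> np.ndarray:
    """Least-squares fit of x_t = c + phi_1 x_{t-1} + ... + phi_p x_{t-p} + e_t.

    Returns [c, phi_1, ..., phi_p].
    """
    # each row: [1, x_{t-1}, x_{t-2}, ..., x_{t-p}]
    X = np.array([np.r_[1.0, x[t - p:t][::-1]] for t in range(p, len(x))])
    coef, *_ = np.linalg.lstsq(X, x[p:], rcond=None)
    return coef


fs = 100.0                                  # assumed sampling rate in Hz
t = np.arange(200) / fs                     # 2 seconds of samples
sine = np.sin(2 * np.pi * 5.0 * t)          # a clean 5 Hz sine
print(dominant_frequency(sine, fs))         # 5.0

# DTW aligns sequences of different lengths; these two match perfectly after warping
print(dtw_distance(np.array([0.0, 1.0, 2.0]), np.array([0.0, 1.0, 1.0, 2.0])))  # 0.0

rng = np.random.default_rng(0)
ar = np.zeros(2000)
for i in range(1, len(ar)):
    ar[i] = 0.8 * ar[i - 1] + rng.normal()  # simulated AR(1) with phi_1 = 0.8
print(fit_ar(ar, p=1))                      # phi_1 should come out close to 0.8
```

DTW's quadratic double loop is fine for short sequences. Long signals usually need a windowed or approximate variant.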

Deep learning

Deep learning is easy. From a practical point of view, you just need to stack layers in your favorite framework and be careful about overfitting. But everything is a bit more complex than that. Four years ago, leading researchers asked whether stacking layers is the best we can do, not for general AI, of course, but at least for signal processing. Four years later, we might have an answer: neural networks are extremely powerful tools for all the domains I showed in the previous sections. They win Kaggle competitions such as sales forecasting and web traffic forecasting, they surpass human accuracy in biosignal analysis, and they trade better than we do as well. In this section, I want to talk about the main deep learning approaches that give state-of-the-art results and why they work so well.

Recurrent neural nets

The first thing that comes to mind when we talk about any sequence analysis with neural networks (from time series to language) is the recurrent neural network. It was designed specifically for sequences: it maintains a hidden state, learns dependencies over time, is Turing complete and can deal with sequences of any length. But as recent research has shown, we barely use these benefits in practice. Moreover, we encounter numerous problems that prevent them from working with very long sequences, and that is exactly what we have in streaming signal processing with high sampling rates (e.g. hundreds of hertz). For more details, check the reading list in the conclusions of this article. From my personal experience, recurrent nets are good only when we deal with rather short sequences (10–100 time steps) with multiple variables at each time step (this can be a multivariate time series or word embeddings). In all other cases, we are better off with the next option.
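One well-known example of the long-sequence problems mentioned above is vanishing gradients. The sketch below is a hypothetical NumPy illustration, not the author's code. It shows a vanilla (Elman) RNN that carries a hidden state through a short multivariate sequence. A helper then measures how strongly the initial hidden state still influences step T. The recurrent matrix is rescaled to a spectral norm of 0.9, which is an arbitrary assumption for the demo. Under that assumption the influence shrinks roughly geometrically with sequence length, which is why thousands of raw samples are a poor fit for a plain RNN.

```python
import numpy as np


class ElmanRNN:
    """Minimal vanilla RNN: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""

    def __init__(self, n_in: int, n_hidden: int, spectral_norm: float = 0.9, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W_xh = rng.normal(scale=0.1, size=(n_hidden, n_in))
        W_hh = rng.normal(size=(n_hidden, n_hidden))
        # Rescale so the largest singular value of W_hh equals `spectral_norm`.
        self.W_hh = W_hh * (spectral_norm / np.linalg.norm(W_hh, 2))
        self.b = np.zeros(n_hidden)

    def forward(self, xs: np.ndarray) -> np.ndarray:
        """xs: (T, n_in) -> all hidden states, shape (T, n_hidden)."""
        h = np.zeros(self.W_hh.shape[0])
        states = []
        for x_t in xs:  # strictly sequential: step t needs h_{t-1}
            h = np.tanh(self.W_xh @ x_t + self.W_hh @ h + self.b)
            states.append(h)
        return np.array(states)

    def jacobian_norm(self, xs: np.ndarray) -> float:
        """Spectral norm of d h_T / d h_0: how much the initial state still matters at step T."""
        J = np.eye(self.W_hh.shape[0])
        for h_t in self.forward(xs):
            # chain rule: d h_t / d h_{t-1} = diag(1 - h_t^2) @ W_hh
            J = ((1.0 - h_t ** 2)[:, None] * self.W_hh) @ J
        return float(np.linalg.norm(J, 2))


rnn = ElmanRNN(n_in=3, n_hidden=16)
xs = np.random.default_rng(1).normal(size=(1000, 3))  # stand-in for a 3-variable series
print(rnn.forward(xs[:50]).shape)   # (50, 16)
print(rnn.jacobian_norm(xs[:10]))   # influence of h_0 after 10 steps
print(rnn.jacobian_norm(xs[:500]))  # after 500 steps: vanishingly small
```

Gated cells such as LSTM or GRU mitigate this decay but do not remove the step-by-step sequential cost.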

Convolutional neural nets

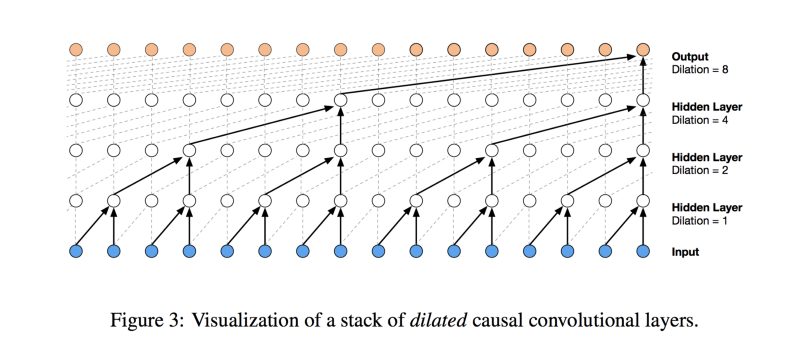

CNNs are great for computer vision because they can catch the finest details (local patterns) in images or even 3D volumetric data. Why don’t we apply them to even simpler 1D data? We definitely should, especially considering that all we need to do is take an open-source state-of-the-art architecture like ResNet or DenseNet and replace the 2D convolutions with 1D ones (no joke!). They show great performance, are fast, can be parallelized, and work well for both classification and regression, since a time series is defined by the combination of all its local patterns. I have benchmarked them many times, and they are mostly superior to RNNs. I can only add that, at the moment, when I am working with signals I have two main baselines: logistic regression and a 2–3-layer CNN.
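To illustrate the “replace 2D convolutions with 1D ones” idea, here is a minimal NumPy forward pass. It uses a ResNet-style residual block built from 1D convolutions, followed by global average pooling and a logistic output. This is roughly the shape of a small CNN baseline. It is only a sketch: the weights are random and untrained, the function names are hypothetical, and batch normalization and training are omitted. In practice you would use a deep learning framework's 1D convolution layer instead.

```python
import numpy as np


def conv1d(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """'Same'-padded 1D convolution (cross-correlation, as DL frameworks compute it).

    x: (C_in, L), w: (C_out, C_in, K) with odd K, b: (C_out,) -> returns (C_out, L).
    """
    k = w.shape[2]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad)))  # zero-pad along time only
    windows = np.lib.stride_tricks.sliding_window_view(xp, k, axis=1)  # (C_in, L, K)
    return np.einsum("cik,ock->oi", windows, w) + b[:, None]


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def residual_block_1d(x, w1, b1, w2, b2):
    """ResNet 'basic block' with 1D convolutions (batch norm omitted for brevity)."""
    out = relu(conv1d(x, w1, b1))
    out = conv1d(out, w2, b2)
    return relu(out + x)  # identity shortcut, so the channel count must stay the same


def init_params(channels: int = 8, k: int = 7, seed: int = 0) -> dict:
    """Random (untrained) weights; hypothetical sizes chosen only for the demo."""
    rng = np.random.default_rng(seed)
    return {
        "w0": rng.normal(scale=0.3, size=(channels, 1, k)), "b0": np.zeros(channels),
        "w1": rng.normal(scale=0.1, size=(channels, channels, k)), "b1": np.zeros(channels),
        "w2": rng.normal(scale=0.1, size=(channels, channels, k)), "b2": np.zeros(channels),
        "w_out": rng.normal(size=channels), "b_out": 0.0,
    }


def predict_proba(signal: np.ndarray, p: dict) -> float:
    """signal: (L,) -> probability of the positive class."""
    x = relu(conv1d(signal[None, :], p["w0"], p["b0"]))  # stem: 1 -> C channels
    x = residual_block_1d(x, p["w1"], p["b1"], p["w2"], p["b2"])
    pooled = x.mean(axis=1)                              # global average pooling over time
    logit = pooled @ p["w_out"] + p["b_out"]
    return float(1.0 / (1.0 + np.exp(-logit)))


params = init_params()
signal = np.random.default_rng(1).normal(size=1000)
print(predict_proba(signal, params))  # a probability; meaningless until the weights are trained
```

Because every output position depends only on a local window, all positions can be computed in parallel. That parallelism is one reason CNNs are faster than RNNs on long signals.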

CNN + RNN

RNNs and CNNs are what you might expect, but let’s consider more interesting models. Local patterns are good, but what if we still assume a temporal dependence between these patterns (while running RNNs over the raw signal is not the best option)? We just need to remember that convolutional nets are good at dimensionality reduction through various pooling techniques, and we can run recurrent neural nets over these reduced representations. Their “physical meaning” will then be “checking the dependency between local patterns over time”, which definitely makes sense for some applications. You can see a visual illustration of the concept above.
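The CNN + RNN idea can be sketched in a few lines of NumPy. This is a hypothetical illustration with random, untrained weights. A bank of 1D filters detects local patterns, ReLU and max pooling shrink the sequence, and a vanilla RNN then reads the pooled pattern sequence. The demo assumes 10 seconds of a signal sampled at 500 Hz (5,000 samples), filters of length 25 and a pooling window of 50. With those settings the RNN only has to walk through 99 steps instead of 5,000.

```python
import numpy as np


def conv_relu_pool(signal: np.ndarray, filters: np.ndarray, pool: int) -> np.ndarray:
    """Valid 1D convolution + ReLU + non-overlapping max pooling.

    signal: (L,), filters: (F, K) -> returns ((L - K + 1) // pool, F).
    """
    windows = np.lib.stride_tricks.sliding_window_view(signal, filters.shape[1])  # (L-K+1, K)
    feats = np.maximum(windows @ filters.T, 0.0)  # (L-K+1, F): local pattern detectors
    n = (feats.shape[0] // pool) * pool            # drop the tail that does not fill a window
    return feats[:n].reshape(-1, pool, feats.shape[1]).max(axis=1)


def rnn_final_state(seq: np.ndarray, W_xh: np.ndarray, W_hh: np.ndarray) -> np.ndarray:
    """Vanilla RNN over the pooled pattern sequence; returns the last hidden state."""
    h = np.zeros(W_hh.shape[0])
    for x_t in seq:  # one step per pooled window, not per raw sample
        h = np.tanh(W_xh @ x_t + W_hh @ h)
    return h


rng = np.random.default_rng(0)
fs, seconds = 500, 10                          # assumed sampling rate (Hz) and duration (s)
signal = rng.normal(size=fs * seconds)         # stand-in for a real recording
filters = rng.normal(scale=0.2, size=(8, 25))  # 8 untrained filters of length 25
patterns = conv_relu_pool(signal, filters, pool=50)
h = rnn_final_state(patterns,
                    W_xh=rng.normal(scale=0.1, size=(16, 8)),
                    W_hh=rng.normal(scale=0.1, size=(16, 16)))
print(signal.shape, patterns.shape, h.shape)   # (5000,) (99, 8) (16,)
```

The final hidden state `h` summarizes how the detected patterns follow each other and could feed a small classifier or regressor head.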

To read the whole article with illustrations, click here.