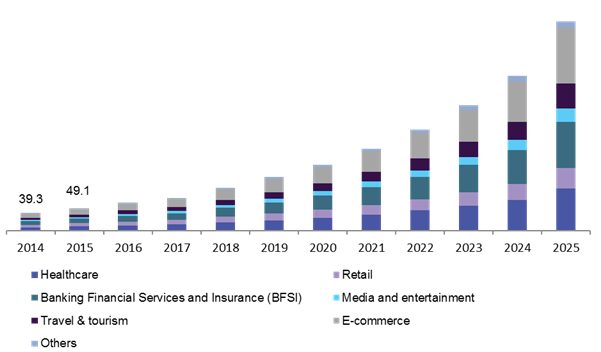

Chatbots are the hot thing right now. Many surveys claim that XX% of companies plan to deploy a chatbot in Y years. The accompanying infographic shows the chatbot market value in the US, in millions of dollars.

But where did it all start, and where are we now? In this article, we try to answer these questions.

ELIZA

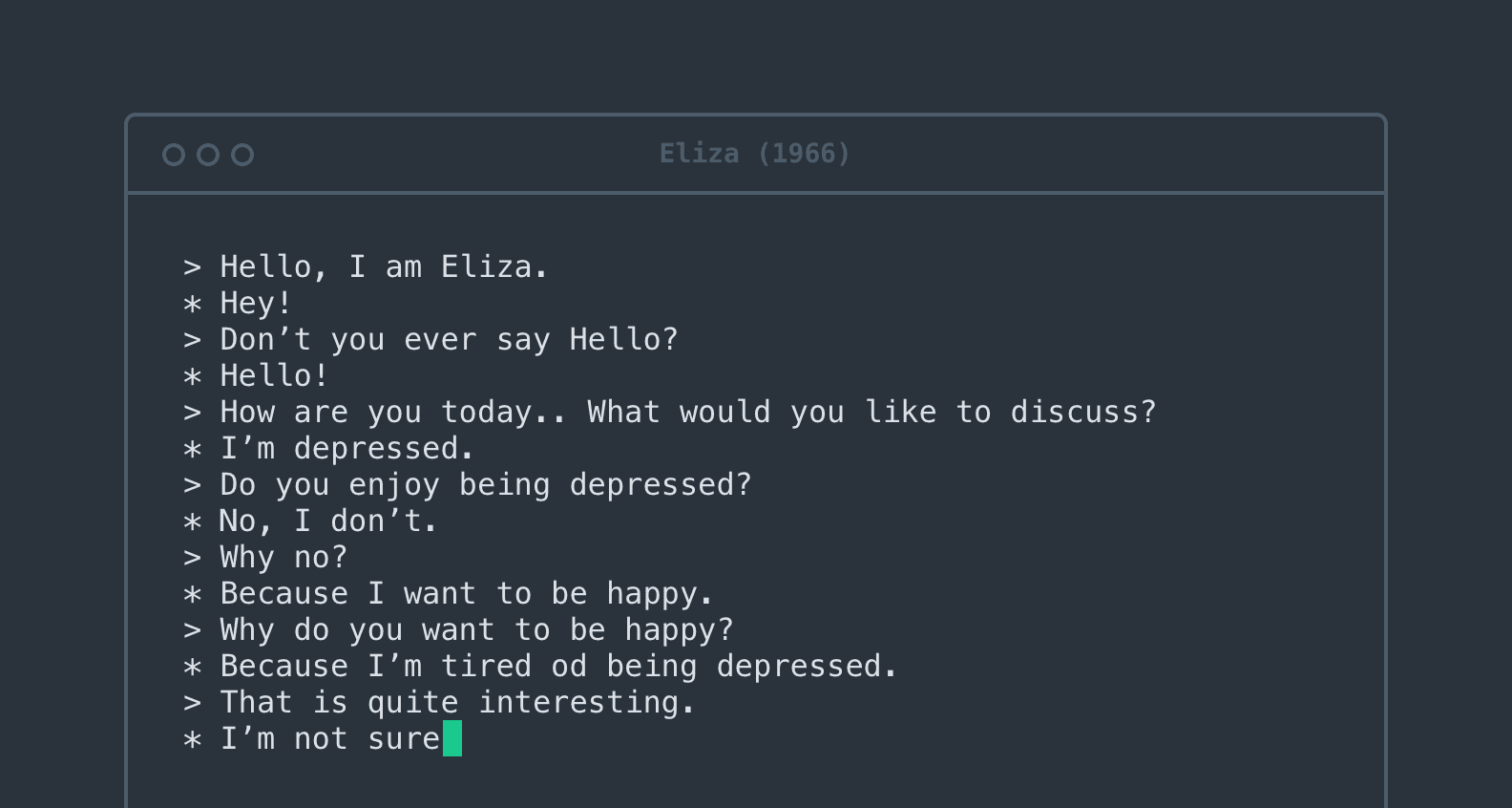

Joseph Weizenbaum, a German-born professor of computer science at the Massachusetts Institute of Technology, developed a program called ELIZA in 1966. It aimed to make users believe that they were having a conversation with a human. ELIZA is widely considered to be the first chatbot in the field of computer science.

How does it work? ELIZA's processing loop takes the user input, breaks it into sentences, and scans each sentence for a keyword or phrase. It applies a synonym list so that equivalent words map to the same keyword. For each keyword it finds, it looks for a matching pattern and uses that pattern to build a response. If the input does not match any of its stored patterns, it returns a generic response such as “Can you elaborate?”
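The processing loop described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Weizenbaum's original script: the `SYNONYMS` table, the `RULES` list, and the function names are all hypothetical and invented for this example.

```python
import re

# Hypothetical synonym list: maps equivalent words onto one keyword.
SYNONYMS = {"mom": "mother", "mum": "mother", "unhappy": "sad"}

# Hypothetical rules: (keyword, pattern to match, response template).
RULES = [
    ("mother", re.compile(r".*\bmy mother (.*)"), "Tell me more about your mother."),
    ("sad", re.compile(r".*\bi am sad\b(.*)"), "Why are you sad?"),
    ("i", re.compile(r".*\bi need (.*)"), "Why do you need {0}?"),
]

FALLBACK = "Can you elaborate?"


def normalize(sentence):
    """Lowercase the sentence and replace synonyms with their keyword."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return " ".join(SYNONYMS.get(word, word) for word in words)


def respond(user_input):
    """Return the response of the first rule whose keyword and pattern match."""
    # Break the input into sentences and scan each one in turn.
    for sentence in re.split(r"[.!?]+", user_input):
        text = normalize(sentence)
        words = text.split()
        for keyword, pattern, template in RULES:
            if keyword not in words:
                continue
            match = pattern.match(text)
            if match:
                # Reuse the captured part of the input in the reply.
                return template.format(*match.groups())
    # Nothing matched: fall back to a generic response.
    return FALLBACK


print(respond("I need a holiday."))
print(respond("The weather is nice"))
```

Notice that nothing here models meaning: the reply to "I need a holiday." simply echoes part of the input back inside a canned template.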

As you can see, ELIZA does not actually understand your input; it only matches keywords and patterns to pick its next response. However, let’s not be too hard on 1966.

ALICE

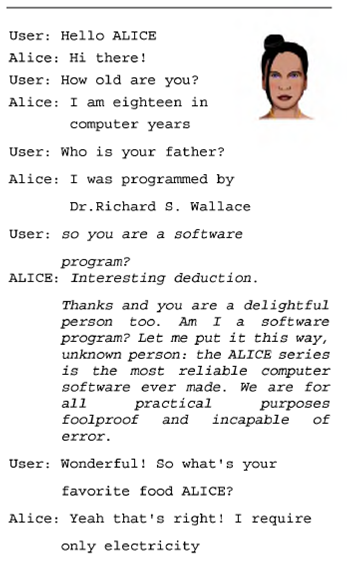

ALICE (Artificial Linguistic Internet Computer Entity) was developed by Richard Wallace in 1995. A key thing about ALICE is that it relies on many simple categories and rule matching to process the input. ALICE goes for size over sophistication: it makes up for its lack of morphological, syntactic, and semantic NLP modules by having a massive number of simple rules.

ALICE stores knowledge about English conversation patterns in AIML files. AIML, or Artificial Intelligence Mark-up Language, is a derivative of Extensible Mark-up Language (XML). It was developed by the Alicebot free software community during 1995-2000 to enable people to input dialogue pattern knowledge into chatbots based on the ALICE free software technology. AIML consists of data objects called AIML objects, which are made up of units called topics and categories. A topic is an optional top-level element; it has a name attribute and contains a set of categories related to that topic. Categories are the basic unit of knowledge in AIML. Each category is a rule for matching an input and converting it to an output. It consists of a pattern, which is matched against the user input, and a template, which specifies ALICE's answer.
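To make the pattern/template idea concrete, here is a minimal Python sketch that parses a small AIML fragment and answers from it. The AIML content, the function names, and the matching logic are assumptions made for illustration; this is not the ALICE interpreter. In particular, the sketch ignores topics when matching and supports only the `*` wildcard and the `<star/>` element.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical AIML fragment, written for this example only.
AIML = """
<aiml version="1.0">
  <topic name="GREETINGS">
    <category>
      <pattern>HELLO</pattern>
      <template>Hi there!</template>
    </category>
  </topic>
  <category>
    <pattern>MY NAME IS *</pattern>
    <template>Nice to meet you, <star/>.</template>
  </category>
</aiml>
"""


def load_categories(aiml_text):
    """Return (compiled pattern, template element) pairs in document order."""
    root = ET.fromstring(aiml_text)
    categories = []
    for category in root.iter("category"):
        pattern = category.find("pattern").text.strip()
        # Turn each "*" wildcard into a regex group that captures the words.
        body = "(.+)".join(re.escape(part) for part in pattern.split("*"))
        regex = re.compile("^" + body + "$", re.IGNORECASE)
        categories.append((regex, category.find("template")))
    return categories


def render(template, star):
    """Build the reply text, substituting <star/> with the captured words."""
    parts = [template.text or ""]
    for child in template:
        if child.tag == "star":
            parts.append(star)
        parts.append(child.tail or "")
    return "".join(parts).strip()


def respond(user_input, categories):
    """Answer with the first category whose pattern matches, else None."""
    text = re.sub(r"[^\w\s]", "", user_input).strip()  # drop punctuation
    for regex, template in categories:
        match = regex.match(text)
        if match:
            star = match.group(1) if match.groups() else ""
            return render(template, star)
    return None


categories = load_categories(AIML)
print(respond("My name is Ada!", categories))
```

Each new behaviour requires another category, which is why a bot built this way needs a very large number of rules to cover ordinary conversation.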

As you can see, it works pretty well. However, it’s not good enough. Natural language is complex and constantly evolving, so relying on a rule-based chatbot is not a viable long-term approach.

Neural Conversational Bots

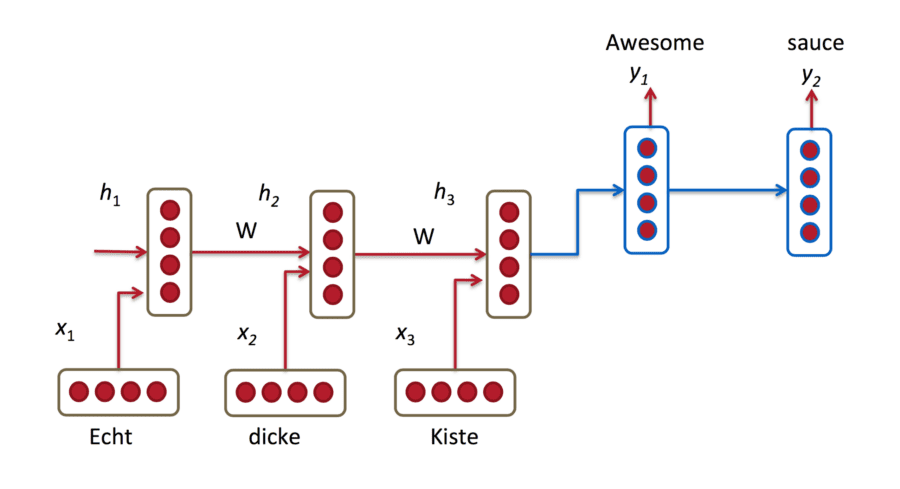

Deep Learning is today’s buzzword, and for good reason. The level of complexity these algorithms can learn once fed with vast amounts of data and sufficient computational capacity is just astounding. Models like Convolutional Neural Nets have proven their ability in image processing. In Natural Language Processing, models like Recurrent Neural Networks (RNN) and their advanced variants, such as Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU), have enabled us to take a step further towards actual Natural Language Understanding. Since these models represent the state of the art, let’s build an intuitive understanding of what’s happening.

Like any Machine Learning algorithm, Neural Nets learn from examples. That is, to predict the outcome for an unknown instance (say, the price of a house), you first need to train the model. How do you do that? Feed it existing instances whose outcomes you already know (say, information about houses whose prices are known). The model learns from this data and can then predict the outcome for a new instance whose value isn’t known. An RNN is a modification of the basic Neural Network that is specially built to process sequential data, i.e., data where the order matters. Sentences are a good example: the order of the words matters.
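The "learn from known examples, then predict for a new one" idea can be shown with the simplest possible learner. The sketch below is not a neural network: it fits a straight line by least squares, and the house sizes and prices are made-up numbers chosen so the result is easy to check.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up training data: house sizes and their already-known prices.
sizes = [50, 80, 100, 120]
prices = [150, 240, 300, 360]

# "Training": learn the parameters from the known examples.
slope, intercept = fit_line(sizes, prices)


def predict(size):
    """Predict the price of a house that was not in the training data."""
    return slope * size + intercept


print(predict(90))
```

A neural network follows the same train-then-predict pattern, only with many more parameters and a more flexible function than a straight line.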

Consider the example of language translation above. The RNN aims to translate German into English. On the left, we feed in the German sentence, word by word. The RNN processes a word (say, Echt) and passes on some information about it to the next step, where the next word (in this case, dicke) is processed. Finally, all the information about the sentence is encoded in a vector. This vector is then passed to a decoder, which returns the translated sentence in English. This is only a very high-level picture of how RNNs work.
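The encoding half of this process can be sketched in plain Python. Everything here is an assumption made for illustration: the class name `TinyEncoder`, the vector sizes, and above all the weights, which are random rather than trained, so the resulting vector carries no real meaning. The decoder is omitted entirely.

```python
import math
import random

HIDDEN_SIZE = 4  # length of the vector that summarises the sentence
EMBED_SIZE = 3   # length of each word vector


def make_matrix(rows, cols, rng):
    """Create a rows x cols matrix of random weights in [-1, 1]."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


class TinyEncoder:
    """Untrained toy RNN encoder: the weights are random, not learned."""

    def __init__(self, vocabulary, seed=0):
        rng = random.Random(seed)
        self.embeddings = {
            word: [rng.uniform(-1, 1) for _ in range(EMBED_SIZE)]
            for word in vocabulary
        }
        self.w_input = make_matrix(HIDDEN_SIZE, EMBED_SIZE, rng)
        self.w_hidden = make_matrix(HIDDEN_SIZE, HIDDEN_SIZE, rng)

    def step(self, hidden, word):
        """Combine the current word with the information passed along so far."""
        x = self.embeddings[word]
        new_hidden = []
        for i in range(HIDDEN_SIZE):
            total = sum(self.w_input[i][j] * x[j] for j in range(EMBED_SIZE))
            total += sum(self.w_hidden[i][j] * hidden[j] for j in range(HIDDEN_SIZE))
            new_hidden.append(math.tanh(total))
        return new_hidden

    def encode(self, words):
        """Feed the sentence word by word and return the final hidden vector."""
        hidden = [0.0] * HIDDEN_SIZE
        for word in words:
            hidden = self.step(hidden, word)
        return hidden


encoder = TinyEncoder(["Echt", "dicke"])
forward = encoder.encode(["Echt", "dicke"])
backward = encoder.encode(["dicke", "Echt"])
print(forward)
print(backward)
```

Because each step depends on the hidden state left by the previous word, feeding the same words in a different order produces a different vector. That is what makes the model sensitive to word order.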

You might ask: have we cracked the code to the perfect chatbot? No. However, many researchers believe that deep learning is the way to go unless something disruptive emerges in the future. One good thing about it is that it handles the task end to end, meaning we don’t have to define rules by hand. We only need to feed it high-quality data.

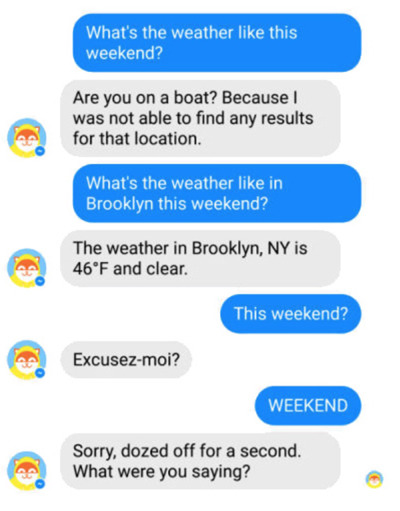

However, let’s not get misled by the hype. Even today, chatbots make many mistakes, as the example shown here illustrates.

You might have noticed that even the most advanced bots, like Alexa or Cortana, often fail to respond correctly. Quite often their best response to a question is a link that opens in a browser. The point is that if a business needs a chatbot, it should clearly define the problem it wishes to solve with it.

- For example, a chatbot can save customer helpline executives time and energy by handling simple queries automatically, allowing them to focus on issues that require human assistance.

- Another example is letting customers ask for a particular piece of information directly instead of navigating through nested web pages.

In conclusion, chatbots have been around for a long time, and they are very promising. However, we have yet to reach a point where they converse like you and me.