By enabling cost-effective terabyte-scale main memory, and by prompting third-party observers to describe a “profound” change in storage, Intel® Optane™ DC persistent memory modules change the way we think about memory capacity, tiered memory, out-of-core algorithms, storage speed, and, ultimately, what we can do on a computational “fat node” and with persistent storage.

A growing ecosystem of more than 50 OEMs, ISVs, and cloud service providers is exploring what is possible given the touted three-fold increase in memory capacity that Intel® Optane™ DC persistent memory provides.[i] As can be seen in the figure below, an Intel Optane DC persistent memory module looks very much like a standard DIMM. According to Intel, the modules are “even electrically and physically compatible with standard DDR4 slots but they are not intended as a DRAM replacement”.

Figure 1: An Intel Optane DC Persistent Memory Module

Several high-profile validation efforts demonstrate successful applications, with benefits in both performance and cost savings. We briefly cover several cloud and ISV success stories, then dig into third-party performance evaluations that show why Intel Optane DC persistent memory is so compelling.

Google Cloud highlights

Ned Boden (Senior Director, Global Technology Partnerships, Google Cloud) blogged that “In-memory workloads continue to be the backbone of innovative data management use cases, but as demand increases, so does the need for even larger memory capacity.” [ii] This sums up the benefit of the big-memory systems that can now be built with Intel Optane DC persistent memory.


Making this concrete, Google Cloud is the first public cloud provider to offer virtual machines (VMs) with Intel Optane DC persistent memory. The initial VMs contain seven terabytes (7 TB) of total memory. Those of us old enough will remember the remarkable increase in application capability that came with the transition from systems with megabytes of memory to gigabytes. Now we are on the cusp of terabytes of memory capacity. You too can explore these capabilities by signing up for access to the Google Cloud systems.

Intel and Google Cloud are also working with SAP to evaluate the benefits of big-memory VMs, backed by Intel Optane DC persistent memory, for SAP workloads. SAP says early customers have seen improved data reload times on these new VMs [iii], which is significant when spinning up new VM instances. According to the Google blog, “With lower total cost of ownership (TCO) compared to DRAM-only based VMs, GCP customers using this technology [Intel Optane DC persistent memory] no longer have to balance operational efficiency against cost.” [iv]


Other ISV success summaries

Various ISVs are using Intel Optane DC persistent memory to achieve lower latency, higher throughput, and greater application performance:

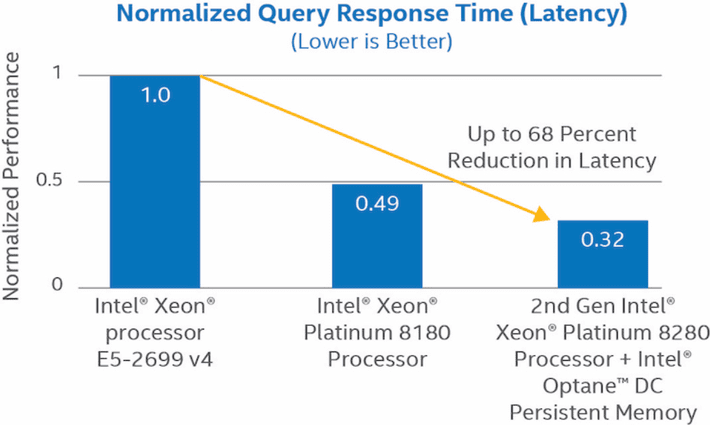

- AsiaInfo: AsiaInfo* Technologies is China’s largest business support systems (BSS) provider, and latency is a big concern because it directly affects the customer experience. With Intel Optane DC persistent memory, AsiaInfo can store more data closer to the processor, where it can be accessed rapidly for more responsive service, as shown below. [v]

Figure 2: Intel® Optane™ DC persistent memory reduces query response time (i.e., latency) for AsiaInfo* BSS.

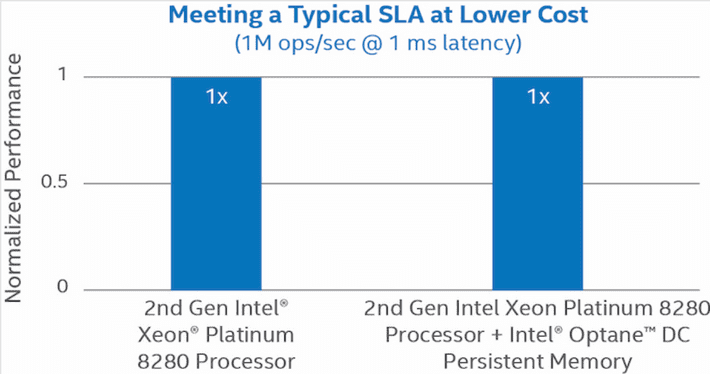

- Redis Labs: After optimizing the database, Redis Labs observed sub-millisecond latency at a lower cost than with traditional DRAM. In other words, systems using lower-cost Intel Optane DC persistent memory delivered equivalent performance on this benchmark. [vi]

Figure 3: Maintaining a typical customer service level agreement (SLA) with reduced server cost.

- Aerospike: Aerospike used Intel Optane DC persistent memory to preserve database indexes across reboots and power-off cycles, so software can be updated and security patches applied while still meeting redundancy requirements. Aerospike reduced database reload times by as much as 135x. [vii]

330 hours of machine time testing by the University of California, San Diego

The Non-Volatile Systems Laboratory (NVSL) at UC San Diego performed more than 330 hours of machine-time testing on a dual-socket machine containing 3 TB of Intel Optane DC persistent memory.[viii] As Izraelevitz et al. note, “This work comprises the first in-depth, scholarly, performance review of Intel’s Optane DC PMM, exploring its capabilities as a main memory device, and as persistent, byte-addressable memory exposed to user-space applications.”

The Intel Optane DC persistent memory capabilities

Like traditional DRAM DIMMs, Intel Optane DC persistent memory modules sit on the memory bus and connect to the processor’s onboard memory controller. The persistent memory operates in two modes: Memory Mode and App Direct Mode.

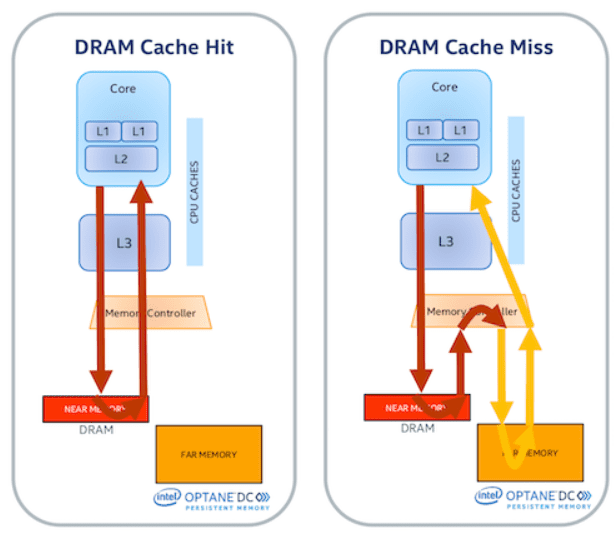

Memory Mode

Memory Mode uses Intel Optane DC persistent memory to expand main memory capacity without persistence. It pairs an Intel Optane DC persistent memory module with a conventional DRAM DIMM that serves as a direct-mapped cache for the persistent memory module. The cache block size is 4 KB, and the CPU’s memory controller manages the cache transparently. The CPU and operating system simply see a larger pool of main memory.
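To illustrate what “direct-mapped cache” means here, the following is a toy Python model of a DRAM cache with 4 KB blocks sitting in front of a larger, slower memory. It is a simplified sketch, not a model of Intel’s memory controller: the cache capacity is a hypothetical parameter, and tag storage, write-back behavior, and interleaving are all ignored.

```python
BLOCK_SIZE = 4 * 1024  # 4 KB cache block, as described in the text


class DirectMappedCache:
    """Toy direct-mapped cache: every memory block has exactly one DRAM slot.

    Illustrative assumption: `cache_bytes` is a hypothetical DRAM cache size,
    not the capacity of any real Memory Mode configuration.
    """

    def __init__(self, cache_bytes: int, block_size: int = BLOCK_SIZE):
        if cache_bytes % block_size:
            raise ValueError("cache size must be a multiple of the block size")
        self.block_size = block_size
        self.num_sets = cache_bytes // block_size
        self.tags = [None] * self.num_sets  # one tag per slot
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, fill the slot, evicting its old block."""
        block = address // self.block_size
        index = block % self.num_sets  # the single slot this block can occupy
        tag = block // self.num_sets   # distinguishes blocks that share the slot
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.misses += 1
        self.tags[index] = tag  # model fetching the block from persistent memory
        return False
```

With a 16 KB cache (four 4 KB slots), addresses 0 and 16384 map to the same slot and evict each other even though the other slots are empty. Such conflict misses are the price of the simple, fast lookup a direct-mapped design offers, and they are one reason performance in a DRAM-cached configuration can depend on a workload’s access pattern.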

Figure 4: Intel Optane DC persistent memory in memory mode

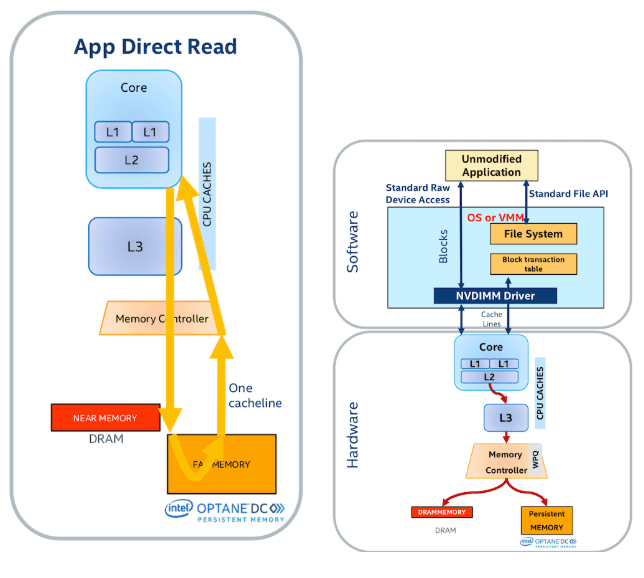

App Direct Mode

In App Direct mode, the persistent memory appears as a separate pool of memory. There is no DRAM cache. Instead, the system installs a file system to manage the persistent memory pools. Applications and file systems that are aware of Intel Optane DC persistent memory can access it directly with load and store instructions, using the cache-flush and ordering facilities of 2nd Generation Intel® Xeon® Scalable processors to enforce ordering constraints and ensure crash consistency. In this way, the integrity of the Intel Optane DC persistent memory as a persistent store can be maintained.
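The App Direct programming model can be sketched in a few lines of Python: map a file into the address space, update it with ordinary stores, then flush so the update is durable. This is an illustration under stated assumptions, not Intel’s API. The file path is hypothetical; on a real App Direct system it would sit on a DAX-mounted, persistent-memory-aware file system. Also, `mmap.flush()` (which issues `msync()` on POSIX systems) stands in for the finer-grained user-space cache flushes that Intel’s Persistent Memory Development Kit (PMDK) provides, such as libpmem’s `pmem_persist()`.

```python
import mmap
import os


def persist_record(path: str, offset: int, data: bytes, size: int = 4096) -> None:
    """Store `data` at `offset` in a memory-mapped file, then flush it.

    Assumption: `path` is a hypothetical file; on an App Direct system it would
    live on a DAX-mounted file system. On an ordinary disk the same code works,
    just through the page cache.
    """
    if offset < 0 or offset + len(data) > size:
        raise ValueError("record does not fit in the mapped region")
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        if os.fstat(fd).st_size < size:
            os.ftruncate(fd, size)  # make sure the file covers the mapping
        with mmap.mmap(fd, size) as region:
            region[offset:offset + len(data)] = data  # plain stores into the mapping
            region.flush()  # msync(): make the stores durable before returning
    finally:
        os.close(fd)


def read_record(path: str, offset: int, length: int) -> bytes:
    """Read back `length` bytes at `offset` using ordinary file I/O."""
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)
```

The key point the sketch captures is that, once mapped, the data is updated with memory stores rather than `write()` calls, and durability is an explicit step the application controls.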

Figure 5: Intel Optane DC persistent memory in App Direct mode

Encryption

Intel Optane DC persistent memory modules also offer on-module encryption via a 256-bit AES-XTS encryption engine.

- When Memory Mode is selected, the data is cryptographically erased by the controller on the module between power cycles, thus mimicking the volatile nature of DRAM.

- In App Direct mode, the persistent media is encrypted using a key stored in a security metadata region on the module that is accessible only to the Intel Optane DC persistent memory controller. The persistent memory is locked on a power loss event, and a passphrase is required to unlock it. Microsoft BitLocker and the Intel utility are two existing tools that support this capability.

Performance

The NVSL group notes that the most critical difference between Intel Optane DC persistent memory and DRAM is that the persistent memory has longer latency and lower bandwidth. (Intel notes that Intel Optane DC persistent memory has near-DRAM latency and bandwidth.) Load and store performance is also asymmetric. The NVSL report provides detailed bandwidth studies, and the reader is encouraged to examine it.

Memory Mode overview

Overall, the NVSL team observed that the Memory Mode caching mechanism works well for workloads with larger memory footprints.

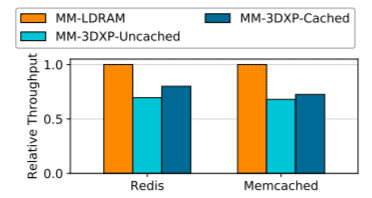

The following shows performance observed when using Memcached and Redis (each configured as a non-persistent key-value store) to manage a 96 GB data set. This was the largest size the NVSL team could fit into DRAM for comparison.

Figure 6: Large Key-Value Store Performance. Intel Optane DC persistent memory can extend the capacity of in-memory key-value stores like Memcached and Redis, and 2nd Gen Intel Xeon Scalable processors can use normal DRAM to hide some of Intel Optane DC persistent memory’s latency. The performance with uncached Intel Optane DC persistent memory is 4.8-12.6% lower than with cached Intel Optane DC persistent memory. Despite these performance losses, the persistent memory allows for far larger databases than DRAM alone, thanks to its density. Note: larger workloads could not fit into DRAM.

App Direct mode overview

Overall, the NVSL team (Izraelevitz et al.) observed that in App Direct mode, “Optane DC will profoundly affect the performance of storage systems”.

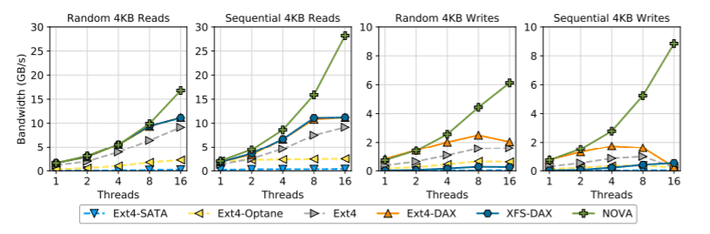

The data shown below highlights that Intel Optane DC persistent memory improves basic storage performance over a SATA flash-based SSD by a wide margin, for both file systems and applications. Again, the reader is encouraged to examine the NVSL report in detail.

Figure 7: Raw Performance in Persistent Memory File Systems. Intel Optane DC persistent memory provides a big boost for basic file access performance compared to SATA SSDs (“Ext4-SATA”) and Intel Optane DC-based SSDs (“Ext4-Optane”). The data also shows improved scalability.

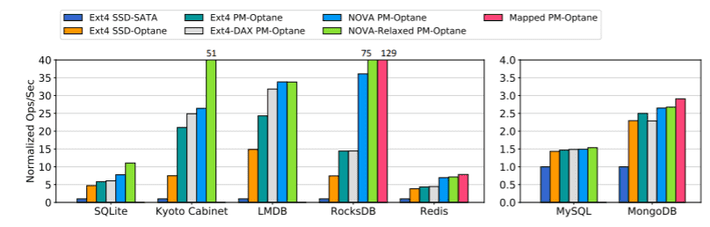

Figure 8: Application Performance on Optane DC and SSDs. These data show the impact of more aggressively integrating Optane DC into the storage system. Replacing flash memory with Optane DC in the SSD gives a significant boost, but for most applications deeper integration with hardware (i.e., putting the Optane DC on a DIMM rather than an SSD) and software (i.e., using a PMEM-optimized file system or rewriting the application to use memory-mapped Optane DC) yields the highest performance.

Summary

Early results are in, and we have to agree: putting large-capacity persistent memory on the memory bus will profoundly change how we use memory and storage.

Rob Farber is a global technology consultant and author with an extensive background in HPC and in developing machine learning technology that he applies at national labs and commercial organizations. Rob can be reached at [email protected].

[i] https://www.hpcwire.com/2019/04/03/google-cloud-unveils-roadmap-for…

[ii] https://cloud.google.com/blog/topics/partners/available-first-on-go…

[iii] https://cloud.google.com/intel/

[iv] ibid

[v] https://www.intel.com/content/www/us/en/processors/xeon/scalable/so…

[vi] https://software.intel.com/en-us/articles/intel-optane-dc-persisten…

[vii] ibid