Blog key points:

- Google open-sourced TensorFlow to gain tens of thousands more users across hundreds, if not thousands, of new use cases, and in doing so improve the predictive effectiveness of the platform that runs Google’s business.

- In the digital economy, the economies of learning are more powerful than the economies of scale.

- Organizations must avoid building orphaned analytics: one-off analytics developed to address a specific business need but never “operationalized” or packaged for re-use across the organization.

- Instantaneous acceleration is the acceleration of an object at a specific moment in time, as measured by the second derivative of its position with respect to time.

What does Google know that the other 99.99% of organizations don’t? I don’t roam the hallowed hallways of Google or have access to any insider secrets as to their business plans and future business models, but it sure does make one contemplate why they would give away the very engine that fuels their obscenely-lucrative search business.

You see, Google uses Machine Learning and Deep Learning to fuel its Personal Photo App (it auto-magically groups your photos into storyboards or collages), recognize spoken words (natural language processing), translate foreign languages (handy for me as I frequently travel internationally), and serve as the basis for its search engine. Arguably, Machine Learning and Deep Learning are the foundation for everything that makes Google money, and TensorFlow is the foundation for those Machine Learning and Deep Learning efforts.

So why would Google open source TensorFlow and make it accessible to everyone – researchers, scientists, machine learning experts, students, and even its competitors? The Forbes article “Reasons Why Google’s Latest AI-TensorFlow is Open Sourced” gives us a glimpse into the answer:

“In order to keep up with this influx of data and expedite the evolution of its machine learning engine, Google has open sourced its engine TensorFlow.”

Google is using an open source strategy to get more folks to test and refine TensorFlow in new ways that will ultimately improve its predictive effectiveness and make the products that are based on TensorFlow even more effective.

“In open sourcing the TensorFlow AI engine, CrowdFlower CEO Lukas Biewald says, ‘Google showed that, when it comes to AI, the real value lies not so much in the software or the algorithms as in the data needed to make it all smarter. Google is giving away the other stuff but keeping the data.[1]’”

By open-sourcing TensorFlow, Google can more easily introduce new ideas and applications, ultimately making the engine that runs Google even that much more powerful, beyond what they could do on their own. They are letting the world – including their competitors – improve the very engine that powers Google’s business models and in effect, challenging anyone to beat them at their own game…a ballsy and brilliant move.

Economic Value of Data

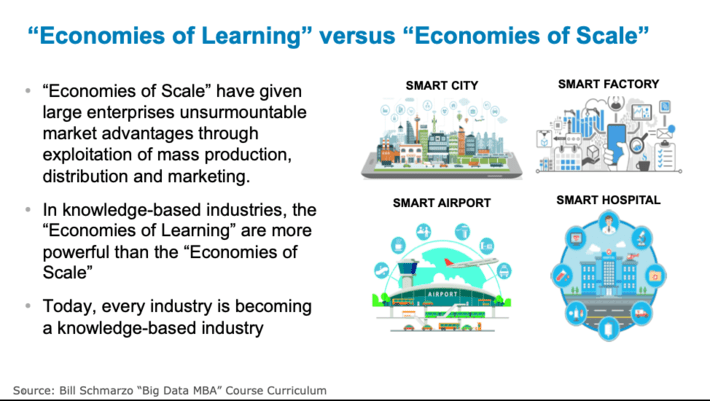

Google knows something that very few organizations have grasped: in a knowledge-based industry, the “Economies of Learning” are more powerful than the “Economies of Scale.” And given the movement towards Digital Transformation and smart spaces, all organizations are competing in knowledge-based industries (see Figure 1).

Figure 1: All industries transforming into knowledge-based industries

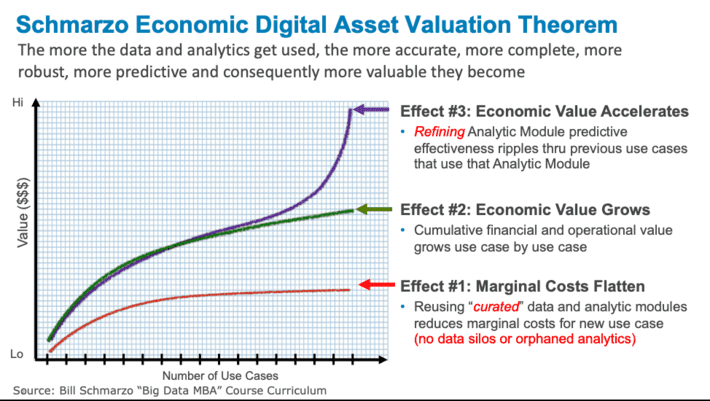

My blog “Why Tomorrow’s Leaders MUST Embrace the Economics of Digital Transf…” introduced the Schmarzo Economic Digital Asset Valuation Theorem. The Theorem takes into account a digital asset’s unique ability to simultaneously drive down marginal costs (via digital economies of scale) while accelerating the economic value creation of digital assets (via digital asset reusability). See Figure 2.

Figure 2: The Schmarzo Economic Digital Asset Valuation Theorem

The Theorem yields three economic “effects” that are unique for digital assets:

- Digital Asset Economic Effect #1: Marginal Costs Flatten. Since data never depletes, never wears out and can be reused at near zero marginal cost, the marginal costs of sharing and reusing “curated” data flatten.

- Digital Asset Economic Effect #2: Economic Value Grows. Sharing and reusing the packaged/operationalized Analytic Modules across multiple use cases drives an accumulated increase in economic value.

- Digital Asset Economic Effect #3: Economic Value Accelerates. Economic value of analytic models accelerates because refinement of one analytic module lifts the value of all associated use cases.

A future blog will address Effects #1 and #2 and the important role of “curated data.” This blog will drill into how Google is leveraging Effect #3 to enable the “Economic Value Acceleration” of its analytic assets to drive their business models (see Figure 3).

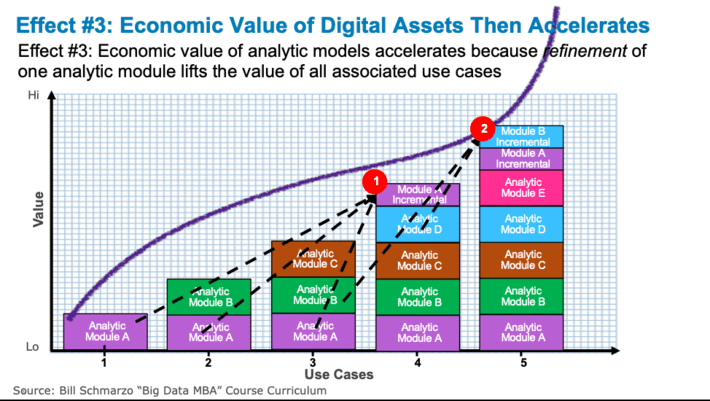

Figure 3: Schmarzo Economic Digital Asset Valuation Theorem – Economic Value Accelerates

Figure 3 shows that improvements in predictive effectiveness realized in one use case built from a common analytic module ripple back beneficially to all associated use cases. For example, let’s say I have an Anomaly Detection analytic module (Anomaly Detection 1.0) that I have used for multiple use cases including Vendor Delivery Management, Vendor Quality Assurance, O&E Inventory Reduction, Supply Chain Management Optimization, and Customer Retention.

Now along comes our next use case, Marketing Campaign Effectiveness, which also wants to use that same Anomaly Detection analytic module. However, in the development of this use case, the data science team discovers new data enrichment and feature engineering techniques that improve the predictive effectiveness of the Anomaly Detection module (yielding Anomaly Detection 2.0). All the previous use cases that used Anomaly Detection 1.0 now experience an increase in effectiveness simply by upgrading to the refined Anomaly Detection 2.0. And those use cases get that improvement at a near zero cost (the only significant cost is the regression testing of the use case, which is probably done via an automated software testing harness anyway).
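
To make the ripple effect concrete, here is a minimal Python sketch of the scenario above. It is only an illustration under stated assumptions: the `AnalyticModule` and `UseCase` classes are hypothetical, and the effectiveness scores (0.70 and 0.80) are made-up numbers, not measurements. The point it tries to show is that use cases which reference one shared module, rather than each keeping a private copy, all pick up a refinement at once.

```python
from dataclasses import dataclass


@dataclass
class AnalyticModule:
    """A packaged, reusable analytic module (hypothetical illustration)."""
    name: str
    version: str
    effectiveness: float  # made-up predictive-effectiveness score in [0, 1]


@dataclass
class UseCase:
    """A business use case that delegates to a shared analytic module."""
    name: str
    module: AnalyticModule

    def effectiveness(self) -> float:
        # The use case inherits whatever the shared module currently delivers.
        return self.module.effectiveness


# One shared module, referenced (not copied) by every use case.
anomaly_detection = AnalyticModule("Anomaly Detection", "1.0", effectiveness=0.70)

use_cases = [
    UseCase(name, anomaly_detection)
    for name in [
        "Vendor Delivery Management",
        "Vendor Quality Assurance",
        "O&E Inventory Reduction",
        "Supply Chain Management Optimization",
        "Customer Retention",
    ]
]
before = [uc.effectiveness() for uc in use_cases]

# The Marketing Campaign Effectiveness work refines the module in place.
use_cases.append(UseCase("Marketing Campaign Effectiveness", anomaly_detection))
anomaly_detection.version = "2.0"
anomaly_detection.effectiveness = 0.80  # assumed improvement, for illustration only

after = [uc.effectiveness() for uc in use_cases[:5]]
for uc, old, new in zip(use_cases, before, after):
    print(f"{uc.name}: {old:.2f} -> {new:.2f}")
```

Had each use case kept its own copy of Anomaly Detection 1.0, none of the five earlier use cases would have changed. That is the orphaned-analytics situation in miniature.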

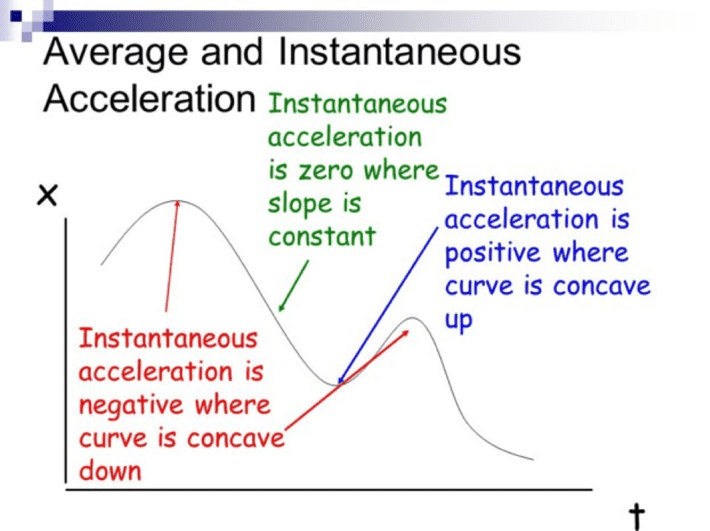

The economic value of analytic models accelerates because the refinement of one analytic module lifts the value of all associated use cases. This is the point of instantaneous acceleration in the creation of economic value.

Nerd Alert! Instantaneous acceleration is the acceleration of an object at a specific moment in time, as measured by the second derivative of its position with respect to time. On the graph of a function, the second derivative corresponds to the curvature or concavity of the graph. The graph of a function with a positive second derivative is concave up (see Figure 4).

Figure 4: Slide from Average and Instantaneous Acceleration
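
For readers who want to see the second derivative at work, here is a small Python sketch that estimates it numerically with a central finite difference. The two value curves are hypothetical and chosen only for illustration: a straight line (value grows, but does not accelerate) and a parabola (value grows and accelerates). Neither is meant as a real model of economic value.

```python
def second_derivative(f, t, h=1e-3):
    """Central finite-difference estimate of f''(t)."""
    return (f(t + h) - 2.0 * f(t) + f(t - h)) / (h * h)


def linear_value(t):
    # Hypothetical curve: value grows at a constant rate, so no acceleration.
    return 3.0 * t


def accelerating_value(t):
    # Hypothetical curve: value grows faster over time (concave up).
    return t ** 2


linear_accel = second_derivative(linear_value, 3.0)
curved_accel = second_derivative(accelerating_value, 3.0)

print(f"linear curve:       f''(3) is about {linear_accel:.4f}")
print(f"accelerating curve: f''(3) is about {curved_accel:.4f}")
```

A second derivative near zero means the curve is a straight line at that point; a positive one means the curve is bending upward, which is the "acceleration" this blog is talking about.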

Beware the economic-value-destroying ramifications of “orphaned analytics.” Orphaned Analytics are one-off analytics developed to address a specific business need but never “operationalized” or packaged for re-use across the organization. As organizations gain expertise in developing machine learning and deep learning-fueled analytics, it becomes easier to just build new analytics than to invest the time to create packaged, reusable analytics.

Resist the easy path and learn to build and operationalize your analytics as packaged, reusable analytic modules!

Recommendations for Exploiting the Economic Value of Data Today

So, what do organizations that don’t share Google’s tremendous advantage need to do to exploit the economic value of digital assets?

Here are my recommendations:

- Stop creating “orphaned analytics”. Embrace an analytics development process similar to the one that you use for your software development process including indexing, cataloging, documentation, version control management, regression testing and support.

- Once you have created these packaged, reusable analytic assets, then embrace a data science testing, learning and refinement methodology that constantly seeks to improve the predictive effectiveness of these analytic assets.

- Finally, knock down the organizational walls that prevent organizations from sharing their analytic assets across multiple use cases. If you can’t share the packaged analytic modules, then you can’t get the exhilaration of the point of instantaneous acceleration in the creation of value.
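
As a rough sketch of what the first two recommendations could look like in practice, the Python below keeps versioned detectors in a small catalog and only promotes a refined version if it still passes every use case's regression check. Everything here is an assumption made for illustration: the two detector rules, the sample readings, the expected results and the `passes_regression` helper are hypothetical, not a description of any particular tool or of how Google does it.

```python
from typing import Callable, Dict, List, Tuple

# A detector takes readings and returns the indices it flags as anomalous.
Detector = Callable[[List[float]], List[int]]


def detector_v1(readings: List[float]) -> List[int]:
    # Hypothetical rule: flag values more than 3.0 away from the mean.
    mean = sum(readings) / len(readings)
    return [i for i, x in enumerate(readings) if abs(x - mean) > 3.0]


def detector_v2(readings: List[float]) -> List[int]:
    # Hypothetical refinement: compare against the median instead,
    # since the median is less distorted by the outliers themselves.
    ordered = sorted(readings)
    median = ordered[len(ordered) // 2]
    return [i for i, x in enumerate(readings) if abs(x - median) > 3.0]


# Regression suite: each use case contributes sample data (made up here)
# and the anomalies it expects the module to find.
regression_cases: Dict[str, Tuple[List[float], List[int]]] = {
    "Vendor Quality Assurance": ([1.0, 1.2, 0.9, 9.0], [3]),
    "Customer Retention": ([5.0, 5.1, 4.9, 5.0, 0.5], [4]),
}


def passes_regression(detector: Detector) -> bool:
    """True only if the detector gives the expected result for every use case."""
    return all(
        detector(data) == expected for data, expected in regression_cases.values()
    )


# Version-controlled catalog of packaged modules, plus the version in use.
catalog: Dict[str, Detector] = {"1.0": detector_v1}
current = "1.0"

# Promote the refined module only if no existing use case regresses.
if passes_regression(detector_v2):
    catalog["2.0"] = detector_v2
    current = "2.0"

print(f"Anomaly Detection version in use: {current}")
```

The design choice worth noting is that the regression suite belongs to the use cases, not to the module. That is what keeps the cost of an upgrade near zero for everyone who already depends on it.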

We can’t all be Google, but we can certainly learn from the way they seek to expand their machine learning and deep learning capabilities by sharing with others outside their organization.

By the way, maybe the biggest lesson from Google is that the true source of economic value is the data (which they don’t freely share), not the machine learning and deep learning technologies. But we’ll save that topic for a future blog…

[1] Source: Wired Magazine, “Google Open-sourcing TensorFlow Shows AI’s the Future of Data”