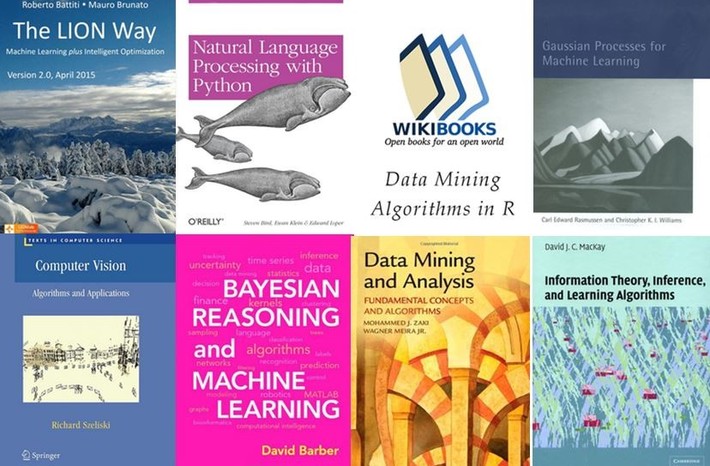

Here is a list of recommended free books on Data Science & AI, ranging from introductory level to advanced techniques:

- The LION Way: Machine Learning plus Intelligent Optimization

- Computer Vision

- Natural Language Processing with Python

- Bayesian Reasoning and Machine Learning

- Data Mining Algorithms In R

- Data Mining and Analysis: Fundamental Concepts and Algorithms

- Gaussian Processes for Machine Learning

- Information Theory, Inference, and Learning Algorithms

- Probabilistic Programming & Bayesian Methods for Hackers

- Social Media Mining: An Introduction

I hope you find these books useful. I have found them very interesting, with real applications to our projects, and I often recommend many of them in my training courses. I would love to hear about other free books that you use!

Originally posted here