Summary: Whether trying to predict the life outcomes of disadvantaged kids or to model where ventilators will be most needed, a little humility is in order. As this study shows, the best data and the broadest teams failed at critical predictions. Getting the model wrong, or more importantly, using it in the wrong way, can hurt all of us.

Archimedes famously said, “Give me a lever long enough and a fulcrum on which to place it, and I shall move the world.” If Archimedes had been a data scientist, he might well have said, ‘Give me enough data and I’ll tell you how the world turns out.’ Of course, if he’d said that, he’d have been wrong.

That hasn’t kept social scientists from gathering reams of data and applying the techniques of data science to try to answer BIG QUESTIONS, like whether we can predict how someone’s life will turn out.

If that seems like a preposterous goal, stick with me, because 160 separate teams of highly educated social scientists just tried exactly that. The project setup and the data available were quite reasonable. The results were not so good.

All of this appears in the recent PNAS paper “Measuring the Predictability of Life Outcomes with Scientific Mass …” published March 30, 2020.

The study relies on a very extensive dataset, the “Fragile Families and Child Wellbeing Study”. It follows a cohort of children born around 2000 in large U.S. cities, drawn largely from unmarried families, and tracks the children and their families over the following 15 years. The data set contains 12,942 variables for 4,242 families.

The goal was to determine if the data could be used to predict some or all of these life outcomes:

- Material hardship (roughly, the level of poverty) in the household.

- The child’s GPA.

- The child’s level of ‘grit’, or self-reported perseverance in school.

- Likelihood that the family will have experienced an eviction.

- Whether the primary caregiver took part in job training.

- Whether the primary caregiver was laid off.

The authors were not shy about pointing out that, if these outcomes could be modeled accurately, the models could be used to target families at risk, to measure social rigidity (once poor, always poor), and specifically to guide policy making (aka the allocation of tax resources).

What They Got Right

The project design was sound. All 160 accepted teams received the same training data but none of the holdout data. Results were scored against a single measure, R^2, and all scoring on the holdout data was conducted by the central control team.
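Scoring every team on one holdout metric is simple to express. The snippet below is a minimal sketch of a holdout R^2, under the assumption that the baseline is a model that always predicts the training-set mean (rather than the holdout mean). The function name `holdout_r2` and the toy GPA numbers are hypothetical and not taken from the study.

```python
def holdout_r2(y_true, y_pred, train_mean):
    """Holdout R^2 relative to a baseline that always predicts the
    training-set mean (assumed baseline, for illustration only)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    # Squared error of the submitted predictions on the holdout set.
    sse_model = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Squared error of the naive "predict the training mean" baseline.
    sse_baseline = sum((t - train_mean) ** 2 for t in y_true)
    return 1.0 - sse_model / sse_baseline


# Hypothetical toy numbers, not data from the study.
train_gpa = [2.5, 3.0, 3.5, 2.0]
holdout_gpa = [3.0, 2.0, 3.5]
submission = [2.9, 2.5, 3.1]

train_mean = sum(train_gpa) / len(train_gpa)
score = holdout_r2(holdout_gpa, submission, train_mean)
print(f"holdout R^2 = {score:.3f}")
```

A score of 0 means the submission did no better than always guessing the training mean, and a negative score means it did worse.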

What they also got right was the conclusion that none of the six target questions could be answered with sufficient accuracy to be useful.

Participating teams used a wide variety of techniques, ranging from simple linear and logistic regression to far more complex models. The gap between the best and worst of the 160 submissions was smaller than the gap between the best submission and the holdout truth. In other words, the models were better at predicting each other than at predicting the truth.
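Submissions agreeing with each other more than with the truth can be illustrated with a toy example. In this minimal sketch, three hypothetical submissions share the same blind spot (they all shrink their predictions toward the middle), so their mean squared error (MSE) against each other is tiny while their MSE against the truth is large. All names and numbers are invented for illustration.

```python
from itertools import combinations


def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Hypothetical holdout truth and three hypothetical submissions that
# all pull their predictions toward the middle of the range.
truth = [1.0, 4.0, 2.0, 5.0]
submissions = {
    "linear": [2.9, 3.1, 3.0, 3.2],
    "forest": [2.8, 3.2, 2.9, 3.3],
    "boosted": [3.0, 3.0, 3.1, 3.1],
}

# How far each submission is from the truth.
to_truth = {name: mse(pred, truth) for name, pred in submissions.items()}
# How far the submissions are from each other, pair by pair.
between = [mse(submissions[a], submissions[b])
           for a, b in combinations(submissions, 2)]

for name, err in to_truth.items():
    print(f"{name}: MSE to truth = {err:.3f}")
print(f"largest MSE between submissions = {max(between):.3f}")
```

When every model misses the same hard-to-measure signal, their agreement says little about their accuracy.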

Not surprisingly, the simple models were frequently more accurate than the most complex ones.

What They Concluded and Why We Should Worry

These authors and their 160 team collaborators are the good guys. They conclude that we should be very circumspect about drawing conclusions on causality or predictability from even the best-looking data, especially when the sheen of AI/ML lends an appearance of accuracy.

The bad news is that the authors identify over 750 published journal articles using this same data, all of which claimed to draw valid conclusions where this study showed that statistical validity could not be found.

The authors are also candid in rejecting the use of similar models for all sorts of social and justice decisions, such as the algorithms used to predict recidivism, set bail, and guide child protective services.

The Risk of Models – What We All Need to Remember

Most of us messed around with the Titanic survivor data set early in our learning, and it’s a good lesson. The data set is small. There are lots of missing values. And getting much above 80% accuracy is rare.

Looking back at a distant historical event like this is pretty harmless. But the lesson we were supposed to learn is that, because models are inaccurate, great care is needed before treating their results as a basis for current action, like planning lifeboats or allocating any other resource.

When we use models to identify the individuals likeliest to buy something, getting it wrong costs little beyond some lost profit. But many models, as you’re seeing on live television with the coronavirus, are used to plan and distribute critical resources in near real time.

A 20% error rate on the Titanic data set is not going to bring anyone back. A 20% error rate in healthcare, whether false positives or false negatives, can and probably does mean a ventilator won’t be where it’s supposed to be when it’s needed.
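The same headline error rate can hide very different failures. This minimal sketch uses hypothetical labels (True meaning a patient needs a ventilator) to show two models that are both wrong 20% of the time: one over-allocates (false positives) and the other under-allocates (false negatives). The data and the helper names `confusion_counts` and `error_rate` are illustrative assumptions, not part of any real allocation model.

```python
from collections import Counter


def confusion_counts(actual, predicted):
    """Count true/false positives and negatives for binary labels."""
    counts = Counter()
    for a, p in zip(actual, predicted):
        if a and p:
            counts["TP"] += 1
        elif not a and p:
            counts["FP"] += 1  # ventilator sent where it isn't needed
        elif a and not p:
            counts["FN"] += 1  # patient who needs one doesn't get it
        else:
            counts["TN"] += 1
    return counts


def error_rate(counts):
    """Share of all predictions that were wrong (FP + FN)."""
    total = sum(counts.values())
    return (counts["FP"] + counts["FN"]) / total


# Hypothetical: 10 patients, the first 5 of whom need a ventilator.
actual = [True] * 5 + [False] * 5
model_a = [True] * 5 + [True, True, False, False, False]  # over-allocates
model_b = [True, True, True, False, False] + [False] * 5  # under-allocates

for name, preds in [("A", model_a), ("B", model_b)]:
    c = confusion_counts(actual, preds)
    print(name, f"error={error_rate(c):.0%}", f"FP={c['FP']}", f"FN={c['FN']}")
```

Both models print the same 20% error, but model B leaves two patients without a ventilator, which is why the split between error types matters as much as the total.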

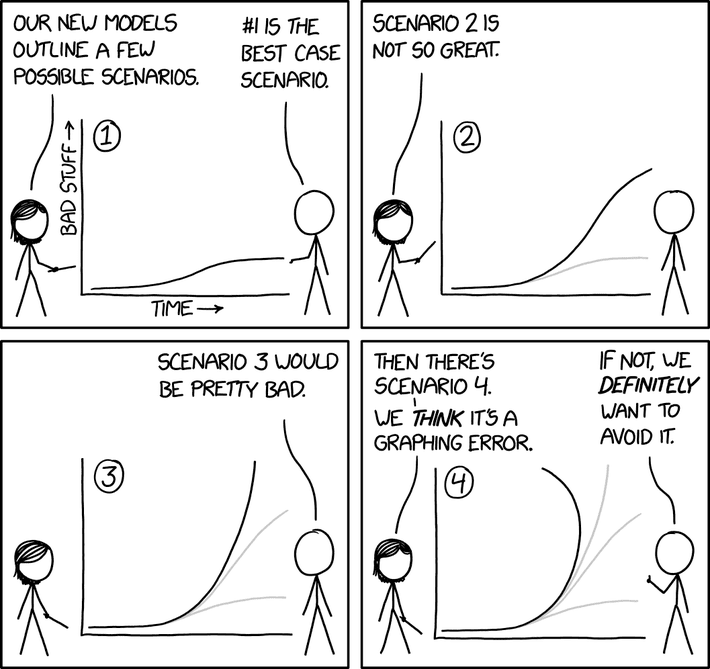

As Randall Munroe, the author of the XKCD comic above, makes clear for this example: “Remember, models aren’t for telling you facts, they’re for exploring dynamics.”

Models based on new or rapidly evolving data are a dangerous gamble for allocating resources. When there are many competing models, as there are now for the coronavirus, picking the right one is by no means straightforward.


About the author: Bill is Contributing Editor for Data Science Central. Bill is also President & Chief Data Scientist at Data-Magnum and has practiced as a data scientist since 2001. His articles have been read more than 2.1 million times.