In February 2019 the Polish government amended its banking law to give customers the right to receive an explanation of a negative credit decision. It is one of the direct consequences of implementing GDPR in the EU. This means that a bank needs to be able to explain why a loan wasn’t granted if the decision process was automated.

In October 2018 headlines around the world reported on an Amazon AI recruiting tool that favored men. Amazon’s model was trained on biased data that was skewed towards male candidates. It learned rules that penalized résumés that included the word “women’s”.

Consequences of not understanding models’ predictions

What the two examples above have in common is that both the models used in the banking industry and the one built by Amazon are very complex tools, so-called black-box classifiers, that don’t offer straightforward, human-interpretable decision rules.

Financial institutions will have to invest in model interpretability research if they want to continue using ML-based solutions. And they probably will, because such algorithms are more accurate at predicting credit risk. Amazon, on the other hand, could have saved a lot of money and bad press if the model had been properly validated and understood.

Why now? Trends in data modeling.

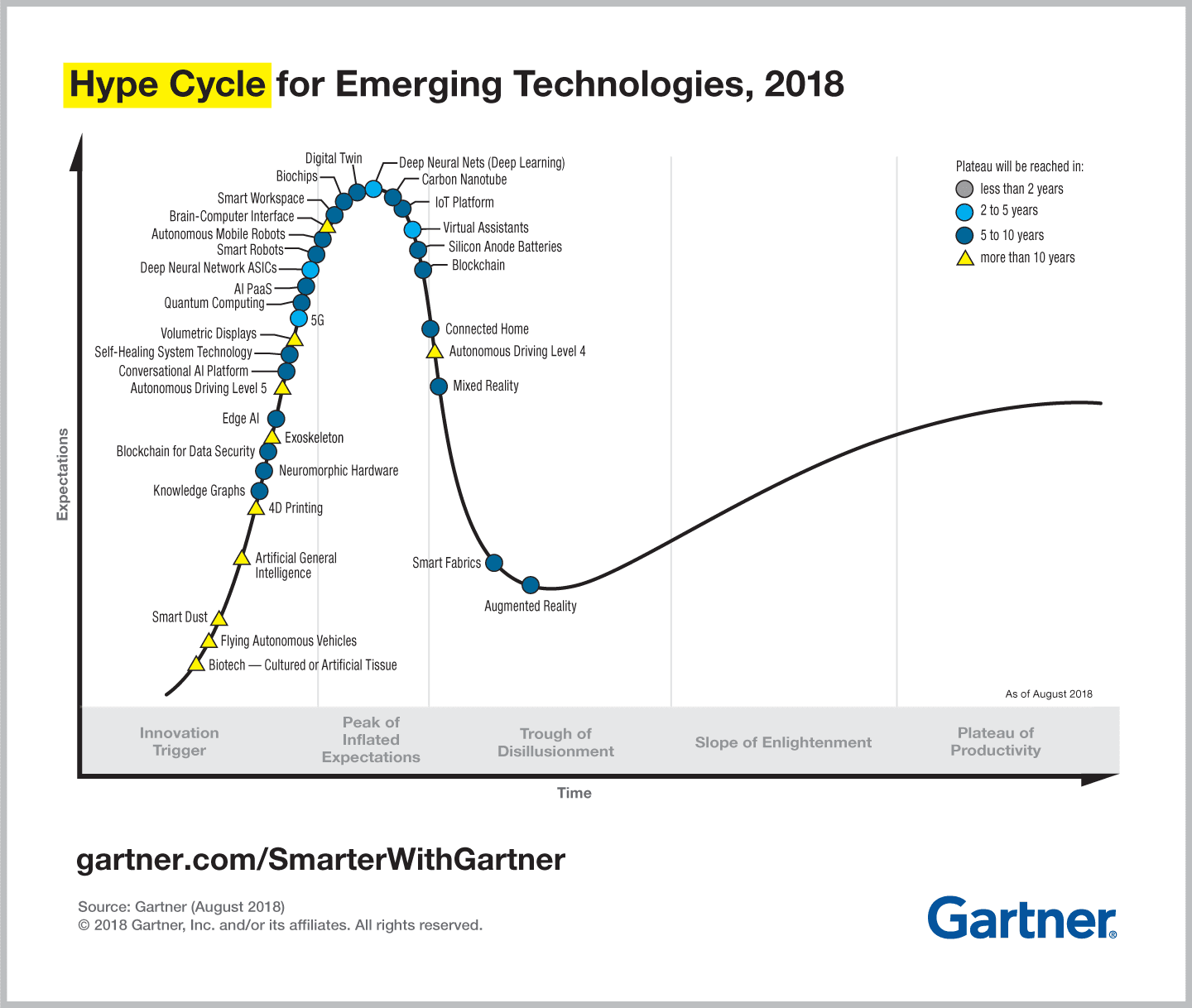

Machine learning stayed at the top of Gartner’s Hype Cycle from 2014 until 2018, when it was replaced by deep learning (a form of ML), suggesting that adoption hasn’t reached its peak yet.

Source: Gartner

The growth of machine learning is predicted to accelerate further. According to a report by Univa, 96% of companies are expected to use ML in production within the next two years.

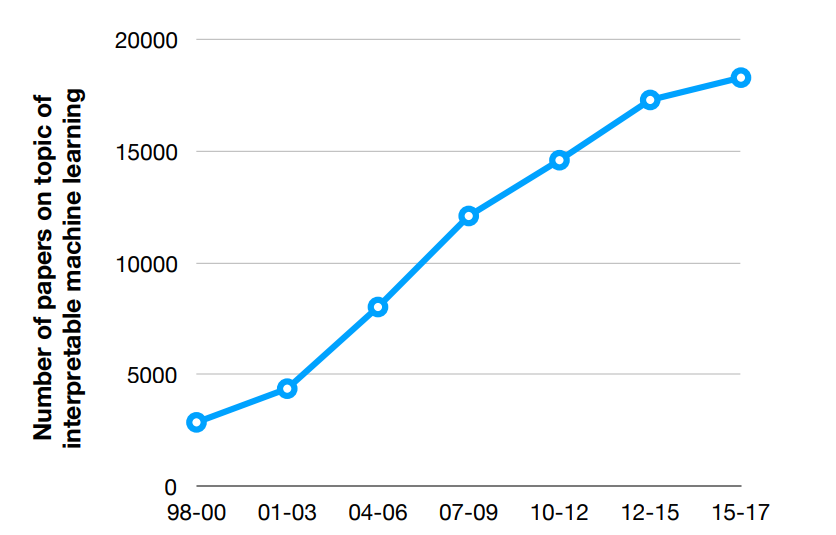

The reasons behind this are widespread data collection, the availability of vast computational resources and an active open-source community. The growth in ML adoption is accompanied by an increase in ML interpretability research, driven by regulations such as GDPR and the EU’s “right to explanation”, concerns about safety (medicine, autonomous vehicles), reproducibility and bias, and end-user expectations (debugging the model to improve it, or learning something new about the studied subject).

Source: MIT

Interpretability options for black-box algorithms

As data scientists, we should be able to explain to end users how a model works. However, this does not necessarily mean understanding every piece of the model or generating a set of decision rules.

There are also cases where an explanation is not required:

- the problem is well studied,

- the model results have no consequences,

- understanding of the model by the end user could pose a risk of gaming the system.

If we look at the results of Kaggle’s 2018 Machine Learning and Data Science Survey, around 60% of respondents think they could explain most machine learning models (though some models were still hard for them to explain). The most common approach to understanding ML models is analyzing model features by looking at feature importance and feature correlations.

Feature importance analysis offers good first insights into what the model is learning and which factors might be important. However, this technique can be unreliable if features are correlated, and it provides good insights only if the model variables are themselves interpretable. For many GBM (gradient boosting machine) libraries it’s fairly easy to generate feature importance plots.
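As a rough illustration of how little code a first feature importance check takes, here is a minimal sketch using scikit-learn. The dataset, the choice of `GradientBoostingClassifier` and all variable names are assumptions made for this example, not something taken from the survey or from any particular project. It prints the model’s built-in (impurity-based) importances next to permutation importances measured on held-out data.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

# Illustrative dataset (an assumption); any tabular data with named features would do.
data = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.3, random_state=0
)

model = GradientBoostingClassifier(random_state=0).fit(X_train, y_train)

# Built-in importances: derived from the fitted trees, normalized to sum to 1.
builtin = sorted(
    zip(data.feature_names, model.feature_importances_),
    key=lambda pair: pair[1],
    reverse=True,
)

# Permutation importances: drop in held-out score when one feature is shuffled.
perm = permutation_importance(model, X_test, y_test, n_repeats=5, random_state=0)
permuted = sorted(
    zip(data.feature_names, perm.importances_mean),
    key=lambda pair: pair[1],
    reverse=True,
)

for name, score in builtin[:5]:
    print(f"built-in     {name:<25} {score:.3f}")
for name, score in permuted[:5]:
    print(f"permutation  {name:<25} {score:.3f}")
```

If the two rankings disagree strongly, that is often a hint that correlated features are sharing or masking importance, so neither list should be read as a causal statement.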

In the case of deep learning the situation is much more complicated. When using neural networks you could look at the weights, as they contain information about the input, but that information is compressed. What’s more, you can realistically only analyze the connections in the first layer, since deeper layers are too complicated to read directly.

No wonder that the LIME (Local Interpretable Model-agnostic Explanations) paper had a huge impact when it was presented at the KDD conference in 2016. The idea behind LIME is to locally approximate a black-box model with an easier-to-understand white-box model built on interpretable input data. It has shown great results in explaining image and text classification. However, for tabular data it is difficult to find interpretable features, and their local interpretation might be misleading.
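The local approximation idea can be sketched in a few lines of NumPy. This is a simplified illustration, not the lime package’s algorithm: the `black_box` function is a hypothetical stand-in for an opaque model, and the noise scale and kernel width are arbitrary choices. The real method additionally works on interpretable (often binary) representations and selects a small number of features.

```python
import numpy as np

rng = np.random.default_rng(0)

def black_box(X):
    """Hypothetical opaque model: a nonlinear score for two features."""
    return 1.0 / (1.0 + np.exp(-(X[:, 0] ** 2 - 2.0 * X[:, 1])))

def explain_locally(predict, x, n_samples=2000, scale=0.3, kernel_width=0.5):
    """Fit a proximity-weighted linear surrogate around the instance x."""
    # 1. Perturb the instance with Gaussian noise.
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    # 2. Query the black box on the perturbed points.
    y = predict(Z)
    # 3. Weight samples by closeness to x (exponential kernel).
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)
    # 4. Weighted least squares on centred inputs, with an intercept column.
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[0], coef[1:]  # local intercept, per-feature slopes

x0 = np.array([1.0, 0.5])
intercept, slopes = explain_locally(black_box, x0)
print("prediction at x0:", black_box(x0[None, :])[0])
print("local intercept: ", intercept)
print("local slopes:    ", slopes)
```

The signs of the slopes say that, near this particular point, increasing the first feature pushes the score up and increasing the second pushes it down. The explanation is only valid in that neighbourhood, which is exactly why local interpretations can mislead when read globally.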

LIME is implemented in Python (the lime and Skater packages) and R (the lime, iml and live packages) and is very easy to use.

Another promising idea is SHAP (SHapley Additive exPlanations). It is based on game theory: features are the players, subsets of features are the coalitions, and Shapley values tell how to fairly distribute the “payout” (the prediction) among the features. This technique distributes the effects fairly, is easy to use and comes with a visually compelling implementation.
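To make the “fair payout” idea concrete, the sketch below computes exact Shapley values by brute force for a tiny, hypothetical model with three features. The `model`, `instance` and `baseline` are assumptions chosen so the result can be checked by hand. The SHAP library itself relies on approximations and background data rather than full enumeration, which becomes infeasible as the number of features grows.

```python
from itertools import combinations
from math import factorial

def shapley_values(value, n_features):
    """Exact Shapley values for a coalition value function.

    `value` maps a frozenset of feature indices to the payout obtained
    when only those features are 'present'.
    """
    players = range(n_features)
    phi = [0.0] * n_features
    for i in players:
        others = [j for j in players if j != i]
        for size in range(len(others) + 1):
            # Weight of a coalition of this size in the Shapley formula.
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# Hypothetical model and data point, chosen only for illustration.
def model(x):
    return 3.0 * x[0] + 2.0 * x[1] * x[2]

instance = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]  # stands in for an "absent" feature value

def value(coalition):
    # Features outside the coalition are replaced by the baseline.
    x = [instance[j] if j in coalition else baseline[j] for j in range(3)]
    return model(x)

phi = shapley_values(value, 3)
print(phi)
```

The first feature receives its own additive effect (3), while the two interacting features split their joint effect (12) equally. The values sum to the difference between the prediction for the instance and the prediction for the baseline, which is the property that makes the distribution “fair”.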

The DALEX package (Descriptive Machine Learning Explanations), available in R, offers a set of tools that help to understand how complex models work. Using DALEX you can create a model explainer and inspect it visually, e.g. with breakdown plots. You might also be interested in DrWhy.AI, which is developed by the same group of researchers as DALEX.

Practical use cases

Detecting objects in pictures

Image recognition is already widely used, for example in autonomous cars to detect whether cars, traffic lights, etc. are in the picture, in wildlife conservation to detect whether a certain animal is in the picture, or in insurance to detect flooding of crops.

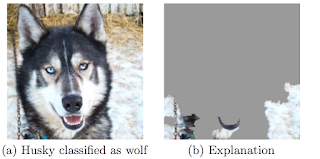

We will use the “Husky vs Wolf” example from the original LIME paper to illustrate the importance of model interpretation. The classifier’s task was to identify whether a wolf was in the picture. It misclassified a Siberian Husky as a wolf. Thanks to LIME, the researchers were able to identify which areas of the picture were important to the model. It turned out that if the picture contained snow, it was classified as a wolf.

Source: LIME paper

The algorithm was using the background of the picture and completely ignoring the animal’s characteristics. The model should look at the animal’s eyes instead. Thanks to this discovery it was possible to fix the model and extend the training examples to prevent the “snow = wolf” reasoning.

Classification as a decision support system

The Intensive Care Unit of Amsterdam UMC wants to predict the probability of a patient’s readmission and/or mortality at the moment of discharge. The goal is to help doctors pick the right moment to move a patient out of the ICU. A doctor who understands what the model is doing is more likely to use its recommendation in making the final judgement.

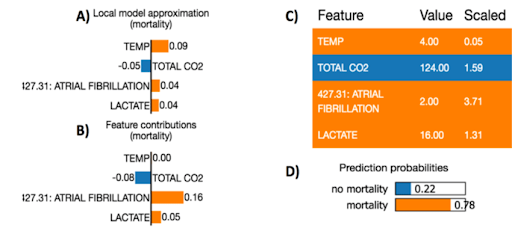

To demonstrate how such a model can be interpreted using LIME, we can look at an example from another study that aims at early prediction of mortality in the ICU. A Random Forest model (a black-box model) is used to predict mortality status, and the lime package is used to locally explain the prediction score for every patient.

Source: ResearchGate

The patient in the selected example has a high predicted probability of death (78%). The model features that contribute to mortality are higher counts of atrial fibrillation and a higher lactate level, which is consistent with current medical understanding.

Humans and machines – a perfect match

To succeed in building interpretable AI we need to combine data science knowledge, algorithms and end users’ expertise. Data science work doesn’t finish once the model is created. It’s an iterative, usually long process with feedback loops provided by the experts, making sure the outcome is solid and understandable to humans.

We strongly believe that by combining human expertise and machine performance we can obtain the best outcome: improved machine results and less human gut-feel bias.

originally published by Olga Mierzwa-Sulima