This article was written by Ed Sperling.

Semiconductor Engineering sat down with Rob Aitken, an Arm fellow; Raik Brinkmann, CEO of OneSpin Solutions; Patrick Soheili, vice president of business and corporate development at eSilicon; and Chris Rowen, CEO of Babblelabs. What follows are excerpts of that conversation.

SE: Where are we with machine learning? What problems still have to be resolved?

Aitken: We’re in a state where things are changing so rapidly that it’s really hard to keep up with where we are at any given instant. We’ve seen that machine learning has been able to take some of the things we used to think were very complicated and render them simple to do. But simple can be deceiving. It’s not just a case of, ‘I’ve downloaded TensorFlow and magically it worked for me, and now all the problems I used to have 100 people do are much simpler.’ The problems move to a different space. For example, we took a look at what it would take to do machine learning for verification test generation for processors. What we found is that machine learning is very good at picking from a set of random test programs the ones that are more likely to be useful test vectors than others. That rendered a complicated task simpler, but it moved the problem to a new space. How do you convert test data into something that a machine learning algorithm can optimize? And then, how do you take what it told you and bring it back to the realm of processor testing? So we find a lot of moving the problem around, in addition to clever solutions for problems that we had trouble with before.

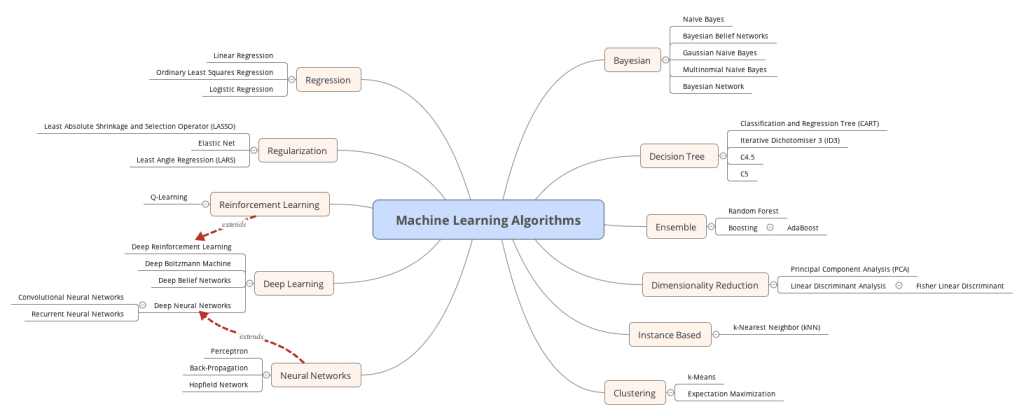

Rowen: Machine learning and deep learning are giving us some powerful tools in what, for most of us, is an entirely new area of computing. It’s statistical computing, with the creation of very complex models from relatively unstructured data. There are a bunch of problems that historically have been difficult or esoteric, or really hard to get a handle on, which now we can systematically do better than we were ever able to do in the past. That’s particularly true when it’s in the form of, ‘Here’s some phenomenon we’re trying to understand and we’re trying to reproduce it in some fashion. We need an approximate model for that.’ There are a whole bunch of problems that fall into that domain. This new computing paradigm gives algorithm designers and software developers a new hammer, and it turns out it’s a pretty big hammer with a wide variety of nails, as well as some screws and bolts. But it is not universal. There are lots of kinds of problems that are not statistical in nature, where you’re not trying to reproduce the statistical distribution you found in some source data, and where other methods from AI or other classical methods should be used. It is fraught with issues that have to do with a lack of understanding of statistics. People don’t entirely understand bias in their data, which results in bias in their model. They don’t understand the fragility of the model. You generally can’t expect it to do anything reasonable outside of the strict confines of the statistical distribution on which it was trained. And often, today, these models fail the reasonableness test. You expect them to know things they can’t possibly know because they weren’t trained for that. One of the big challenges for all of this is to not only train the deep learning models for the behaviors that you want, but to also view some of the reasonableness principles. If you’re dealing with visual systems, you want them to know about gravity and the 3D nature of objects. Those tend to be outside the range of what we can do with these models today.

Brinkmann: I agree. It’s a powerful tool in the engineering toolbox of any company that does scientific or technical work. The best applications of this are when you control the space you’re using it in, and when a human is still in the loop to tell the difference between when it behaves reasonably and when it does not. So whenever you optimize your business processes or your test vectors, or something you don’t understand the nature of, it may be a good use of machine learning. But when people have certain expectations, and it hits data it may not have seen before, it starts to fail. That’s where the trouble starts. People believe that machine learning is a universal solution. That’s not the case. We need to make sure people understand the limits of these technologies, while also removing the fear that this technology will take over their jobs. That’s not going to happen for a long time. If you want to use machine learning in applications that are related to safety, like automotive, one key component that’s missing is that these systems do not explain themselves. There is no reasoning that you can derive from a network that has been trained about why it does what it does, or when it fails. There is a lot of research going on right now in this area to make these systems more robust and to find a way to verify them. But it has to come with a good understanding of the statistical nature of what you’re dealing with. Applying machine learning is not easy. You need a lot more than a deep learning algorithm. There are other ideas around vision learning and new technologies that make it easier to explain how these things work. This is one of the biggest differences with classical engineering, where you always had an engineer in the loop to explain why something works.
