Mobile health (mHealth) is considered one of the most transformative drivers of health informatics, enabling the delivery of ubiquitous medical applications. Machine learning has proven to be a powerful tool for classifying medical images to detect various diseases. However, supervised machine learning requires a large amount of data to train the model, and storing and processing that data places considerable demands on mobile devices. Therefore, many studies deploy machine learning in the cloud, using an Internet connection to outsource data-intensive computation.

However, this kind of approach comes with certain drawbacks:

▸Latency: Each request must travel over the network to the cloud service and back, which delays the response.

▸Privacy: Sending sensitive data to the cloud raises privacy concerns, especially in medical and health applications.

▸Cost: Cloud-based approaches incur financial costs from cloud service providers.

▸Connectivity: A network connection is required to run a cloud-based app, but the connection or the cloud service may not always be available.

▸Customization: In general, cloud services provide generic models based on their common datasets. These models may not be appropriate or customizable for specific health problems.

To tackle these challenges of mHealth applications, we present an app that performs inference on the device.

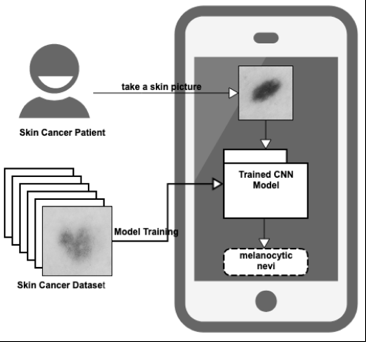

On-device Inference for Skin Cancer Detection

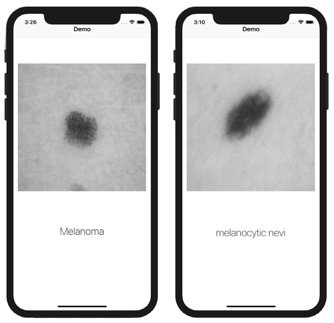

We address this problem by performing the training phase on a powerful computer and the inference phase on the mobile device. The classification model is pre-trained offline and then deployed on the mobile device, where it classifies new data. When the app is presented with a new test image, all computations are executed locally, so the image remains on the device and never needs to be shared externally.
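The split between offline training and on-device inference can be sketched as follows. This is a minimal illustration, assuming the trained parameters can be exported to a file that is bundled with the app. `export_model` and `OnDeviceClassifier` are hypothetical names, a linear softmax classifier stands in for the real CNN, and the two output classes are placeholders. The article does not specify the app's actual model format or runtime.

```python
import io

import numpy as np


# --- Training machine (hypothetical export step) ---
def export_model(weights: np.ndarray, bias: np.ndarray) -> bytes:
    """Serialize pre-trained parameters into a blob that would be bundled with the app."""
    buffer = io.BytesIO()
    np.savez(buffer, weights=weights, bias=bias)
    return buffer.getvalue()


# --- Mobile device (hypothetical runtime) ---
class OnDeviceClassifier:
    """Loads the bundled parameters once and classifies images locally."""

    def __init__(self, model_blob: bytes):
        with np.load(io.BytesIO(model_blob)) as params:
            self.weights = params["weights"]
            self.bias = params["bias"]

    def predict_proba(self, image: np.ndarray) -> np.ndarray:
        # Stand-in linear softmax model. No network call is made, so the
        # image never leaves this function.
        logits = image.reshape(-1) @ self.weights + self.bias
        exp = np.exp(logits - logits.max())
        return exp / exp.sum()


# Usage: parameters "trained" elsewhere (random here), then used on the device.
rng = np.random.default_rng(0)
blob = export_model(rng.normal(size=(8 * 8, 2)), np.zeros(2))
classifier = OnDeviceClassifier(blob)
probs = classifier.predict_proba(rng.random((8, 8)))
```

The design point is that only the parameter blob crosses from the training machine to the device. Test images flow only into `predict_proba`, which runs entirely in local memory.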

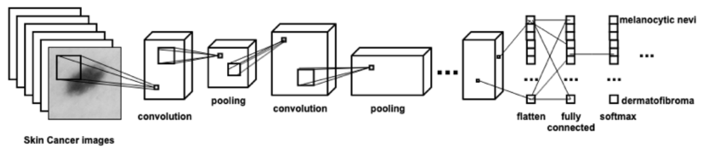

The architecture of CNNs for Skin Cancer Detection

The convolution and pooling layers perform feature extraction by capturing the general characteristics of the images. The fully connected layers then use these features to assign the input image a probability for each class.
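To make the data flow concrete, here is a minimal numpy sketch of one forward pass through the pipeline: convolution, then pooling, then a fully connected layer. It assumes a single-channel (grayscale) input, one convolution layer with ReLU, 2×2 max pooling, one fully connected layer with softmax, and random untrained weights. The article does not give the real model's layer counts, kernel sizes, activations, or color channels, so all of these are illustrative assumptions.

```python
import numpy as np


def conv2d(image, kernels):
    """Valid 2-D convolution (cross-correlation, as in most CNN libraries).
    image: (H, W); kernels: (K, kh, kw) -> feature maps (K, H-kh+1, W-kw+1)."""
    k, kh, kw = kernels.shape
    h, w = image.shape
    out = np.zeros((k, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            patch = image[i:i + kh, j:j + kw]
            # Dot each kernel with the patch at once -> one value per kernel.
            out[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2], [0, 1]))
    return out


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; trailing rows/cols that don't fill a window are dropped."""
    k, h, w = fmap.shape
    h2, w2 = h // size, w // size
    trimmed = fmap[:, :h2 * size, :w2 * size]
    return trimmed.reshape(k, h2, size, w2, size).max(axis=(2, 4))


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def forward(image, kernels, w_fc, b_fc):
    features = np.maximum(conv2d(image, kernels), 0.0)  # feature extraction: conv + ReLU
    pooled = max_pool(features)                         # feature extraction: pooling
    flat = pooled.reshape(-1)
    return softmax(flat @ w_fc + b_fc)                  # fully connected -> class probabilities


# Usage with a 28x28 image, 4 kernels of 3x3, and 2 hypothetical classes:
# conv -> (4, 26, 26), pool -> (4, 13, 13), flatten -> 676 features.
rng = np.random.default_rng(1)
image = rng.random((28, 28))
kernels = rng.normal(size=(4, 3, 3))
w_fc = rng.normal(size=(4 * 13 * 13, 2)) * 0.01
b_fc = np.zeros(2)
class_probs = forward(image, kernels, w_fc, b_fc)
```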

Data Augmentation

One of the difficulties of automatically processing skin images is that an image may be taken under a variety of conditions (e.g., brightness, angle, focal distance), which makes comparing images and identifying pertinent features difficult. To reduce the impact of these imaging conditions on the classification model, we incorporated data augmentation. In our case, it produces new images from existing ones by random rotation, zooming, and shifting, and by cropping from the center. These additional images are then used to enlarge the training set.
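The augmentation step can be sketched in plain numpy as shown below. This is a minimal illustration under several assumptions. Rotation is restricted to multiples of 90° to avoid interpolation, and arbitrary angles would need something like `scipy.ndimage.rotate`. Zoom is implemented as a center crop followed by a nearest-neighbour resize back to the original size. Shifts pad uncovered pixels with zeros. Images are assumed square, so that rotation preserves their shape. The parameter ranges (`max_shift`, `max_zoom`) are hypothetical, and in practice a framework's built-in augmentation utilities would typically be used instead.

```python
import numpy as np


def center_crop(image, crop_h, crop_w):
    """Take a crop_h x crop_w window from the center of the image."""
    h, w = image.shape[:2]
    top, left = (h - crop_h) // 2, (w - crop_w) // 2
    return image[top:top + crop_h, left:left + crop_w]


def zoom(image, factor):
    """Zoom in by factor >= 1: crop the center, then resize back (nearest neighbour)."""
    h, w = image.shape[:2]
    crop = center_crop(image, max(1, round(h / factor)), max(1, round(w / factor)))
    rows = np.arange(h) * crop.shape[0] // h  # source row for each output row
    cols = np.arange(w) * crop.shape[1] // w  # source column for each output column
    return crop[rows][:, cols]


def shift(image, dy, dx):
    """Translate by (dy, dx) pixels; pixels shifted in from outside are zero."""
    out = np.zeros_like(image)
    h, w = image.shape[:2]
    src = image[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    out[max(0, dy):max(0, dy) + src.shape[0], max(0, dx):max(0, dx) + src.shape[1]] = src
    return out


def augment(image, rng, max_shift=2, max_zoom=1.2):
    """Produce one randomly rotated, zoomed, and shifted copy of a square image."""
    out = np.rot90(image, k=int(rng.integers(0, 4)))
    out = zoom(out, rng.uniform(1.0, max_zoom))
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    return shift(out, int(dy), int(dx))


# Usage: generate five extra training images from one (random stand-in) image.
rng = np.random.default_rng(42)
image = rng.random((32, 32))
augmented = [augment(image, rng) for _ in range(5)]
```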

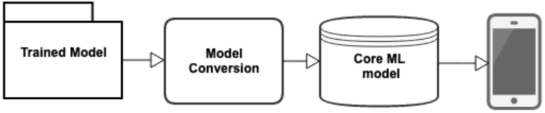

Model Conversion and Integrating the Pre-trained Model into the App

This on-device inference approach substantially reduces latency, because no network round trip is needed. It improves privacy, because patients do not have to send images to a third-party cloud service. It also eliminates the overhead and cost of running and maintaining cloud services.

The main limitation of our approach is that the pre-trained model cannot be easily updated, because the offline model is integrated into the app.