Logistic Regression is one of the most powerful classification methods within machine learning and can be used for a wide variety of tasks. Think of predictive policing or predictive analytics in health care, where it can be used to aid tuberculosis patients or support breast cancer diagnosis. Think of modeling urban growth, analysing mortgage pre-payments and defaults, forecasting the direction and strength of stock market movements, and even predicting sports outcomes.

With applications this varied, the theory[1] of Maximum Entropy Classification might look difficult. In my experience, the average developer does not believe they can design a proper Maximum Entropy / Logistic Regression classifier from scratch. I strongly disagree: not only is the mathematics behind it relatively simple, it can also be implemented in a few lines of code.

I have done this in the past month, so I thought I’d show you how to do it. The code is in Python, but it should be relatively easy to translate to other languages. Some of the examples use self-generated data, while others use real-world (medical) data. As in the blog-posts about the bag-of-words model and the Naive Bayes Classifier, we will also try to automatically classify the sentiment of Amazon.com book reviews.
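To give a feel for what "a few lines of code" can mean, here is a minimal sketch of a binary logistic regression classifier trained with batch gradient descent. It is not the code from the full post: the function names (`sigmoid`, `train`, `predict_proba`), the learning rate, the number of epochs, and the two-blob toy data set are all assumptions chosen for illustration, and only the Python standard library is used.

```python
import math
import random


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, x):
    """Estimated P(y = 1 | x); the last entry of w is the bias term."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + w[-1])


def train(X, y, lr=0.1, epochs=500):
    """Fit weights by batch gradient descent on the average log-loss.

    lr and epochs are illustrative defaults, not tuned values.
    """
    w = [0.0] * (len(X[0]) + 1)  # feature weights followed by the bias
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = predict_proba(w, xi) - yi  # derivative of log-loss w.r.t. the logit
            for j, xj in enumerate(xi):
                grad[j] += err * xj
            grad[-1] += err
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad)]
    return w


# Self-generated toy data (an assumption for this sketch):
# two Gaussian blobs centred at (-2, -2) and (2, 2).
rng = random.Random(0)
X = [[rng.gauss(-2, 1), rng.gauss(-2, 1)] for _ in range(50)]
X += [[rng.gauss(2, 1), rng.gauss(2, 1)] for _ in range(50)]
y = [0] * 50 + [1] * 50

w = train(X, y)
accuracy = sum((predict_proba(w, xi) >= 0.5) == bool(yi) for xi, yi in zip(X, y)) / len(X)
print("training accuracy:", accuracy)
```

The sketch leaves out regularization, feature scaling, and a held-out test set, all of which matter on real data such as medical records or book reviews.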

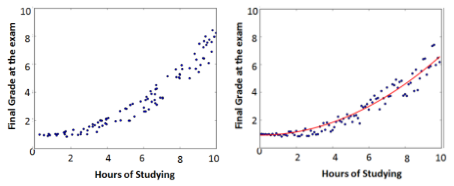

To read the rest of this long blog-post, including source code, detailed explanations, and charts (such as the above one), click here.