This article was written by Devin Soni.

Markov chains are a common and relatively simple way to statistically model random processes. They have been used in many domains, from text generation to financial modeling. A popular example is r/SubredditSimulator, which uses Markov chains to automate the creation of content for an entire subreddit. Overall, Markov chains are conceptually intuitive and very accessible, in that they can be implemented without any advanced statistical or mathematical concepts. They are a great way to start learning about probabilistic modeling and data science techniques.

Scenario

To begin, I will describe them with a very common example:

Imagine that there were two possible states for weather: sunny or cloudy. You can always directly observe the current weather state, and it is guaranteed to always be one of the two aforementioned states.

Now, you decide you want to be able to predict what the weather will be like tomorrow. Intuitively, you assume that there is an inherent transition in this process, in that the current weather has some bearing on what the next day’s weather will be. So, being the dedicated person that you are, you collect weather data over several years, and calculate that the chance of a sunny day occurring after a cloudy day is 0.25. You also note that, by extension, the chance of a cloudy day occurring after a cloudy day must be 0.75, since there are only two possible states.

You can now use this distribution to predict weather for days to come, based on what the current weather state is at the time.

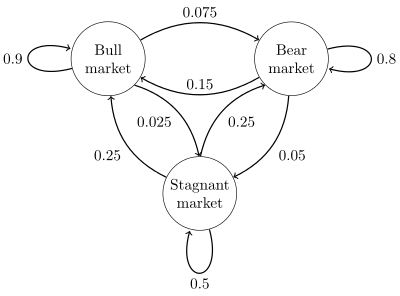
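The weather scenario can be sketched in a few lines of Python. This is a minimal illustration rather than a definitive implementation: the names `TRANSITIONS`, `next_state`, and `simulate` are hypothetical, and the probabilities in the "sunny" row are assumed placeholders, since the example only gives the values for a cloudy day (0.25 and 0.75).

```python
import random

# Transition probabilities, read as TRANSITIONS[today][tomorrow].
# The "cloudy" row comes from the example: 0.25 sunny, 0.75 cloudy.
# The "sunny" row is an ASSUMPTION for illustration; the text does not give it.
TRANSITIONS = {
    "cloudy": {"sunny": 0.25, "cloudy": 0.75},
    "sunny": {"sunny": 0.8, "cloudy": 0.2},  # assumed values
}


def next_state(current, rng):
    """Sample tomorrow's weather using only today's weather."""
    row = TRANSITIONS[current]
    states = list(row)
    weights = [row[s] for s in states]
    return rng.choices(states, weights=weights, k=1)[0]


def simulate(start, days, rng=None):
    """Return a list of weather states: the start day plus `days` more."""
    rng = rng or random.Random()
    path = [start]
    for _ in range(days):
        path.append(next_state(path[-1], rng))
    return path


if __name__ == "__main__":
    # Simulate one week of weather starting from a cloudy day.
    print(simulate("cloudy", 7))
```

Each row of the table sums to 1, which mirrors the observation that a cloudy day must be followed by either a sunny or a cloudy day. Note that `next_state` looks only at the current state, never at earlier days.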

This example illustrates many of the key concepts of a Markov chain. A Markov chain essentially consists of a set of transitions, which are determined by some probability distribution, that satisfy the Markov property.
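For reference, the Markov property is usually stated as below. The notation is an assumption introduced here for illustration: $X_n$ stands for the weather on day $n$, and it does not appear in the original example.

```latex
% Markov property: the next state depends only on the current state,
% not on the earlier history.
P(X_{n+1} = x \mid X_n = x_n, X_{n-1} = x_{n-1}, \dots, X_0 = x_0)
  = P(X_{n+1} = x \mid X_n = x_n)

% The two probabilities given in the weather example:
P(X_{n+1} = \text{sunny} \mid X_n = \text{cloudy}) = 0.25, \qquad
P(X_{n+1} = \text{cloudy} \mid X_n = \text{cloudy}) = 0.75
```

In the weather scenario, this means that tomorrow's forecast uses only today's weather; what happened last week adds nothing once today's state is known.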
