Today we are really happy to host a post from Ariadni-Karolina Alexiou, or Caroline for short. Caroline is a Data Engineer with deep expertise in Python for data applications, web development and building data pipelines. Problems with “beasts” like pandas, matplotlib, or numpy? Caroline will be there for you. Data transformation issues? No worries, Caroline is the person to go to. You get the idea.

George:

So, Caroline, tell me a few words about yourself.

Caroline:

I am a Data Engineer, straddling the line between Software Engineer and Data Scientist. I am originally from Greece: I graduated from the Department of Informatics in Athens and hold an MSc degree from ETH Zurich. I’ve been living and working in Zurich for the past five years. In addition to working with data, I also have full-stack experience, and I mentor people online via the Codementor platform.

Finding the best way to look at available data so that we can make decisions

George:

What makes you so interested in your industry?

Caroline:

What interests me most doesn’t necessarily have to do with terabytes of data; size is incidental. My interest lies in finding the best way to look at available data so that we can make decisions. Perhaps the best way is to build the best visualization or exploration tool, so that the in-house domain expert can have an a-ha moment without being bogged down doing ETL or chasing down incompatible data sources. It’s a fact of life that the people most suited for extracting insight out of a dataset may not be the same people who are suited for building a data analysis pipeline out of it, so collaboration is necessary.

People most suited for extracting insight out of a dataset may not be the same people that are suited for building a data analysis pipeline out of it.

I am interested in building the tools that facilitate this collaboration: essentially, making it possible to extract value from data by leveraging the diverse skill sets of a team and diverse datasets.

TimeManager

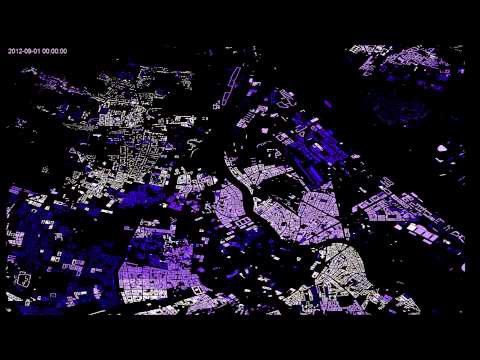

Together with Anita Graser (researcher at AIT, the Austrian Institute of Technology), Caroline has also built a general-purpose tool that helps scientists explore datasets that have both spatial and temporal dimensions.

It is called TimeManager and is available as open source.

Courtesy of TimeManager

Building data pipelines and challenges

Caroline:

Data pipelines are useful for modeling and structuring the transformation of data in a modular way, as opposed to it all taking place in one big script, which is what ends up happening when there is no design process. There are several things to take care of; in fact, I have talked about pitfalls in a previous article (mostly the last four items in it), and a new, more comprehensive guideline for designing data pipelines is in the works. In a nutshell, the biggest challenges in building data pipelines have been ensuring data format compatibility and integrity: designing an interface that has strictly defined I/O, if you will, and is easy for multiple people to work on. It is also crucial to design your data pipeline in anticipation of variance in data formats and data sources, because the available data is always changing and expanding.
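To make "strictly defined I/O" and "anticipating variance in formats" a bit more concrete, here is a minimal Python sketch. It assumes a hypothetical sensor-readings pipeline: the `Reading` record, the `from_csv`/`from_json` adapters, the `ADAPTERS` registry and the field names are all illustrative, not taken from Caroline's own work. Each source format gets its own adapter that converts into one canonical record type, and a validation step at the stage boundary rejects bad records loudly instead of passing them downstream.

```python
from __future__ import annotations

import csv
import io
import json
import math
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Reading:
    """Hypothetical canonical record that every downstream stage agrees on."""
    sensor_id: str
    timestamp: str
    value: float


def from_csv(text: str) -> list[Reading]:
    # Adapter for a CSV source with columns sensor_id,timestamp,value.
    return [
        Reading(row["sensor_id"], row["timestamp"], float(row["value"]))
        for row in csv.DictReader(io.StringIO(text))
    ]


def from_json(text: str) -> list[Reading]:
    # Adapter for a JSON source that names the same fields differently.
    return [Reading(d["id"], d["ts"], float(d["val"])) for d in json.loads(text)]


# Supporting a new source format means registering one more adapter here,
# without touching the stages that consume Reading objects.
ADAPTERS: dict[str, Callable[[str], list[Reading]]] = {
    "csv": from_csv,
    "json": from_json,
}


def validate(readings: Iterable[Reading]) -> list[Reading]:
    """Integrity check at the stage boundary: fail loudly on bad records."""
    checked = []
    for r in readings:
        if not r.sensor_id:
            raise ValueError(f"missing sensor_id in {r!r}")
        if math.isnan(r.value):
            raise ValueError(f"NaN value in {r!r}")
        checked.append(r)
    return checked


def ingest(fmt: str, text: str) -> list[Reading]:
    """Single entry point with a strictly defined output: validated Readings."""
    if fmt not in ADAPTERS:
        raise ValueError(f"no adapter registered for format {fmt!r}")
    return validate(ADAPTERS[fmt](text))
```

The design choice here is that downstream code only ever sees `Reading` objects, so a new or changed data source touches one adapter rather than every stage that follows.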

Design your data pipeline in anticipation of variance in the data formats and data sources.

Where is the technology going?

Caroline:

I believe the underlying technology is becoming more and more complex, creating the need for more and more abstractions. Web development has abstractions and patterns that allow teams of people to make progress simultaneously on different parts of the codebase without stepping on each other’s toes. Data pipelines are not there yet, I would say, although for me it’s clear that, just as web development has Model-View-Controller, data engineering has the distinct (and independent) stages of Data Preparation, Algorithms and Evaluation. For me this is a much more interesting issue to get right than any speculation about which language or framework will become dominant.
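One way to picture the Data Preparation, Algorithms and Evaluation split, by analogy with Model-View-Controller, is as three stages that only communicate through narrow interfaces. The sketch below is illustrative only: the `Preparation`/`Algorithm`/`Evaluation` protocols, the toy `ThresholdDetector` and the accuracy metric are hypothetical stand-ins, not a framework described in the interview. The point is that each stage can be swapped or worked on by a different person as long as it honors its input and output types.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol, Sequence


# Hypothetical stage interfaces: each stage sees only the previous stage's output.
class Preparation(Protocol):
    def prepare(self, raw: Sequence[str]) -> list[float]: ...


class Algorithm(Protocol):
    def fit_predict(self, data: list[float]) -> list[bool]: ...


class Evaluation(Protocol):
    def evaluate(self, predictions: list[bool], labels: list[bool]) -> float: ...


class ParseFloats:
    def prepare(self, raw: Sequence[str]) -> list[float]:
        # Data Preparation: turn raw text fields into numbers.
        return [float(s.strip()) for s in raw]


@dataclass
class ThresholdDetector:
    threshold: float

    def fit_predict(self, data: list[float]) -> list[bool]:
        # Algorithm: a toy detector that flags values above a fixed threshold.
        return [x > self.threshold for x in data]


class Accuracy:
    def evaluate(self, predictions: list[bool], labels: list[bool]) -> float:
        # Evaluation: fraction of predictions that match the labels.
        if not labels or len(predictions) != len(labels):
            raise ValueError("predictions and labels must be non-empty and equal in length")
        hits = sum(p == l for p, l in zip(predictions, labels))
        return hits / len(labels)


def run(prep: Preparation, algo: Algorithm, ev: Evaluation,
        raw: Sequence[str], labels: list[bool]) -> float:
    # Wire the three independent stages together.
    return ev.evaluate(algo.fit_predict(prep.prepare(raw)), labels)
```

For example, `run(ParseFloats(), ThresholdDetector(10.0), Accuracy(), [" 3", "12", "7 ", "40"], [False, True, False, False])` flags `12` and `40`, matches three of the four labels, and returns `0.75`.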

What about the frameworks that keep popping up nowadays?

Caroline:

The framework’s role in all this is to make sure the data processing is done fast. If you don’t have a speed problem, it is ill-advised to introduce a complexity problem by using a framework when a Python script would do. I have actually spoken about this (see the presentation below), pointing out that even if your raw data is big, the filtered-down results may not be, so they can be analyzed and visualized using simpler methods.

http://www.slideshare.net/SwissHUG/closing-the-loop-for-evaluating-…
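The "big raw data, small filtered result" point can be illustrated with nothing but the Python standard library. In this sketch the column names (`country`, `event`) and the file name `events.csv` are hypothetical. The raw file is streamed one row at a time and only matching rows are kept, so memory use is driven by the size of the filtered subset; once filtered, a plain in-memory `Counter` (or a small pandas DataFrame) is enough for the analysis.

```python
from __future__ import annotations

import csv
import io
from collections import Counter
from typing import Iterable, Iterator, TextIO


def filtered_rows(handle: TextIO, country: str) -> Iterator[dict[str, str]]:
    """Stream the raw data one row at a time, yielding only matching rows.

    Rows are read lazily, so the raw file can be much larger than the
    filtered subset that is eventually analyzed."""
    for row in csv.DictReader(handle):
        if row["country"] == country:
            yield row


def summarize(rows: Iterable[dict[str, str]]) -> Counter[str]:
    # The filtered subset is small, so a simple in-memory count is enough.
    return Counter(row["event"] for row in rows)


if __name__ == "__main__":
    # Stand-in for a large file; in practice: open("events.csv", newline="")
    raw = io.StringIO("country,event\nCH,click\nGR,click\nCH,view\nCH,click\n")
    print(summarize(filtered_rows(raw, "CH")))
```

Only when the filtering step itself becomes too slow does a distributed framework start to pay for the complexity it adds.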

Caroline:

There is a fourth facet to data-related development, which is the parallelization problem. I believe that whichever data processing framework manages to become easy to use for people who are good at writing non-parallel code, and has a web interface that is easy to understand, will become widely used and set the trend for what’s to come. Hadoop required rigid adherence to the Map/Reduce paradigm to get work done, but Spark lets the programmer more or less write serial code and takes the parallelization details away, which is a big improvement. What is still missing are guidelines and frameworks that make collaboration between the people dealing with data easy and less prone to yielding wrong or incomplete information in any of the three stages: data preparation, algorithmic analysis and evaluation.
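To show what "rigid adherence to the Map/Reduce paradigm" means in practice, here is the classic word count written twice in plain Python: once forced into mapper, shuffle and reducer phases, and once as an ordinary serial loop. This is a single-process illustration of the programming style only; it uses neither Hadoop nor Spark, and the function names are hypothetical. In a real Map/Reduce system the map and reduce phases would be distributed across machines, which is exactly the detail a friendlier framework tries to hide.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from itertools import chain


# Map/Reduce style: the logic must be split into a mapper, a shuffle
# (group values by key) and a reducer.
def mapper(line: str) -> list[tuple[str, int]]:
    return [(word, 1) for word in line.split()]


def shuffle(pairs) -> dict[str, list[int]]:
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reducer(groups: dict[str, list[int]]) -> dict[str, int]:
    return {key: sum(values) for key, values in groups.items()}


def word_count_mapreduce(lines: list[str]) -> dict[str, int]:
    return reducer(shuffle(chain.from_iterable(map(mapper, lines))))


# Serial style: the same result written as an ordinary loop.
def word_count_serial(lines: list[str]) -> dict[str, int]:
    counts: Counter[str] = Counter()
    for line in lines:
        counts.update(line.split())
    return dict(counts)
```

Both functions return the same counts; the difference is how much the programmer has to restructure the logic to fit the paradigm.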

Originally published at blog.blendo.co