Introduction:

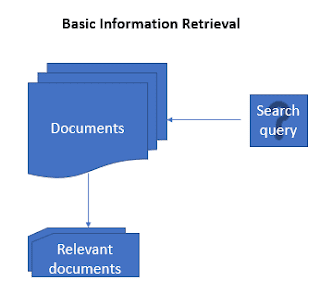

In this post, we learn about building a basic search engine or document retrieval system using Vector space model. This use case is widely used in information retrieval systems. Given a set of documents and search term(s)/query we need to retrieve relevant documents that are similar to the search query.

Problem statement:

The problem statement explained above is represented as in below image.

Before we get into building the search engine, we will learn briefly about different concepts we use in this post:

Vector Space Model:

A vector space model is an algebraic model, involving two steps, in first step we represent the text documents into vector of words and in second step we transform to numerical format so that we can apply any text mining techniques such as information retrieval, information extraction,information filtering etc.

Let us understand with an example. consider below statements and a query term. The statements are referred as documents hereafter.

Document 2: Dog runs behind cat

Query: rat

Document vectors representation:

In this step includes breaking each document into words, applying preprocessing steps such as removing stopwords, punctuations, special characters etc. After preprocessing the documents we represent them as vectors of words.

Below is a sample representation of the document vectors.

Document 2: (Dog, runs, behind, cat)

Query: (rat)

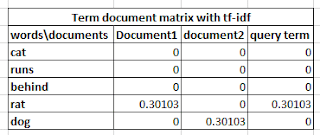

Next step is to represent the above created vectors of terms to numerical format known as term document matrix.

Term Document Matrix:

A term document matrix is a way of representing documents vectors in a matrix format in which each row represents term vectors across all the documents and columns represent document vectors across all the terms. The cell values frequency counts of each term in corresponding document. If a term is present in a document, then the corresponding cell value contains 1 else if the term is not present in the document then the cell value contains 0.

After creating the term document matrix, we will calculate term weights for all the terms in the matrix across all the documents. It is also important to calculate the term weightings because we need to find out terms which uniquely define a document.

We should note that a word which occurs in most of the documents might not contribute to represent the document relevance whereas less frequently occurred terms might define document relevance. This can be achieved using a method known as term frequency – inverse document frequency (tf-idf), which gives higher weights to the terms which occurs more in a document but rarely occurs in all other documents, lower weights to the terms which commonly occurs within and across all the documents.

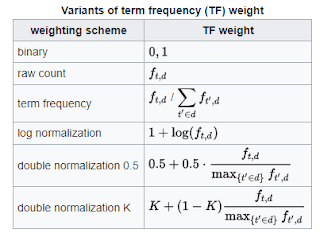

tf = term frequency is the number of times a term occurs in a document

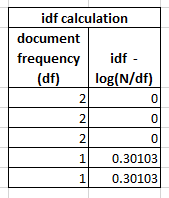

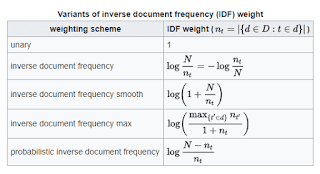

idf = inverse of the document frequency, given as below

idf = log(N/df), where df is the document frequency-number of documents containing a term

Note, there are many variations in the way we calculate the term-frequency(tf) and inverse document frequency (idf), in this post we have seen one variation. Below images show as the other recommended variations of tf and idf, taken from wiki.

Similarity Measures: cosine similarity

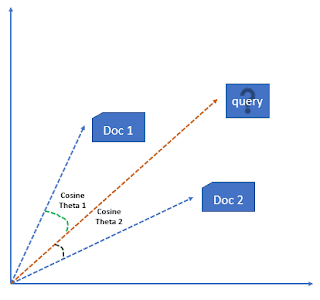

Mathematically, closeness between two vectors is calculated by calculating the cosine angle between two vectors. In similar lines, we can calculate cosine angle between each document vector and the query vector to find its closeness. To find relevant document to the query term , we may calculate the similarity score between each document vector and the query term vector by applying cosine similarity . Finally, whichever documents having high similarity scores will be considered as relevant documents to the query term.

When we plot the term document matrix, each document vector represents a point in the vector space. In the below example query, Document 1 and Document 2 represent 3 points in the vector space. We can now compare the query with each of the document by calculating the cosine angle between them.

Apart from cosine similarity, we have other variants for calculating the similarity scores and are shown below:

- Jaccard distance

- Kullback-Leibler divergence

- Euclidean distance

Now that we have learnt the important concepts required for implementing our problem statement, we now look at the data which will be used in this post and its implementation in R programming language.

Data description:

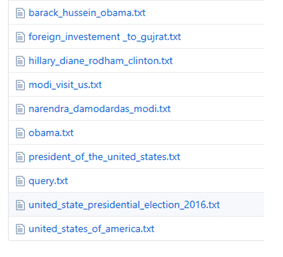

For this post, we use 9 text files containing news articles and a query file containing search queries. Our task is to find top-3 news articles relevant to each of the query in the queries files.

The dataset which we will be using is uploaded to GitHub and is located at below location:

https://github.com/sureshgorakala/machinelearning/tree/master/data ;

The news articles data is available in txt files as shown in below image:

Below is the snippet of news article in the first txt file baract_hussein_obama.txt,

“Barack Hussein Obama II (US Listeni/bəˈrɑːk huːˈseɪn oʊˈbɑːmə/;[1][2] born August 4, 1961) is the 44th and current President of the United States. He is the first African American to hold the office and the first president born outside the continental United States. Born in Honolulu, Hawaii, Obama is a graduate of Columbia University and Harvard Law School, where he was president of the Harvard Law Review. He was a community organizer in Chicago before earning his law degree. He worked as a civil rights attorney and taught constitutional law at the University of Chicago Law School between 1992 and 2004. While serving three terms representing the 13th District in the Illinois Senate from 1997 to 2004, he ran unsuccessfully in the Democratic primary for the United States House of Representatives in 2000 against incumbent Bobby Rush ….”

Below are the sample queries to which we will extract relevant documents, available in query.txt file, is shown below:

“largest world economy

barack obama

united state president

donald trump and united state

donald trump and barack obama

current President of the United States”

our task is to create a system in which for each of the query terms retrieve top-3 relevant documents.

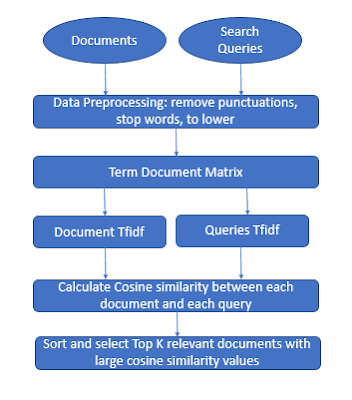

High level system design:

In this section we show the high-level design implementation. The implementation steps are as follows:

- Load documents and search queries into the R programming environment as list objects.

- Preprocess the data by creating a corpus object with all the documents and query terms, removing stop words, punctuations using tm package.

- Creating a term document matrix with tf-idf weight setting available in TermDocumentMatrix() method.

- Separate the term document matrix into two parts- one containing all the documents with term weights and other containing all the queries with term weights.

- Now calculate cosine similarity between each document and each query.

- For each query sort the cosine similarity scores for all the documents and take top-3 documents having high scores.

Full code implementation available here.