
Background and Origin of Service Mesh Network – Istio

In enterprise AI architectures, Kubernetes is a preferred choice for container-orchestration and for automating computer application deployment, scaling, and management.

Kubernetes with Istio cleanly separates traffic into two flows:

- The flow of business-related traffic through the data plane, and

- The flow of messages and interactions between Istio components that control mesh behavior (the control plane).

Istio is an open-source service mesh first created by Google, IBM, and Lyft as a collaborative effort.

A Service mesh is a shared set of names and identities that allows for common policy enforcement and telemetry collection, where Service names and workload principals remain unique. It is an abstraction for inter-connected services interacting with each other to reduce the complexity of managing connectivity, security, and observability of applications in a distributed environment.

Istio, as a “service mesh”, is intended to connect application components and thereby extend the capabilities of the Kubernetes cluster orchestrator for managing micro-services. It is widely known for simplifying enterprise deployment of micro-services by layering itself transparently onto existing distributed infrastructure and allowing developers to add, change, and route services within cloud-native applications.

In this blog, we describe the importance of Istio in the management of Enterprise-grade API Solutions. Istio’s traffic management and telemetry features help to deploy, serve, and monitor ML models in the cluster.

The detailed workings of Istio's components are outside the scope of this blog, but the links in the References section can be consulted for more information.

Motivation – Why Istio

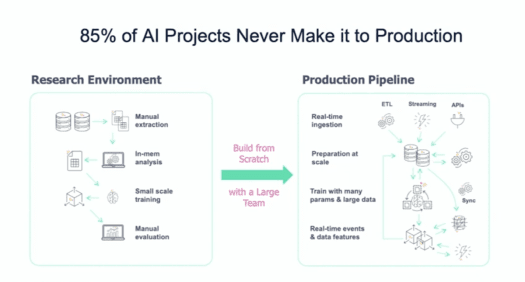

The real challenge with ML models arises when they are deployed in production with incoming live traffic. The situation becomes more critical when a host of micro-services needs to be managed, monitored, and updated as new ML models are published and their end-user API interfaces are released.

Istio is known for providing a complete solution with insights into, and operational control over, connected services within the “mesh”. Some of the core features of Istio include:

- Load balancing on HTTP, gRPC, TCP connections.

- Traffic management control with routing, retry, and failover capabilities.

- A monitoring infrastructure that includes metrics, tracing, and observability components.

- End to end TLS security – Securing communication by encrypting the data flows for communication between services as well as between services and end-users.

- Support for services that run as binary processes and are written in different languages.

- Managing timeouts and communication failures in distributed micro-services, when the services can lead to cascading failures.

- Updating simultaneous containers when microservices are triggered by multiple functions or by user actions.

- Service meshes help with modernization, allowing organizations to upgrade their services inventory without rewriting applications, adopting microservices or new languages, or moving to the cloud.

- API Gateway: Istio can be used to measure API usage even without Kubernetes applications up and running.

Architecture

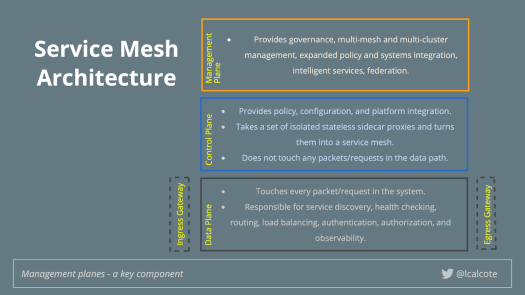

The architectural components of Istio are illustrated in the figures here, where three planes, namely the Data Plane, the Control Plane, and the Management Plane, provide policy-driven request routing along with configuration management of distributed clusters.

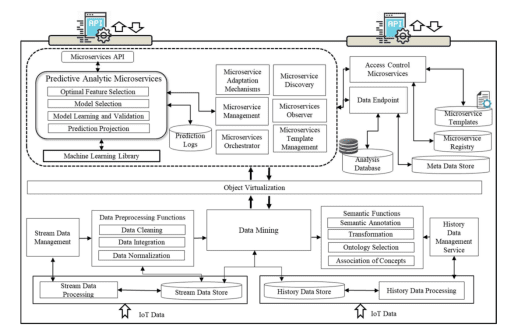

Enterprise AI Microservices

Any predictive system in an Enterprise AI system is built from a host of micro-services, spanning data pre-processing, modeling, and data post-processing. Such an architecture necessitates individual micro-service deployment and traffic routing, and that is where Istio sits on top of Kubernetes to provide those functionalities.

Istio Configuration and Deployment

Install the custom resource definitions (CRDs) from the downloaded Istio package directory:

kubectl apply -f install/kubernetes/helm/istio/templates/crds.yaml

Deploy the Istio operator resources to the cluster from the packaged “all-in-one” manifest:

kubectl apply -f install/kubernetes/istio-demo.yaml

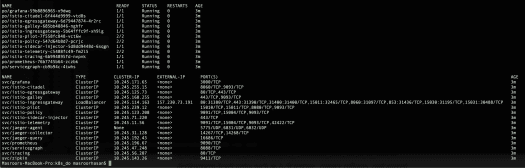

Once deployed, the Istio services should be listed in the istio-system namespace.

All pods should be in the Running state and the services should be available. The following command enables sidecar injection for the default namespace:

kubectl label namespace default istio-injection=enabled --overwrite

Istio Architecture and Traffic Management

Istio provides simple rules and traffic routing configurations to set up service-level properties such as circuit breakers, timeouts, and retries, as well as deployment-level tasks such as A/B testing, canary rollouts, and staged rollouts.
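As a minimal sketch of the service-level properties mentioned above, the following manifests set a timeout and retries on a VirtualService and a circuit breaker on a DestinationRule. The service name `algorithm-selector`, the resource names, and every numeric value are illustrative assumptions rather than settings taken from this deployment. The `networking.istio.io/v1alpha3` API version is assumed to match the Istio release line used in this post; newer releases may prefer a later API version.

```yaml
# Hypothetical example: names and numeric values are assumptions.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: algorithm-selector-resilience
spec:
  hosts:
  - algorithm-selector          # assumed Kubernetes service name
  http:
  - route:
    - destination:
        host: algorithm-selector
    timeout: 10s                # overall budget for the request, retries included
    retries:
      attempts: 3               # retry a failed request up to 3 times
      perTryTimeout: 2s         # each attempt gets its own 2-second budget
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: algorithm-selector-circuit-breaker
spec:
  host: algorithm-selector
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 100           # cap concurrent TCP connections
      http:
        http1MaxPendingRequests: 10   # cap queued HTTP/1.1 requests
    outlierDetection:
      consecutiveErrors: 5            # newer releases name this consecutive5xxErrors
      interval: 10s                   # how often hosts are checked
      baseEjectionTime: 30s           # minimum time an unhealthy host stays ejected
```

The retry and timeout settings live on the VirtualService because they describe how a request is routed, while the circuit breaker lives on the DestinationRule because it describes how the destination should be treated after routing.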

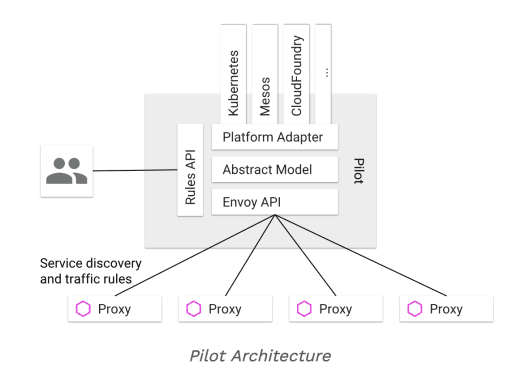

The most important components of Istio traffic management are Pilot and Envoy. Pilot is the central operator that manages service discovery and intelligent traffic routing between all services. It converts high-level routing rules into Envoy-specific configuration and propagates them to the relevant Envoy sidecar proxies.

The Istio data plane is composed of Envoy sidecar proxies running in the same network space as each service to control all network communication between services, as well as Mixer, to provide extensible policy evaluation between services.

The Istio control plane is responsible for the APIs used to configure the proxies and Mixer as part of the data plane. The key components of the control plane are Pilot, Citadel, Mixer, and Galley.

The figures below illustrate a typical Istio architecture, depicting the Istio components used to implement the service mesh reference architecture.

Envoy is a high-performance proxy, deployed as a sidecar container in every Kubernetes pod within the mesh, that controls all inbound and outbound traffic for services in the mesh. Some built-in features of Envoy include:

- Service discovery

- Load balancing

- HTTP and gRPC proxies

- Staged rollouts with %-based traffic split

- Rich metrics

- TLS termination, circuit breakers, fault injection, and many more!

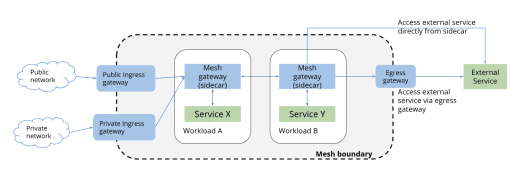

Istio Egress and Ingress

Istio de-couples traffic management from infrastructure with easy rules configuration to manage and control the flow of traffic between services.

For traffic to flow in and out of our “mesh”, the following Istio configuration resources must be set up properly:

- Gateway: Load balancer operating at the edge of the “mesh”, handling incoming or outgoing HTTP/TCP connections.

- VirtualService: Configuration to manage traffic routing between Kubernetes services within the “mesh”.

- DestinationRule: Policy definitions applied to traffic intended for a service after routing.

- ServiceEntry: Additional entry in Istio's internal service registry; can be specified for internal or external endpoints.

Configure Egress

By default, Istio-enabled applications are unable to access URLs outside the cluster. Since we will use an S3 bucket to host our ML models, we need to set up a ServiceEntry to allow outbound traffic from our TensorFlow Serving Deployment to the S3 endpoint.

Create the ServiceEntry rule to allow outbound traffic to S3 endpoint on defined ports:

kubectl apply -f algorithm-selector_egress.yaml

serviceentry "featurestore-s3" created

(The metadata is as specified in the YAML file.)
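The contents of `algorithm-selector_egress.yaml` are not shown in this post, so the following is only a sketch of what such a ServiceEntry might look like. The resource name `featurestore-s3` comes from the command output above. The host `s3.amazonaws.com` and port 443 are assumptions and should be replaced with the endpoint and port of your own bucket.

```yaml
# Hypothetical sketch of algorithm-selector_egress.yaml; host and port are assumptions.
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: featurestore-s3           # name reported by kubectl in the output above
spec:
  hosts:
  - s3.amazonaws.com              # assumption: use your bucket's regional S3 endpoint
  location: MESH_EXTERNAL         # the endpoint lives outside the mesh
  ports:
  - number: 443
    name: https
    protocol: HTTPS
  resolution: DNS                 # resolve the host through DNS at request time
```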

Configure Ingress

To allow incoming traffic into our “mesh”, we need to set up an ingress Gateway. Our Gateway will act as a load-balancing proxy, exposing port 31400 to receive traffic.

Use the default Istio controller by specifying the label selector istio=ingressgateway, so that the ingress gateway Pod is the one that receives this gateway configuration and ultimately exposes the port.

Create the Gateway resource:

kubectl apply -f algorithm-selector_gateway.yaml

gateway "algorithm-selector-gateway" created
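A Gateway matching the description above could be sketched as follows. The resource name, the `istio: ingressgateway` selector, and port 31400 come from the text. The TCP protocol, the port name, and the wildcard host are assumptions.

```yaml
# Hypothetical sketch of algorithm-selector_gateway.yaml.
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: algorithm-selector-gateway
spec:
  selector:
    istio: ingressgateway         # bind to the default Istio ingress gateway pod
  servers:
  - port:
      number: 31400               # port exposed to receive incoming traffic
      name: tcp                   # assumed port name
      protocol: TCP               # assumption: plain TCP rather than HTTP
    hosts:
    - "*"                         # assumption: accept traffic for any host
```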

Canary Routing

With a new model deployment, Istio makes it easy to roll the model out gradually to a subset of users. This is done by updating our VirtualService to route a small percentage of traffic to the v2 subset. Here, the VirtualService is updated to route 30% of incoming requests to the v2 model deployment:

kubectl replace -f algo-selector_v2_canary.yaml

This replaces the existing virtualservice and destinationrule for the named micro-service.
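The 70/30 split described above can be sketched with a weighted VirtualService and a DestinationRule that defines the two subsets. This is an illustration, not the actual content of `algo-selector_v2_canary.yaml`: the service name `algorithm-selector`, the service port 9000, and the `version` pod labels are all assumptions.

```yaml
# Hypothetical canary configuration; service name, port, and labels are assumptions.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: algorithm-selector
spec:
  hosts:
  - "*"
  gateways:
  - algorithm-selector-gateway
  tcp:
  - match:
    - port: 31400                 # traffic arriving on the gateway port
    route:
    - destination:
        host: algorithm-selector
        port:
          number: 9000            # assumed service port
        subset: v1
      weight: 70                  # 70% of requests stay on the current model
    - destination:
        host: algorithm-selector
        port:
          number: 9000
        subset: v2
      weight: 30                  # 30% of requests go to the canary model
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: algorithm-selector
spec:
  host: algorithm-selector
  subsets:
  - name: v1
    labels:
      version: v1                 # assumed pod label on the v1 deployment
  - name: v2
    labels:
      version: v2                 # assumed pod label on the v2 deployment
```

The weights across the routes of a rule must add up to 100, so raising the canary share means lowering the v1 share by the same amount.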

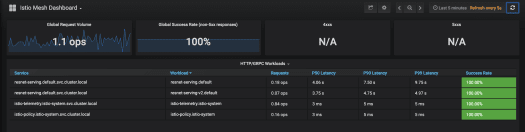

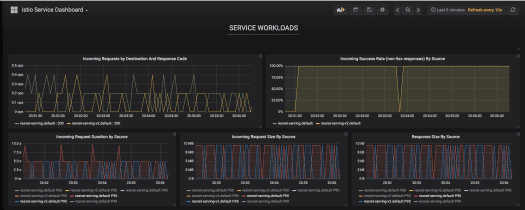

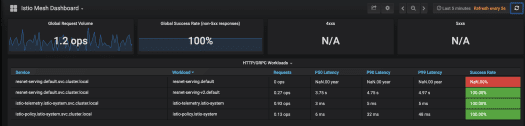

Istio also provides dashboards. Under a load test, the Istio Mesh dashboard shows latency metrics across the two versions of the algo-selector-serving workloads.

The requests by destination show a similar pattern, with traffic split between the algo-selector "microservice-v1" and "microservice-v2" deployments.

V2 Routing

Once the canary version satisfies the model behavior and performance thresholds, the deployment can be promoted to general availability (GA) for all users. The following VirtualService and DestinationRule are configured to route 100% of traffic to v2 of our model deployment.

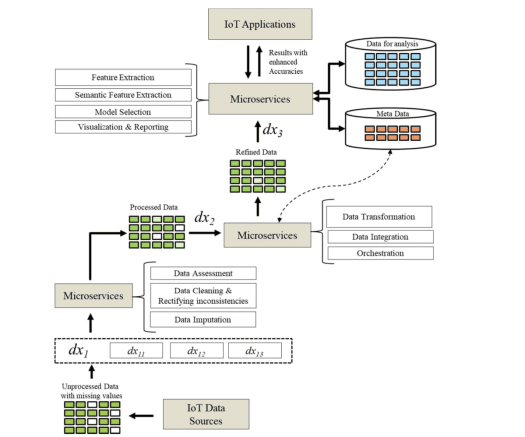
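As a sketch of the promotion step, a VirtualService that sends all traffic to v2 needs only a single route. The service name `algorithm-selector`, the service port 9000, and the gateway binding are assumptions carried over for illustration, and the `v2` subset is assumed to be defined in a DestinationRule for the same host.

```yaml
# Hypothetical promotion of v2 to all users; names and port are assumptions.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: algorithm-selector
spec:
  hosts:
  - "*"
  gateways:
  - algorithm-selector-gateway
  tcp:
  - match:
    - port: 31400
    route:
    - destination:
        host: algorithm-selector
        port:
          number: 9000            # assumed service port
        subset: v2                # subset assumed to exist in the DestinationRule
      weight: 100                 # all requests now reach the v2 model
```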

IoT Enterprise AI Solution

The following architecture demonstrates an IoT-based distributed AI solution with a host of micro-services, where Istio plays a central role for the following reasons:

- Rolling out new micro-services that facilitate installation or removal of IoT devices and applications.

- Updating micro-services due to servicing, rollout of new features, or IoT device recalibration.

- Ensuring privacy and confidentiality of IoT data.

Automating Canary Releases

Istio's traffic routing configuration allows us to:

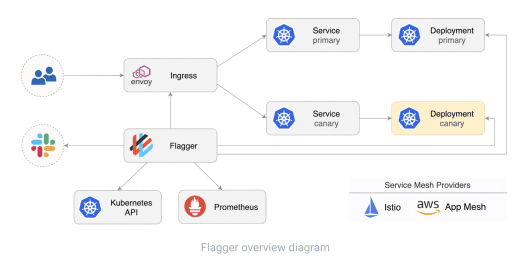

- Perform canary releases by programmatically adjusting the relative weighting of traffic between downstream service versions. Such automatic canary deployments are possible using Flagger, an open-source progressive canary deployment tool by Weaveworks.

- Automate iterative deployment and promotion with Flagger (a Kubernetes operator), which drives the Istio and App Mesh traffic routing features based on custom Prometheus metrics.
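To give a feel for how Flagger expresses such an automated rollout, here is a minimal sketch of a Flagger `Canary` resource. It is not taken from this deployment: the target Deployment name, namespace, port, and all analysis thresholds are assumptions, and the `flagger.app/v1beta1` API version should be checked against the Flagger release in use.

```yaml
# Hypothetical Flagger Canary; names, port, and thresholds are assumptions.
apiVersion: flagger.app/v1beta1
kind: Canary
metadata:
  name: algorithm-selector
  namespace: algorithm-selector
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: algorithm-selector      # assumed Deployment to roll out progressively
  service:
    port: 9000                    # assumed container port
  analysis:
    interval: 1m                  # how often the canary is evaluated
    threshold: 5                  # failed checks tolerated before rollback
    maxWeight: 50                 # upper bound on canary traffic percentage
    stepWeight: 10                # traffic percentage added at each step
    metrics:
    - name: request-success-rate  # built-in Flagger metric backed by Prometheus
      thresholdRange:
        min: 99                   # require at least 99% successful requests
      interval: 1m
```

With a configuration of this shape, the canary's traffic share grows in steps while the metric checks pass, and the rollout is reverted once the failure threshold is reached.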

Istio Addons

Istio add-ons integrate service-level logs with the same backend monitoring systems that are typically used for cluster-level logging (e.g., Fluentd, Elasticsearch, Kibana). Istio uses Prometheus, a metrics collection and alerting utility that is also used across cloud vendor platforms such as Azure, AWS, and GCP.

Addition of services to Mesh

The following commands create the algorithm-selector namespace and enable automatic sidecar injection by adding the istio-injection label to it. These steps identify the namespace that will be used to add services from the algorithm-selector application into the mesh:

Create the algorithm-selector namespace

$ kubectl create namespace algorithm-selector

Enable the algorithm-selector namespace for Istio automatic sidecar injection

$ kubectl label namespace algorithm-selector istio-injection=enabled
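The two commands above can also be expressed declaratively. As a minimal sketch, a Namespace manifest carrying the same label could be applied with `kubectl apply -f`; the file name you store it under is up to you.

```yaml
# Declarative equivalent of the create and label commands above.
apiVersion: v1
kind: Namespace
metadata:
  name: algorithm-selector
  labels:
    istio-injection: enabled      # tells Istio to inject sidecars into new pods
```

Sidecars are injected when pods are created, so pods that were already running in the namespace before the label was added need to be restarted to join the mesh.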

Validate that the namespace is labeled with istio-injection

$ kubectl get namespace -L istio-injection

Istio makes it even easier to add services to the mesh by enabling automatic sidecar injection per Kubernetes namespace. A distinguishing feature of Istio is the ability to control which services are added to the mesh, which enables incremental adoption.

New AI/ML algorithm-based SaaS services, feature stores, or cloud-based services for caching or databases can be rolled out to sit between existing micro-services. The flexibility Istio offers in launching these services on existing infrastructure gives it an important role in Enterprise AI.

Conclusion

Service meshes help in the modernization of cloud service management, deployment, and monitoring. In addition, they can provide a facade to facilitate evolutionary architecture.

References

- https://www.oreilly.com/library/view/istio-up-and/9781492043775/ch0…

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6308717/

- https://www.ibm.com/downloads/cas/XWN1WV9Q

- https://medium.com/better-programming/how-istio-works-behind-the-sc…

- https://towardsdatascience.com/deploy-tensorflow-models-with-istio-…

- https://istio.io/latest/blog/2018/v1alpha3-routing/