Experiments

In his book, The Master Algorithm, Pedro Domingos imagines the following experiments:

Take a building, extremely well–built for two purposes: Nothing can enter and most importantly nothing can leave. The former is to assure the safety of any human from the danger inside, the latter is to assure the safety of everyone on the planet from what is inside. Inside the building, one unlucky fellow finds a 3D printer and the robots it manufactures.

The design and algorithms that define and govern each robot are subject to both “mutation” and “mating”. And each created robot has one purpose – to eradicate every other robot. Such a strategy, through selection of the fittest, could create specialized killer robots and serve any army.

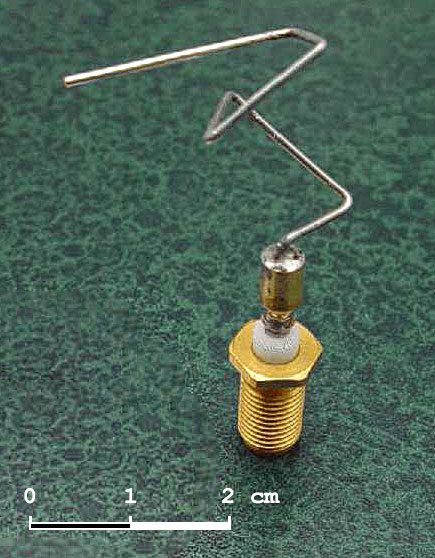

This is an example of an application of genetic algorithms, a family of potent artificial intelligence algorithms. Genetic algorithms have already been used in real life. For example, NASA designed antennas for satellites with a genetic algorithm.

This method created antennas whose shape had never before been seen and that were specific to each satellite orbit.

But why mimic natural selection in AI? If you think about it, and if you don’t listen to creationists, natural selection has already created intelligence several times over life history. The human brain is indeed a product of the selection of the fittest.

The ability of any living thing to use a central nervous system to process signals from its environment evolved one generation after the other.

So it could make sense trying to channel the powerful force of evolution and selection in order to evolve AI algorithms once more.

How novelty is generated in life?

For any living thing, novelty can occur through mutation. The genetic code of any living organism (excluding viruses from the living, whose genome can be made of RNA) is made of DNA. The DNA is just a polymer made up of 4 constituents, called nucleobases and denoted by the four letters ATGC (Adenine, Thyime, Guanine, and Cytosine).

Each three consecutive letters are called a codon and can be interpreted by the cell machinery as part of a protein, called an amino acid. It is the interpretation of the codon unit of DNA that directs protein synthesis and is responsible for protein shape and function.

From time to time, through chemical stress or copy error, a nucleobase can be inverted by another (a mutation), which can change the interpretation of a codon, hence altering the properties of a given protein. Through many generations and after subsequent selection, novelties are introduced in every species.

Sexual species can also introduce novelties through the mating process. Basically, two mates are sampling half of their DNA to create a third individual. The DNA polymer of the offspring can be reasserted randomly during the mating process, which can create a new mutation, hence creating new proteins. For example, in humans, each non-sexual chromosome is present in two copies. It is possible for two chromosomes of the same type to exchange part of their DNA. This can create a totally new variation of a given gene. This process is called a cross-over.

Also, through copy errors, some genes can be duplicated or removed, which can create innovation. Then after mutation, two proteins with the same ancestor can differ and display different properties.

How to mimic evolution inside a computer

In a way, one can look at a genetic algorithm simply as an optimization technique. Let’s say you want to minimize a function f. At first, you would randomly create several “individuals”, say a hundred. Each individual would receive random attributes, which are inputs of f. Then, individuals would be evaluated through f, and you would only retain the 20 best combinations of inputs.

For each remaining survivor, some of the inputs could vary by a little amount: You need to define the probability for it to happen and the magnitude of the change. This mimics the biological process of mutation.

Now you can also imagine a mating strategy. Take two individuals and make them exchange some attributes. If an attribute is a list, you could break the list at a point for both individuals and each would exchange a part of the list. This can be seen as the cross-over of living things. This will produce the offspring of the previous generation.

By repeating the process of mutating, mating, and of cross-over for several generations, it’s possible to learn to minimize f.

Generic algorithms for hyper-parameters selection

Genetic algorithms can be used for a variety of machine learning task and it has recently gained traction for the machine-learning hyper-parameters search. There is a python library dedicated to this use: TPOT.

It’s usage is rather simple:

from tpot import TPOTClassifier

from sklearn.datasets import load_digits

from sklearn.model_selection import train_test_split

digits = load_digits()

X_train, X_test, y_train, y_test = train_test_split(digits.data, digits.target,

train_size=0.75, test_size=0.25)

tpot = TPOTClassifier(generations=5, population_size=20, verbosity=2)

tpot.fit(X_train, y_train)

print(tpot.score(X_test, y_test))

tpot.export(‘tpot_mnist_pipeline.py’)

Conclusion

Machine learning algorithm is often inspired by natural processes. Artificial Neural Networks are inspired by the neural organization of the visual cortex in humans. Genetic algorithms is about channeling evolution for optimization purposes. Here is described how it is possible through mutations, mating and cross-over processes to learn a function.

Genetic algorithms have recently been used for hyper-parameters search, as a replacement of gradient descent for very large networks, as a way to find new neural architectures and many more machine learning tasks.