Resume making is very tricky. A candidate has many dilemmas,

- whether to state a project at length or just mention the bare minimum

- whether to mention many skills or just mention his/her core competency skill

- whether to mention many programming languages or just cite a few

- whether to restrict the resume to 2 pages or 1 page

These dilemmas are equally hard for Data Scientists looking for a change or even for aspiring Data Scientist.

Now before you wonder where this article is heading, let me give you the reason of writing this article.

The Context

A friend of mine has his own Data Science consultancy. He recently bagged a good project which required him to hire 2 Data Scientist. He had put a job posting on LinkedIn and to his surprise he received close to 200 resumes. When I met him in person he remarked, “if only there was a way to select the best resumes out of this lot in a faster manner than manually going through all resume one by one”.

I had been working on few NLP projects both as part of work and as a hobby for past 2 years. I decided to take a shot at my friend’s problem. I told my friend perhaps we can solve this problem or at least bring down the time of manual scanning through some NLP techniques.

The Exact Requirement

My friend wanted a person with Deep Learning as his/her core competency along with other Machine Learning algorithms know how. The other candidate was required to have more of Big Data or Data Engineering skill set like experience in Scala, AWS, Dockers, Kubernetes etc.

The Approach

Once I understood what my friend was ideally looking for in a candidate, I devised an approach on how to solve this. Here is what I had listed down as an approach

- Have a dictionary or table which has all the various skill sets categorized i.e. if there are words like keras, tensorflow, CNN, RNN then put them under one column titled ‘Deep Learning’.

- Have an NLP algorithm that parses the whole resume and basically search for the words mentioned in the dictionary or table

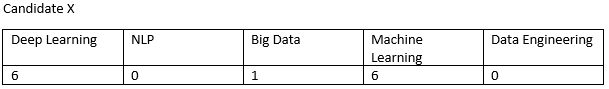

- The next step is to count the occurrence of the words under various category i.e. something like below for every candidate

The above candidate would be a good match for the ‘Deep Learning Data Scientist’ that my friend is looking for.

- Represent the above information in a visual way so that it becomes easy for us to choose the candidate

The Research

Now that I had finalized on what my approach was going to be, the next big hurdle was how to accomplish what I had just stated.

NLP Part — Spacy

I was on a look out for a library that kind of does ‘phrase/word matching’. My search requirement was satisfied by Spacy. Spacy has a feature called ‘Phrase Matcher’. You can read more about it here.

Reading the Resume

There are many off the shelf packages which help in reading the resume. Luckily all the resume that my friend had got was of the PDF format. So, I decided to explore PDF packages like PDFminer or PYPDF. I chose PYPDF.

Language: Python

Data Visualization: Matplotlib

The Code & Explanation

The complete Code

Here is the full python code.

#Resume Phrase Matcher code

#importing all required libraries

import PyPDF2

import os

from os import listdir

from os.path import isfile, join

from io import StringIO

import pandas as pd

from collections import Counter

import en_core_web_sm

nlp = en_core_web_sm.load()

from spacy.matcher import PhraseMatcher

#Function to read resumes from the folder one by one

mypath=’D:/NLP_Resume/Candidate Resume’ #enter your path here where you saved the resumes

onlyfiles = [os.path.join(mypath, f) for f in os.listdir(mypath) if os.path.isfile(os.path.join(mypath, f))]

def pdfextract(file):

fileReader = PyPDF2.PdfFileReader(open(file,’rb’))

countpage = fileReader.getNumPages()

count = 0

text = []

while count < countpage:

pageObj = fileReader.getPage(count)

count +=1

t = pageObj.extractText()

print (t)

text.append(t)

return text

#function to read resume ends

#function that does phrase matching and builds a candidate profile

def create_profile(file):

text = pdfextract(file)

text = str(text)

text = text.replace(“\\n”, “”)

text = text.lower()

#below is the csv where we have all the keywords, you can customize your own

keyword_dict = pd.read_csv(‘D:/NLP_Resume/resume/template_new.csv’)

stats_words = [nlp(text) for text in keyword_dict[‘Statistics’].dropna(axis = 0)]

NLP_words = [nlp(text) for text in keyword_dict[‘NLP’].dropna(axis = 0)]

ML_words = [nlp(text) for text in keyword_dict[‘Machine Learning’].dropna(axis = 0)]

DL_words = [nlp(text) for text in keyword_dict[‘Deep Learning’].dropna(axis = 0)]

R_words = [nlp(text) for text in keyword_dict[‘R Language’].dropna(axis = 0)]

python_words = [nlp(text) for text in keyword_dict[‘Python Language’].dropna(axis = 0)]

Data_Engineering_words = [nlp(text) for text in keyword_dict[‘Data Engineering’].dropna(axis = 0)]

matcher = PhraseMatcher(nlp.vocab)

matcher.add(‘Stats’, None, *stats_words)

matcher.add(‘NLP’, None, *NLP_words)

matcher.add(‘ML’, None, *ML_words)

matcher.add(‘DL’, None, *DL_words)

matcher.add(‘R’, None, *R_words)

matcher.add(‘Python’, None, *python_words)

matcher.add(‘DE’, None, *Data_Engineering_words)

doc = nlp(text)

d = []

matches = matcher(doc)

for match_id, start, end in matches:

rule_id = nlp.vocab.strings[match_id] # get the unicode ID, i.e. ‘COLOR’

span = doc[start : end] # get the matched slice of the doc

d.append((rule_id, span.text))

keywords = “\n”.join(f'{i[0]} {i[1]} ({j})’ for i,j in Counter(d).items())

## convertimg string of keywords to dataframe

df = pd.read_csv(StringIO(keywords),names = [‘Keywords_List’])

df1 = pd.DataFrame(df.Keywords_List.str.split(‘ ‘,1).tolist(),columns = [‘Subject’,’Keyword’])

df2 = pd.DataFrame(df1.Keyword.str.split(‘(‘,1).tolist(),columns = [‘Keyword’, ‘Count’])

df3 = pd.concat([df1[‘Subject’],df2[‘Keyword’], df2[‘Count’]], axis =1)

df3[‘Count’] = df3[‘Count’].apply(lambda x: x.rstrip(“)”))

base = os.path.basename(file)

filename = os.path.splitext(base)[0]

name = filename.split(‘_’)

name2 = name[0]

name2 = name2.lower()

## converting str to dataframe

name3 = pd.read_csv(StringIO(name2),names = [‘Candidate Name’])

dataf = pd.concat([name3[‘Candidate Name’], df3[‘Subject’], df3[‘Keyword’], df3[‘Count’]], axis = 1)

dataf[‘Candidate Name’].fillna(dataf[‘Candidate Name’].iloc[0], inplace = True)

return(dataf)

#function ends

#code to execute/call the above functions

final_database=pd.DataFrame()

i = 0

while i < len(onlyfiles):

file = onlyfiles[i]

dat = create_profile(file)

final_database = final_database.append(dat)

i +=1

print(final_database)

#code to count words under each category and visulaize it through Matplotlib

final_database2 = final_database[‘Keyword’].groupby([final_database[‘Candidate Name’], final_database[‘Subject’]]).count().unstack()

final_database2.reset_index(inplace = True)

final_database2.fillna(0,inplace=True)

new_data = final_database2.iloc[:,1:]

new_data.index = final_database2[‘Candidate Name’]

#execute the below line if you want to see the candidate profile in a csv format

#sample2=new_data.to_csv(‘sample.csv’)

import matplotlib.pyplot as plt

plt.rcParams.update({‘font.size’: 10})

ax = new_data.plot.barh(title=”Resume keywords by category”, legend=False, figsize=(25,7), stacked=True)

labels = []

for j in new_data.columns:

for i in new_data.index:

label = str(j)+”: ” + str(new_data.loc[i][j])

labels.append(label)

patches = ax.patches

for label, rect in zip(labels, patches):

width = rect.get_width()

if width > 0:

x = rect.get_x()

y = rect.get_y()

height = rect.get_height()

ax.text(x + width/2., y + height/2., label, ha=’center’, va=’center’)

plt.show()

Now that we have the whole code, I would like emphasize on two things

The Keywords csv

The keywords csv is referred in the code as ‘template_new.csv’

You can replace it with a DB of your choice (and make required changes in the code) but for simplicity I chose the good ol excel sheet (csv).

The words under each category can be bespoke, here is the list of words that I used to do the phrase matching against the resumes.

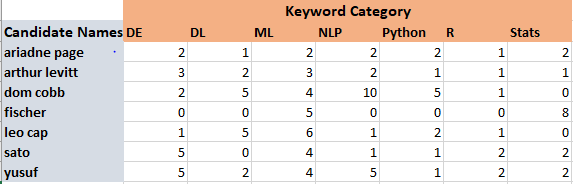

The Candidate — Keywords table

sample2=new_data.to_csv('sample.csv')

The execution of the above line of code produces a csv file, this csv file shows the candidates’ keyword category counts (the real names of the candidates have been masked) Here is how it looks.

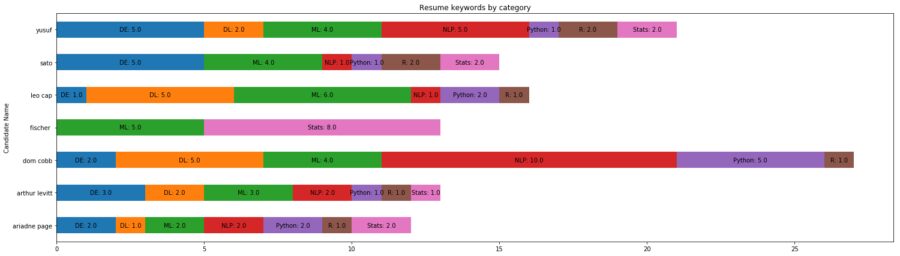

This may not be intuitive hence I have resorted to the data visualization through Matplotlib as depicted below

Here DE stands for Data Engineering, the others are self explanatory

Was the whole exercise beneficial?

My friend was really surprised with results achieved and it saved him a lot of time. Not to mention he did shortlist around 15 resumes out of nearly 200 resumes just by running the code.

Here is how the whole exercise was useful

Automatic reading of resume

Instead of manually opening each and every resume, the code automatically opens the resumes and parses the content. If this were to be done manually it would take a lot of time.

Phrase matching and categorization

If we were to manually read all the resume, it would be very difficult to say whether a person has expertise in Machine learning or Data engineering because we are not keeping a count of the phrases while reading. The code on the other hand just hunts for the keywords ,keeps a tab on the occurrence and the categorizes them.

The Data Visualization

The Data Visualization is a very important aspect here. It speeds up the decision making process in the following ways

- We get to know which candidate has more keywords under a particular category, there by letting us infer that he/she might have extensive experience in that category or he/she could be a generalist.

- We can do a relative comparison of candidates with respect to each other, there by helping us filter out the candidates that don’t meet our requirement.

How you can use the code

Data Scientist seeking job change / Aspiring Data Scientist:

Chances are that many companies are already using codes like above to do initial screening of candidates. It is hence advisable to tailor make your resume for the particular job requirement with the required keywords.

A typical Data Scientist has two options either position himself/herself as a generalist or come across as an expert in one area say ‘NLP’. Based on the job requirement, a Data Scientist can run this code against his/her resume and get to know which keywords are appearing more and whether he/she looks like a ‘Generalist’ or ‘Expert’. Based on the output you can further tweak your resume to position yourself accordingly.

Recruiters

If you are a recruiter like my friend and are deluged with resumes then you can run this code to screen candidates.

Hope you liked the article. Stay tuned for more article.

You can reach out to me on Linkedin